Tianyang Pan

Task and Motion Planning for Execution in the Real

Jun 05, 2024

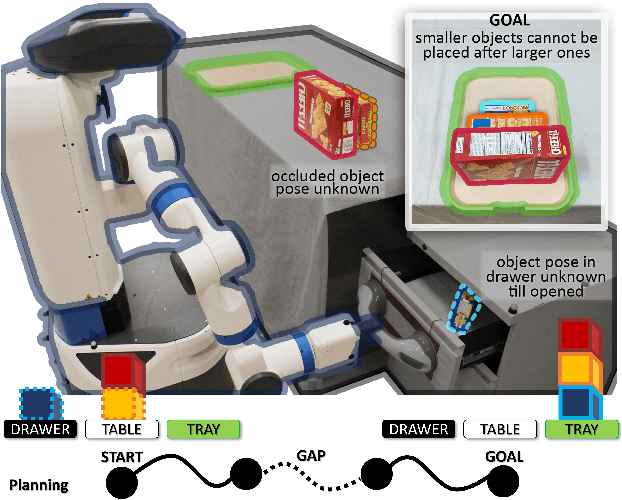

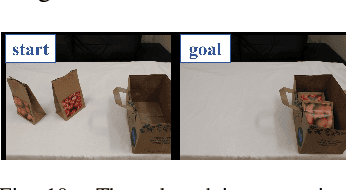

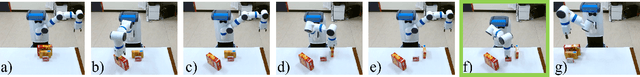

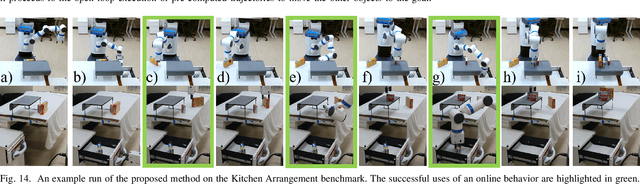

Abstract: Task and motion planning represents a powerful set of hybrid planning methods that combine reasoning over discrete task domains with continuous motion generation. Traditional reasoning requires task domain models and enough information to ground actions to motion planning queries. Gaps in this knowledge often arise from sources such as occlusion or imprecise modeling. This work generates task and motion plans that include actions that cannot be fully grounded at planning time. During execution, such an action is handled by a provided human-designed or learned closed-loop behavior. Execution combines offline planned motions and online behaviors until the task goal is reached. Failures of behaviors are fed back as constraints to find new plans. Forty real-robot trials and motivating demonstrations are performed to evaluate the proposed framework and compare it against the state of the art. Results show faster execution time, fewer actions, and more successes in problems where diverse gaps arise. The experiment data is shared so that researchers can simulate these settings. The work shows promise in expanding the applicable class of realistic partially grounded problems that robots can address.

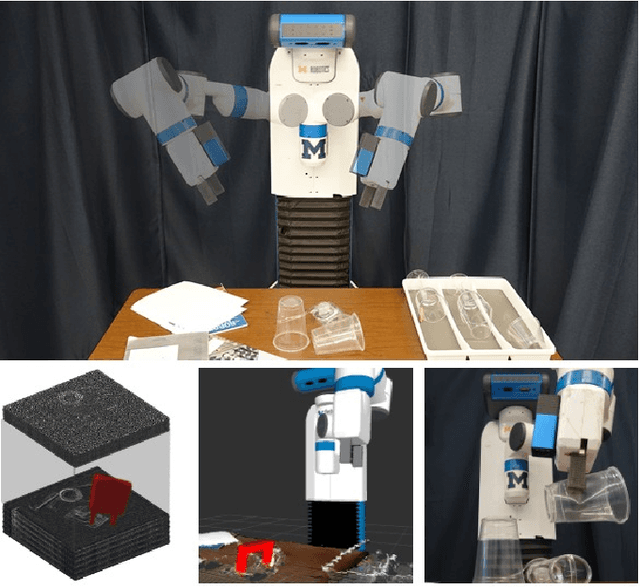

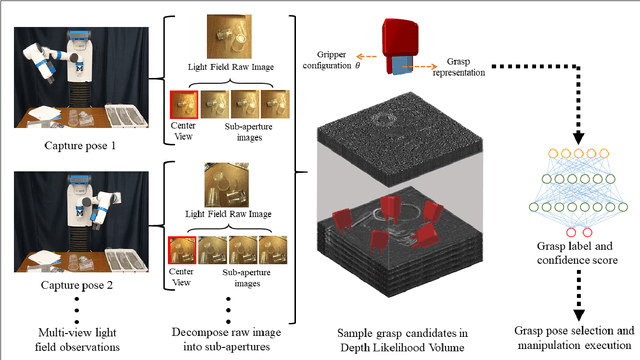

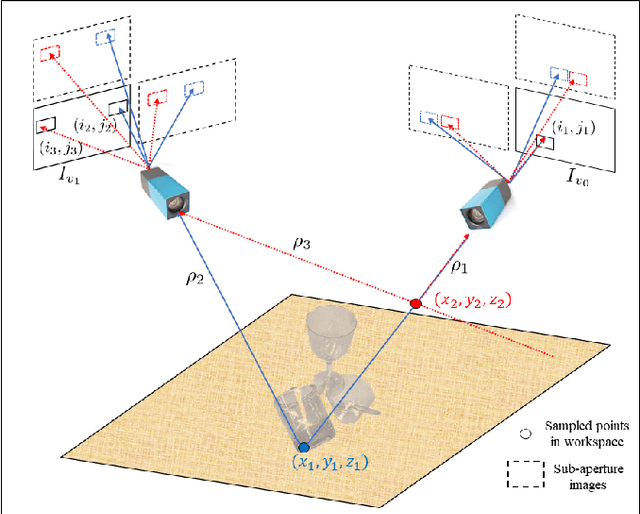

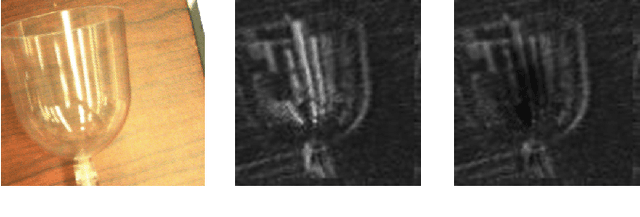

GlassLoc: Plenoptic Grasp Pose Detection in Transparent Clutter

Sep 17, 2019

Abstract: Transparent objects are prevalent across many environments of interest for dexterous robotic manipulation. Such transparent material leads to considerable uncertainty for robot perception and manipulation, and remains an open challenge for robotics. This problem is exacerbated when multiple transparent objects cluster into piles of clutter. In household environments, for example, it is common to encounter piles of glassware in kitchens, dining rooms, and reception areas, which are essentially invisible to modern robots. We present the GlassLoc algorithm for grasp pose detection of transparent objects in transparent clutter using plenoptic sensing. GlassLoc classifies graspable locations in space informed by a Depth Likelihood Volume (DLV) descriptor. We extend the DLV to infer the occupancy of transparent objects over a given space from multiple plenoptic viewpoints. We demonstrate and evaluate the GlassLoc algorithm on a Michigan Progress Fetch mounted with a first-generation Lytro. The effectiveness of our algorithm is evaluated through experiments for grasp detection and execution with a variety of transparent glassware in minor clutter.
