Tianjian Zhang

Joint DOA estimation and distorted sensor detection under entangled low-rank and row-sparse constraints

Dec 21, 2023

Abstract: The problem of joint direction-of-arrival (DOA) estimation and distorted sensor detection has received considerable attention in recent decades. Most state-of-the-art work formulates this problem as a low-rank and row-sparse decomposition in which the low-rank and row-sparse components are treated in isolation, which results in a performance loss. In contrast, in this paper we entangle the low-rank and row-sparse components by exploring their inherent connection. Furthermore, we take into account the maximal distortion level of the sensors. An alternating optimization scheme is proposed to solve for the low-rank and sparse components, where a closed-form solution is derived for the low-rank component and a quadratic program is developed for the sparse component. Numerical results demonstrate the effectiveness and superiority of the proposed method.
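The abstract does not spell out the sparse-component update, so the following is only a minimal sketch of a standard building block in row-sparse models, not the authors' quadratic program. It shows the proximal operator of a row-wise (ℓ2,1) penalty combined with a cap on each row's norm. The weight `lam` is a hypothetical regularization parameter, and `eta` is a hypothetical stand-in for the maximal distortion level. Rows that survive the shrinkage are flagged as candidate distorted sensors.

```python
import math

def row_sparse_prox(V, lam, eta):
    """Prox of lam * sum_i ||v_i||_2 subject to ||v_i||_2 <= eta (sketch, not the paper's QP).

    V   : list of rows, one per sensor; entries may be complex.
    lam : hypothetical shrinkage weight of the row-wise l2,1 penalty.
    eta : hypothetical maximal distortion level, used as a cap on each row's l2 norm.
    """
    out = []
    for row in V:
        norm = math.sqrt(sum(abs(x) ** 2 for x in row))
        # Both terms depend only on the row norm, so shrinking the norm by lam
        # and then clipping it to [0, eta] gives the exact proximal step.
        new_norm = min(max(norm - lam, 0.0), eta)
        scale = new_norm / norm if norm > 0 else 0.0
        out.append([scale * x for x in row])
    return out

def flag_distorted(S, tol=1e-12):
    """Indices of rows (sensors) whose estimated distortion is nonzero."""
    return [i for i, row in enumerate(S)
            if math.sqrt(sum(abs(x) ** 2 for x in row)) > tol]
```

For example, with rows of norms about 0.11, 5 and 0.2, `lam=0.5` and `eta=2.0`, only the second row survives, and its norm is clipped from 4.5 down to 2.0.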

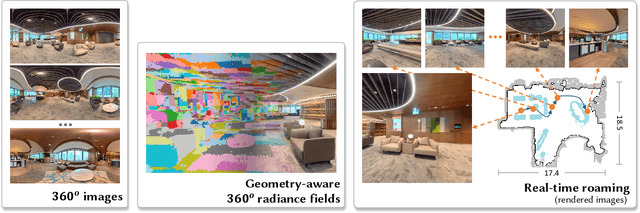

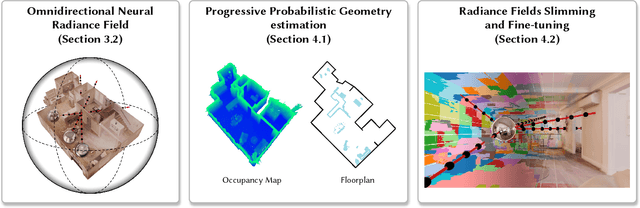

360Roam: Real-Time Indoor Roaming Using Geometry-Aware ${360^\circ}$ Radiance Fields

Aug 04, 2022

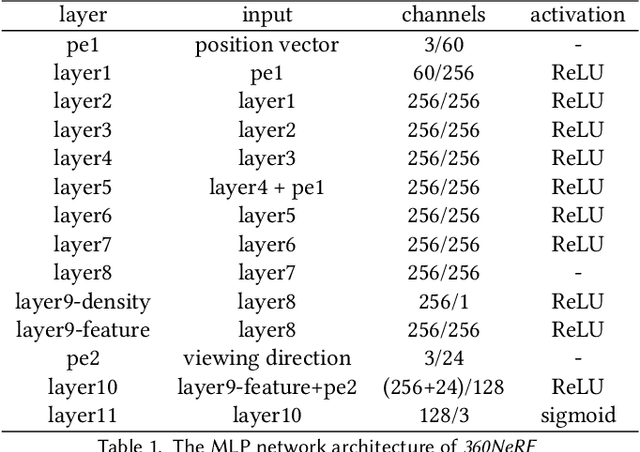

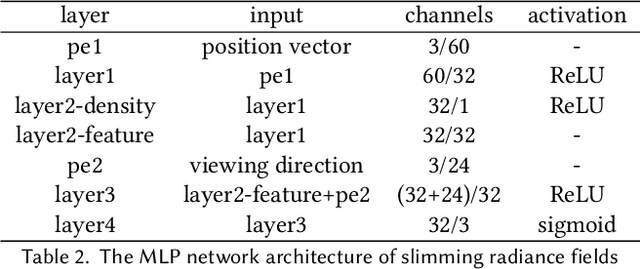

Abstract: Neural radiance fields (NeRF) have recently achieved impressive results in novel view synthesis. However, previous work on NeRF mainly focuses on object-centric scenarios. In this work, we propose 360Roam, a novel scene-level NeRF system that can synthesize images of large-scale indoor scenes in real time and support VR roaming. Our system first builds an omnidirectional neural radiance field, 360NeRF, from multiple input $360^\circ$ images. Using 360NeRF, we then progressively estimate a 3D probabilistic occupancy map that represents the scene geometry in the form of spatial density. Skipping empty space and upsampling occupied voxels allow us to accelerate volume rendering by using 360NeRF in a geometry-aware fashion. Furthermore, we use an adaptive divide-and-conquer strategy to slim and fine-tune the radiance fields for further improvement. The floorplan of the scene extracted from the occupancy map can provide guidance for ray sampling and facilitate a realistic roaming experience. To show the efficacy of our system, we collect a $360^\circ$ image dataset covering a large variety of scenes and conduct extensive experiments. Quantitative and qualitative comparisons with baselines demonstrate the superior performance of our system in novel view synthesis for complex indoor scenes.
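Geometry-aware sampling of the kind described above can be sketched roughly as follows. This is an illustrative assumption, not 360Roam's implementation. The occupancy map is modeled as a hypothetical dict from integer voxel indices to occupancy probabilities, and `tau` is an assumed threshold. Each coarse ray segment is tested at its midpoint. Empty segments are skipped, and occupied segments are upsampled with `n_fine` evenly spaced samples.

```python
import math

def sample_ray(origin, direction, occupancy, voxel_size,
               t_near, t_far, n_coarse, n_fine, tau=0.5):
    """Return ray depths t to query, skipping empty voxels and upsampling occupied ones.

    occupancy : hypothetical dict {(i, j, k): probability}; missing voxels count as empty.
    tau       : assumed occupancy threshold.
    """
    samples = []
    step = (t_far - t_near) / n_coarse
    for s in range(n_coarse):
        t0 = t_near + s * step
        t_mid = t0 + 0.5 * step
        # Point at the middle of this coarse segment, and the voxel containing it.
        point = [o + t_mid * d for o, d in zip(origin, direction)]
        voxel = tuple(math.floor(c / voxel_size) for c in point)
        if occupancy.get(voxel, 0.0) < tau:
            continue  # empty space: no radiance-field queries on this segment
        # Occupied: place n_fine evenly spaced samples inside the segment.
        for f in range(n_fine):
            samples.append(t0 + (f + 0.5) * step / n_fine)
    return samples
```

For a ray along +x with only voxel (2, 0, 0) marked occupied, `sample_ray((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), {(2, 0, 0): 0.9}, 1.0, 0.0, 4.0, 4, 2)` returns `[2.25, 2.75]`, so the field is queried twice instead of along the whole ray. Testing only segment midpoints is a simplification: a thin occupied region that straddles a segment boundary can be missed, which an exact voxel-traversal march would avoid.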
