Thomas Rowntree

Robotic Vision for Space Mining

Sep 30, 2021

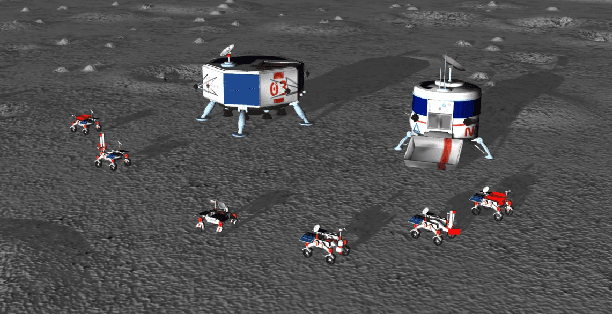

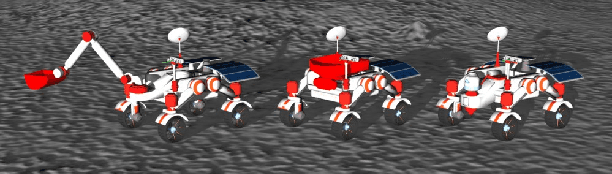

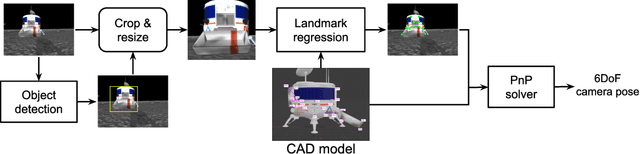

Abstract: Future Moon bases will likely be constructed using resources mined from the lunar surface. The difficulty of maintaining a human workforce on the Moon and the communications lag with Earth mean that mining will need to be conducted by collaborative robots with a high degree of autonomy. In this paper, we explore the utility of robotic vision in addressing several major challenges of autonomous mining in the lunar environment: the lack of satellite positioning systems, navigation in hazardous terrain, and delicate robot interactions. Specifically, we describe and report the results of robotic vision algorithms that we developed for Phase 2 of the NASA Space Robotics Challenge, which was framed in the context of autonomous collaborative robots for mining on the Moon. The competition provided a simulated lunar environment that exhibits the complexities described above. We show how machine learning-enabled vision could help alleviate the challenges posed by the lunar environment. A robust multi-robot coordinator was also developed to achieve long-term operation and effective collaboration between robots.

Practical Robot Learning from Demonstrations using Deep End-to-End Training

Jun 07, 2019

Abstract: Robots need to learn behaviors in intuitive and practical ways for widespread deployment in human environments. To learn a robot behavior end-to-end, we train a variant of ResNet that maps eye-in-hand camera images to end-effector velocities. In our setup, a human teacher demonstrates the task via joystick. We show that a simple servoing task can be learned in less than an hour, including data collection, model training, and deployment time. Moreover, 16 minutes of demonstrations were enough for the robot to learn the task.
