Thomas Lampert

PRISM: Lightweight Multivariate Time-Series Classification through Symmetric Multi-Resolution Convolutional Layers

Aug 06, 2025

Abstract:Multivariate time-series classification is pivotal in domains ranging from wearable sensing to biomedical monitoring. Despite recent advances, Transformer- and CNN-based models often remain computationally heavy, offer limited frequency diversity, and require extensive parameter budgets. We propose PRISM (Per-channel Resolution-Informed Symmetric Module), a convolution-based feature extractor that applies symmetric finite-impulse-response (FIR) filters at multiple temporal scales, independently per channel. This multi-resolution, per-channel design yields highly frequency-selective embeddings without any inter-channel convolutions, greatly reducing model size and complexity. Across human-activity, sleep-stage and biomedical benchmarks, PRISM, paired with lightweight classification heads, matches or outperforms leading CNN and Transformer baselines, while using roughly an order of magnitude fewer parameters and FLOPs. By uniting classical signal processing insights with modern deep learning, PRISM offers an accurate, resource-efficient solution for multivariate time-series classification.
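The idea of per-channel symmetric FIR filtering at several temporal scales can be illustrated with a short NumPy sketch. This is not the authors' implementation: the function names, the kernel lengths, and the use of fixed (rather than learned) filter taps are all assumptions made for illustration. It shows only that a symmetric kernel of 2m - 1 taps is determined by m free values, and that each channel is filtered on its own with no mixing across channels.

```python
import numpy as np


def symmetric_kernel(half):
    """Mirror m free taps into a symmetric FIR kernel of 2m - 1 taps (hypothetical helper)."""
    half = np.asarray(half, dtype=float)
    # [a, b, c] -> [a, b, c, b, a]; the centre tap is not duplicated.
    return np.concatenate([half, half[-2::-1]])


def multi_resolution_features(x, half_kernels):
    """Filter every channel independently with each symmetric kernel.

    x            : array of shape (channels, time)
    half_kernels : list of 1-D arrays, one per temporal scale (assumed values)
    returns      : array of shape (n_scales * channels, time), ordered scale by scale
    """
    feats = []
    for half in half_kernels:
        kernel = symmetric_kernel(half)
        for channel in x:
            # 'same' keeps the time length; no inter-channel convolution is performed.
            feats.append(np.convolve(channel, kernel, mode="same"))
    return np.stack(feats)


rng = np.random.default_rng(0)
signal = rng.standard_normal((3, 64))                      # 3 channels, 64 time steps
halves = [rng.standard_normal(m) for m in (2, 4, 8)]       # kernels of 3, 7 and 15 taps
features = multi_resolution_features(signal, halves)
```

In this sketch the symmetry constraint roughly halves the number of free values per filter, and the absence of cross-channel kernels keeps the total count proportional to the number of scales rather than to the square of the channel count.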

Maximising Histopathology Segmentation using Minimal Labels via Self-Supervision

Dec 19, 2024

Abstract:Histopathology, the microscopic examination of tissue samples, is essential for disease diagnosis and prognosis. Accurate segmentation and identification of key regions in histopathology images are crucial for developing automated solutions. However, state-of-the-art deep learning segmentation methods like UNet require extensive labels, which are both costly and time-consuming to obtain, particularly when dealing with multiple stainings. To mitigate this, multi-stain segmentation methods such as MDS1 and UDAGAN have been developed, which reduce the need for labels by requiring only one (source) stain to be labelled. Nonetheless, obtaining source stain labels can still be challenging, and segmentation models fail when they are unavailable. This article shows that through self-supervised pre-training, including SimCLR, BYOL, and a novel approach, HR-CS-CO, the performance of these segmentation methods (UNet, MDS1, and UDAGAN) can be retained even with 95% fewer labels. Notably, with self-supervised pre-training and using only 5% labels, the performance drops are minimal: 5.9% for UNet, 4.5% for MDS1, and 6.2% for UDAGAN, compared to their respective fully supervised counterparts (without pre-training, using 100% labels). The code is available from https://github.com/zeeshannisar/improve_kidney_glomeruli_segmentation [to be made public upon acceptance].

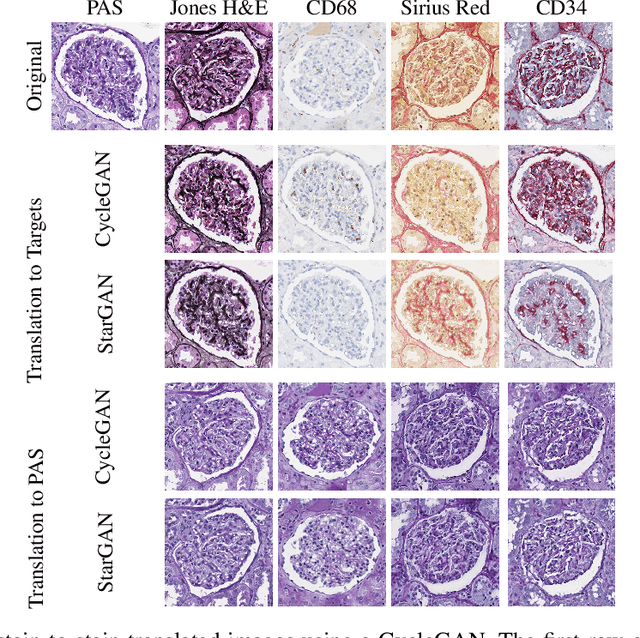

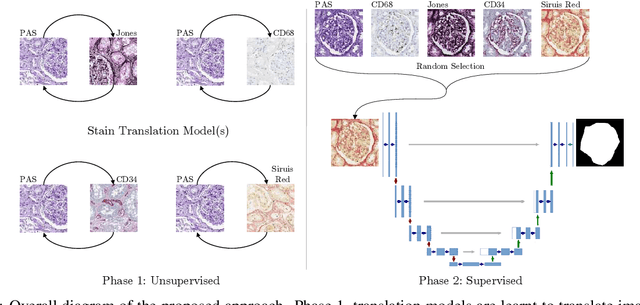

HistoStarGAN: A Unified Approach to Stain Normalisation, Stain Transfer and Stain Invariant Segmentation in Renal Histopathology

Oct 18, 2022

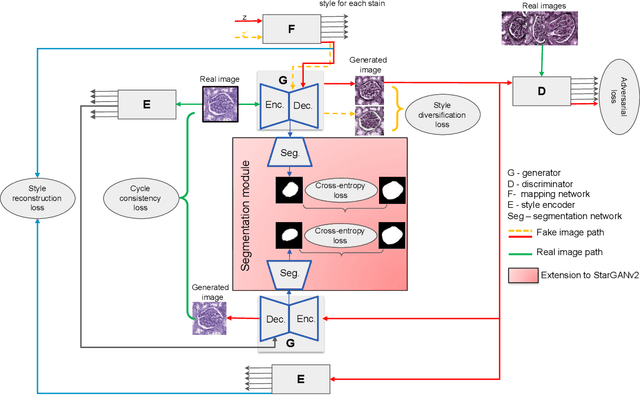

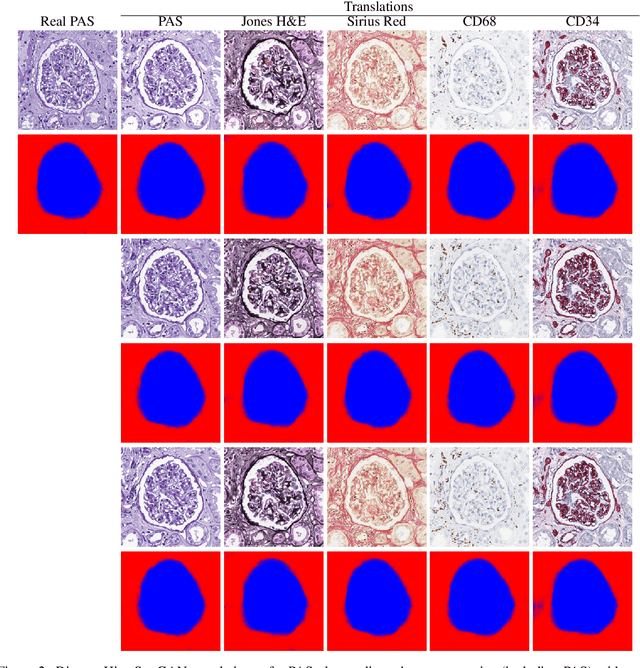

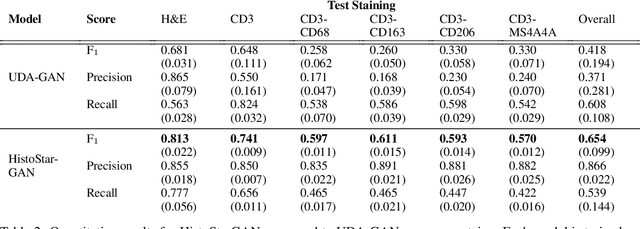

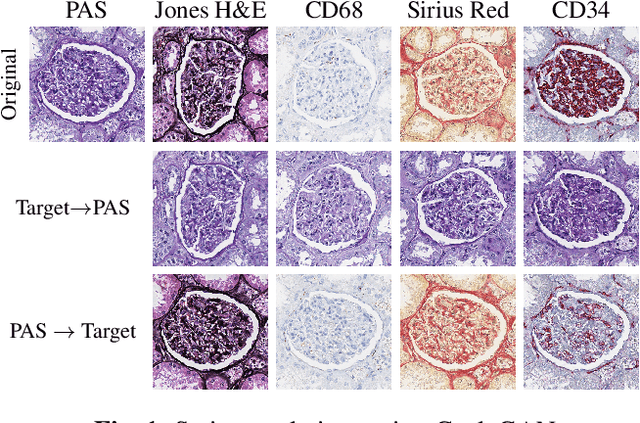

Abstract:Virtual stain transfer is a promising area of research in Computational Pathology, which has great potential to alleviate important limitations when applying deep learning-based solutions, such as lack of annotations and sensitivity to domain shift. However, in the literature, the majority of virtual staining approaches are trained for a specific staining or stain combination, and their extension to unseen stainings requires the acquisition of additional data and training. In this paper, we propose HistoStarGAN, a unified framework that performs stain transfer between multiple stainings, stain normalisation and stain invariant segmentation, all in one inference of the model. We demonstrate the generalisation abilities of the proposed solution to perform diverse stain transfer and accurate stain invariant segmentation over numerous unseen stainings, which is the first such demonstration in the field. Moreover, the pre-trained HistoStarGAN model can serve as a synthetic data generator, which paves the way for the use of fully annotated synthetic image data to improve the training of deep learning-based algorithms. To illustrate the capabilities of our approach, as well as the potential risks in the microscopy domain, inspired by applications in natural images, we generated KidneyArtPathology, a fully annotated artificial image dataset for renal pathology.

Towards Measuring Domain Shift in Histopathological Stain Translation in an Unsupervised Manner

May 09, 2022

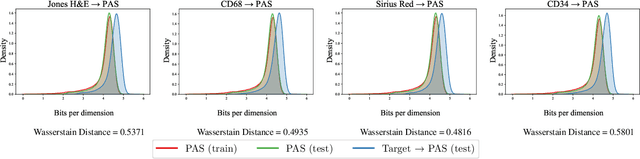

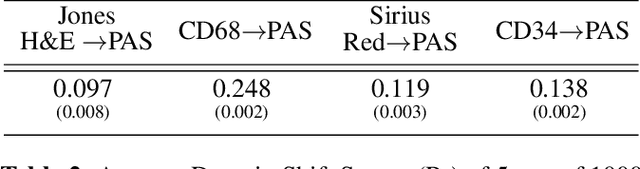

Abstract:Domain shift in digital histopathology can occur when different stains or scanners are used, during stain translation, etc. A deep neural network trained on source data may not generalise well to data that has undergone some domain shift. An important step towards being robust to domain shift is the ability to detect and measure it. This article demonstrates that the PixelCNN and domain shift metric can be used to detect and quantify domain shift in digital histopathology, and that they correlate strongly with generalisation performance. These findings pave the way for a mechanism to infer the average performance of a model (trained on source data) on unseen and unlabelled target data.

* 5 pages, 3 figures, 2 tables

Self adversarial attack as an augmentation method for immunohistochemical stainings

Mar 21, 2021

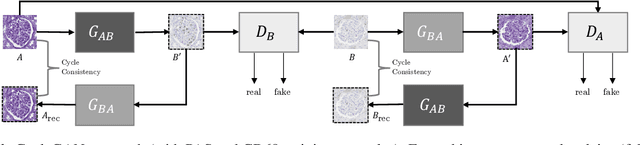

Abstract:It has been shown that unpaired image-to-image translation methods constrained by cycle-consistency hide the information necessary for accurate input reconstruction as imperceptible noise. We demonstrate that, when applied to histopathology data, this hidden noise appears to be related to stain-specific features and show that this is the case with two immunohistochemical stainings during translation to Periodic acid-Schiff (PAS), a histochemical staining method commonly applied in renal pathology. Moreover, by perturbing this hidden information, the translation models produce different, plausible outputs. We demonstrate that this property can be used as an augmentation method which, in the case of supervised glomeruli segmentation, leads to improved performance.

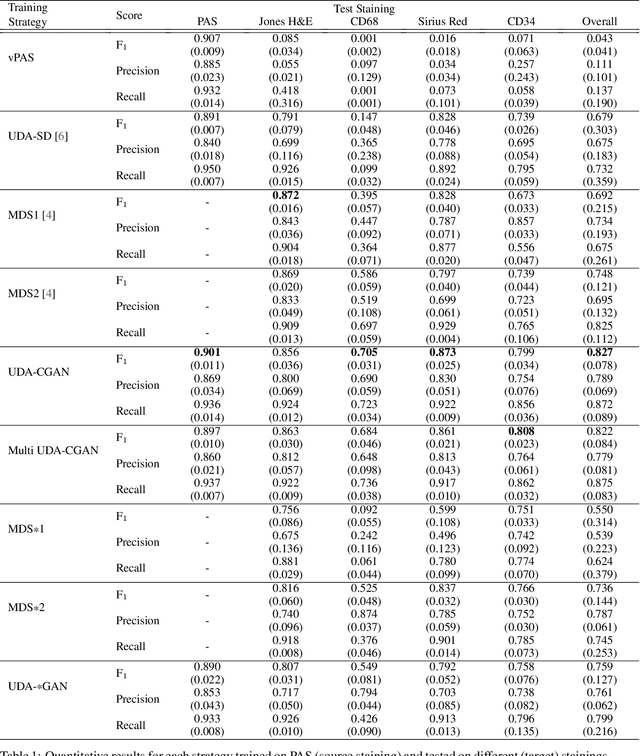

Towards Histopathological Stain Invariance by Unsupervised Domain Augmentation using Generative Adversarial Networks

Dec 22, 2020

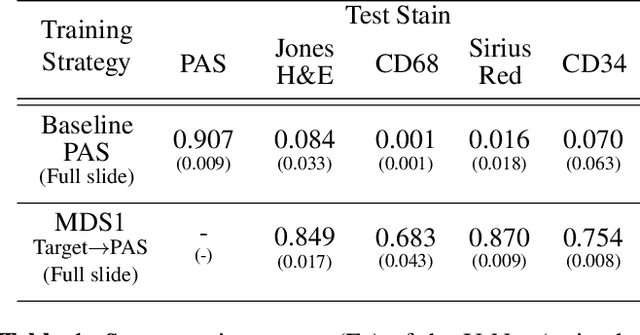

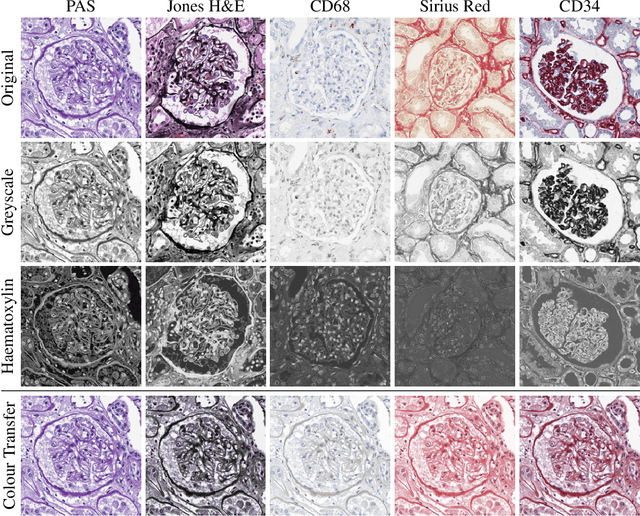

Abstract:The application of supervised deep learning methods in digital pathology is limited due to their sensitivity to domain shift. Digital Pathology is an area prone to high variability due to many sources, including the common practice of evaluating several consecutive tissue sections stained with different staining protocols. Obtaining labels for each stain is very expensive and time-consuming as it requires a high level of domain knowledge. In this article, we propose an unsupervised augmentation approach based on adversarial image-to-image translation, which facilitates the training of stain invariant supervised convolutional neural networks. By training the network on one commonly used staining modality and applying it to images that include corresponding, but differently stained, tissue structures, the presented method demonstrates significant improvements over other approaches. These benefits are illustrated in the problem of glomeruli segmentation in seven different staining modalities (PAS, Jones H&E, CD68, Sirius Red, CD34, H&E and CD3), and analysis of the learned representations demonstrates their stain invariance.

An automatic framework to study the tissue micro-environment of renal glomeruli in differently stained consecutive digital whole slide images

Aug 29, 2020

Abstract:Objective: This article presents an automatic image processing framework to extract quantitative high-level information describing the micro-environment of glomeruli in consecutive whole slide images (WSIs) processed with different staining modalities of patients with chronic kidney rejection after kidney transplantation. Methods: This three-step framework consists of: 1) cell and anatomical structure segmentation based on colour deconvolution and deep learning; 2) fusion of information from different stainings using a newly developed registration algorithm; 3) feature extraction. Results: Each step of the framework is validated independently both quantitatively and qualitatively by pathologists. An illustration of the different types of features that can be extracted is presented. Conclusion: The proposed generic framework allows for the analysis of the micro-environment surrounding large structures that can be segmented (either manually or automatically). It is independent of the segmentation approach and is therefore applicable to a variety of biomedical research questions. Significance: Chronic tissue remodelling processes after kidney transplantation can result in interstitial fibrosis and tubular atrophy (IFTA) and glomerulosclerosis. This pipeline provides tools to quantitatively analyse, in the same spatial context, information from different consecutive WSIs and help researchers understand the complex underlying mechanisms leading to IFTA and glomerulosclerosis.

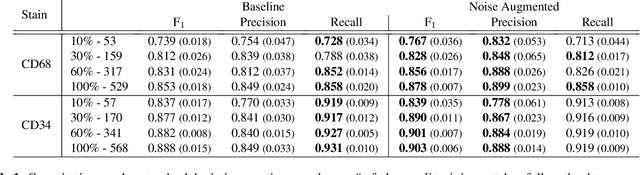

Strategies for Training Stain Invariant CNNs

Nov 03, 2018

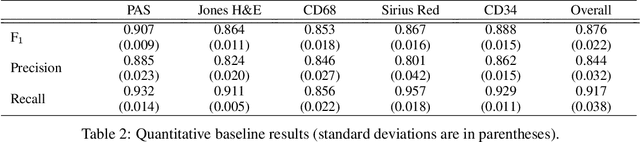

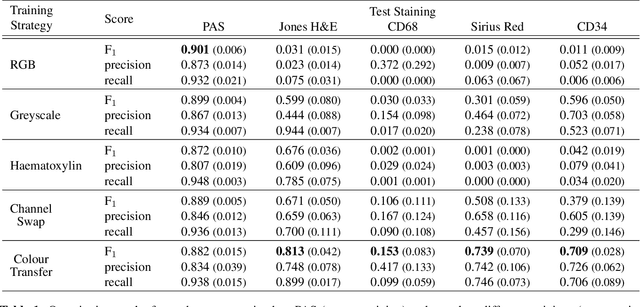

Abstract:An important part of Digital Pathology is the analysis of multiple digitised whole slide images from differently stained tissue sections. It is common practice to mount consecutive sections containing corresponding microscopic structures on glass slides, and to stain them differently to highlight specific tissue components. These multiple staining modalities result in very different images but include a significant amount of consistent image information. Deep learning approaches have recently been proposed to analyse these images in order to automatically identify objects of interest for pathologists. These supervised approaches require a vast amount of annotations, which are difficult and expensive to acquire, a problem that is multiplied with multiple stainings. This article presents several training strategies that make progress towards stain invariant networks. By training the network on one commonly used staining modality and applying it to images that include corresponding but differently stained tissue structures, the presented unsupervised strategies demonstrate significant improvements over standard training strategies.

Retrieving and Ranking Similar Questions from Question-Answer Archives Using Topic Modelling and Topic Distribution Regression

Jun 12, 2016

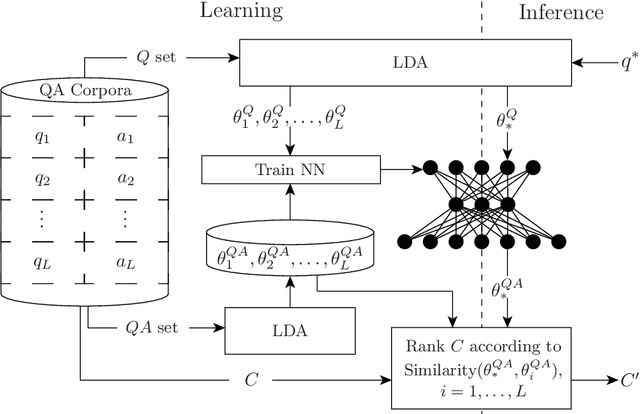

Abstract:Presented herein is a novel model for similar question ranking within collaborative question answer platforms. The presented approach integrates a regression stage to relate topics derived from questions to those derived from question-answer pairs. This helps to avoid problems caused by the differences in vocabulary used within questions and answers, and the tendency for questions to be shorter than answers. The model is shown to outperform translation methods and topic modelling (without regression) on several real-world datasets.
