Thijs Becker

Evaluating Feature Attribution Methods in the Image Domain

Feb 22, 2022

Abstract: Feature attribution maps are a popular approach to highlight the most important pixels in an image for a given prediction of a model. Despite a recent growth in popularity and available methods, little attention is given to the objective evaluation of such attribution maps. Building on previous work in this domain, we investigate existing metrics and propose new variants of metrics for the evaluation of attribution maps. We confirm a recent finding that different attribution metrics seem to measure different underlying concepts of attribution maps, and extend this finding to a larger selection of attribution metrics. We also find that metric results on one dataset do not necessarily generalize to other datasets, and methods with desirable theoretical properties such as DeepSHAP do not necessarily outperform computationally cheaper alternatives. Based on these findings, we propose a general benchmarking approach to identify the ideal feature attribution method for a given use case. Implementations of attribution metrics and our experiments are available online.

Longitudinal modeling of MS patient trajectories improves predictions of disability progression

Nov 09, 2020

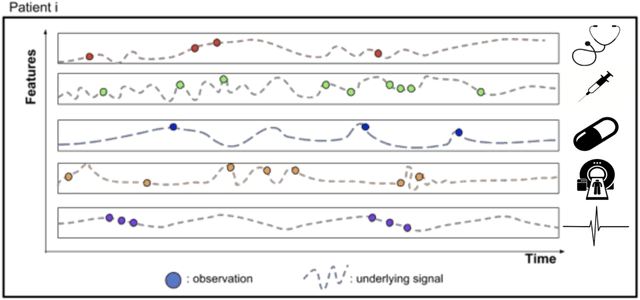

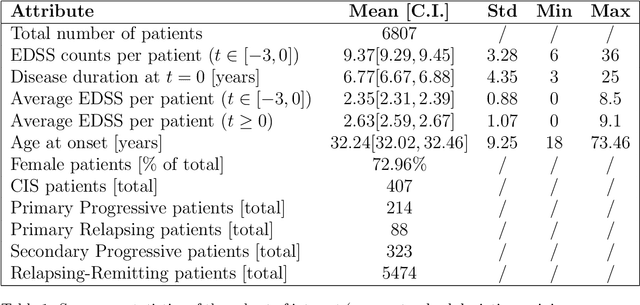

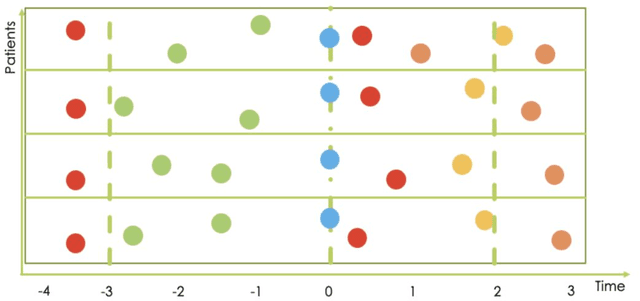

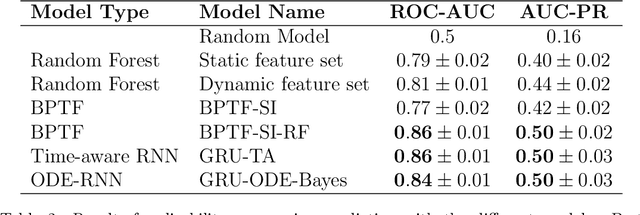

Abstract: Research in Multiple Sclerosis (MS) has recently focused on extracting knowledge from real-world clinical data sources. This type of data is more abundant than data produced during clinical trials and potentially more informative about real-world clinical practice. However, this comes at the cost of less curated and controlled data sets. In this work, we address the task of optimally extracting information from longitudinal patient data in the real-world setting with a special focus on the sporadic sampling problem. Using the MSBase registry, we show that with machine learning methods suited for patient trajectories modeling, such as recurrent neural networks and tensor factorization, we can predict disability progression of patients in a two-year horizon with an ROC-AUC of 0.86, which represents a 33% decrease in the ranking pair error (1-AUC) compared to reference methods using static clinical features. Compared to the models available in the literature, this work uses the most complete patient history for MS disease progression prediction.
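
As a rough back-of-the-envelope reading of the two figures quoted above, the reference error they imply can be recovered as follows. Here $e$ denotes the ranking pair error $1 - \mathrm{AUC}$; the symbols and the resulting reference AUC are inferred from the quoted numbers and are not values reported in the abstract.

```latex
\begin{align*}
e_{\text{model}} &= 1 - 0.86 = 0.14 \\
e_{\text{model}} &= (1 - 0.33)\, e_{\text{ref}}
  \;\Rightarrow\; e_{\text{ref}} \approx \frac{0.14}{0.67} \approx 0.21 \\
\mathrm{AUC}_{\text{ref}} &= 1 - e_{\text{ref}} \approx 0.79
\end{align*}
```

Under this reading, the static-feature reference methods would sit at an ROC-AUC of roughly 0.79, assuming the 33% figure is a relative reduction of the error.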

Bayesian optimization of hyper-parameters in reservoir computing

Jun 14, 2017

Abstract: We describe a method for searching the optimal hyper-parameters in reservoir computing, which consists of a Gaussian process with Bayesian optimization. It provides an alternative to other frequently used optimization methods such as grid, random, or manual search. In addition to a set of optimal hyper-parameters, the method also provides a probability distribution of the cost function as a function of the hyper-parameters. We apply this method to two types of reservoirs: nonlinear delay nodes and echo state networks. It shows excellent performance on all considered benchmarks, either matching or significantly surpassing results found in the literature. In general, the algorithm achieves optimal results in fewer iterations when compared to other optimization methods. We have optimized up to six hyper-parameters simultaneously, which would have been infeasible using, e.g., grid search. Due to its automated nature, this method significantly reduces the need for expert knowledge when optimizing the hyper-parameters in reservoir computing. Existing software libraries for Bayesian optimization, such as Spearmint, make the implementation of the algorithm straightforward. A fork of the Spearmint framework along with a tutorial on how to use it in practice is available at https://bitbucket.org/uhasseltmachinelearning/spearmint/
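
The general idea of Gaussian-process-based Bayesian optimization can be illustrated with a minimal NumPy sketch. This is not the paper's Spearmint setup: the one-dimensional `toy_cost` function is a hypothetical stand-in for a reservoir's validation error, and the RBF kernel, its length scale, the expected-improvement acquisition, and the grid search over candidates are all assumptions chosen for brevity.

```python
import numpy as np
from math import erf, pi, sqrt


def rbf_kernel(a, b, length_scale=0.2):
    """Squared-exponential kernel between two 1-D arrays of points."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / length_scale) ** 2)


def gp_posterior(x_obs, y_obs, x_query, noise=1e-6):
    """Posterior mean and std of a zero-mean, unit-variance GP at x_query."""
    K = rbf_kernel(x_obs, x_obs) + noise * np.eye(len(x_obs))
    K_s = rbf_kernel(x_obs, x_query)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y_obs))
    mean = K_s.T @ alpha
    v = np.linalg.solve(L, K_s)
    var = 1.0 - np.sum(v ** 2, axis=0)
    return mean, np.sqrt(np.maximum(var, 1e-12))


def expected_improvement(mean, std, best):
    """Expected improvement over `best` when minimizing the cost."""
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + np.vectorize(erf)(z / sqrt(2.0)))
    pdf = np.exp(-0.5 * z ** 2) / sqrt(2.0 * pi)
    return (best - mean) * cdf + std * pdf


def toy_cost(x):
    """Hypothetical cost surface standing in for a reservoir's error."""
    return (x - 0.3) ** 2 + 0.05 * np.sin(15.0 * x)


def bayes_opt(cost, n_init=3, n_iter=12, seed=0):
    """Minimize `cost` on [0, 1] with a GP surrogate and EI acquisition."""
    rng = np.random.default_rng(seed)
    grid = np.linspace(0.0, 1.0, 201)  # candidate hyper-parameter values
    x_obs = rng.uniform(0.0, 1.0, size=n_init)
    y_obs = cost(x_obs)
    for _ in range(n_iter):
        # Standardize costs so the unit-variance GP prior is reasonable.
        y_mean, y_std = y_obs.mean(), y_obs.std() + 1e-9
        y_norm = (y_obs - y_mean) / y_std
        mean, std = gp_posterior(x_obs, y_norm, grid)
        ei = expected_improvement(mean, std, y_norm.min())
        x_next = grid[np.argmax(ei)]  # most promising candidate
        x_obs = np.append(x_obs, x_next)
        y_obs = np.append(y_obs, cost(x_next))
    best = np.argmin(y_obs)
    return x_obs[best], y_obs[best], x_obs, y_obs


if __name__ == "__main__":
    best_x, best_y, _, _ = bayes_opt(toy_cost)
    print(f"best hyper-parameter: {best_x:.3f}, cost: {best_y:.4f}")
```

The posterior mean and standard deviation returned by `gp_posterior` correspond to the probability distribution over the cost function that the abstract mentions. In more than one dimension the candidate grid would typically be replaced by random or gradient-based search over the acquisition function.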