Thanh Nguyen-Duc

Use of a Multiscale Vision Transformer to predict Nursing Activities Score from Low Resolution Thermal Videos in an Intensive Care Unit

May 30, 2024

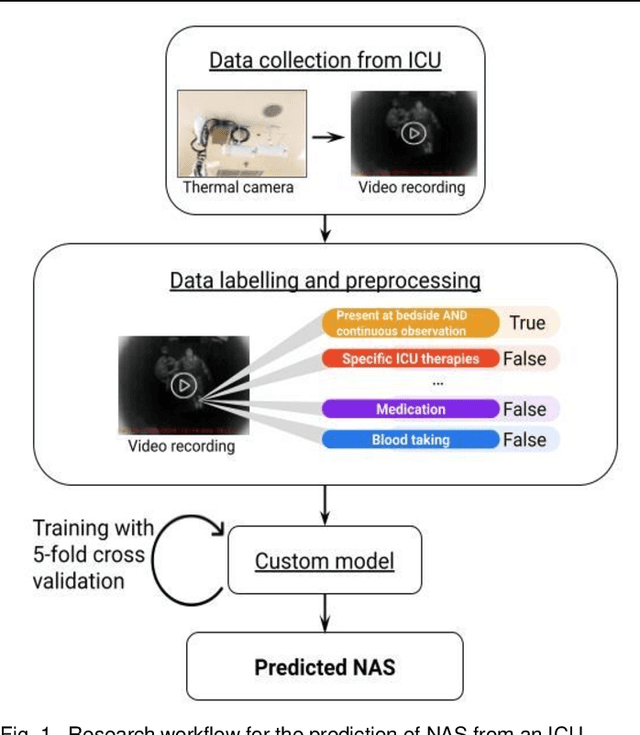

Abstract: Excessive caregiver workload in hospital nurses has been implicated in poorer patient care and increased worker burnout. Measurement of this workload in the Intensive Care Unit (ICU) is often done using the Nursing Activities Score (NAS), but this is usually recorded manually and sporadically. Previous work has made use of Ambient Intelligence (AmI) by using computer vision to passively derive caregiver-patient interaction times to monitor staff workload. In this letter, we propose using a Multiscale Vision Transformer (MViT) to passively predict the NAS from low-resolution thermal videos recorded in an ICU. 458 videos were obtained from an ICU in Melbourne, Australia and used to train an MViTv2 model using an indirect prediction method and a direct prediction method. The indirect method predicted 1 of 8 potentially identifiable NAS activities from the video before inferring the NAS. The direct method predicted the NAS directly from the video. The indirect method yielded an average 5-fold accuracy of 57.21%, an area under the receiver operating characteristic curve (ROC AUC) of 0.865, an F1 score of 0.570 and a mean squared error (MSE) of 28.16. The direct method yielded an MSE of 18.16. We also showed that the MViTv2 outperforms similar models such as R(2+1)D and ResNet50-LSTM under identical settings. This study shows the feasibility of using an MViTv2 to passively predict the NAS in an ICU and monitor staff workload automatically. Our results also show that predicting the NAS directly is more accurate than predicting it indirectly. We hope that our study can provide a direction for future work and further improve the accuracy of passive NAS monitoring.

Cross-adversarial local distribution regularization for semi-supervised medical image segmentation

Oct 02, 2023

Abstract: Medical semi-supervised segmentation is a technique where a model is trained to segment objects of interest in medical images with limited annotated data. Existing semi-supervised segmentation methods are usually based on the smoothness assumption. This assumption implies that the model output distributions of two similar data samples are encouraged to be invariant. In other words, the smoothness assumption states that similar samples (e.g., an image and a copy of it with small perturbations added) should have similar outputs. In this paper, we introduce a novel cross-adversarial local distribution (Cross-ALD) regularization to further enhance the smoothness assumption for the semi-supervised medical image segmentation task. Comprehensive experiments show that Cross-ALD achieves state-of-the-art performance compared with many recent methods on the public LA and ACDC datasets.

Estimation of Clinical Workload and Patient Activity using Deep Learning and Optical Flow

Feb 09, 2022

Abstract: Contactless monitoring using thermal imaging has increasingly been proposed to monitor patient deterioration in hospital, most recently to detect fevers and infections during the COVID-19 pandemic. In this letter, we propose a novel method to estimate patient motion and observe clinical workload using a similar technical setup combined with an open-source object detection algorithm (YOLOv4) and optical flow. Patient motion estimation was used to approximate patient agitation and sedation, while worker motion was used as a surrogate for caregiver workload. Performance was illustrated by comparing over 32000 frames from videos of patients recorded in an Intensive Care Unit with clinical agitation scores recorded by clinical workers.

Deep EHR Spotlight: a Framework and Mechanism to Highlight Events in Electronic Health Records for Explainable Predictions

Mar 25, 2021

Abstract: The wide adoption of Electronic Health Records (EHR) has resulted in large amounts of clinical data becoming available, which promises to support service delivery and advance clinical and informatics research. Deep learning techniques have demonstrated performance in predictive analytic tasks using EHRs yet they typically lack model result transparency or explainability functionalities and require cumbersome pre-processing tasks. Moreover, EHRs contain heterogeneous and multi-modal data points such as text, numbers and time series which further hinder visualisation and interpretability. This paper proposes a deep learning framework to: 1) encode patient pathways from EHRs into images, 2) highlight important events within pathway images, and 3) enable more complex predictions with additional intelligibility. The proposed method relies on a deep attention mechanism for visualisation of the predictions and allows predicting multiple sequential outcomes.

* AMIA 2021 Virtual Informatics Summit

MED-TEX: Transferring and Explaining Knowledge with Less Data from Pretrained Medical Imaging Models

Aug 06, 2020

Abstract: Deep neural network based image classification methods usually require a large amount of training data and lack interpretability, both of which are critical issues in the medical imaging domain. In this paper, we develop a novel knowledge distillation and model interpretation framework for medical image classification that jointly solves the above two issues. Specifically, to address the data-hungry issue, we propose to learn a small student model with less data by distilling knowledge only from a cumbersome pretrained teacher model. To interpret the teacher model as well as assist the learning of the student, an explainer module is introduced to highlight the regions of an input medical image that are important for the predictions of the teacher model. Furthermore, the joint framework is trained in a principled way derived from an information-theoretic perspective. The framework's performance is demonstrated by comprehensive experiments on the knowledge distillation and model interpretation tasks, compared with state-of-the-art methods on a fundus disease dataset.

Compressed Sensing MRI Reconstruction using a Generative Adversarial Network with a Cyclic Loss

Mar 15, 2018

Abstract: Compressed Sensing MRI (CS-MRI) has provided theoretical foundations upon which the time-consuming MRI acquisition process can be accelerated. However, it primarily relies on iterative numerical solvers, which still hinders its adoption in time-critical applications. In addition, recent advances in deep neural networks have shown their potential in computer vision and image processing, but their adaptation to MRI reconstruction is still in an early stage. In this paper, we propose a novel deep learning-based generative adversarial model, RefineGAN, for fast and accurate CS-MRI reconstruction. The proposed model is a variant of fully-residual convolutional autoencoder and generative adversarial networks (GANs), specifically designed for the CS-MRI formulation; it employs deeper generator and discriminator networks with a cyclic data consistency loss for faithful interpolation in the given under-sampled k-space data. In addition, our solution leverages a chained network to further enhance the reconstruction quality. RefineGAN is fast and accurate: the reconstruction process is extremely rapid, as low as tens of milliseconds for reconstruction of a 256x256 image, because it is a one-way deployment on a feed-forward network, and the image quality is superior even for extremely low sampling rates (as low as 10%) due to the data-driven nature of the method. We demonstrate that RefineGAN outperforms the state-of-the-art CS-MRI methods by a large margin in terms of both running time and image quality via evaluation using several open-source MRI databases.

* submitted to IEEE Transactions on Medical Imaging
