Tasnim Sakib Apon

Transforming Precision: A Comparative Analysis of Vision Transformers, CNNs, and Traditional ML for Knee Osteoarthritis Severity Diagnosis

Oct 26, 2024Abstract:Knee osteoarthritis(KO) is a degenerative joint disease that can cause severe pain and impairment. With increased prevalence, precise diagnosis by medical imaging analytics is crucial for appropriate illness management. This research investigates a comparative analysis between traditional machine learning techniques and new deep learning models for diagnosing KO severity from X-ray pictures. This study does not introduce new architectural innovations but rather illuminates the robust applicability and comparative effectiveness of pre-existing ViT models in a medical imaging context, specifically for KO severity diagnosis. The insights garnered from this comparative analysis advocate for the integration of advanced ViT models in clinical diagnostic workflows, potentially revolutionizing the precision and reliability of KO assessments. This study does not introduce new architectural innovations but rather illuminates the robust applicability and comparative effectiveness of pre-existing ViT models in a medical imaging context, specifically for KO severity diagnosis. The insights garnered from this comparative analysis advocate for the integration of advanced ViT models in clinical diagnostic workflows, potentially revolutionizing the precision & reliability of KO assessments. The study utilizes an osteoarthritis dataset from the Osteoarthritis Initiative (OAI) comprising images with 5 severity categories and uneven class distribution. While classic machine learning models like GaussianNB and KNN struggle in feature extraction, Convolutional Neural Networks such as Inception-V3, VGG-19 achieve better accuracy between 55-65% by learning hierarchical visual patterns. However, Vision Transformer architectures like Da-VIT, GCViT and MaxViT emerge as indisputable champions, displaying 66.14% accuracy, 0.703 precision, 0.614 recall, AUC exceeding 0.835 thanks to self-attention processes.

Interpretable Bangla Sarcasm Detection using BERT and Explainable AI

Mar 22, 2023

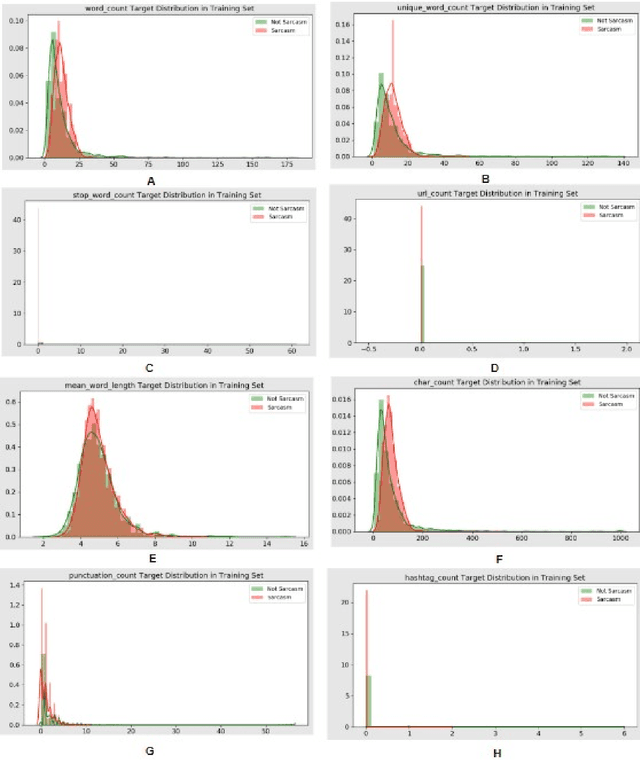

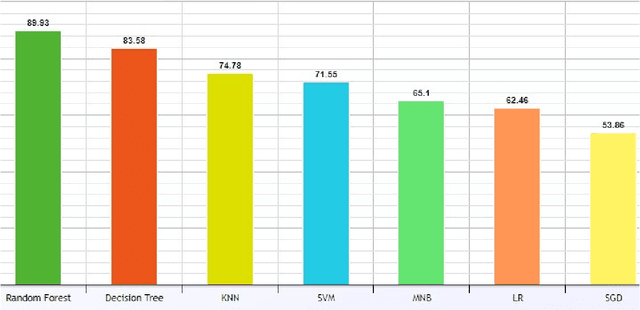

Abstract:A positive phrase or a sentence with an underlying negative motive is usually defined as sarcasm that is widely used in today's social media platforms such as Facebook, Twitter, Reddit, etc. In recent times active users in social media platforms are increasing dramatically which raises the need for an automated NLP-based system that can be utilized in various tasks such as determining market demand, sentiment analysis, threat detection, etc. However, since sarcasm usually implies the opposite meaning and its detection is frequently a challenging issue, data meaning extraction through an NLP-based model becomes more complicated. As a result, there has been a lot of study on sarcasm detection in English over the past several years, and there's been a noticeable improvement and yet sarcasm detection in the Bangla language's state remains the same. In this article, we present a BERT-based system that can achieve 99.60\% while the utilized traditional machine learning algorithms are only capable of achieving 89.93\%. Additionally, we have employed Local Interpretable Model-Agnostic Explanations that introduce explainability to our system. Moreover, we have utilized a newly collected bangla sarcasm dataset, BanglaSarc that was constructed specifically for the evaluation of this study. This dataset consists of fresh records of sarcastic and non-sarcastic comments, the majority of which are acquired from Facebook and YouTube comment sections.

Explainable AI based Glaucoma Detection using Transfer Learning and LIME

Oct 07, 2022

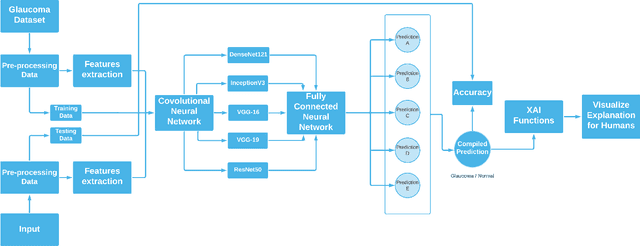

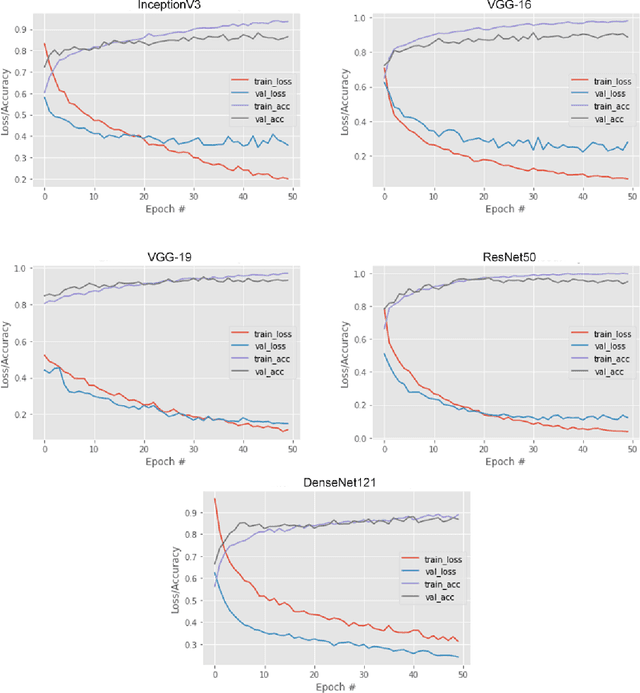

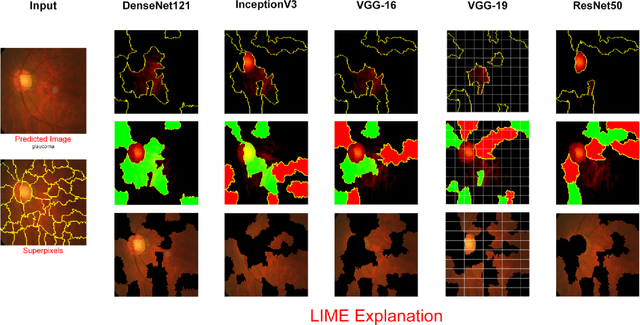

Abstract:Glaucoma is the second driving reason for partial or complete blindness among all the visual deficiencies which mainly occurs because of excessive pressure in the eye due to anxiety or depression which damages the optic nerve and creates complications in vision. Traditional glaucoma screening is a time-consuming process that necessitates the medical professionals' constant attention, and even so time to time due to the time constrains and pressure they fail to classify correctly that leads to wrong treatment. Numerous efforts have been made to automate the entire glaucoma classification procedure however, these existing models in general have a black box characteristics that prevents users from understanding the key reasons behind the prediction and thus medical practitioners generally can not rely on these system. In this article after comparing with various pre-trained models, we propose a transfer learning model that is able to classify Glaucoma with 94.71\% accuracy. In addition, we have utilized Local Interpretable Model-Agnostic Explanations(LIME) that introduces explainability in our system. This improvement enables medical professionals obtain important and comprehensive information that aid them in making judgments. It also lessen the opacity and fragility of the traditional deep learning models.

BanglaSarc: A Dataset for Sarcasm Detection

Sep 27, 2022

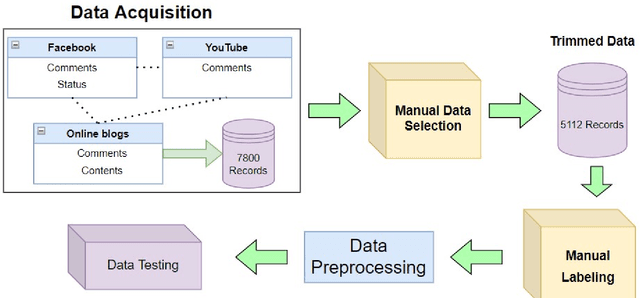

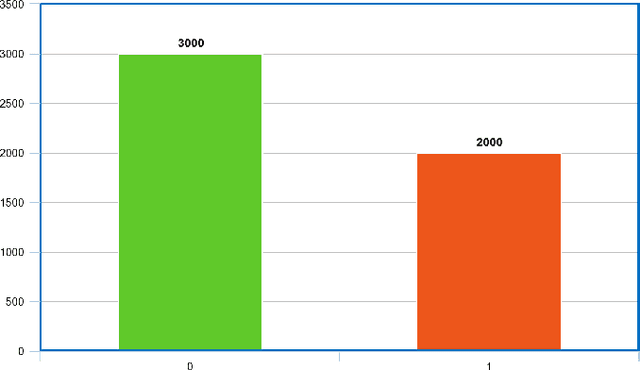

Abstract:Being one of the most widely spoken language in the world, the use of Bangla has been increasing in the world of social media as well. Sarcasm is a positive statement or remark with an underlying negative motivation that is extensively employed in today's social media platforms. There has been a significant improvement in sarcasm detection in English over the previous many years, however the situation regarding Bangla sarcasm detection remains unchanged. As a result, it is still difficult to identify sarcasm in bangla, and a lack of high-quality data is a major contributing factor. This article proposes BanglaSarc, a dataset constructed specifically for bangla textual data sarcasm detection. This dataset contains of 5112 comments/status and contents collected from various online social platforms such as Facebook, YouTube, along with a few online blogs. Due to the limited amount of data collection of categorized comments in Bengali, this dataset will aid in the of study identifying sarcasm, recognizing people's emotion, detecting various types of Bengali expressions, and other domains. The dataset is publicly available at https://www.kaggle.com/datasets/sakibapon/banglasarc.

Real Time Action Recognition from Video Footage

Dec 13, 2021

Abstract:Crime rate is increasing proportionally with the increasing rate of the population. The most prominent approach was to introduce Closed-Circuit Television (CCTV) camera-based surveillance to tackle the issue. Video surveillance cameras have added a new dimension to detect crime. Several research works on autonomous security camera surveillance are currently ongoing, where the fundamental goal is to discover violent activity from video feeds. From the technical viewpoint, this is a challenging problem because analyzing a set of frames, i.e., videos in temporal dimension to detect violence might need careful machine learning model training to reduce false results. This research focuses on this problem by integrating state-of-the-art Deep Learning methods to ensure a robust pipeline for autonomous surveillance for detecting violent activities, e.g., kicking, punching, and slapping. Initially, we designed a dataset of this specific interest, which contains 600 videos (200 for each action). Later, we have utilized existing pre-trained model architectures to extract features, and later used deep learning network for classification. Also, We have classified our models' accuracy, and confusion matrix on different pre-trained architectures like VGG16, InceptionV3, ResNet50, Xception and MobileNet V2 among which VGG16 and MobileNet V2 performed better.

Demystifying Deep Learning Models for Retinal OCT Disease Classification using Explainable AI

Nov 06, 2021

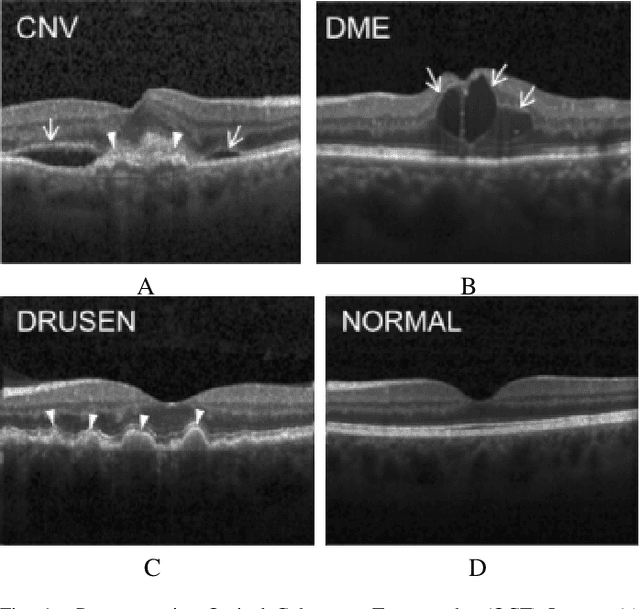

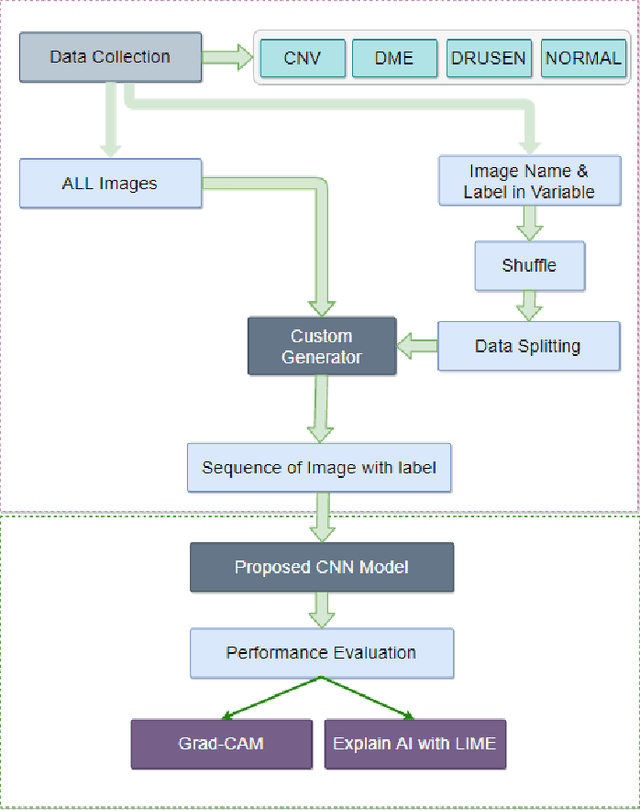

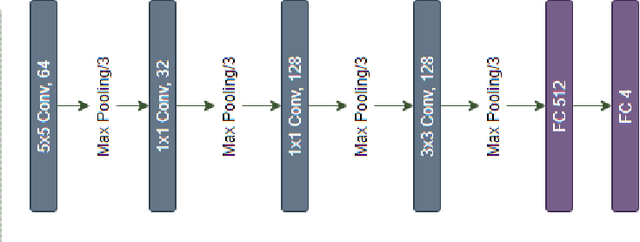

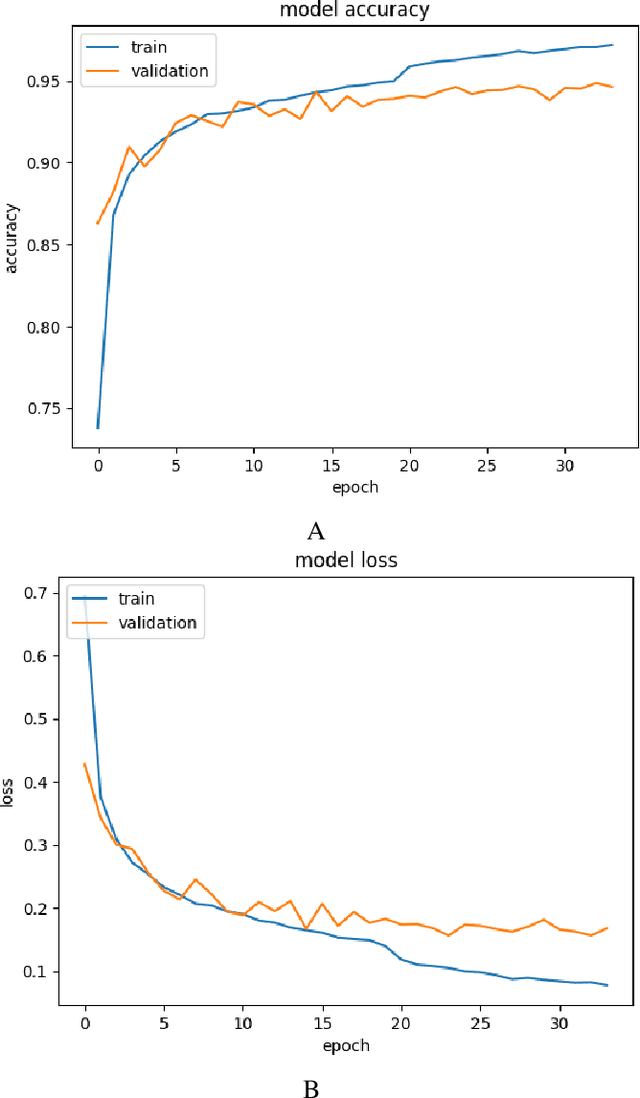

Abstract:In the world of medical diagnostics, the adoption of various deep learning techniques is quite common as well as effective, and its statement is equally true when it comes to implementing it into the retina Optical Coherence Tomography (OCT) sector, but (i)These techniques have the black box characteristics that prevent the medical professionals to completely trust the results generated from them (ii)Lack of precision of these methods restricts their implementation in clinical and complex cases (iii)The existing works and models on the OCT classification are substantially large and complicated and they require a considerable amount of memory and computational power, reducing the quality of classifiers in real-time applications. To meet these problems, in this paper a self-developed CNN model has been proposed which is comparatively smaller and simpler along with the use of Lime that introduces Explainable AI to the study and helps to increase the interpretability of the model. This addition will be an asset to the medical experts for getting major and detailed information and will help them in making final decisions and will also reduce the opacity and vulnerability of the conventional deep learning models.

Action Recognition using Transfer Learning and Majority Voting for CSGO

Nov 06, 2021

Abstract:Presently online video games have become a progressively favorite source of recreation and Counter Strike: Global Offensive (CS: GO) is one of the top-listed online first-person shooting games. Numerous competitive games are arranged every year by Esports. Nonetheless, (i) No study has been conducted on video analysis and action recognition of CS: GO game-play which can play a substantial role in the gaming industry for prediction model (ii) No work has been done on the real-time application on the actions and results of a CS: GO match (iii) Game data of a match is usually available in the HLTV as a CSV formatted file however it does not have open access and HLTV tends to prevent users from taking data. This manuscript aims to develop a model for accurate prediction of 4 different actions and compare the performance among the five different transfer learning models with our self-developed deep neural network and identify the best-fitted model and also including major voting later on, which is qualified to provide real time prediction and the result of this model aids to the construction of the automated system of gathering and processing more data alongside solving the issue of collecting data from HLTV.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge