Taro Sunagawa

Three approaches to facilitate DNN generalization to objects in out-of-distribution orientations and illuminations: late-stopping, tuning batch normalization and invariance loss

Oct 30, 2021

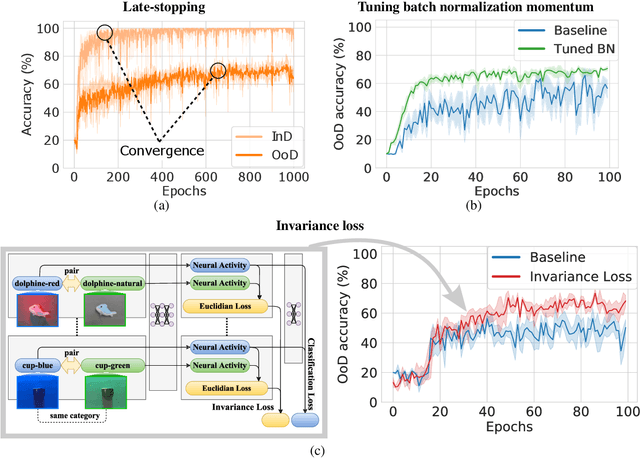

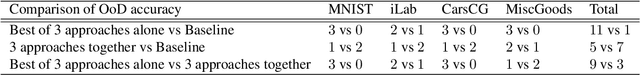

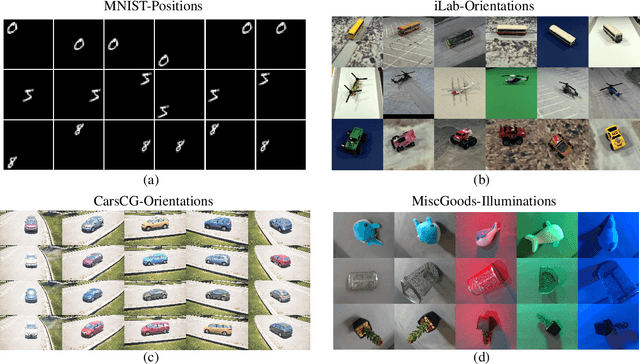

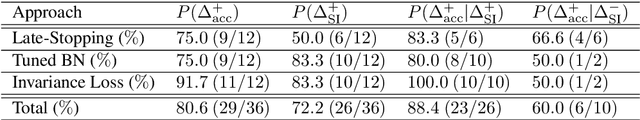

Abstract: The training data distribution is often biased towards objects in certain orientations and illumination conditions. While humans have a remarkable capability of recognizing objects in out-of-distribution (OoD) orientations and illuminations, Deep Neural Networks (DNNs) suffer severely in this case, even when large amounts of training examples are available. In this paper, we investigate three different approaches to improve DNNs in recognizing objects in OoD orientations and illuminations: (i) training much longer after convergence of the in-distribution (InD) validation accuracy, i.e., late-stopping, (ii) tuning the momentum parameter of the batch normalization layers, and (iii) enforcing invariance of the neural activity in an intermediate layer to orientation and illumination conditions. Each of these approaches substantially improves the DNN's OoD accuracy (more than 20% in some cases). We report results on four datasets: two are modified from the MNIST and iLab datasets, and the other two are novel (one of 3D rendered cars and another of objects captured under various controlled orientations and illumination conditions). These datasets make it possible to study the effects of different amounts of bias and are challenging because DNNs perform poorly in OoD conditions. Finally, we demonstrate that even though the three approaches focus on different aspects of DNNs, they all tend to lead to the same underlying neural mechanism that enables the OoD accuracy gains: individual neurons in the intermediate layers become more selective to a category and also invariant to OoD orientations and illuminations.
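The abstract does not give the form of the invariance term in approach (iii), so the following is only a minimal sketch of one way such a penalty could look, not the paper's loss. It assumes the intermediate-layer activations of the same object under several orientation or illumination conditions are available as plain lists, and it penalizes the per-unit variance across those conditions. The function name `invariance_penalty` and the variance-based form are assumptions made for illustration.

```python
from statistics import fmean


def invariance_penalty(activations):
    """Mean per-unit variance of activations across conditions.

    `activations` is a list of equal-length vectors, one per condition
    (orientation/illumination) of the same object. This variance-based
    form is an illustrative assumption, not the paper's definition.
    """
    n_units = len(activations[0])
    total = 0.0
    for unit in range(n_units):
        # Collect this unit's response under every condition.
        values = [vec[unit] for vec in activations]
        mean_value = fmean(values)
        # Population variance of the unit across conditions.
        total += fmean([(v - mean_value) ** 2 for v in values])
    return total / n_units
```

Under these assumptions, a training objective would add a weighted copy of this penalty to the usual classification loss; the penalty is zero exactly when every unit responds identically across conditions.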

Annotation Cost Reduction of Stream-based Active Learning by Automated Weak Labeling using a Robot Arm

Oct 03, 2021

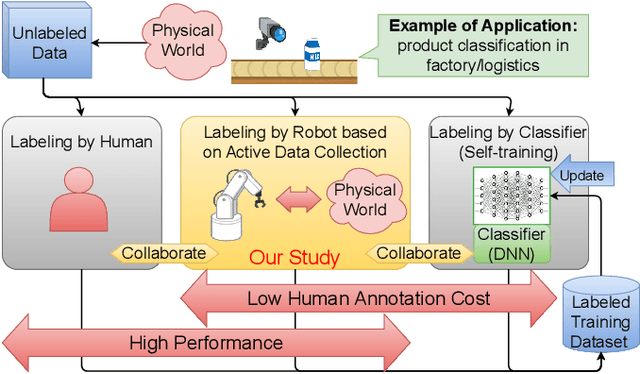

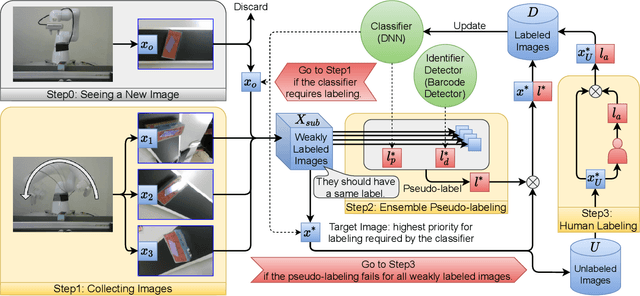

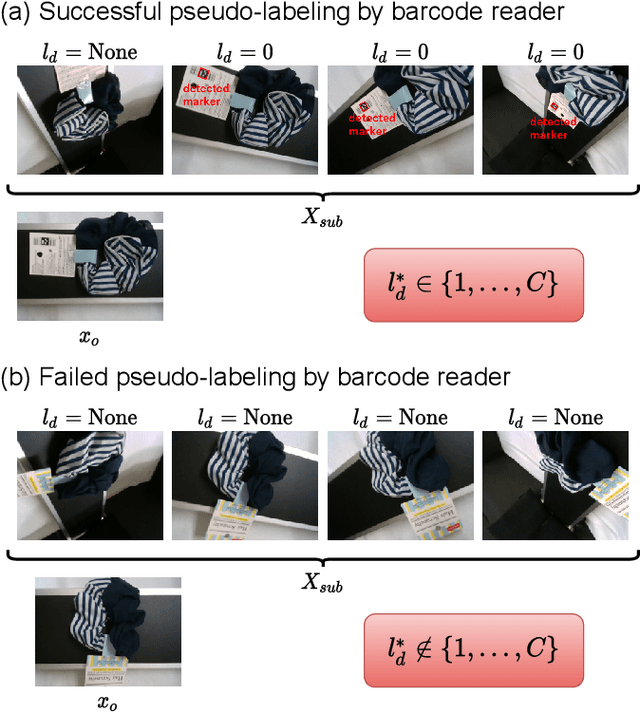

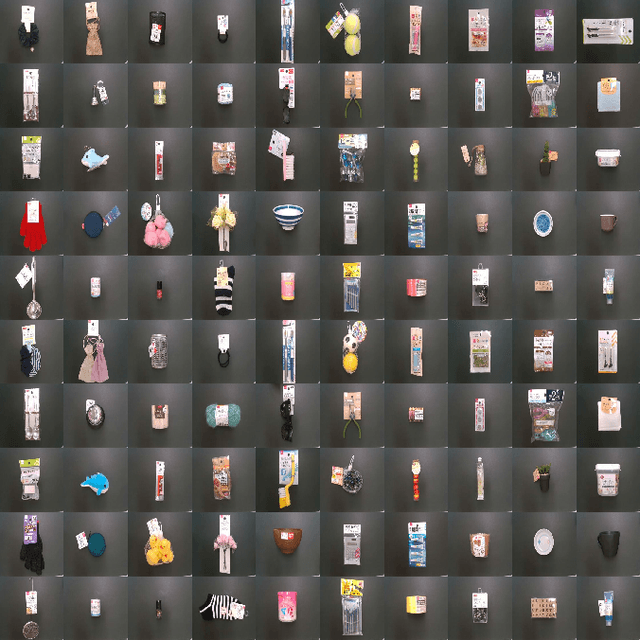

Abstract: Stream-based active learning (AL) is an efficient method for collecting training data, and it is used to reduce the human annotation cost required in machine learning. However, it is hard to claim that the human cost is low enough, because most previous studies have assumed that the oracle is a human with domain knowledge. In this study, we propose a method that replaces part of the oracle's work in stream-based AL with self-training based on weak labeling using a robot arm. A camera attached to the robot arm captures a series of images of a streamed object, all of which should share the same label. We use this information as a weak label to connect a pseudo-label (estimated class label) to a target instance. Our method selects two samples from each series of images: a high-confidence sample for correcting pseudo-labels and a low-confidence sample for improving the performance of the classifier. We pair the pseudo-label assigned to the high-confidence sample with the target instance (the low-confidence sample). With this technique, we mitigate an inefficiency of self-training, namely the difficulty of creating pseudo-labeled training data that has a high impact on the target classifier. In the experiments, we applied the proposed method to the classification of objects on a belt conveyor. We evaluated performance against human cost in multiple scenarios that consider the temporal variation of the data. The proposed method achieves performance equal to or better than that of conventional methods while reducing human cost.
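The pairing step described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the authors' implementation: each image in a series is represented by its class-probability vector, confidence is taken to be the maximum class probability, and the helper name `pair_weak_label` is hypothetical.

```python
def pair_weak_label(series_probs):
    """Pair a pseudo-label with a target instance within one image series.

    `series_probs` holds one class-probability vector per image of the
    same streamed object. Returns (target_index, pseudo_label), where
    the target is the lowest-confidence image and the pseudo-label is
    the predicted class of the highest-confidence image.
    Confidence = max class probability (an assumption of this sketch).
    """
    confidences = [max(probs) for probs in series_probs]
    indices = range(len(series_probs))
    high = max(indices, key=lambda i: confidences[i])
    low = min(indices, key=lambda i: confidences[i])
    # The weak label says all images share one class, so the confident
    # prediction can be transferred to the uncertain image.
    pseudo_label = series_probs[high].index(confidences[high])
    return low, pseudo_label
```

For example, with `[[0.9, 0.1], [0.55, 0.45], [0.2, 0.8]]` the first image is the most confident (class 0) and the second is the least confident, so the second image would be added to the training set with label 0.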
