Tanya Jonker

Less or More: Towards Glanceable Explanations for LLM Recommendations Using Ultra-Small Devices

Feb 26, 2025

Abstract: Large Language Models (LLMs) have shown remarkable potential in recommending everyday actions as personal AI assistants, while Explainable AI (XAI) techniques are being increasingly utilized to help users understand why a recommendation is given. Personal AI assistants today are often located on ultra-small devices such as smartwatches, which have limited screen space. The verbosity of LLM-generated explanations, however, makes it challenging to deliver glanceable LLM explanations on such ultra-small devices. To address this, we explored 1) spatially structuring an LLM's explanation text using defined contextual components during prompting and 2) presenting temporally adaptive explanations to users based on confidence levels. We conducted a user study to understand how these approaches impacted user experiences when interacting with LLM recommendations and explanations on ultra-small devices. The results showed that structured explanations reduced users' time to action and cognitive load when reading an explanation. Always-on structured explanations increased users' acceptance of AI recommendations. However, users were less satisfied with structured explanations compared to unstructured ones due to their lack of sufficient, readable details. Additionally, adaptively presenting structured explanations was less effective at improving user perceptions of the AI compared to the always-on structured explanations. Together with users' interview feedback, the results led to design implications to be mindful of when personalizing the content and timing of LLM explanations that are displayed on ultra-small devices.

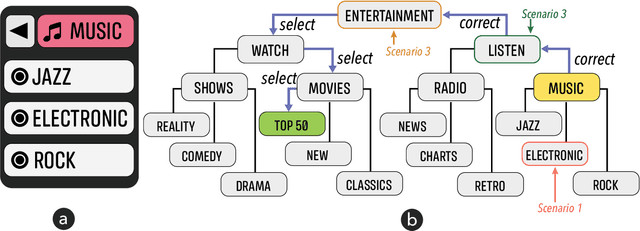

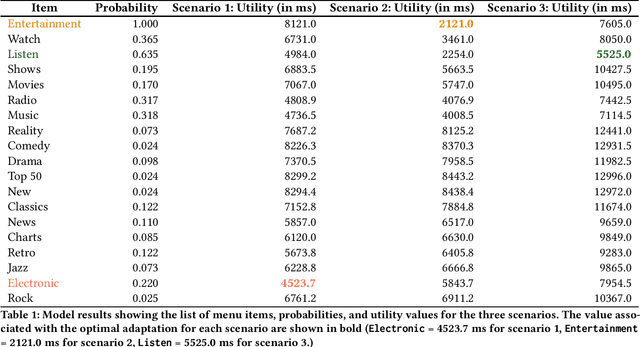

Computational Adaptation of XR Interfaces Through Interaction Simulation

Apr 19, 2022

Abstract: Adaptive and intelligent user interfaces have been proposed as a critical component of a successful extended reality (XR) system. In particular, a predictive system can make inferences about a user and provide them with task-relevant recommendations or adaptations. However, we believe such adaptive interfaces should carefully consider the overall *cost* of interactions to better address the uncertainty of predictions. In this position paper, we discuss a computational approach to adapting XR interfaces, with the goal of improving user experience and performance. Our novel model, applied to menu selection tasks, simulates user interactions by considering both cognitive and motor costs. In contrast to greedy algorithms that adapt based on predictions alone, our model holistically accounts for the costs and benefits of adaptations when adapting the interface and providing optimal recommendations to the user.