Tamoghna Roy

Transformer-Driven Neural Beamforming with Imperfect CSI in Urban Macro Wireless Channels

Apr 15, 2025

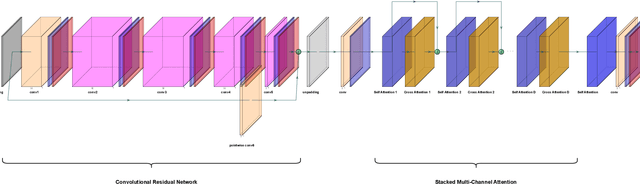

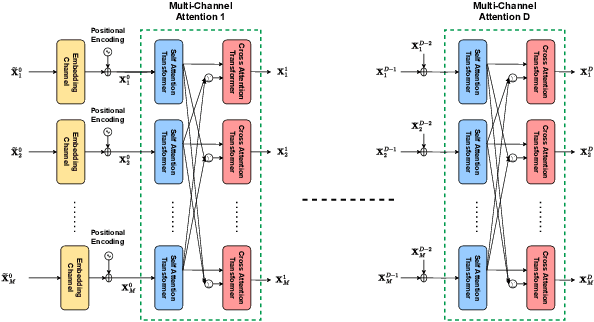

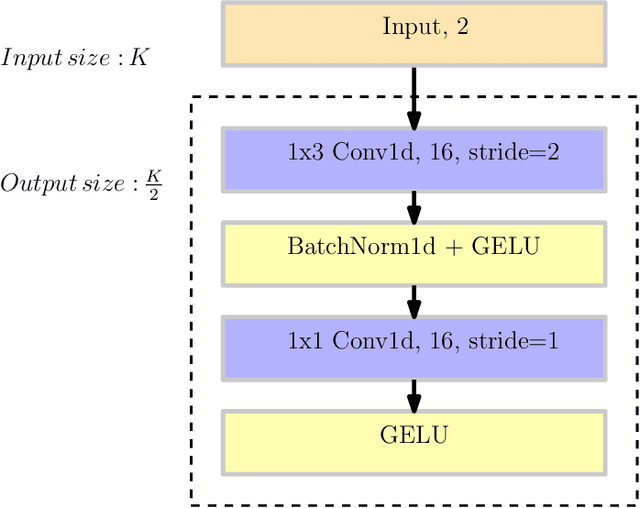

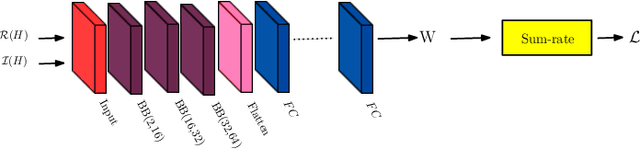

Abstract: The literature is abundant with methodologies that use transformer architectures, owing to their prominence in wireless signal processing and their capability to capture long-range dependencies via attention mechanisms. In addition, depthwise separable convolutions improve parameter efficiency when processing the high-dimensional data characteristic of MIMO systems. In this work, we introduce a novel unsupervised deep learning framework that integrates depthwise separable convolutions and transformers to generate beamforming weights under imperfect channel state information (CSI) for a multi-user single-input multiple-output (MU-SIMO) system in dense urban environments. The primary goal is to enhance throughput by maximizing the sum-rate while ensuring reliable communication. Spectral efficiency and block error rate (BLER) are used as performance metrics. Experiments are carried out under various conditions to compare the performance of the proposed NNBF framework against two baseline methods: zero-forcing beamforming (ZFBF) and minimum mean square error (MMSE) beamforming. Experimental results demonstrate the superiority of the proposed framework over the baseline techniques.
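
The parameter-efficiency claim for depthwise separable convolutions can be checked with simple arithmetic. The sketch below is illustrative only: the function names and the layer sizes (64 input channels, 128 output channels, 3x3 kernel) are assumptions chosen for the example and are not taken from the paper.

```python
def standard_conv_params(c_in, c_out, k):
    """Weight count of a standard k x k 2-D convolution (bias omitted)."""
    return c_in * c_out * k * k


def depthwise_separable_params(c_in, c_out, k):
    """Weight count of a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = c_in * k * k   # one k x k filter per input channel
    pointwise = c_in * c_out   # 1 x 1 conv that mixes channels
    return depthwise + pointwise


# Hypothetical layer sizes, chosen only for illustration.
standard = standard_conv_params(64, 128, 3)
separable = depthwise_separable_params(64, 128, 3)
ratio = separable / standard  # equals 1/c_out + 1/k**2

print(standard, separable, round(ratio, 4))
```

For these assumed sizes the separable layer needs roughly 12% of the weights of the standard layer, which matches the general ratio 1/c_out + 1/k^2.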

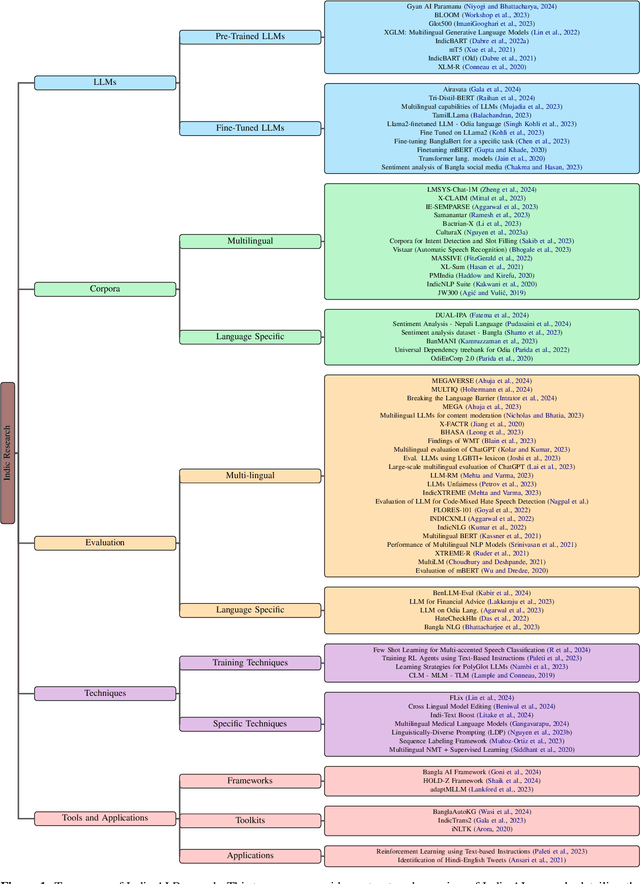

Decoding the Diversity: A Review of the Indic AI Research Landscape

Jun 13, 2024

Abstract: This review paper provides a comprehensive overview of large language model (LLM) research directions within Indic languages. Indic languages are those spoken in the Indian subcontinent, including India, Pakistan, Bangladesh, Sri Lanka, Nepal, and Bhutan, among others. These languages have a rich cultural and linguistic heritage and are spoken by over 1.5 billion people worldwide. With the tremendous market potential and growing demand for natural language processing (NLP) based applications in diverse languages, generative applications for Indic languages pose unique challenges and opportunities for research. Our paper takes a deep dive into recent advancements in Indic generative modeling, contributing a taxonomy of research directions and tabulating 84 recent publications. Research directions surveyed in this paper include LLM development, fine-tuning existing LLMs, development of corpora, benchmarking and evaluation, as well as publications around specific techniques, tools, and applications. We found that researchers across the publications emphasize the challenges associated with limited data availability, lack of standardization, and the peculiar linguistic complexities of Indic languages. This work aims to serve as a valuable resource for researchers and practitioners working in the field of NLP, particularly those focused on Indic languages, and contributes to the development of more accurate and efficient LLM applications for these languages.

Deep Learning Based Joint Multi-User MISO Power Allocation and Beamforming Design

Jun 12, 2024

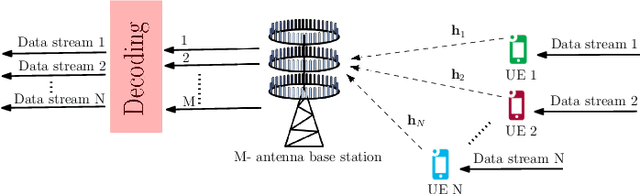

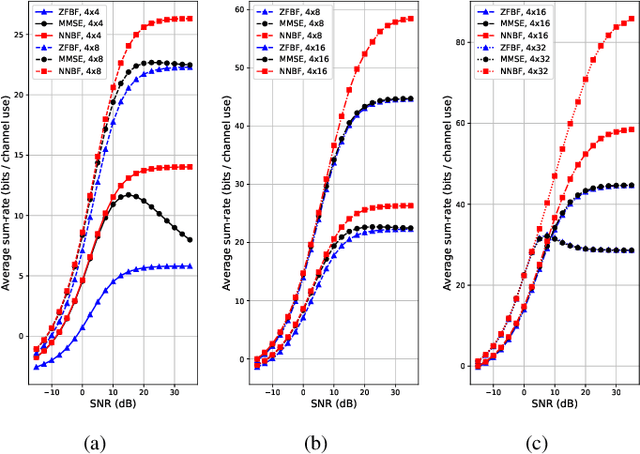

Abstract: The evolution of fifth generation (5G) wireless communication networks has led to an increased need for wireless resource management solutions that provide higher data rates, wide coverage, low latency, and power efficiency. Yet many existing traditional approaches remain impractical due to computational limitations, unrealistic presumptions of static network conditions, and algorithm initialization dependencies. This creates an important gap between theoretical analysis and real-time processing of algorithms. To bridge this gap, deep learning based techniques offer promising solutions with their representational capabilities for universal function approximation. We propose a novel unsupervised deep learning based joint power allocation and beamforming design for a multi-user multiple-input single-output (MU-MISO) system. The objective is to enhance the spectral efficiency by maximizing the sum-rate with the proposed joint design framework, NNBF-P, while also offering a computationally efficient solution in contrast to conventional approaches. We conduct experiments in diverse settings to compare the performance of NNBF-P with zero-forcing beamforming (ZFBF), minimum mean square error (MMSE) beamforming, and NNBF, which is our deep learning based beamforming design without the joint power allocation scheme. Experimental results demonstrate the superiority of NNBF-P over ZFBF and MMSE, while NNBF can perform worse than MMSE and ZFBF in some experimental settings. The results also demonstrate the effectiveness of the joint design framework relative to NNBF.

Parameter Efficient Fine Tuning: A Comprehensive Analysis Across Applications

Apr 23, 2024

Abstract: The rise of deep learning has marked significant progress in fields such as computer vision, natural language processing, and medical imaging, primarily through the adaptation of pre-trained models for specific tasks. Traditional fine-tuning methods, which adjust all parameters, face challenges due to high computational and memory demands. This has led to the development of Parameter Efficient Fine-Tuning (PEFT) techniques, which selectively update parameters to balance computational efficiency with performance. This review examines PEFT approaches, offering a detailed comparison of various strategies and highlighting applications across different domains, including text generation, medical imaging, protein modeling, and speech synthesis. By assessing the effectiveness of PEFT methods in reducing computational load, speeding up training, and lowering memory usage, this paper contributes to making deep learning more accessible and adaptable, facilitating its wider application and encouraging innovation in model optimization. Ultimately, the paper aims to contribute insights into PEFT's evolving landscape, guiding researchers and practitioners in overcoming the limitations of conventional fine-tuning approaches.

Deep Learning Based Uplink Multi-User SIMO Beamforming Design

Sep 28, 2023

Abstract: The advancement of fifth generation (5G) wireless communication networks has created a greater demand for wireless resource management solutions that offer high data rates, extensive coverage, minimal latency, and energy-efficient performance. Nonetheless, traditional approaches have shortcomings in computational complexity and in their ability to adapt to dynamic conditions, creating a gap between theoretical analysis and the practical execution of algorithmic solutions for managing wireless resources. Deep learning-based techniques offer promising solutions for bridging this gap with their substantial representation capabilities. We propose a novel unsupervised deep learning framework, called NNBF, for the design of uplink receive multi-user single-input multiple-output (MU-SIMO) beamforming. The primary objective is to enhance the throughput by maximizing the sum-rate while also offering a computationally efficient solution, in contrast to established conventional methods. We conduct experiments for several antenna configurations. Our experimental results demonstrate that NNBF exhibits superior performance compared to our baseline methods, namely, zero-forcing beamforming (ZFBF) and the minimum mean square error (MMSE) equalizer. Additionally, NNBF scales with the number of single-antenna user equipments (UEs), while the baseline methods incur a significant computational burden due to the matrix pseudo-inverse operation.
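
The two baselines named in this abstract can be sketched in a few lines of NumPy. This is a minimal textbook illustration, not the authors' code: it assumes perfect CSI, unit transmit power per user, an i.i.d. Rayleigh channel, and hypothetical function names and sizes (8 antennas, 4 users, noise variance 0.1).

```python
import numpy as np


def zf_combiner(H):
    """Zero-forcing receive combiner: pseudo-inverse of the M x K channel."""
    return np.linalg.pinv(H)


def mmse_combiner(H, noise_var):
    """Linear MMSE receive combiner, assuming unit transmit power per user."""
    K = H.shape[1]
    gram = H.conj().T @ H + noise_var * np.eye(K)
    return np.linalg.solve(gram, H.conj().T)


def sum_rate(W, H, noise_var):
    """Sum of log2(1 + SINR_k) over users for a K x M combiner W."""
    power = np.abs(W @ H) ** 2               # entry (k, j): user j's power at output k
    signal = np.diag(power)
    interference = power.sum(axis=1) - signal
    noise = noise_var * np.sum(np.abs(W) ** 2, axis=1)
    sinr = signal / (interference + noise)
    return float(np.sum(np.log2(1.0 + sinr)))


# Hypothetical setup: 8 receive antennas, 4 single-antenna users.
rng = np.random.default_rng(0)
M, K, noise_var = 8, 4, 0.1
H = (rng.standard_normal((M, K)) + 1j * rng.standard_normal((M, K))) / np.sqrt(2)

W_zf = zf_combiner(H)
W_mmse = mmse_combiner(H, noise_var)
rate_zf = sum_rate(W_zf, H, noise_var)
rate_mmse = sum_rate(W_mmse, H, noise_var)
print(f"ZF: {rate_zf:.2f} bit/s/Hz, MMSE: {rate_mmse:.2f} bit/s/Hz")
```

Both combiners require a matrix inverse or pseudo-inverse for every channel realization, which is the computational burden the abstract refers to.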

Wideband Signal Localization with Spectral Segmentation

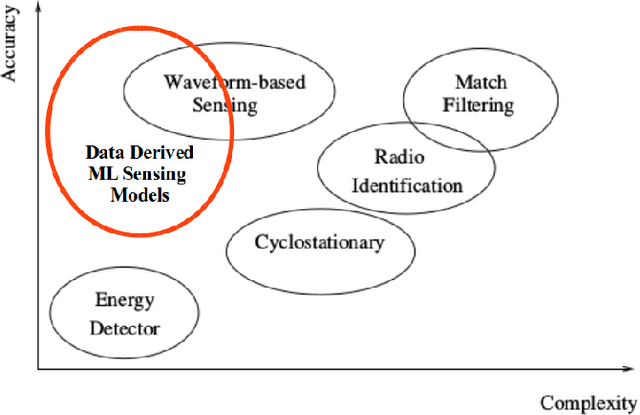

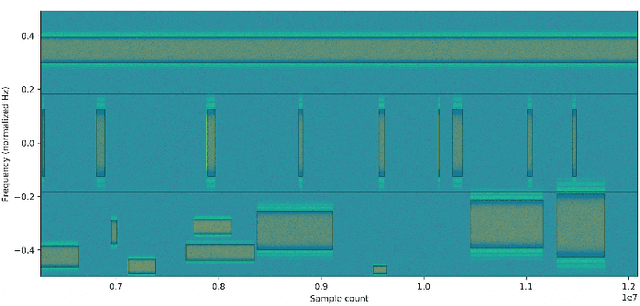

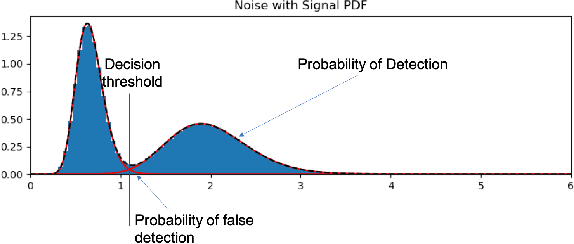

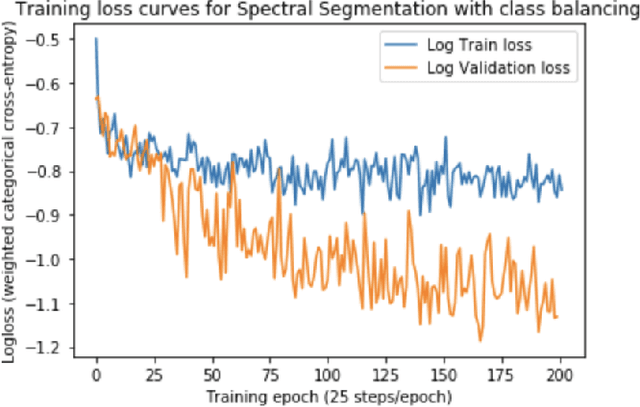

Oct 01, 2021

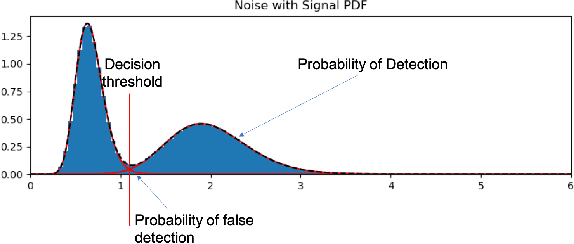

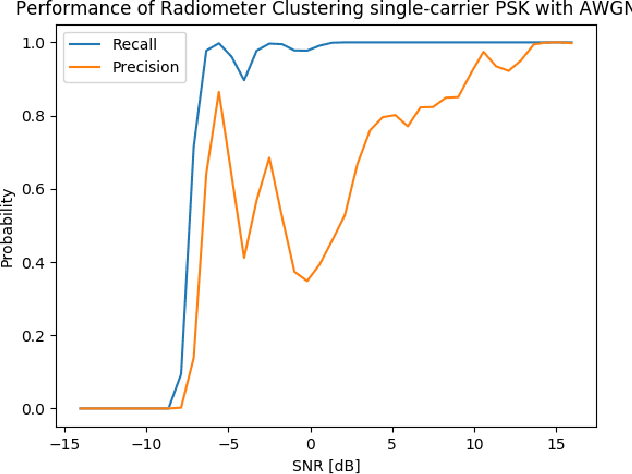

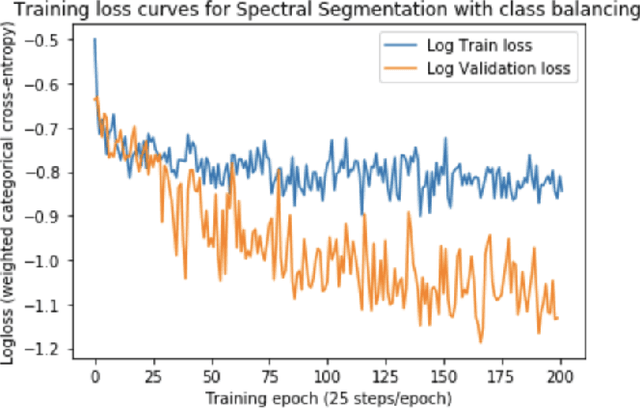

Abstract: Signal localization is a spectrum sensing problem that jointly detects the presence of a signal and estimates its center frequency and bandwidth. This is a step beyond most spectrum sensing work, which estimates "present" or "not present" detections for either a single channel or fixed-size channels. We define the signal localization task, present the metrics of precision and recall, and establish baselines for traditional energy detection on this task. We introduce a new dataset that is useful for training neural networks to perform this task, show a framework for training signal detectors on it, and present precision and recall curves over SNR. This neural network based approach shows an 8 dB improvement in recall over the traditional energy detection approach, with minor improvements in precision.
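
As a rough illustration of the energy-detection baseline idea, the sketch below thresholds a power spectrum and reports each contiguous run of occupied bins as a (center frequency, bandwidth) pair. The function name, threshold, bin width, and toy spectrum are all assumptions made for the example; the paper's actual baseline may differ.

```python
def localize_by_energy(psd, threshold, bin_width_hz, start_hz=0.0):
    """Group contiguous above-threshold bins into (center_hz, bandwidth_hz) detections."""
    detections = []
    run_start = None
    # A trailing sentinel below any threshold closes a run that reaches the last bin.
    for i, p in enumerate(list(psd) + [float("-inf")]):
        if p > threshold and run_start is None:
            run_start = i
        elif p <= threshold and run_start is not None:
            n_bins = i - run_start
            bandwidth = n_bins * bin_width_hz
            center = start_hz + (run_start + n_bins / 2.0) * bin_width_hz
            detections.append((center, bandwidth))
            run_start = None
    return detections


# Toy power spectrum with two occupied regions (values are arbitrary).
psd = [0.1, 0.1, 5.0, 6.0, 5.5, 0.2, 0.1, 4.0, 4.2, 0.1]
dets = localize_by_energy(psd, threshold=1.0, bin_width_hz=1000.0)
print(dets)  # [(3500.0, 3000.0), (8000.0, 2000.0)]
```

Precision and recall would then be computed by matching such detections against ground-truth signal annotations.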

* arXiv admin note: substantial text overlap with arXiv:2110.00518

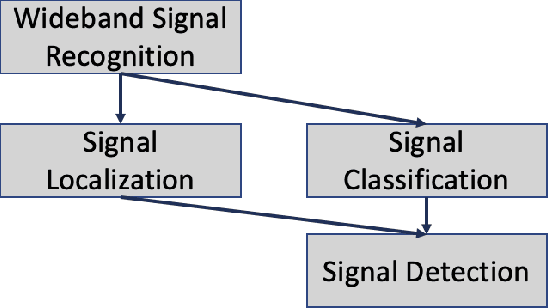

A Wideband Signal Recognition Dataset

Oct 01, 2021

Abstract: Signal recognition is a spectrum sensing problem that jointly requires detection, localization in time and frequency, and classification. This is a step beyond most spectrum sensing work, which involves either signal detection, estimating "present" or "not present" for a single channel or fixed-size channels, or classification, which assumes a signal is present. We define the signal recognition task, present the metrics of precision and recall for the RF domain, and review recent machine-learning based approaches to this problem. We introduce a new dataset that is useful for training neural networks to perform these tasks and show a framework for training wideband signal recognizers.

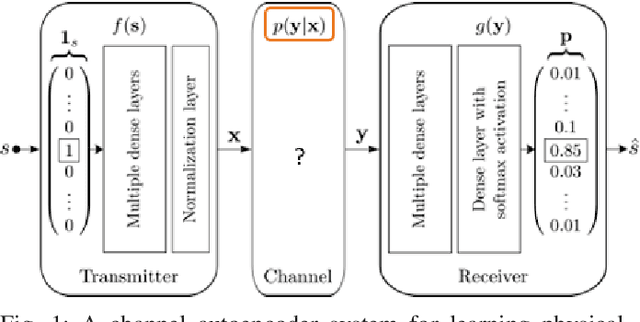

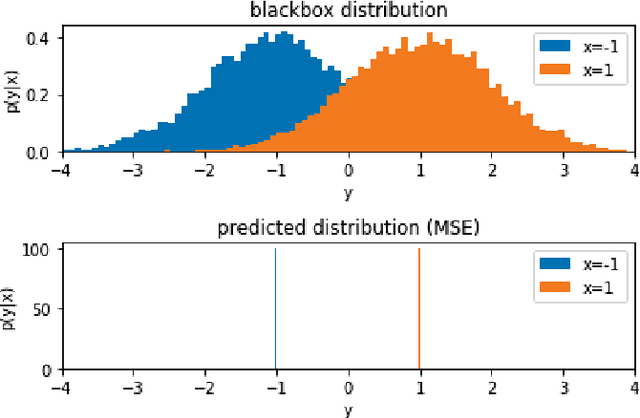

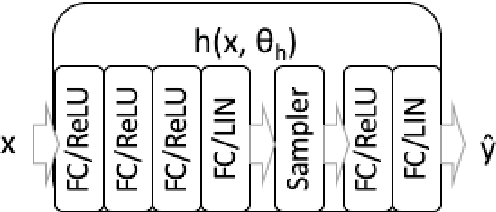

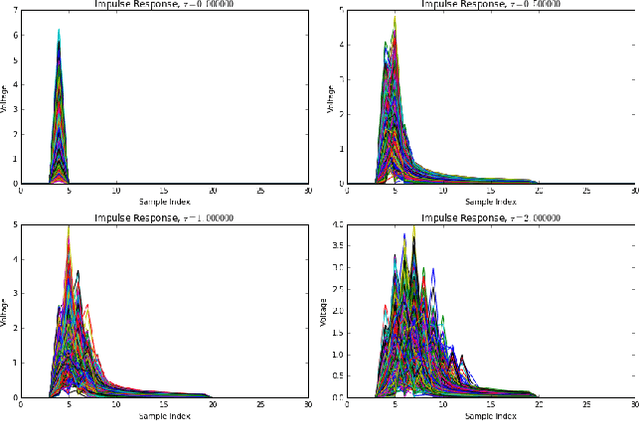

Approximating the Void: Learning Stochastic Channel Models from Observation with Variational Generative Adversarial Networks

Aug 20, 2018

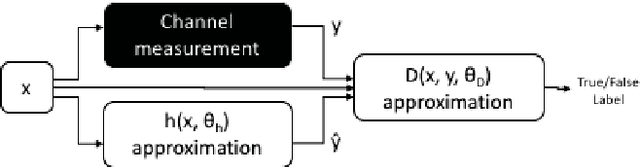

Abstract: Channel modeling is a critical topic when designing, learning, or evaluating the performance of any communications system. Most prior work in designing or learning new modulation schemes has focused on using highly simplified analytic channel models such as additive white Gaussian noise (AWGN), Rayleigh fading channels, or similar. Recently, we proposed the use of generative adversarial networks (GANs) to jointly approximate a wireless channel response model (e.g., from real black box measurements) and optimize for an efficient modulation scheme over it using machine learning. This approach worked to some degree, but was unable to produce accurate probability distribution functions (PDFs) representing the stochastic channel response. In this paper, we focus specifically on the problem of accurately learning a channel PDF using a variational GAN, introducing an architecture and loss function which can accurately capture stochastic behavior. We illustrate where our prior method failed and share results capturing the performance of such a system over a range of realistic channel distributions.

Physical Layer Communications System Design Over-the-Air Using Adversarial Networks

Mar 08, 2018

Abstract: This paper presents a novel method for synthesizing new physical layer modulation and coding schemes for communications systems using a learning-based approach which does not require an analytic model of the impairments in the channel. It extends prior work published on the channel autoencoder to consider the case where the channel response is not known or cannot be easily modeled in a closed-form analytic expression. By adopting an adversarial approach for channel response approximation and information encoding, we can jointly learn a good solution to both tasks over a wide range of channel environments. We describe the operation of the proposed adversarial system, share results for its training and validation over-the-air, and discuss implications and future work in the area.

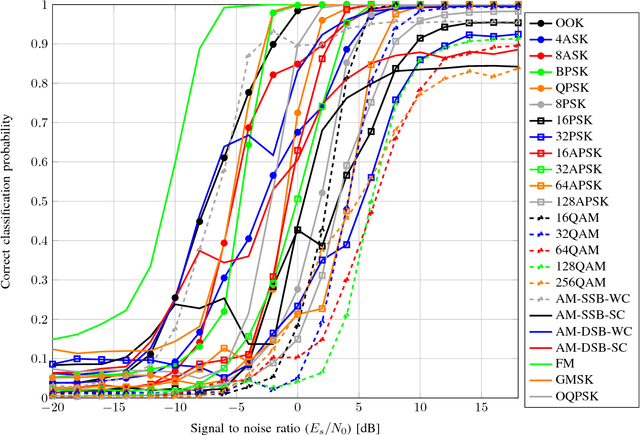

Over the Air Deep Learning Based Radio Signal Classification

Dec 13, 2017

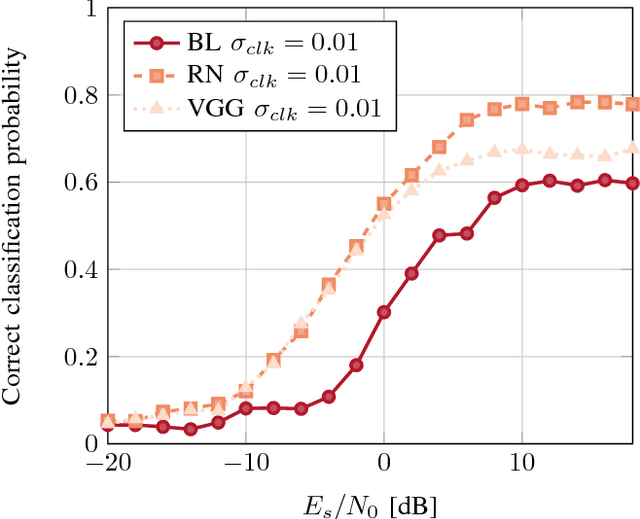

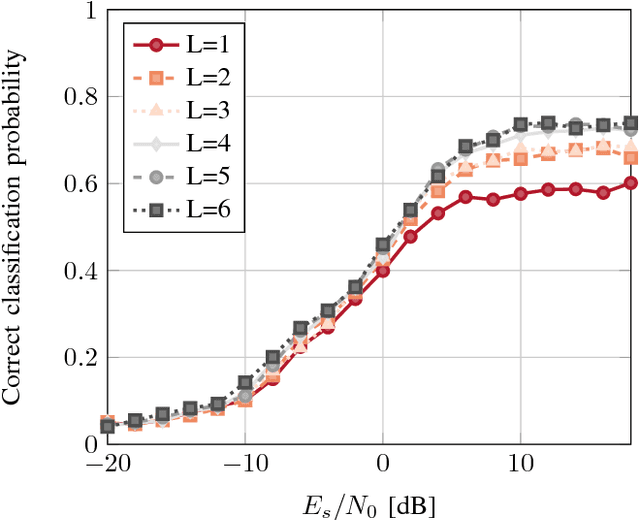

Abstract: We conduct an in-depth study of the performance of deep learning based radio signal classification for radio communications signals. We consider a rigorous baseline method using higher order moments and strong boosted gradient tree classification, and compare performance between the two approaches across a range of configurations and channel impairments. We consider the effects of carrier frequency offset, symbol rate, and multi-path fading in simulation. We also conduct over-the-air measurements of radio classification performance in the lab using software radios, and compare performance and training strategies for both. Finally, we conclude with a discussion of remaining problems and design considerations for using such techniques.
