Tae Kwan Lee

A Benchmark on Tricks for Large-scale Image Retrieval

Jul 27, 2019

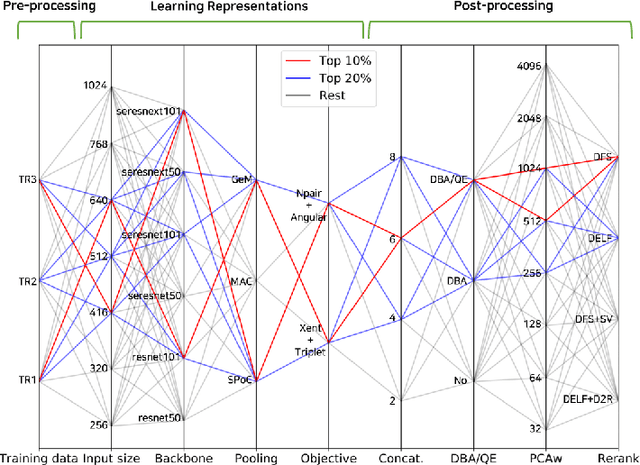

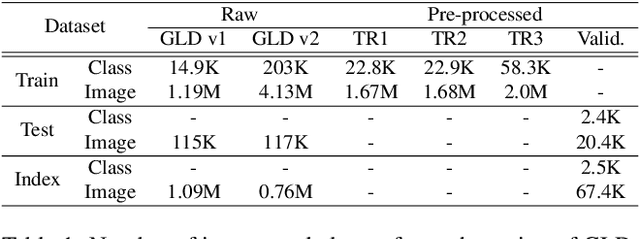

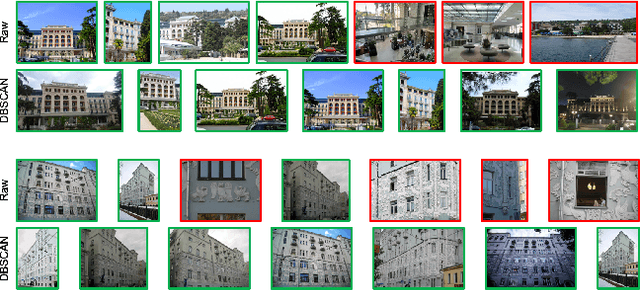

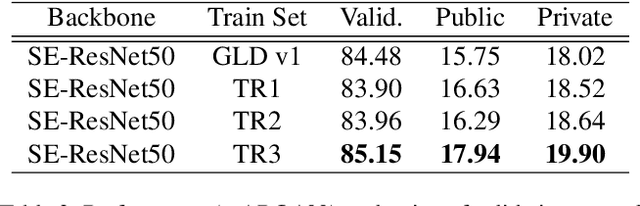

Abstract: Many studies have been performed on metric learning, which has become a key ingredient in top-performing methods of instance-level image retrieval. Meanwhile, less attention has been paid to pre-processing and post-processing tricks that can significantly boost performance. Furthermore, we found that most previous studies used small-scale datasets to simplify processing. Because the behavior of a feature representation in a deep learning model depends on both domain and data, it is important to understand how models behave in large-scale environments when a proper combination of retrieval tricks is used. In this paper, we extensively analyze the effect of well-known pre-processing and post-processing tricks, and their combination, for large-scale image retrieval. We found that proper use of these tricks can significantly improve model performance without requiring a complex architecture or introducing a new loss function, as confirmed by achieving a competitive result on the Google Landmark Retrieval Challenge 2019.

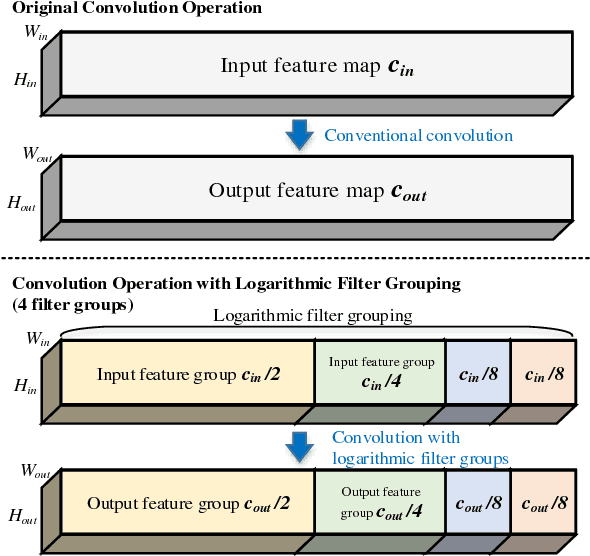

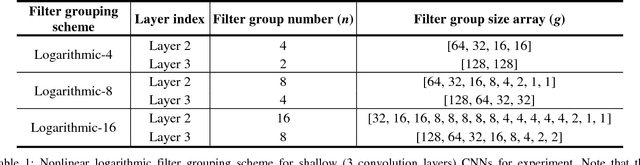

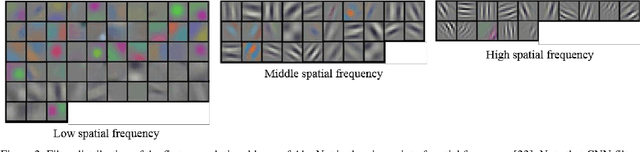

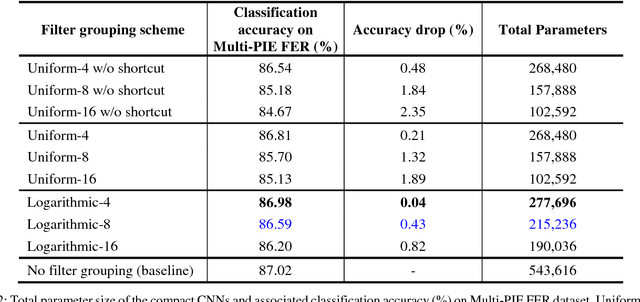

Convolution with Logarithmic Filter Groups for Efficient Shallow CNN

Sep 14, 2017

Abstract: In convolutional neural networks (CNNs), filter grouping in convolution layers is known to be useful for reducing the network parameter size. In this paper, we propose a new logarithmic filter grouping which can capture the nonlinearity of the filter distribution in CNNs. The proposed logarithmic filter grouping is applied to shallow CNNs suitable for mobile applications. Experiments were performed with the shallow CNNs on classification tasks. Our classification results on the Multi-PIE dataset for facial expression recognition and the CIFAR-10 dataset for object classification reveal that the compact CNN with the proposed logarithmic filter grouping scheme outperforms the same network with uniform filter grouping in terms of accuracy and parameter efficiency. Our results indicate that the efficiency of shallow CNNs can be improved by the proposed logarithmic filter grouping.
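
As a rough illustration of why filter grouping reduces parameter size, the sketch below counts the weights of a single grouped convolution layer under uniform and non-uniform group sizes. The halving scheme in `log_group_sizes` (sizes C/2, C/4, ..., with the last group taking the remainder) is an assumption made for illustration, not necessarily the exact grouping used in the paper, and both function names are hypothetical.

```python
def grouped_conv_params(in_sizes, out_sizes, kernel):
    """Weight count of a grouped convolution (bias ignored).

    Each group i connects in_sizes[i] input channels to out_sizes[i]
    output channels with a kernel x kernel filter.
    """
    return sum(i * o * kernel * kernel for i, o in zip(in_sizes, out_sizes))


def log_group_sizes(channels, num_groups):
    """Illustrative logarithmic split (an assumption, not the paper's code).

    Group sizes halve at each step; the last group takes whatever is left
    so that the sizes sum to `channels`.
    """
    if channels % (2 ** (num_groups - 1)) != 0:
        raise ValueError("channels must be divisible by 2**(num_groups - 1)")
    sizes = [channels // 2 ** (i + 1) for i in range(num_groups - 1)]
    sizes.append(channels - sum(sizes))
    return sizes


channels, kernel, groups = 64, 3, 4

# No grouping: every output channel sees every input channel.
standard = grouped_conv_params([channels], [channels], kernel)

# Uniform grouping: four groups of 16 channels each.
uniform_sizes = [channels // groups] * groups
uniform = grouped_conv_params(uniform_sizes, uniform_sizes, kernel)

# Logarithmic grouping under the assumed halving scheme: 32, 16, 8, 8.
log_sizes = log_group_sizes(channels, groups)
logarithmic = grouped_conv_params(log_sizes, log_sizes, kernel)

print(standard, uniform, logarithmic)
```

Under these assumptions both grouped layers use far fewer weights than the ungrouped one. The logarithmic split spends more parameters than the uniform split for the same number of groups, because its largest group is wider; whether that trade-off pays off in accuracy is the empirical question the abstract addresses.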
