Sven Cattell

To Err is AI: A Case Study Informing LLM Flaw Reporting Practices

Oct 15, 2024

Abstract: In August of 2024, 495 hackers generated evaluations in an open-ended bug bounty targeting the Open Language Model (OLMo) from The Allen Institute for AI. A vendor panel staffed by representatives of OLMo's safety program adjudicated changes to OLMo's documentation and awarded cash bounties to participants who successfully demonstrated a need for public disclosure clarifying the intent, capacities, and hazards of model deployment. This paper presents a collection of lessons learned, illustrative of flaw reporting best practices intended to reduce the likelihood of incidents and produce safer large language models (LLMs). These include best practices for safety reporting processes, their artifacts, and safety program staffing.

Coordinated Disclosure for AI: Beyond Security Vulnerabilities

Feb 10, 2024

Abstract: Harm reporting in the field of Artificial Intelligence (AI) currently operates on an ad hoc basis, lacking a structured process for disclosing or addressing algorithmic flaws. In contrast, the Coordinated Vulnerability Disclosure (CVD) ethos and ecosystem play a pivotal role in software security and transparency. Within the U.S. context, there has been a protracted legal and policy struggle to establish a safe harbor from the Computer Fraud and Abuse Act, aiming to foster institutional support for security researchers acting in good faith. Notably, algorithmic flaws in Machine Learning (ML) models present distinct challenges compared to traditional software vulnerabilities, warranting a specialized approach. To address this gap, we propose the implementation of a dedicated Coordinated Flaw Disclosure (CFD) framework tailored to the intricacies of machine learning and artificial intelligence issues. This paper delves into the historical landscape of disclosures in ML, encompassing the ad hoc reporting of harms and the emergence of participatory auditing. By juxtaposing these practices with the well-established disclosure norms in cybersecurity, we argue that the broader adoption of CFD has the potential to enhance public trust through transparent processes that carefully balance the interests of both organizations and the community.

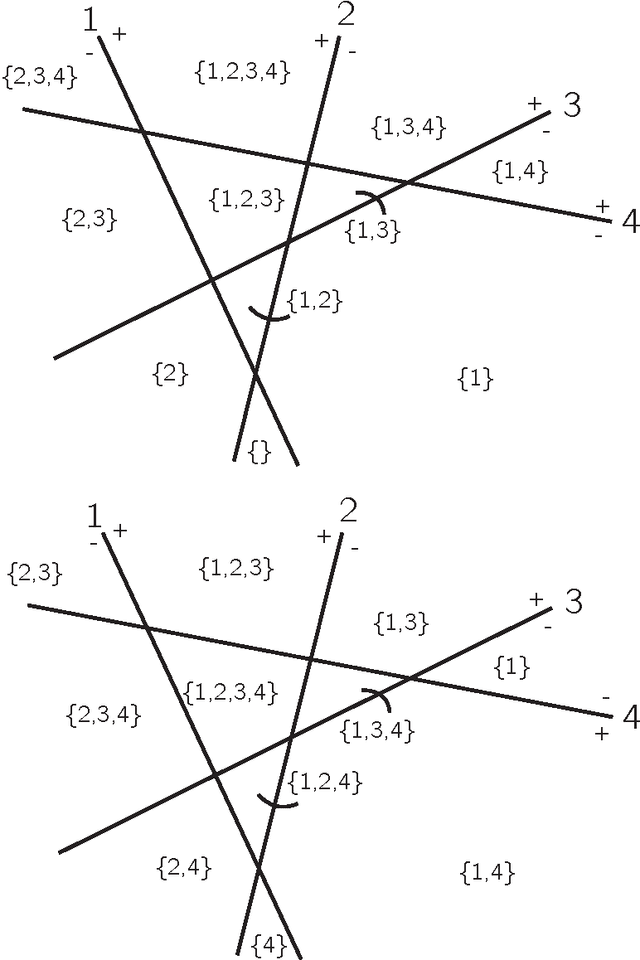

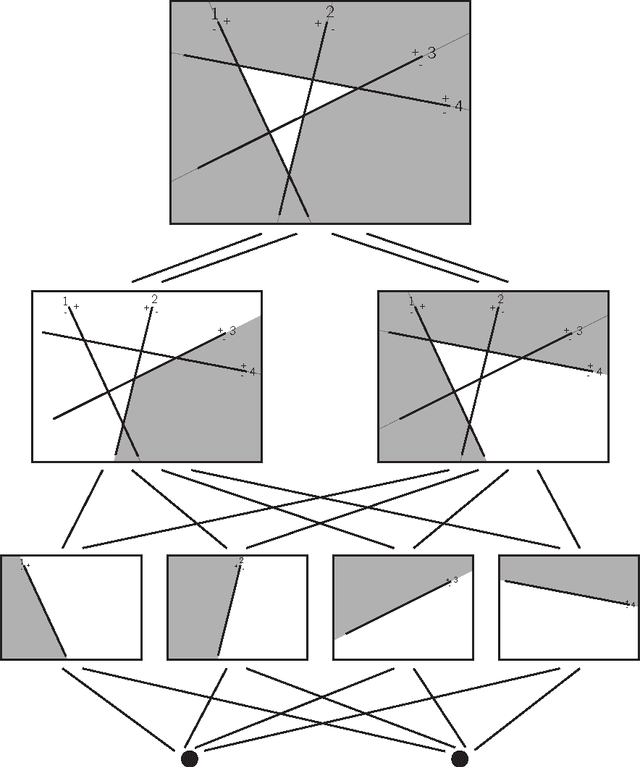

Geometric Decomposition of Feed Forward Neural Networks

Dec 08, 2016

Abstract: There have been several attempts to mathematically understand neural networks and many more from biological and computational perspectives. The field has exploded in the last decade, yet neural networks are still treated much like a black box. In this work we describe a structure that is inherent to a feed forward neural network. This will provide a framework for future work on neural networks to improve training algorithms, compute the homology of the network, and other applications. Our approach takes a more geometric point of view and is unlike other attempts to mathematically understand neural networks that rely on a functional perspective.
