Sung Eun Kim

RadGame: An AI-Powered Platform for Radiology Education

Sep 16, 2025

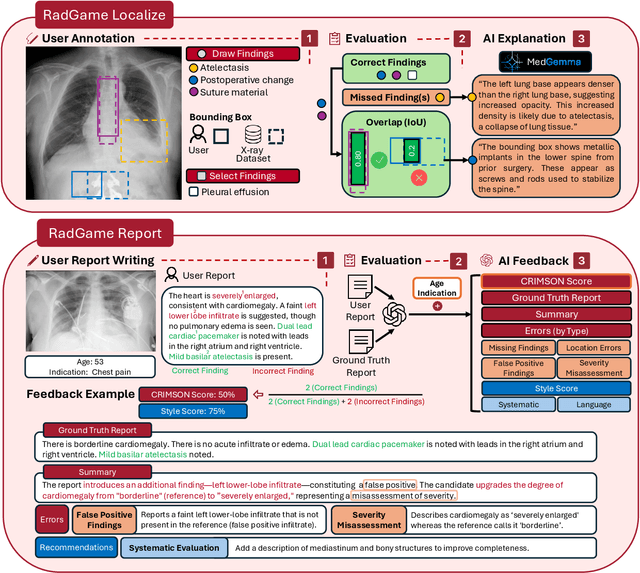

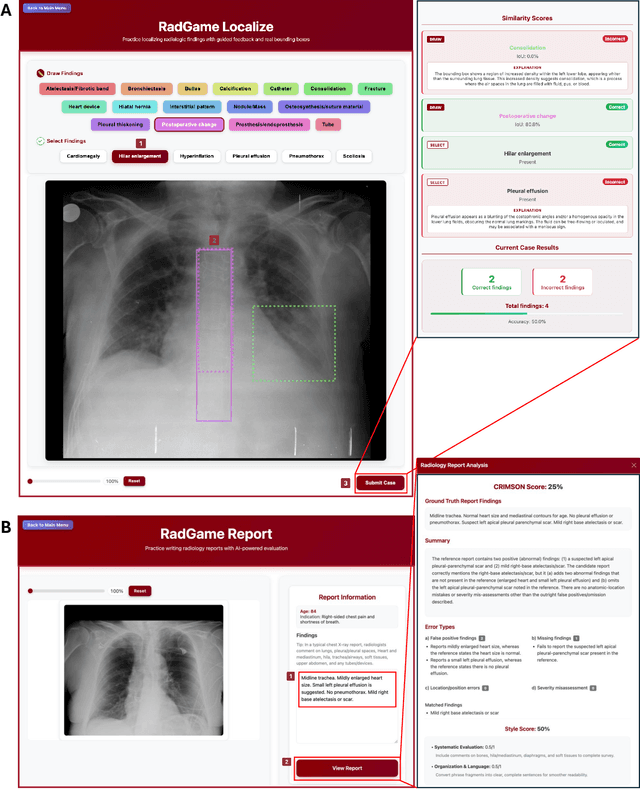

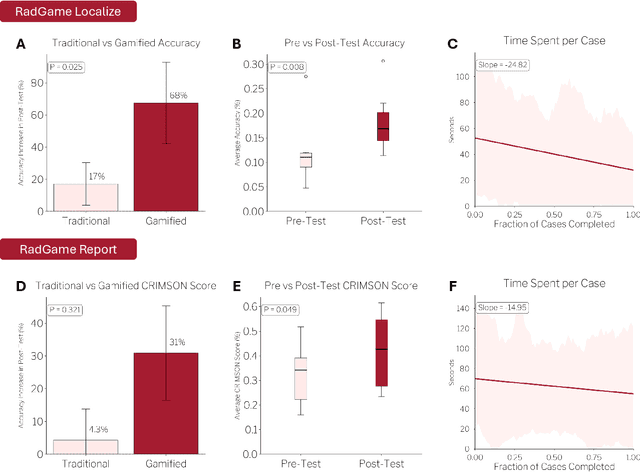

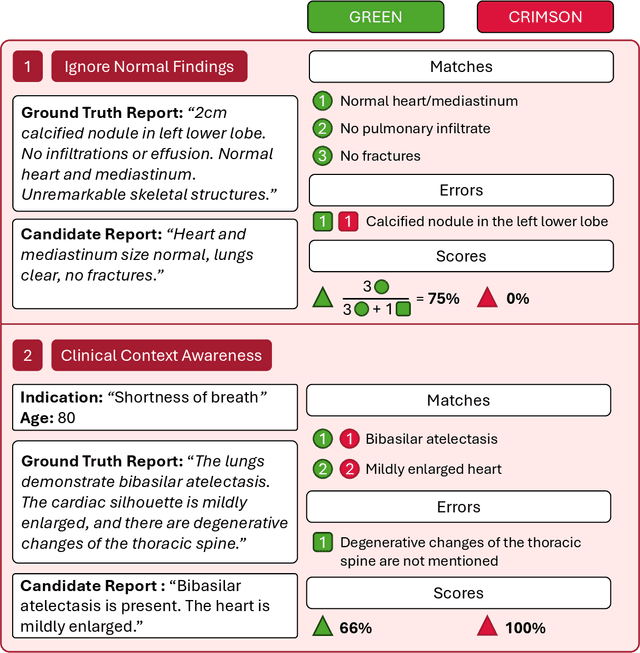

Abstract: We introduce RadGame, an AI-powered gamified platform for radiology education that targets two core skills: localizing findings and generating reports. Traditional radiology training is based on passive exposure to cases or active practice with real-time input from supervising radiologists, limiting opportunities for immediate and scalable feedback. RadGame addresses this gap by combining gamification with large-scale public datasets and automated, AI-driven feedback that provides clear, structured guidance to human learners. In RadGame Localize, players draw bounding boxes around abnormalities, which are automatically compared to radiologist-drawn annotations from public datasets, and vision-language models generate visual explanations for findings the user missed. In RadGame Report, players compose findings given a chest X-ray, patient age, and indication, and receive structured AI feedback based on radiology report generation metrics. The feedback highlights errors and omissions relative to a radiologist-written ground-truth report from public datasets and produces a final performance and style score. In a prospective evaluation, participants using RadGame achieved a 68% improvement in localization accuracy compared to 17% with traditional passive methods, and a 31% improvement in report-writing accuracy compared to 4% with traditional methods, after seeing the same cases. RadGame highlights the potential of AI-driven gamification to deliver scalable, feedback-rich radiology training and reimagines the application of medical AI resources in education.
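
The abstract does not say how a player's boxes are compared to the radiologist-drawn annotations. A common choice for this kind of comparison is intersection over union (IoU), so the sketch below assumes IoU with a 0.5 threshold. The names `Box`, `iou`, and `missed_findings`, and the threshold itself, are illustrative assumptions and not part of RadGame.

```python
from typing import List, NamedTuple


class Box(NamedTuple):
    """Axis-aligned bounding box in pixel coordinates (hypothetical layout)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes; 0.0 when they do not overlap."""
    inter_w = max(0.0, min(a.x_max, b.x_max) - max(a.x_min, b.x_min))
    inter_h = max(0.0, min(a.y_max, b.y_max) - max(a.y_min, b.y_min))
    inter = inter_w * inter_h
    area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min)
    area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min)
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def missed_findings(player: List[Box], reference: List[Box],
                    threshold: float = 0.5) -> List[Box]:
    """Reference boxes that no player box matches at the assumed threshold."""
    return [ref for ref in reference
            if all(iou(p, ref) < threshold for p in player)]
```

Under this assumption, the boxes returned by `missed_findings` would be the ones for which an explanation is shown to the learner.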

ReXGroundingCT: A 3D Chest CT Dataset for Segmentation of Findings from Free-Text Reports

Jul 29, 2025

Abstract: We present ReXGroundingCT, the first publicly available, manually annotated dataset to link free-text radiology findings with pixel-level segmentations in 3D chest CT scans. While prior datasets have relied on structured labels or predefined categories, ReXGroundingCT captures the full expressiveness of clinical language represented in free text and grounds it to spatially localized 3D segmentation annotations in volumetric imaging. This addresses a critical gap in medical AI: the ability to connect complex, descriptive text, such as "3 mm nodule in the left lower lobe", to its precise anatomical location in three-dimensional space, a capability essential for grounded radiology report generation systems. The dataset comprises 3,142 non-contrast chest CT scans paired with standardized radiology reports from the CT-RATE dataset. Using a systematic three-stage pipeline, GPT-4 was used to extract positive lung and pleural findings, which were then manually segmented by expert annotators. A total of 8,028 findings across 16,301 entities were annotated, with quality control performed by board-certified radiologists. Approximately 79% of findings are focal abnormalities, while 21% are non-focal. The training set includes up to three representative segmentations per finding, while the validation and test sets contain exhaustive labels for each finding entity. ReXGroundingCT establishes a new benchmark for developing and evaluating sentence-level grounding and free-text medical segmentation models in chest CT. The dataset can be accessed at https://huggingface.co/datasets/rajpurkarlab/ReXGroundingCT.

ReXTrust: A Model for Fine-Grained Hallucination Detection in AI-Generated Radiology Reports

Dec 17, 2024

Abstract: The increasing adoption of AI-generated radiology reports necessitates robust methods for detecting hallucinations, that is, false or unfounded statements that could impact patient care. We present ReXTrust, a novel framework for fine-grained hallucination detection in AI-generated radiology reports. Our approach leverages sequences of hidden states from large vision-language models to produce finding-level hallucination risk scores. We evaluate ReXTrust on a subset of the MIMIC-CXR dataset and demonstrate superior performance compared to existing approaches, achieving an AUROC of 0.8751 across all findings and 0.8963 on clinically significant findings. Our results show that white-box approaches leveraging model hidden states can provide reliable hallucination detection for medical AI systems, potentially improving the safety and reliability of automated radiology reporting.
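
To make the reported metric concrete, here is a minimal sketch of how an AUROC can be computed from finding-level risk scores. It uses the pairwise definition: the fraction of (hallucinated, non-hallucinated) pairs in which the hallucinated finding receives the higher score, with ties counted as half. The function name `auroc` and the label convention (1 for hallucinated, 0 otherwise) are assumptions for illustration; this is not ReXTrust's evaluation code.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Pairwise AUROC: P(score of a positive > score of a negative)."""
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(positives) * len(negatives))
```

The double loop is quadratic in the number of findings, which is fine for a small illustration; a rank-based formulation would be preferable at scale.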

FactCheXcker: Mitigating Measurement Hallucinations in Chest X-ray Report Generation Models

Nov 27, 2024

Abstract: Medical vision-language models often struggle with generating accurate quantitative measurements in radiology reports, leading to hallucinations that undermine clinical reliability. We introduce FactCheXcker, a modular framework that de-hallucinates radiology report measurements by leveraging an improved query-code-update paradigm. Specifically, FactCheXcker employs specialized modules and the code generation capabilities of large language models to solve measurement queries generated based on the original report. After extracting measurable findings, the results are incorporated into an updated report. We evaluate FactCheXcker on endotracheal tube placement, which accounts for an average of 78% of report measurements, using the MIMIC-CXR dataset and 11 medical report-generation models. Our results show that FactCheXcker significantly reduces hallucinations, improves measurement precision, and maintains the quality of the original reports. Specifically, FactCheXcker improves the performance of all 11 models and achieves an average improvement of 94.0% in reducing measurement hallucinations, as measured by mean absolute error.
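
The abstract reports improvement in terms of mean absolute error (MAE) but does not give the exact formula behind the percentage. The sketch below assumes it is the relative reduction in MAE between original and updated reports. Both function names and the sample numbers in the usage note are hypothetical.

```python
from typing import Sequence


def mean_absolute_error(predicted: Sequence[float],
                        reference: Sequence[float]) -> float:
    """Average absolute difference between predicted and reference values."""
    if len(predicted) != len(reference) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


def relative_mae_reduction(mae_before: float, mae_after: float) -> float:
    """Percentage reduction in MAE (assumed definition of 'improvement')."""
    if mae_before <= 0:
        raise ValueError("mae_before must be positive")
    return 100.0 * (mae_before - mae_after) / mae_before
```

For example, with made-up values, an MAE of 10.0 before the update and 2.5 after it would count as a 75% improvement under this definition.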

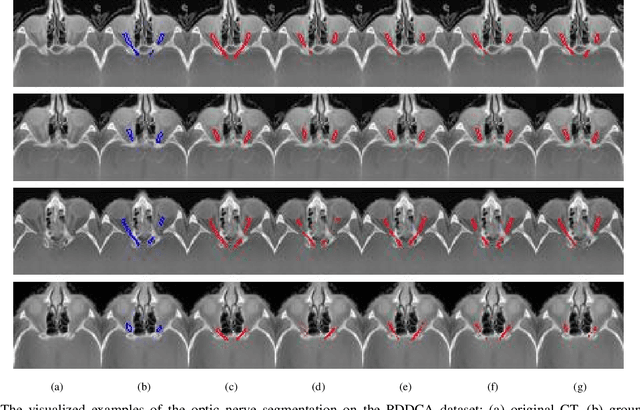

Supervised Segmentation with Domain Adaptation for Small Sampled Orbital CT Images

Jul 01, 2021

Abstract: Deep neural networks (DNNs) have been widely used for medical image analysis. However, the lack of access to a large-scale annotated dataset poses a great challenge, especially for rare diseases or domains that are new to the research community. Transferring pre-trained features from a relatively large dataset is a viable solution. In this paper, we explore supervised segmentation using domain adaptation for the optic nerve and orbital tumors when only a small sample of CT images is available. Although the Lung Image Database Consortium image collection (LIDC-IDRI) is cross-domain to orbital CT, the proposed domain adaptation method improved the performance of attention U-Net for segmentation on a public optic nerve dataset and our clinical orbital tumor dataset. The code and dataset are available at https://github.com/cmcbigdata.

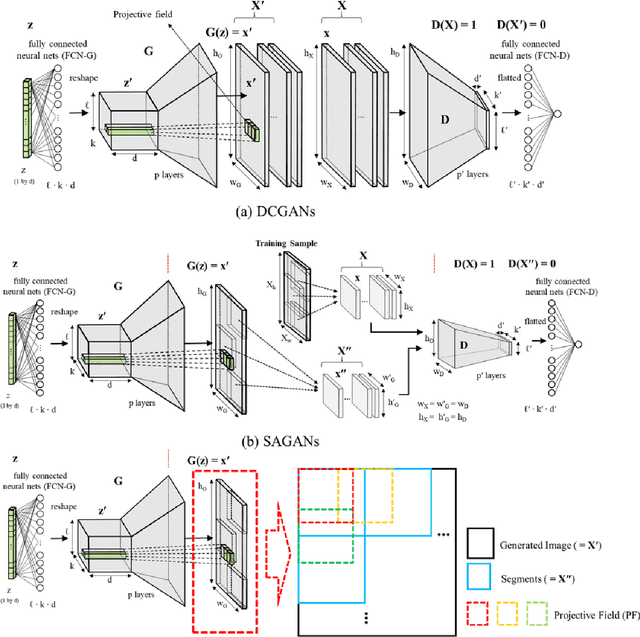

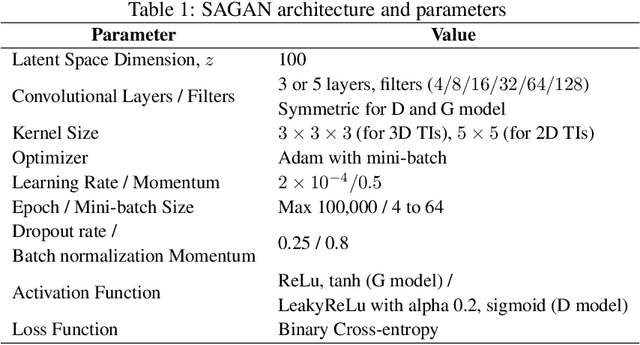

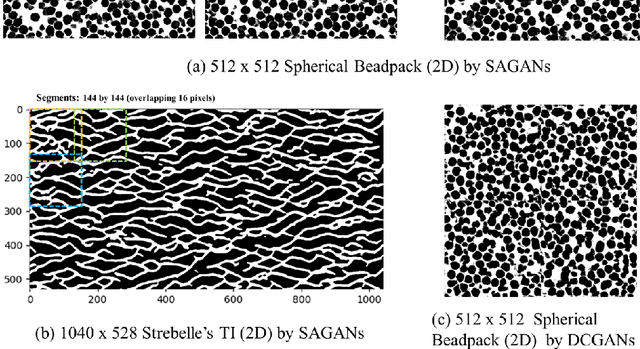

Fast and Scalable Earth Texture Synthesis using Spatially Assembled Generative Adversarial Neural Networks

Nov 13, 2020

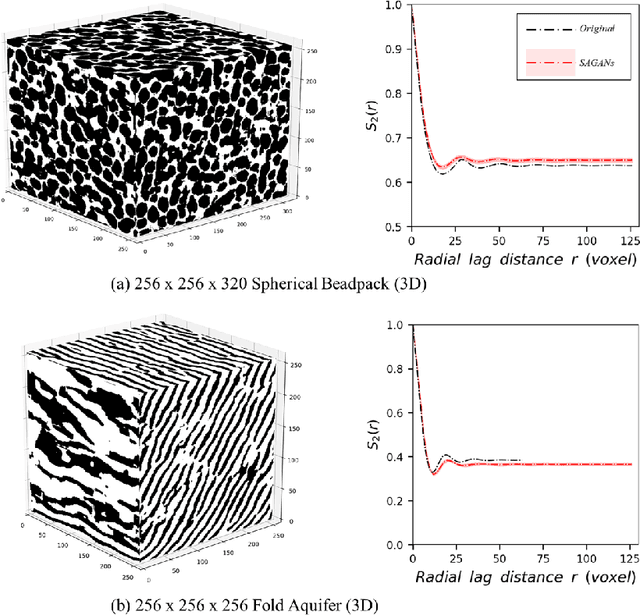

Abstract: Earth textures with complex morphological geometry and composition, such as shale and carbonate rocks, are typically characterized with sparse field samples because the characterization process is expensive and time-consuming. Accordingly, generating arbitrarily large geological textures with similar topological structures at a low computational cost has become one of the key tasks for realistic geomaterial reconstruction. Recently, generative adversarial neural networks (GANs) have demonstrated the potential to synthesize input textural images and create equiprobable geomaterial images. However, texture synthesis with the GAN framework is often limited by computational cost and by the scalability of the output texture size. In this study, we propose spatially assembled GANs (SAGANs), which can efficiently generate output images of arbitrarily large size regardless of the size of the training images. The performance of SAGANs was evaluated with two- and three-dimensional (2D and 3D) rock image samples widely used in geostatistical reconstruction of earth textures. We demonstrate that SAGANs can generate arbitrarily large statistical realizations with connectivity and structural properties similar to those of the training images, and can also generate a variety of realizations even from a single training image. In addition, computational time was significantly improved compared to standard GAN frameworks.

Connectivity-informed Drainage Network Generation using Deep Convolution Generative Adversarial Networks

Jun 16, 2020

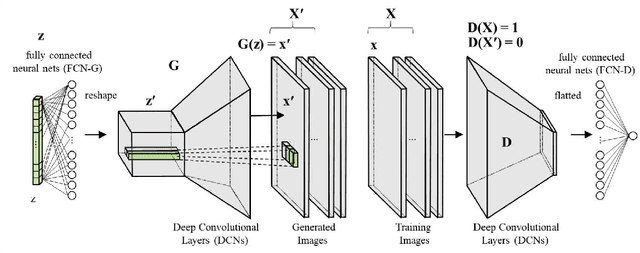

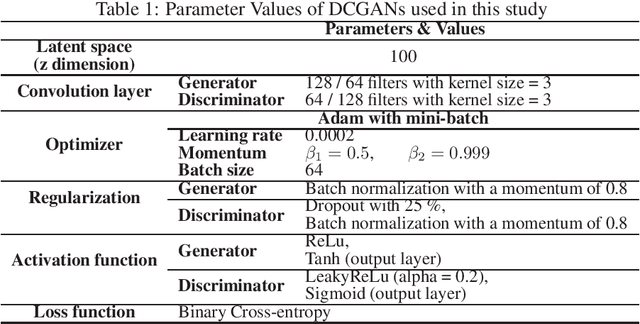

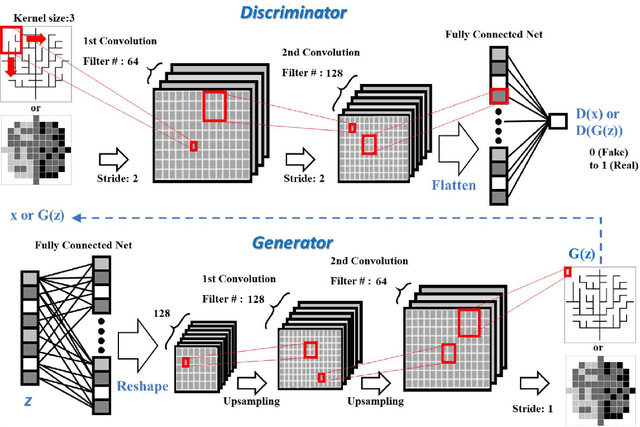

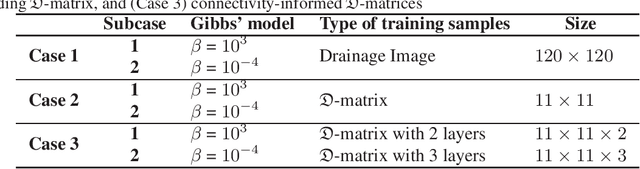

Abstract: Stochastic network modeling is often limited by the high computational cost of generating enough networks for meaningful statistical evaluation. In this study, Deep Convolutional Generative Adversarial Networks (DCGANs) were applied to quickly reproduce drainage networks from already generated network samples, without repeated long runs of the stochastic network model, Gibbs' model. In particular, we developed a novel connectivity-informed method that converts drainage network images to directional information of flow at each node of the drainage network, and then transforms it into multiple binary layers in which the connectivity constraints between nodes are stored. DCGANs trained with three different types of training samples were compared: 1) original drainage network images, 2) their corresponding directional information only, and 3) the connectivity-informed directional information. Comparison of the generated images demonstrated that the novel connectivity-informed method outperformed the other two methods by training DCGANs more effectively and reproducing drainage networks more accurately, owing to its compact representation of network complexity and connectivity. This work highlights that DCGANs are applicable to high-contrast images common in earth and material sciences, where networks, fractures, and other high-contrast features are important.
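
The abstract does not specify how the directional information is laid out across the binary layers. One simple reading is a one-hot encoding with one binary layer per flow direction, which the sketch below illustrates. The integer direction codes, the default of eight directions, and the function name `to_binary_layers` are all assumptions for illustration and may differ from the authors' encoding.

```python
from typing import List

Grid = List[List[int]]

NUM_DIRECTIONS = 8  # assumption: eight compass directions, coded 0..7


def to_binary_layers(direction_grid: Grid,
                     num_directions: int = NUM_DIRECTIONS) -> List[Grid]:
    """One-hot encode a grid of flow-direction codes into binary layers.

    layers[d][r][c] is 1 exactly when node (r, c) drains in direction d.
    """
    rows = len(direction_grid)
    cols = len(direction_grid[0]) if rows else 0
    layers = [[[0] * cols for _ in range(rows)] for _ in range(num_directions)]
    for r, row in enumerate(direction_grid):
        for c, d in enumerate(row):
            if not 0 <= d < num_directions:
                raise ValueError(f"direction code {d} out of range at ({r}, {c})")
            layers[d][r][c] = 1
    return layers
```

In this representation every node is set in exactly one layer, so a generated sample can be checked for a valid, unambiguous flow direction at each node.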
