Sundar Sripada V. S.

Annotating sleep states in children from wrist-worn accelerometer data using Machine Learning

Dec 09, 2023

Abstract: Sleep detection and annotation are crucial for researchers studying sleep patterns, especially in children. Modern wrist-worn watches have built-in accelerometers, so sleep logs can be collected. However, annotating these logs with distinct sleep events (onset and wakeup) is challenging, and the annotation must be automated, precise, and scalable. We propose to model the accelerometer data using a range of machine learning (ML) techniques. These range from support vector machines, boosting, and other ensemble methods to more complex approaches involving LSTMs and region-based CNNs. We then aim to evaluate these approaches with the Event Detection Average Precision (EDAP) score and to compare their predictive power and model performance. EDAP is analogous to IoU-thresholded average precision in object detection, with timestamp tolerances in place of IoU thresholds.
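To make the evaluation metric concrete, here is a minimal sketch of tolerance-based average precision for one event type. It assumes a simplified greedy matching rule: predictions are visited in order of confidence, and each one claims the nearest still-unmatched ground-truth event within the tolerance window. It is not the reference EDAP implementation. The function names, tolerance values and toy timestamps are hypothetical. A full score would additionally average over event types such as onset and wakeup.

```python
from typing import List, Sequence, Tuple


def average_precision(gt_steps: Sequence[int],
                      preds: Sequence[Tuple[int, float]],
                      tolerance: int) -> float:
    """AP for one event type at one tolerance (simplified greedy matching).

    gt_steps: time steps of ground-truth events (e.g. sleep onsets).
    preds: (time step, confidence score) pairs for predicted events.
    """
    if not gt_steps:
        return 0.0
    unmatched = set(range(len(gt_steps)))
    hits: List[bool] = []
    # Visit predictions from most to least confident.
    for step, _score in sorted(preds, key=lambda p: p[1], reverse=True):
        # Candidate matches: still-unmatched events inside the tolerance window.
        candidates = [i for i in unmatched if abs(gt_steps[i] - step) <= tolerance]
        if candidates:
            nearest = min(candidates, key=lambda i: abs(gt_steps[i] - step))
            unmatched.remove(nearest)  # each event can be matched only once
            hits.append(True)
        else:
            hits.append(False)  # false positive
    # AP: precision at the rank of each true positive, summed and divided by
    # the number of ground-truth events (missed events contribute nothing).
    tp = 0
    precision_sum = 0.0
    for rank, hit in enumerate(hits, start=1):
        if hit:
            tp += 1
            precision_sum += tp / rank
    return precision_sum / len(gt_steps)


def mean_ap_over_tolerances(gt_steps: Sequence[int],
                            preds: Sequence[Tuple[int, float]],
                            tolerances: Sequence[int]) -> float:
    """Average the per-tolerance AP over several tolerance windows."""
    return sum(average_precision(gt_steps, preds, t) for t in tolerances) / len(tolerances)


# Toy example: two true onsets, three predictions (one of them spurious).
gt = [100, 500]
preds = [(102, 0.9), (300, 0.8), (505, 0.6)]
print(average_precision(gt, preds, tolerance=12))  # ≈ 0.833 (= 5/6)
```

Averaging over several tolerances rewards predictions that are both confidently ranked and temporally precise. A prediction two steps off counts at a 12-step tolerance but not at a 1-step tolerance.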

Style Transfer to Calvin and Hobbes comics using Stable Diffusion

Dec 07, 2023

Abstract: This project report summarizes our work on fine-tuning Stable Diffusion on a dataset of Calvin and Hobbes comics. The goal is to convert any given input image into the comic style of Calvin and Hobbes, that is, to perform style transfer. We fine-tune stable-diffusion-v1.5 with Low-Rank Adaptation (LoRA), which speeds up fine-tuning by training small low-rank update matrices instead of the full model weights. In Stable Diffusion, a U-Net performs the denoising itself in a latent space. A Variational Autoencoder (VAE) encodes images into that latent space and decodes the denoised result back into pixels. Considering the amount of training time and the quality of the input data used for training, our results were visually appealing.
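The efficiency gain from LoRA comes from its parameterization. The pretrained weight $W_0$ stays frozen, and only a low-rank update $\Delta W = BA$ is learned, scaled by $\alpha / r$. $B$ is initialized to zero, so training starts from the unmodified model. The pure-Python sketch below shows this for a single linear layer. The class name `LoRALinear`, the toy weights and the initialization scale are hypothetical. This is not the API of any LoRA library and not the report's training code. In Stable Diffusion fine-tuning, such adapters are commonly attached to the U-Net's attention projection layers.

```python
import random
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Matrix-vector product for row-major nested lists."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


class LoRALinear:
    """Hypothetical LoRA layer: y = W0 x + (alpha / r) * B (A x); W0 stays frozen."""

    def __init__(self, w0: Matrix, rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0  # frozen pretrained weight, shape d_out x d_in
        # A (rank x d_in): small random init. B (d_out x rank): zeros, so the
        # adapted layer initially behaves exactly like the pretrained one.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.w0, x)                 # frozen path
        delta = matvec(self.b, matvec(self.a, x))  # trainable low-rank path
        return [y + self.scale * d for y, d in zip(base, delta)]

    def trainable_params(self) -> int:
        # Only A and B are updated during fine-tuning.
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])


layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], rank=1, alpha=1.0)
print(layer.forward([1.0, 1.0]))  # [3.0, 7.0]: identical to W0 x at initialization
print(layer.trainable_params())   # 4
```

For scale, a 768 × 768 projection adapted with rank $r = 4$ trains 4·768 + 768·4 = 6,144 parameters instead of 589,824. This is why LoRA fine-tuning is fast and its checkpoints are small.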

Using human and robot synthetic data for training smart hand tools

Dec 05, 2023

Abstract: The future of work does not require a choice between human and robot. Aside from explicit human-robot collaboration, robotics can play an increasingly important role in helping train workers as well as the tools they use, especially in complex tasks that may be difficult to automate or effectively roboticize. This paper introduces a form of smart tool for use by human workers. It shows how to train the tool for task recognition, which is one of its key requirements. Training machine learning (ML) models on purely human-generated data can be extremely laborious and time-consuming. First, we show how synthetic data generated by a robot can be leveraged in the ML training process. We then demonstrate how fine-tuning ML models for individual physical tasks and workers can significantly scale up the benefits of using ML to provide feedback to workers. Experimental results, in which we test training-data size against accuracy, show the effectiveness and scalability of our approach. Smart hand tools of the type introduced here can provide insights and real-time analytics on efficient and safe tool usage and operation. They thereby enhance human participation and skill in a wide range of work environments. Using robotic platforms to help train smart tools will be essential, particularly given the diverse applications for which smart hand tools are envisioned.
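The two-stage idea in the abstract can be sketched in miniature. A task classifier is first trained on plentiful robot-generated data, and a copy is then fine-tuned for one worker from a handful of labelled samples, warm-starting from the pretrained parameters. Everything in this sketch is hypothetical:

- **Model:** logistic regression trained with plain SGD.
- **Features:** one-dimensional toy inputs.
- **Data:** toy values.

The abstract does not specify the paper's model or features. This only illustrates pretraining followed by warm-start fine-tuning.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical sample: (feature vector from the tool's sensors, binary task label).
Sample = Tuple[Sequence[float], int]


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def sgd_logistic(samples: Sequence[Sample], weights: Sequence[float], bias: float,
                 lr: float, epochs: int) -> Tuple[List[float], float]:
    """Train logistic regression with plain SGD, starting from the given parameters."""
    w, b = list(weights), bias
    for _ in range(epochs):
        for x, y in samples:
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            g = p - y  # derivative of the log-loss with respect to the logit
            w = [wi - lr * g * xi for wi, xi in zip(w, x)]
            b -= lr * g
    return w, b


def accuracy(samples: Sequence[Sample], w: Sequence[float], b: float) -> float:
    correct = sum(
        (sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b) >= 0.5) == (y == 1)
        for x, y in samples
    )
    return correct / len(samples)


# Stage 1: pretrain on (toy) robot-generated synthetic data, starting from zero.
synthetic = [([1.0], 1), ([2.0], 1), ([-1.0], 0), ([-2.0], 0)]
w_pre, b_pre = sgd_logistic(synthetic, [0.0], 0.0, lr=0.5, epochs=100)

# Stage 2: fine-tune for one worker whose (toy) class boundary is shifted,
# warm-starting from the pretrained weights rather than from zero.
worker = [([0.2], 0), ([0.5], 0), ([1.5], 1), ([2.0], 1)]
w_ft, b_ft = sgd_logistic(worker, w_pre, b_pre, lr=0.5, epochs=500)

print(accuracy(synthetic, w_pre, b_pre), accuracy(worker, w_ft, b_ft))
```

Warm-starting lets the worker-specific model reuse what was learned from the synthetic data, so only a few labelled samples per worker are needed to adjust it. Repeating the experiment with different worker-set sizes corresponds to the data-size-versus-accuracy test mentioned in the abstract.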

Learning Actions for Drift-Free Navigation in Highly Dynamic Scenes

Oct 28, 2021

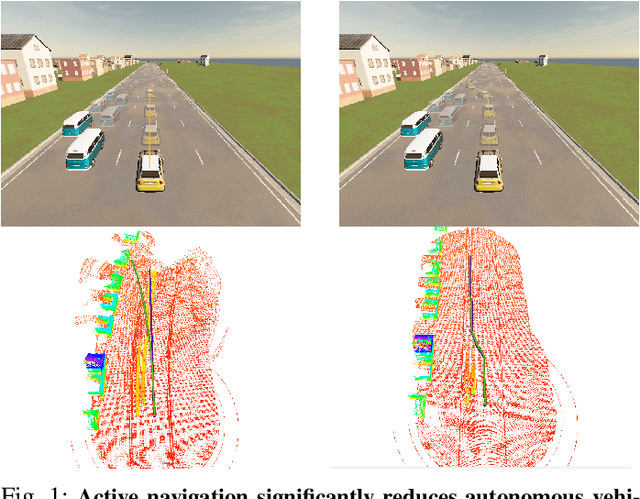

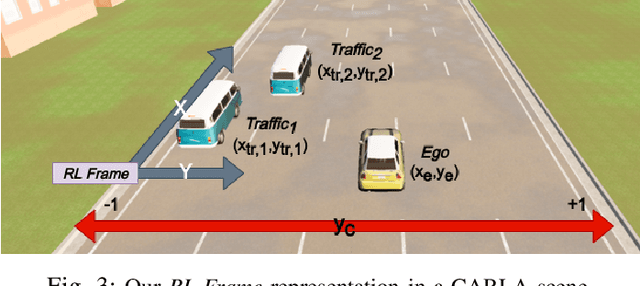

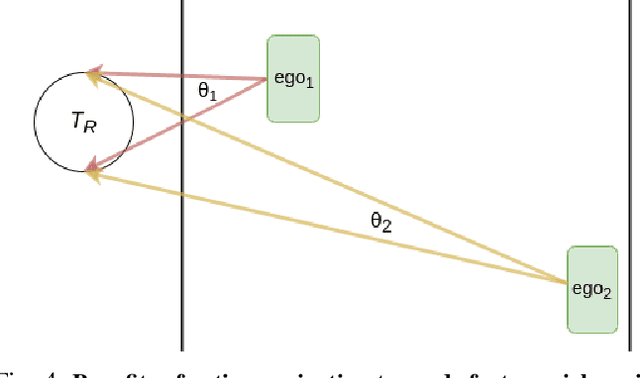

Abstract: We address a hitherto unreported problem: navigating an autonomous robot (a self-driving car) through dynamic scenes in a manner that reduces its localization error. This in turn reduces the cumulative drift, or Absolute Trajectory Error, which is pronounced in such scenes. We use the widely adopted Velodyne-16 3D LIDAR as the main sensing modality. For state estimation we use the accurate LIDAR-based odometry and mapping algorithm LOAM. With this setup, we show that in the absence of a navigation policy, drift accumulates rapidly in the presence of moving objects. To overcome this, we learn actions that lead to drift-minimized navigation through a suitable set of reward and penalty functions. We use Proximal Policy Optimization (PPO), a Deep Reinforcement Learning method, to learn the actions that result in drift-minimized trajectories. Extensive comparisons on a variety of synthetic yet photo-realistic scenes from the CARLA Simulator show that the proposed framework outperforms methods that do not adopt such policies.
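Two quantities in this abstract have standard definitions that a short sketch can pin down:

- **Absolute Trajectory Error:** the drift being minimized, computed as the root-mean-square position error between the estimated and ground-truth trajectories.
- **PPO clipped surrogate objective:** what the policy maximizes, $L^{\text{CLIP}} = \mathbb{E}_t[\min(r_t A_t, \operatorname{clip}(r_t, 1-\epsilon, 1+\epsilon) A_t)]$. Here $r_t$ is the ratio of new to old policy probabilities and $A_t$ is the advantage.

The sketch makes two assumptions. The trajectories are already time-associated and aligned, since the usual rigid alignment step is omitted. The value ε = 0.2 is a commonly used value, not necessarily the one used in the paper. The function names are hypothetical, and the paper's reward and penalty functions are not reproduced.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def absolute_trajectory_error(estimated: Sequence[Point],
                              ground_truth: Sequence[Point]) -> float:
    """RMSE of per-pose position errors.

    Assumes both trajectories are already time-associated and expressed in
    the same frame (the usual rigid alignment step is omitted here).
    """
    squared = [sum((e - g) ** 2 for e, g in zip(p, q))
               for p, q in zip(estimated, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))


def ppo_clipped_objective(ratios: Sequence[float],
                          advantages: Sequence[float],
                          epsilon: float = 0.2) -> float:
    """Mean clipped surrogate: min(r * A, clip(r, 1 - eps, 1 + eps) * A).

    ratios[t] = pi_new(a_t | s_t) / pi_old(a_t | s_t). PPO maximizes this
    value (equivalently, minimizes its negative) over the new policy.
    """
    terms = []
    for r, a in zip(ratios, advantages):
        clipped = min(max(r, 1.0 - epsilon), 1.0 + epsilon)
        terms.append(min(r * a, clipped * a))
    return sum(terms) / len(terms)


print(absolute_trajectory_error([(3.0, 4.0, 0.0)], [(0.0, 0.0, 0.0)]))  # 5.0
print(ppo_clipped_objective([1.5, 0.5], [1.0, -1.0]))  # ≈ 0.2
```

Taking the minimum of the clipped and unclipped terms removes any incentive to push the probability ratio outside $[1-\epsilon, 1+\epsilon]$ in the direction that the advantage favours. Each policy update is thereby kept close to the previous policy.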
