Subhadeep Chakraborty

Diagnosing and Predicting Autonomous Vehicle Operational Safety Using Multiple Simulation Modalities and a Virtual Environment

May 13, 2024

Abstract:Even as technology and performance gains are made in the sphere of automated driving, safety concerns remain. Vehicle simulation has long been seen as a tool to overcome the cost associated with a massive amount of on-road testing for development and discovery of safety critical "edge-cases". However, purely software-based vehicle models may leave a large realism gap relative to their real-world counterparts in terms of dynamic response, and highly realistic vehicle-in-the-loop (VIL) simulations that encapsulate a virtual world around a physical vehicle may still be quite expensive to produce and as time intensive as on-road testing. In this work, we demonstrate an AV simulation test bed that combines the realism of vehicle-in-the-loop (VIL) simulation with the ease of implementation of model-in-the-loop (MIL) simulation. The setup demonstrated in this work allows for response diagnosis for the VIL simulations. By observing causal links between virtual weather and lighting conditions that surround the virtual depiction of our vehicle, the vision-based perception model and controller of Openpilot, and the dynamic response of our physical vehicle under test, we can draw conclusions regarding how the perceived environment contributed to vehicle response. Conversely, we also demonstrate response prediction for the MIL setup, where a physical vehicle is not required to draw richer conclusions around the impact of environmental conditions on AV performance than could be obtained with VIL simulation alone. These combine for a simulation setup with accurate real-world implications for edge-case discovery that is both cost effective and time efficient to implement.

GENESIS-RL: GEnerating Natural Edge-cases with Systematic Integration of Safety considerations and Reinforcement Learning

Mar 27, 2024

Abstract:In the rapidly evolving field of autonomous systems, the safety and reliability of the system components are fundamental requirements. These components are often vulnerable to complex and unforeseen environments, making natural edge-case generation essential for enhancing system resilience. This paper presents GENESIS-RL, a novel framework that leverages system-level safety considerations and reinforcement learning techniques to systematically generate naturalistic edge cases. By simulating challenging conditions that mimic real-world situations, our framework aims to rigorously test the entire system's safety and reliability. Although demonstrated within the autonomous driving application, our methodology is adaptable across diverse autonomous systems. Our experimental validation, conducted on a high-fidelity simulator, underscores the overall effectiveness of this framework.

Active shooter detection and robust tracking utilizing supplemental synthetic data

Sep 06, 2023

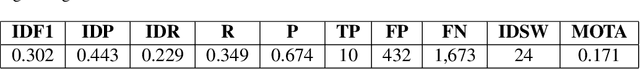

Abstract:The increasing concern surrounding gun violence in the United States has led to a focus on developing systems to improve public safety. One approach to developing such a system is to detect and track shooters, which would help prevent or mitigate the impact of violent incidents. In this paper, we propose detecting shooters as a whole, rather than just guns, which would allow for improved tracking robustness, as obscuring the gun would no longer cause the system to lose sight of the threat. However, publicly available data on shooters is much more limited and challenging to create than a gun dataset alone. Therefore, we explore the use of domain randomization and transfer learning to improve the effectiveness of training with synthetic data obtained from Unreal Engine environments. This enables the model to be trained on a wider range of data, increasing its ability to generalize to different situations. Using these techniques with YOLOv8 and Deep OC-SORT, we implemented an initial version of a shooter tracking system capable of running on edge hardware, including both a Raspberry Pi and a Jetson Nano.

Fully Embedded Time-Series Generative Adversarial Networks

Aug 30, 2023

Abstract:Generative Adversarial Networks (GANs) should produce synthetic data that fits the underlying distribution of the data being modeled. For real-valued time-series data, this implies the need to simultaneously capture not only the static distribution of the data, but also the full temporal distribution of the data for any potential time horizon. This temporal element produces a more complex problem that can potentially leave current solutions under-constrained, unstable during training, or prone to varying degrees of mode collapse. In FETSGAN, entire sequences are translated directly to the generator's sampling space using a seq2seq-style adversarial auto encoder (AAE), where adversarial training is used to match the training distribution in both the feature space and the lower-dimensional sampling space. This additional constraint provides a loose assurance that the temporal distribution of the synthetic samples will not collapse. In addition, the First Above Threshold (FAT) operator is introduced to supplement the reconstruction of encoded sequences, which improves training stability and the overall quality of the synthetic data being generated. These novel contributions demonstrate a significant improvement to the current state of the art for adversarial learners in qualitative measures of temporal similarity and quantitative predictive ability of data generated through FETSGAN.
