Stig Ursing

Evaluation of Out-of-Distribution Detection Performance on Autonomous Driving Datasets

Jan 30, 2024

Abstract: Safety measures need to be systematically investigated to determine to what extent they evaluate the intended performance of Deep Neural Networks (DNNs) for critical applications. Due to a lack of verification methods for high-dimensional DNNs, a trade-off is needed between accepted performance and handling of out-of-distribution (OOD) samples. This work evaluates rejecting outputs from semantic segmentation DNNs by using as an OOD score a Mahalanobis distance (MD) computed from the class-conditional Gaussian distribution of the predicted (most probable) class. The evaluation covers three DNNs trained on the Cityscapes dataset and tested on four automotive datasets, and finds that classification risk can be drastically reduced at the cost of pixel coverage, even when applied to unseen datasets. The applicability of our findings will support legitimizing safety measures and motivate their usage when arguing for safe usage of DNNs in automotive perception.
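The scoring and rejection idea in this abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the per-pixel feature vectors, the shared (tied) covariance, and the small ridge term are all assumptions made for illustration. The sketch fits one Gaussian mean per class, scores each sample by its squared Mahalanobis distance to the mean of its predicted class, and reports risk and coverage for a chosen rejection threshold.

```python
import numpy as np


def fit_class_gaussians(features, labels, num_classes):
    """Estimate per-class means and a shared precision matrix (assumed tied covariance)."""
    dim = features.shape[1]
    means = np.zeros((num_classes, dim))
    cov = np.zeros((dim, dim))
    for c in range(num_classes):
        class_feats = features[labels == c]
        means[c] = class_feats.mean(axis=0)
        centered = class_feats - means[c]
        cov += centered.T @ centered
    cov /= len(features)
    # Small ridge term (an assumption) keeps the covariance invertible.
    precision = np.linalg.inv(cov + 1e-6 * np.eye(dim))
    return means, precision


def mahalanobis_score(features, predicted, means, precision):
    """Squared Mahalanobis distance of each sample to the mean of its predicted class."""
    diff = features - means[predicted]
    return np.einsum("nd,de,ne->n", diff, precision, diff)


def risk_and_coverage(scores, predicted, labels, threshold):
    """Risk = error rate among accepted samples; coverage = fraction accepted."""
    accepted = scores <= threshold
    coverage = float(accepted.mean())
    if not accepted.any():
        return 0.0, coverage
    risk = float((predicted[accepted] != labels[accepted]).mean())
    return risk, coverage
```

Sweeping the hypothetical `threshold` from high to low traces the trade-off the abstract describes: fewer accepted pixels (lower coverage) in exchange for a lower error rate among the pixels that remain.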

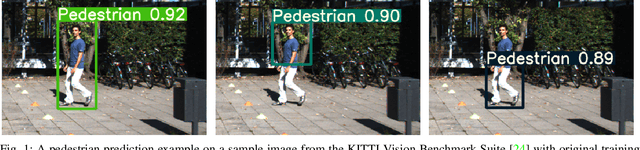

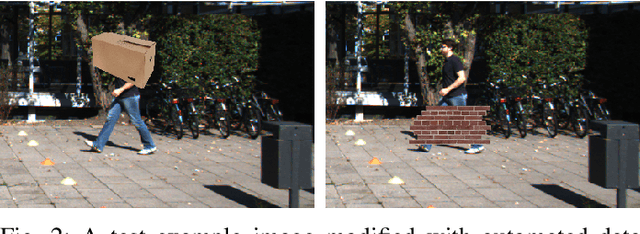

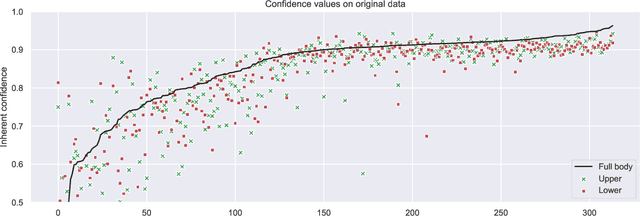

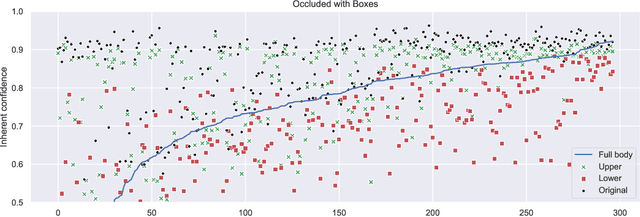

Understanding the Impact of Edge Cases from Occluded Pedestrians for ML Systems

Apr 26, 2022

Abstract: Machine learning (ML)-enabled approaches are considered a substantial support technique for the detection and classification of obstacles and traffic participants in self-driving vehicles. Major breakthroughs have been demonstrated in the past few years, even covering the complete end-to-end data processing chain from sensory inputs through perception and planning to vehicle control of acceleration, braking, and steering. YOLO (you-only-look-once) is a state-of-the-art perception neural network (NN) architecture providing object detection and classification through bounding box estimations on camera images. As the NN is trained on well-annotated images, in this paper we study the variations of confidence levels from the NN when tested on hand-crafted occlusion added to a test set. We compare regular pedestrian detection to upper and lower body detection. Our findings show that the two NNs using only partial information perform similarly well to the NN for the full body when the full body NN's performance is 0.75 or better. Furthermore, as expected, the network trained only on the lower half of the body is least prone to disturbances from occlusions of the upper half, and vice versa.
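As a rough illustration of adding occlusion to a test image, the sketch below blanks out the upper or lower half of a pedestrian bounding box. The abstract does not specify how the hand-crafted occlusions were produced, so the function name `occlude_box`, the `(x1, y1, x2, y2)` box format, and the constant fill value are assumptions made only for this example.

```python
import numpy as np


def occlude_box(image, box, part="upper", fill=0):
    """Return a copy of `image` with the upper or lower half of `box` filled.

    `box` is (x1, y1, x2, y2) in pixel coordinates (assumed format).
    """
    x1, y1, x2, y2 = box
    mid = (y1 + y2) // 2  # horizontal line splitting the box into two halves
    occluded = image.copy()  # leave the original test image untouched
    if part == "upper":
        occluded[y1:mid, x1:x2] = fill
    elif part == "lower":
        occluded[mid:y2, x1:x2] = fill
    else:
        raise ValueError("part must be 'upper' or 'lower'")
    return occluded
```

Running a detector on both the original and the occluded image and comparing the reported confidences would give one data point for the kind of comparison the abstract describes.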

Towards Structured Evaluation of Deep Neural Network Supervisors

Mar 07, 2019

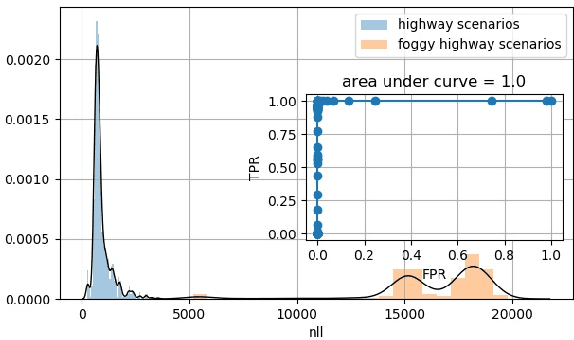

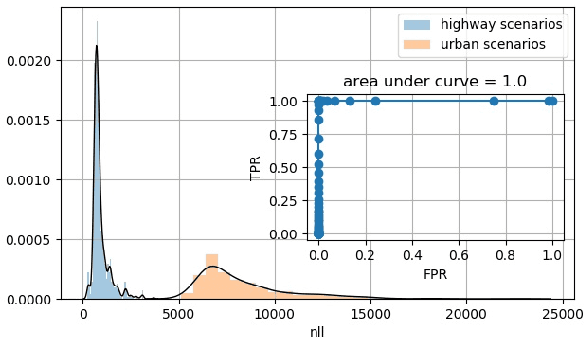

Abstract: Deep Neural Networks (DNNs) have improved the quality of several non-safety related products in the past years. However, before DNNs are deployed in safety-critical applications, their robustness needs to be systematically analyzed. A common challenge for DNNs occurs when input is dissimilar to the training set, which might lead to high-confidence predictions despite the network lacking proper knowledge of the input. Several previous studies have proposed to complement DNNs with a supervisor that detects when inputs are outside the scope of the network. Most of these supervisors, however, are developed and tested for a selected scenario using a specific performance metric. In this work, we emphasize the need to assess and compare the performance of supervisors in a structured way. We present a framework consisting of four datasets organized in six test cases combined with seven evaluation metrics. The test cases provide varying complexity and include data from publicly available sources as well as a novel dataset consisting of images from simulated driving scenarios. The latter we plan to make publicly available. Our framework can be used to support DNN supervisor evaluation, which in turn could be used to motivate development, validation, and deployment of DNNs in safety-critical applications.
