Stephen Whitelam

Training thermodynamic computers by gradient descent

Sep 18, 2025

Abstract:We show how to adjust the parameters of a thermodynamic computer by gradient descent in order to perform a desired computation at a specified observation time. Within a digital simulation of a thermodynamic computer, training proceeds by maximizing the probability with which the computer would generate an idealized dynamical trajectory. The idealized trajectory is designed to reproduce the activations of a neural network trained to perform the desired computation. This teacher-student scheme results in a thermodynamic computer whose finite-time dynamics enacts a computation analogous to that of the neural network. The parameters identified in this way can be implemented in the hardware realization of the thermodynamic computer, which will perform the desired computation automatically, driven by thermal noise. We demonstrate the method on a standard image-classification task, and estimate the thermodynamic advantage -- the ratio of energy costs of the digital and thermodynamic implementations -- to exceed seven orders of magnitude. Our results establish gradient descent as a viable training method for thermodynamic computing, enabling application of the core methodology of machine learning to this emerging field.
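
The idea of training by maximizing the probability of an idealized trajectory can be illustrated with a toy sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: a single degree of freedom, overdamped Langevin dynamics in the potential U(x) = k x^2 / 2 - b x, an Euler discretization, a noise-free "teacher" path, and all function and parameter names. Under those assumptions the negative log-probability of a path is a sum of squared residuals, and plain gradient descent on it recovers the teacher parameters.

```python
import numpy as np

# Assumed units: mobility, thermal energy, and time step (all hypothetical).
MU, KT, DT = 1.0, 1.0, 0.01


def ideal_trajectory(k_true=2.0, b_true=1.0, x0=3.0, n_steps=500):
    """Noise-free relaxation in U(x) = k x^2 / 2 - b x, used as the teacher path."""
    x = np.empty(n_steps + 1)
    x[0] = x0
    for t in range(n_steps):
        x[t + 1] = x[t] - MU * DT * (k_true * x[t] - b_true)
    return x


def residuals(params, x):
    """Noise increments the model (k, b) would need in order to produce path x."""
    k, b = params
    return x[1:] - x[:-1] + MU * DT * (k * x[:-1] - b)


def neg_log_prob(params, x):
    """Negative log-probability of path x, up to a parameter-independent constant."""
    return np.sum(residuals(params, x) ** 2) / (4.0 * MU * KT * DT)


def grad_neg_log_prob(params, x):
    """Analytic gradient of neg_log_prob with respect to (k, b)."""
    r = residuals(params, x)
    d_k = np.sum(r * x[:-1]) / (2.0 * KT)
    d_b = -np.sum(r) / (2.0 * KT)
    return np.array([d_k, d_b])


def train(x, n_iters=1000, lr=0.2):
    """Plain gradient descent starting from a deliberately wrong guess."""
    params = np.array([0.5, 0.0])
    for _ in range(n_iters):
        params = params - lr * grad_neg_log_prob(params, x)
    return params


x_ideal = ideal_trajectory()
k_fit, b_fit = train(x_ideal)
print(f"fitted k = {k_fit:.4f}, fitted b = {b_fit:.4f}")
```

In this toy setting the fitted values should approach the teacher values k = 2 and b = 1. The scheme described in the abstract applies the same principle to many coupled degrees of freedom, with the idealized trajectory built from neural-network activations.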

Generative thermodynamic computing

Jun 18, 2025

Abstract:We introduce a generative modeling framework for thermodynamic computing, in which structured data is synthesized from noise by the natural time evolution of a physical system governed by Langevin dynamics. While conventional diffusion models use neural networks to perform denoising, here the information needed to generate structure from noise is encoded by the dynamics of a thermodynamic system. Training proceeds by maximizing the probability with which the computer generates the reverse of a noising trajectory, which ensures that the computer generates data with minimal heat emission. We demonstrate this framework within a digital simulation of a thermodynamic computer. If realized in analog hardware, such a system would function as a generative model that produces structured samples without the need for artificially-injected noise or active control of denoising.

Thermodynamic computing out of equilibrium

Dec 22, 2024

Abstract:We present the design for a thermodynamic computer that can perform arbitrary nonlinear calculations in or out of equilibrium. Simple thermodynamic circuits, fluctuating degrees of freedom in contact with a thermal bath and confined by a quartic potential, display an activity that is a nonlinear function of their input. Such circuits can therefore be regarded as thermodynamic neurons, and can serve as the building blocks of networked structures that act as thermodynamic neural networks, universal function approximators whose operation is powered by thermal fluctuations. We simulate a digital model of a thermodynamic neural network, and show that its parameters can be adjusted by genetic algorithm to perform nonlinear calculations at specified observation times, regardless of whether the system has attained thermal equilibrium. This work expands the field of thermodynamic computing beyond the regime of thermal equilibrium, enabling fully nonlinear computations, analogous to those performed by classical neural networks, at specified observation times.
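
A minimal sketch of a single thermodynamic neuron is given below, to show how a quartic confining potential makes the mean activity a nonlinear function of the input. The specific potential U(x) = j2 x^2 + j4 x^4 - I x, the overdamped Langevin dynamics with unit mobility, the Euler-Maruyama integrator, and every parameter value are assumptions chosen for illustration; the abstract does not specify them.

```python
import numpy as np


def neuron_activity(inputs, j2=1.0, j4=1.0, kT=1.0, t_obs=2.0, dt=0.002,
                    n_samples=4000, seed=0):
    """Mean position at time t_obs of an overdamped Langevin particle in
    U(x) = j2 x^2 + j4 x^4 - I x, for each input I (mobility set to 1)."""
    rng = np.random.default_rng(seed)
    inputs = np.asarray(inputs, dtype=float)
    # One row per input value, one column per independent noisy trajectory.
    x = np.zeros((inputs.size, n_samples))
    n_steps = int(round(t_obs / dt))
    for _ in range(n_steps):
        force = -(2.0 * j2 * x + 4.0 * j4 * x ** 3) + inputs[:, None]
        noise = np.sqrt(2.0 * kT * dt) * rng.standard_normal(x.shape)
        x += force * dt + noise
    return x.mean(axis=1)


inputs = np.array([-8.0, -2.0, 0.0, 2.0, 8.0])
for i, a in zip(inputs, neuron_activity(inputs)):
    print(f"I = {i:+.1f}  mean activity = {a:+.3f}")
```

Because of the quartic term, quadrupling the input should increase the mean activity by well under a factor of four in this sketch, which is the kind of saturating nonlinearity a neural activation function provides. Changing `t_obs` lets the same unit be read out before it has equilibrated.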

Increasing the clock speed of a thermodynamic computer by adding noise

Oct 16, 2024

Abstract:We describe a proposal for increasing the effective clock speed of a thermodynamic computer, by altering the interaction scale of the units within the computer and introducing to the computer an additional source of noise. The resulting thermodynamic computer program is equivalent to the original computer program, but runs at a higher clock speed. This approach offers a way of increasing the speed of thermodynamic computing while preserving the fidelity of computation.
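
One way to see how rescaling interactions and adding noise can speed up a program without changing it is the following sketch. It assumes overdamped Langevin dynamics with mobility \mu and potential U, and a rescaling factor \lambda > 1; these symbols and the specific form of the dynamics are assumptions for illustration, not statements from the abstract.

```latex
% Assumed model: overdamped Langevin dynamics with Gaussian white noise
\dot{x}_i = -\mu \frac{\partial U(\mathbf{x})}{\partial x_i}
            + \sqrt{2 \mu k_B T}\, \eta_i(t),
\qquad
\langle \eta_i(t)\, \eta_j(t') \rangle = \delta_{ij}\, \delta(t - t').

% Scale the interactions, U \to \lambda U, and add noise so that T \to \lambda T:
\dot{x}_i = -\lambda \mu \frac{\partial U(\mathbf{x})}{\partial x_i}
            + \sqrt{2 \lambda \mu k_B T}\, \eta_i(t).

% Change variables to \tau = \lambda t. Since \delta(t - t') = \lambda\, \delta(\tau - \tau'),
% the noise transforms as \eta_i(t) = \sqrt{\lambda}\, \tilde{\eta}_i(\tau) in distribution, giving
\frac{d x_i}{d \tau} = -\mu \frac{\partial U(\mathbf{x})}{\partial x_i}
                       + \sqrt{2 \mu k_B T}\, \tilde{\eta}_i(\tau).
```

Under these assumptions the rescaled system follows the original equation in the variable \tau = \lambda t, so it visits the same distribution of trajectories \lambda times faster in physical time.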

Adaptive AI-Driven Material Synthesis: Towards Autonomous 2D Materials Growth

Oct 10, 2024

Abstract:Two-dimensional (2D) materials are poised to revolutionize current solid-state technology with their extraordinary properties. Yet, the primary challenge remains their scalable production. While there have been significant advancements, much of the scientific progress has depended on the exfoliation of materials, a method that poses severe challenges for large-scale applications. With the advent of artificial intelligence (AI) in materials science, innovative synthesis methodologies are now on the horizon. This study explores the forefront of autonomous materials synthesis using an artificial neural network (ANN) trained by evolutionary methods, focusing on the efficient production of graphene. Our approach demonstrates that a neural network can iteratively and autonomously learn a time-dependent protocol for the efficient growth of graphene, without requiring pretraining on what constitutes an effective recipe. Evaluation criteria are based on the proximity of the Raman signature to that of monolayer graphene: higher scores are granted to outcomes whose spectrum more closely resembles that of an ideal continuous monolayer structure. This feedback mechanism allows for iterative refinement of the ANN's time-dependent synthesis protocols, progressively improving sample quality. Through the advancement and application of AI methodologies, this work makes a substantial contribution to the field of materials engineering, fostering a new era of innovation and efficiency in the synthesis process.

Oscillatrons: neural units with time-dependent multifunctionality

May 09, 2024

Abstract:Several branches of computing use a system's physical dynamics to do computation. We show that the dynamics of an underdamped harmonic oscillator can perform multifunctional computation, solving distinct problems at distinct times within a dynamical trajectory. Oscillator computing usually focuses on the oscillator's phase as the information-carrying component. Here we focus on the time-resolved amplitude of an oscillator whose inputs influence its frequency, which has a natural parallel as the activity of a time-dependent neural unit. We call this unit an oscillatron. The activity of an oscillatron at fixed time is a nonmonotonic function of the input, and so it can solve nonlinearly-separable problems such as XOR. The activity of the oscillatron at fixed input is a nonmonotonic function of time, and so it is multifunctional in a temporal sense, able to carry out distinct nonlinear computations at distinct times within the same dynamical trajectory. Time-resolved computing of this nature can be done in or out of equilibrium, with the natural time evolution of the system giving us multiple computations for the price of one.
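
The two nonmonotonic dependences described above can be sketched with the closed-form solution of a damped oscillator released from rest. The linear coupling of the inputs to the frequency, the thresholding of the amplitude at zero, and all parameter values (`omega0`, `w`, `gamma`, the two observation times) are hypothetical choices made for this illustration.

```python
import numpy as np


def amplitude(omega, t, gamma=0.2):
    """Position at time t of an underdamped oscillator (unit mass) released
    from x = 1 at rest, with natural frequency omega and damping gamma."""
    omega_d = np.sqrt(omega ** 2 - gamma ** 2 / 4.0)  # damped frequency
    return np.exp(-gamma * t / 2.0) * (
        np.cos(omega_d * t) + gamma / (2.0 * omega_d) * np.sin(omega_d * t)
    )


def unit_output(a, b, t, omega0=np.pi, w=np.pi):
    """Binary readout: 1 if the amplitude at time t is positive, else 0.
    The two binary inputs shift the frequency linearly (an assumed coupling)."""
    omega = omega0 + w * (a + b)
    return int(amplitude(omega, t) > 0.0)


for label, t in [("t = 1  ", 1.0), ("t = 2/3", 2.0 / 3.0)]:
    table = [unit_output(a, b, t) for a in (0, 1) for b in (0, 1)]
    print(label, table)  # inputs ordered (0,0), (0,1), (1,0), (1,1)
```

With these assumed parameters the single unit reproduces XOR when read at t = 1 and AND when read at t = 2/3, so one trajectory yields two distinct logical functions at two observation times.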

Springs and a stopwatch: neural units with time-dependent multifunctionality

Apr 23, 2024

Abstract:Several branches of computing use a system's physical dynamics to do computation. We show that the dynamics of an underdamped harmonic oscillator can perform multifunctional computation, solving distinct problems at distinct times within a single dynamical trajectory. Oscillator computing usually focuses on the oscillator's phase as the information-carrying component. Here we focus on the time-resolved amplitude of an oscillator whose inputs influence its frequency, which has a natural parallel as the activity of a time-dependent neural unit. Because the activity of the unit at fixed time is a nonmonotonic function of the input, the unit can solve nonlinearly-separable problems such as XOR. Because the activity of the unit at fixed input is a nonmonotonic function of time, the unit is multifunctional in a temporal sense, able to carry out distinct nonlinear computations at distinct times within the same dynamical trajectory. Time-resolved computing of this nature can be done in or out of equilibrium, with the natural time evolution of the system giving us multiple computations for the price of one.

How to train your demon to do fast information erasure without heat production

May 17, 2023

Abstract:Time-dependent protocols that perform irreversible logical operations, such as memory erasure, cost work and produce heat, placing bounds on the efficiency of computers. Here we use a prototypical computer model of a physical memory to show that it is possible to learn feedback-control protocols to do fast memory erasure without input of work or production of heat. These protocols, which are enacted by a neural-network "demon", do not violate the second law of thermodynamics because the demon generates more heat than the memory absorbs. The result is a form of nonlocal heat exchange in which one computation is rendered energetically favorable while a compensating one produces heat elsewhere, a tactic that could be used to rationally design the flow of energy within a computer.

Demon in the machine: learning to extract work and absorb entropy from fluctuating nanosystems

Nov 20, 2022

Abstract:We use Monte Carlo and genetic algorithms to train neural-network feedback-control protocols for simulated fluctuating nanosystems. These protocols convert the information obtained by the feedback process into heat or work, allowing the extraction of work from a colloidal particle pulled by an optical trap and the absorption of entropy by an Ising model undergoing magnetization reversal. The learning framework requires no prior knowledge of the system, depends only upon measurements that are accessible experimentally, and scales to systems of considerable complexity. It could be used in the laboratory to learn protocols for fluctuating nanosystems that convert measurement information into stored work or heat.

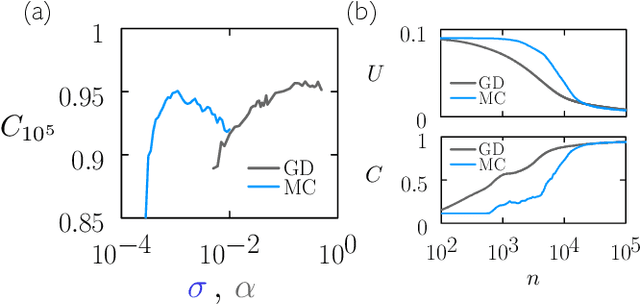

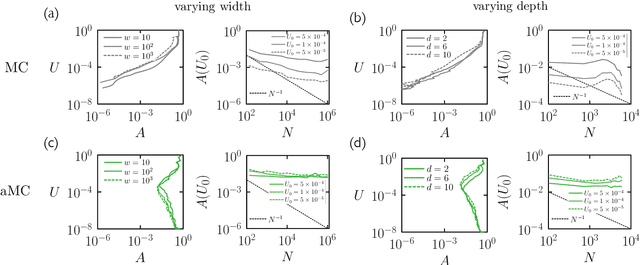

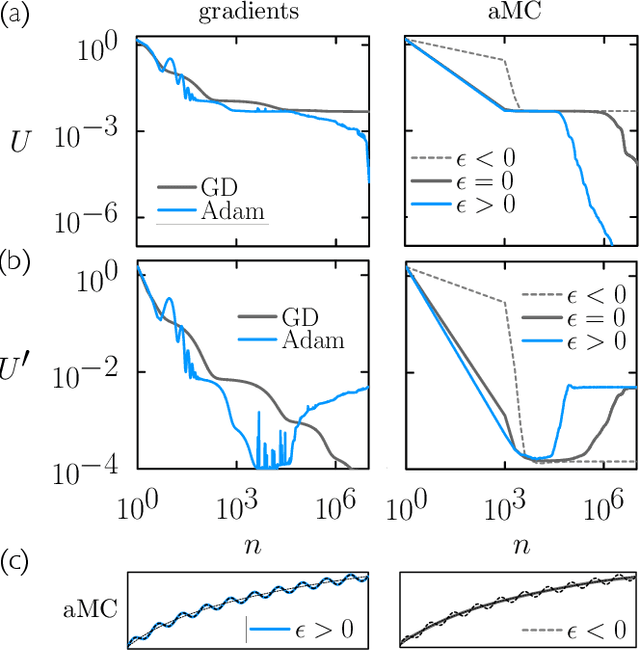

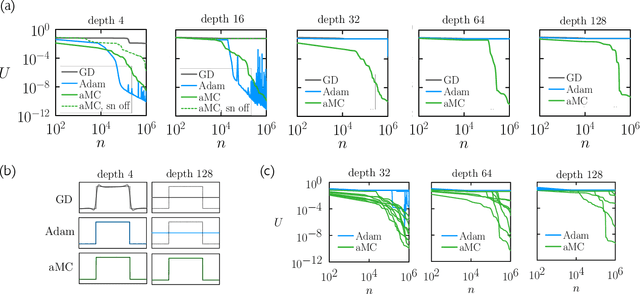

Training neural networks using Metropolis Monte Carlo and an adaptive variant

May 16, 2022

Abstract:We examine the zero-temperature Metropolis Monte Carlo algorithm as a tool for training a neural network by minimizing a loss function. We find that, as expected on theoretical grounds and shown empirically by other authors, Metropolis Monte Carlo can train a neural net with an accuracy comparable to that of gradient descent, if not necessarily as quickly. The Metropolis algorithm does not fail automatically when the number of parameters of a neural network is large. It can fail when a neural network's structure or neuron activations are strongly heterogeneous, and we introduce an adaptive Monte Carlo algorithm, aMC, to overcome these limitations. The intrinsic stochasticity of the Monte Carlo method allows aMC to train neural networks in which the gradient is too small to allow training by gradient descent. We suggest that, as for molecular simulation, Monte Carlo methods offer a complement to gradient-based methods for training neural networks, allowing access to a distinct set of network architectures and principles.
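
A minimal sketch of zero-temperature Metropolis training is shown below: propose a random change to the parameters and accept it only if the loss does not increase. The tiny 2-4-1 tanh network, the XOR data, the Gaussian proposal applied to all parameters at once, and the step size `sigma` are assumptions for illustration. This is the plain algorithm only; the adaptive variant aMC is not implemented here.

```python
import numpy as np


def loss(params, X, y, hidden=4):
    """Mean-squared error of a one-hidden-layer tanh network whose weights
    and biases are packed into the flat vector params."""
    n_in = X.shape[1]
    i1 = n_in * hidden
    W1 = params[:i1].reshape(n_in, hidden)
    b1 = params[i1:i1 + hidden]
    W2 = params[i1 + hidden:i1 + 2 * hidden]
    b2 = params[-1]
    pred = np.tanh(X @ W1 + b1) @ W2 + b2
    return float(np.mean((pred - y) ** 2))


def train_zero_temperature_mc(X, y, n_steps=5000, sigma=0.1, seed=0, hidden=4):
    """Zero-temperature Metropolis: accept a Gaussian perturbation of all
    parameters only if the loss does not increase."""
    rng = np.random.default_rng(seed)
    n_params = X.shape[1] * hidden + 2 * hidden + 1
    params = rng.normal(0.0, 1.0, n_params)
    current = loss(params, X, y, hidden)
    initial = current
    for _ in range(n_steps):
        trial = params + rng.normal(0.0, sigma, n_params)
        trial_loss = loss(trial, X, y, hidden)
        if trial_loss <= current:  # moves that raise the loss are rejected
            params, current = trial, trial_loss
    return params, initial, current


X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
y = np.array([0.0, 1.0, 1.0, 0.0])
params, initial_loss, final_loss = train_zero_temperature_mc(X, y)
print(f"loss: {initial_loss:.4f} -> {final_loss:.4f}")
```

No gradient is computed anywhere in this loop, which is why the approach remains usable when gradients are very small; by construction the loss can never increase from one accepted step to the next.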
