Stephan Jacobs

EMOTE: An Explainable architecture for Modelling the Other Through Empathy

Jun 01, 2023Abstract:We can usually assume others have goals analogous to our own. This assumption can also, at times, be applied to multi-agent games - e.g. Agent 1's attraction to green pellets is analogous to Agent 2's attraction to red pellets. This "analogy" assumption is tied closely to the cognitive process known as empathy. Inspired by empathy, we design a simple and explainable architecture to model another agent's action-value function. This involves learning an "Imagination Network" to transform the other agent's observed state in order to produce a human-interpretable "empathetic state" which, when presented to the learning agent, produces behaviours that mimic the other agent. Our approach is applicable to multi-agent scenarios consisting of a single learning agent and other (independent) agents acting according to fixed policies. This architecture is particularly beneficial for (but not limited to) algorithms using a composite value or reward function. We show our method produces better performance in multi-agent games, where it robustly estimates the other's model in different environment configurations. Additionally, we show that the empathetic states are human interpretable, and thus verifiable.

The Three Ghosts of Medical AI: Can the Black-Box Present Deliver?

Dec 10, 2020

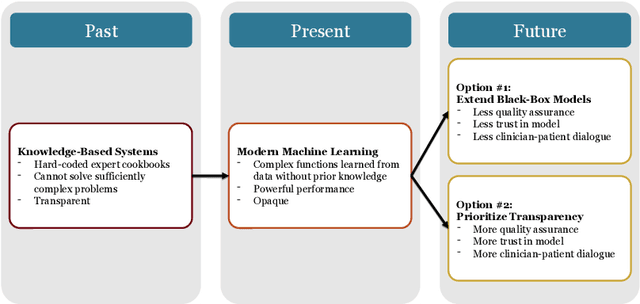

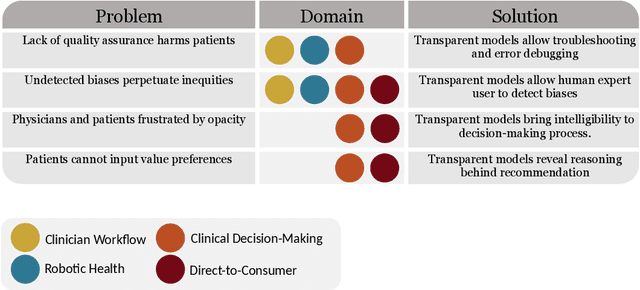

Abstract:Our title alludes to the three Christmas ghosts encountered by Ebenezer Scrooge in \textit{A Christmas Carol}, who guide Ebenezer through the past, present, and future of Christmas holiday events. Similarly, our article will take readers through a journey of the past, present, and future of medical AI. In doing so, we focus on the crux of modern machine learning: the reliance on powerful but intrinsically opaque models. When applied to the healthcare domain, these models fail to meet the needs for transparency that their clinician and patient end-users require. We review the implications of this failure, and argue that opaque models (1) lack quality assurance, (2) fail to elicit trust, and (3) restrict physician-patient dialogue. We then discuss how upholding transparency in all aspects of model design and model validation can help ensure the reliability of medical AI.

Trust and Medical AI: The challenges we face and the expertise needed to overcome them

Aug 18, 2020

Abstract:Artificial intelligence (AI) is increasingly of tremendous interest in the medical field. However, failures of medical AI could have serious consequences for both clinical outcomes and the patient experience. These consequences could erode public trust in AI, which could in turn undermine trust in our healthcare institutions. This article makes two contributions. First, it describes the major conceptual, technical, and humanistic challenges in medical AI. Second, it proposes a solution that hinges on the education and accreditation of new expert groups who specialize in the development, verification, and operation of medical AI technologies. These groups will be required to maintain trust in our healthcare institutions.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge