Stepan Konev

From 2D to 3D: AISG-SLA Visual Localization Challenge

Jul 26, 2024

Abstract: Research in 3D mapping is crucial for smart city applications, yet the cost of acquiring 3D data often hinders progress. Visual localization, particularly monocular camera position estimation, offers a solution by determining the camera's pose solely through visual cues. However, this task is challenging due to limited data from a single camera. To tackle these challenges, we organized the AISG-SLA Visual Localization Challenge (VLC) at IJCAI 2023 to explore how AI can accurately extract camera pose data from 2D images in 3D space. The challenge attracted over 300 participants worldwide, forming 50+ teams. Winning teams achieved high accuracy in pose estimation using images from a car-mounted camera with low frame rates. The VLC dataset is available for research purposes upon request via vlc-dataset@aisingapore.org.

Kraken: enabling joint trajectory prediction by utilizing Mode Transformer and Greedy Mode Processing

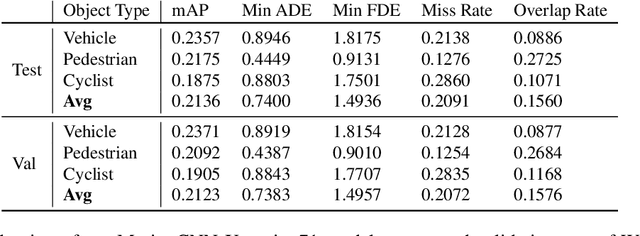

Dec 08, 2023

Abstract: Accurate and reliable motion prediction is essential for safe urban autonomy. The most prominent motion prediction approaches model the distribution of possible future trajectories of each actor in the autonomous system's vicinity. These "independent" marginal predictions might be accurate enough to describe casual driving situations where the prediction target is not likely to interact with other actors. They are, however, inadequate for modeling interactive situations where the actors' future trajectories are likely to intersect. To mitigate this issue, we propose Kraken, a real-time trajectory prediction model capable of approximating pairwise interactions between the actors as well as producing accurate marginal predictions. Kraken relies on a simple Greedy Mode Processing technique that allows it to convert a factorized prediction for a pair of agents into a physically plausible joint prediction. It also utilizes the Mode Transformer module to increase the diversity of predicted trajectories and make the joint prediction more informative. We evaluate Kraken on the Waymo Motion Prediction challenge, where it held first place on the Interaction leaderboard and second place on the Motion leaderboard in October 2021.
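The abstract does not spell out how Greedy Mode Processing works, so the following is only an illustrative sketch of the general idea of turning per-agent (factorized) modes into joint modes. It is not Kraken's actual procedure. The function name `greedy_joint_modes`, the product-of-probabilities score, and the `min_gap` distance threshold are all assumptions made for this example.

```python
import numpy as np


def greedy_joint_modes(traj_a, prob_a, traj_b, prob_b, num_joint=6, min_gap=2.0):
    """Hypothetical greedy pairing of marginal modes for two agents.

    traj_a, traj_b: arrays of shape (K, T, 2) with K candidate trajectories
                    of T future (x, y) positions for each agent.
    prob_a, prob_b: arrays of shape (K,) with marginal mode probabilities.
    Returns a list of (mode_a, mode_b, score) tuples, best first.
    """
    # Assumption: score a joint mode by the product of marginal probabilities.
    joint_scores = np.outer(prob_a, prob_b)
    # Visit all mode pairs from the highest to the lowest score.
    order = np.argsort(joint_scores, axis=None)[::-1]

    selected = []
    for flat_index in order:
        i, j = np.unravel_index(flat_index, joint_scores.shape)
        # Distance between the two agents at every future timestep.
        gaps = np.linalg.norm(traj_a[i] - traj_b[j], axis=-1)
        # Assumption: a pair is implausible if the agents ever get too close.
        if gaps.min() < min_gap:
            continue
        selected.append((int(i), int(j), float(joint_scores[i, j])))
        if len(selected) == num_joint:
            break
    return selected
```

In this sketch the most likely marginal modes of two agents are dropped as a pair when they would occupy the same place at the same time, which is the kind of situation the abstract describes as poorly served by independent marginal predictions.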

Match and Locate: low-frequency monocular odometry based on deep feature matching

Nov 16, 2023

Abstract: Accurate and robust pose estimation plays a crucial role in many robotic systems. Popular algorithms for pose estimation typically rely on high-fidelity and high-frequency signals from various sensors. Including these sensors makes the system less affordable and much more complicated. In this work we introduce a novel approach to robotic odometry which requires only a single camera and, importantly, can produce reliable estimates even from an extremely low-frequency signal of around one frame per second. The approach is based on matching image features between consecutive frames of the video stream using deep feature matching models. The resulting coarse estimate is then adjusted by a convolutional neural network, which is also responsible for estimating the scale of the transition, otherwise irretrievable from the feature matching information alone. We evaluate the performance of the approach in the AISG-SLA Visual Localisation Challenge and find that, while being computationally efficient and easy to implement, our method shows competitive results, with only around $3^{\circ}$ of orientation estimation error and $2$ m of translation estimation error, taking third place in the challenge.
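The remark that scale cannot be recovered from feature matches alone can be checked numerically. The sketch below is not the paper's pipeline: it uses synthetic 3D points and plain NumPy, and all function names are hypothetical. It shows that the epipolar constraint $x_2^\top [t]_\times R\, x_1 = 0$ is satisfied equally well when the translation is rescaled, and it includes one common way to measure orientation error in degrees (the rotation angle between two rotation matrices), which is assumed here rather than taken from the challenge definition.

```python
import numpy as np


def skew(t):
    """Cross-product matrix [t]_x such that skew(t) @ v == np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


def rotation_y(angle_rad):
    """Rotation about the camera's y axis (a yaw-like turn of a car camera)."""
    c, s = np.cos(angle_rad), np.sin(angle_rad)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def matched_rays(R, t, points_cam1):
    """Project 3D points into both frames; returns normalized image rays."""
    points_cam2 = points_cam1 @ R.T + t  # X2 = R @ X1 + t for every point
    x1 = points_cam1 / points_cam1[:, 2:3]
    x2 = points_cam2 / points_cam2[:, 2:3]
    return x1, x2


def epipolar_residuals(R, t, x1, x2):
    """Residuals x2^T E x1 with the essential matrix E = [t]_x R."""
    E = skew(t) @ R
    return np.einsum("ni,ij,nj->n", x2, E, x1)


def rotation_error_deg(R_est, R_true):
    """Angle of the relative rotation between two rotation matrices."""
    cos_angle = (np.trace(R_est.T @ R_true) - 1.0) / 2.0
    return np.degrees(np.arccos(np.clip(cos_angle, -1.0, 1.0)))


rng = np.random.default_rng(0)
points = rng.uniform([-5.0, -2.0, 5.0], [5.0, 2.0, 20.0], size=(50, 3))
R_true = rotation_y(np.radians(3.0))
t_true = np.array([0.2, 0.0, 1.5])
x1, x2 = matched_rays(R_true, t_true, points)

# The matches are explained equally well by the true and a 10x longer step.
res_true = epipolar_residuals(R_true, t_true, x1, x2)
res_scaled = epipolar_residuals(R_true, 10.0 * t_true, x1, x2)
```

Both residual vectors are zero up to floating-point error, so the matches carry no information about the length of the step. This is consistent with the abstract's choice to have a separate network estimate the scale.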

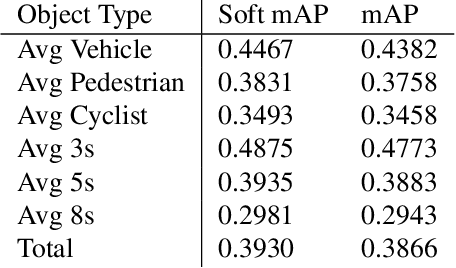

MPA: MultiPath++ Based Architecture for Motion Prediction

Jun 20, 2022

Abstract: Autonomous driving technology is developing rapidly, and the first autonomous rides are now being provided in city areas. This requires the highest standards for the safety and reliability of the technology. The motion prediction part of the general self-driving pipeline plays a crucial role in providing these qualities. In this work we present one of the solutions for the Waymo Motion Prediction Challenge 2022, based on MultiPath++, which ranked 3rd as of May 26, 2022. Our source code is publicly available on GitHub.

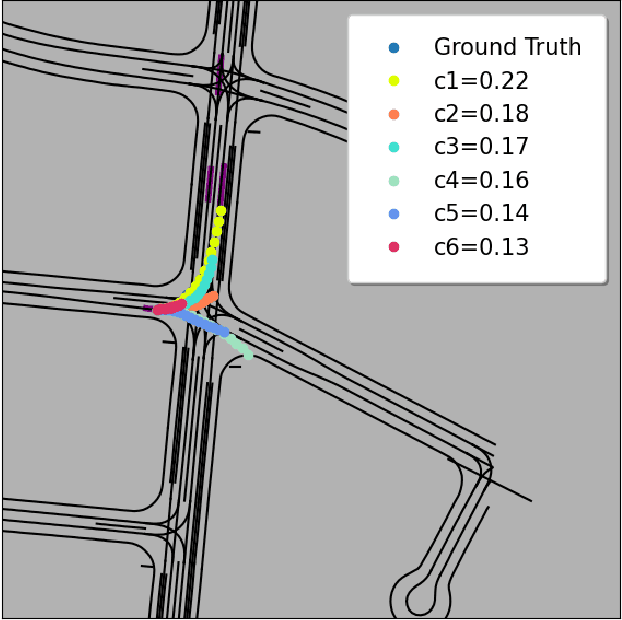

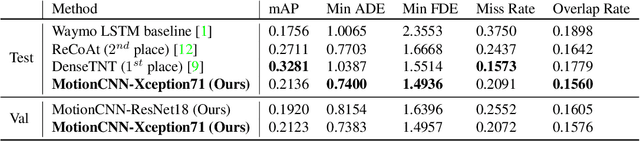

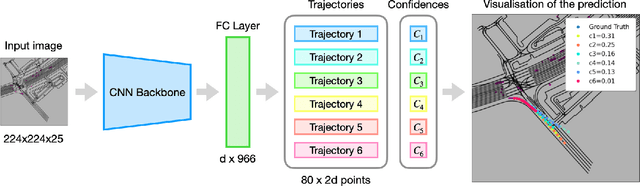

MotionCNN: A Strong Baseline for Motion Prediction in Autonomous Driving

Jun 05, 2022

Abstract: To plan a safe and efficient route, an autonomous vehicle should anticipate the future motions of other agents around it. Motion prediction is an extremely challenging task that has recently gained significant attention within the research community. In this work, we present a simple yet very strong baseline for multimodal motion prediction based purely on Convolutional Neural Networks. While being easy to implement, the proposed approach achieves competitive performance compared to the state-of-the-art methods and ranks 3rd on the 2021 Waymo Open Dataset Motion Prediction Challenge. Our source code is publicly available on GitHub.