Steffen Schotthöfer

Compressing Vision Transformers in Geospatial Transfer Learning with Manifold-Constrained Optimization

Jan 12, 2026

Abstract: Deploying geospatial foundation models on resource-constrained edge devices demands compact architectures that maintain high downstream performance. However, the large parameter counts of these models and the accuracy loss often induced by compression limit practical adoption. In this work, we leverage the manifold-constrained optimization framework DLRT to compress large vision transformer-based geospatial foundation models during transfer learning. By enforcing structured low-dimensional parameterizations aligned with downstream objectives, this approach achieves strong compression while preserving task-specific accuracy. We show that the method outperforms off-the-shelf low-rank methods such as LoRA. Experiments on diverse geospatial benchmarks confirm substantial parameter reduction with minimal accuracy loss, enabling high-performing, on-device geospatial models.

Dynamical Low-Rank Compression of Neural Networks with Robustness under Adversarial Attacks

May 12, 2025

Abstract: Deployment of neural networks on resource-constrained devices demands models that are both compact and robust to adversarial inputs. However, compression and adversarial robustness often conflict. In this work, we introduce a dynamical low-rank training scheme enhanced with a novel spectral regularizer that controls the condition number of the low-rank core in each layer. This approach mitigates the sensitivity of compressed models to adversarial perturbations without sacrificing clean accuracy. The method is model- and data-agnostic, computationally efficient, and supports rank adaptivity to automatically compress the network at hand. Extensive experiments across standard architectures, datasets, and adversarial attacks show the regularized networks can achieve over 94% compression while recovering or improving adversarial accuracy relative to uncompressed baselines.
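To make the notion of controlling the condition number of a low-rank core concrete, here is a minimal NumPy sketch. The penalty `spectral_penalty` is an illustrative assumption and not necessarily the regularizer used in the paper: it vanishes exactly when all singular values of the core `S` are equal, which corresponds to a condition number of 1.

```python
import numpy as np

def condition_number(S):
    """Ratio of the largest to the smallest singular value of the core S."""
    s = np.linalg.svd(S, compute_uv=False)  # singular values, descending
    return s[0] / s[-1]

def spectral_penalty(S):
    """Hypothetical penalty ||S^T S - a^2 I||_F^2 with a^2 = ||S||_F^2 / r.

    S^T S has eigenvalues sigma_i^2, so the penalty is the squared spread of
    the sigma_i^2 around their mean and is zero iff all sigma_i coincide.
    """
    r = S.shape[0]
    alpha_sq = np.sum(S ** 2) / r
    return np.sum((S.T @ S - alpha_sq * np.eye(r)) ** 2)

rng = np.random.default_rng(0)

# A well-conditioned core: an orthogonal matrix has all singular values equal to 1.
Q, _ = np.linalg.qr(rng.standard_normal((4, 4)))

# An ill-conditioned core with singular values 10, 1, 1, 0.1.
D = np.diag([10.0, 1.0, 1.0, 0.1])

print(condition_number(Q), spectral_penalty(Q))
print(condition_number(D), spectral_penalty(D))
```

In a training loop, a term of this kind would be added to the loss for each layer's core with a tunable weight; that weight and the exact form of the penalty are choices left open here.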

An Augmented Backward-Corrected Projector Splitting Integrator for Dynamical Low-Rank Training

Feb 05, 2025

Abstract: Layer factorization has emerged as a widely used technique for training memory-efficient neural networks. However, layer factorization methods face several challenges, particularly a lack of robustness during the training process. To overcome this limitation, dynamical low-rank training methods have been developed, utilizing robust time integration techniques for low-rank matrix differential equations. Although these approaches facilitate efficient training, they still depend on computationally intensive QR and singular value decompositions of matrices with small rank. In this work, we introduce a novel low-rank training method that reduces the number of required QR decompositions. Our approach integrates an augmentation step into a projector-splitting scheme, ensuring convergence to a locally optimal solution. We provide a rigorous theoretical analysis of the proposed method and demonstrate its effectiveness across multiple benchmarks.

GeoLoRA: Geometric integration for parameter efficient fine-tuning

Oct 24, 2024

Abstract: Low-Rank Adaptation (LoRA) has become a widely used method for parameter-efficient fine-tuning of large-scale, pre-trained neural networks. However, LoRA and its extensions face several challenges, including the need for rank adaptivity, robustness, and computational efficiency during the fine-tuning process. We introduce GeoLoRA, a novel approach that addresses these limitations by leveraging dynamical low-rank approximation theory. GeoLoRA requires only a single backpropagation pass over the small-rank adapters, significantly reducing computational cost as compared to similar dynamical low-rank training methods and making it faster than popular baselines such as AdaLoRA. This allows GeoLoRA to efficiently adapt the allocated parameter budget across the model, achieving smaller low-rank adapters compared to heuristic methods like AdaLoRA and LoRA, while maintaining critical convergence, descent, and error-bound theoretical guarantees. The resulting method is not only more efficient but also more robust to varying hyperparameter settings. We demonstrate the effectiveness of GeoLoRA on several state-of-the-art benchmarks, showing that it outperforms existing methods in both accuracy and computational efficiency.
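For readers unfamiliar with the baseline, the following is a minimal NumPy sketch of a standard LoRA adapter on a single linear layer, not of GeoLoRA itself. The layer sizes, rank `r`, and scaling `alpha` are hypothetical values chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
d_in, d_out, r, alpha = 768, 768, 8, 16.0  # hypothetical sizes

W0 = rng.standard_normal((d_out, d_in)) * 0.02  # frozen pre-trained weight
A = rng.standard_normal((r, d_in)) * 0.01       # trainable down-projection
B = np.zeros((d_out, r))                        # trainable up-projection, zero init

def lora_forward(x, W0, A, B, alpha):
    """Compute x (W0 + (alpha / r) B A)^T without forming the dense update."""
    r = A.shape[0]
    return x @ W0.T + (alpha / r) * (x @ A.T) @ B.T

x = rng.standard_normal((4, d_in))
y = lora_forward(x, W0, A, B, alpha)

full_params = W0.size               # parameters of the frozen layer
adapter_params = A.size + B.size    # parameters that are actually trained
print(full_params, adapter_params)
```

Because `B` starts at zero, the adapted layer initially reproduces the pre-trained layer exactly. In this plain form the rank `r` is fixed in advance for every layer, which is the limitation that rank-adaptive methods aim to remove.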

Federated Dynamical Low-Rank Training with Global Loss Convergence Guarantees

Jun 25, 2024

Abstract: In this work, we propose a federated dynamical low-rank training (FeDLRT) scheme to reduce client compute and communication costs, two significant performance bottlenecks in horizontal federated learning. Our method builds upon dynamical low-rank splitting schemes for manifold-constrained optimization to create a global low-rank basis of network weights, which enables client training on a small coefficient matrix. A consistent global low-rank basis allows us to incorporate a variance correction scheme and prove global loss descent and convergence to a stationary point. Dynamic augmentation and truncation of the low-rank bases automatically optimize computing and communication resource utilization. We demonstrate the efficiency of FeDLRT in an array of computer vision benchmarks and show a reduction of client compute and communication costs by up to an order of magnitude with minimal impacts on global accuracy.
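The idea of client training on a small coefficient matrix under a shared basis can be sketched as follows. This is a simplified illustration under stated assumptions, not the FeDLRT algorithm: the bases `U` and `V` are held fixed, basis augmentation, truncation, and variance correction are omitted, and the least-squares client data is synthetic.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, r, n_clients, n_samples = 30, 20, 4, 5, 50  # hypothetical sizes

# Shared orthonormal bases; the weight matrix is W = U S V^T.
U, _ = np.linalg.qr(rng.standard_normal((m, r)))
V, _ = np.linalg.qr(rng.standard_normal((n, r)))
S = rng.standard_normal((r, r))

# Synthetic per-client data (inputs X, targets Y) for a linear model.
clients = [(rng.standard_normal((n, n_samples)),
            rng.standard_normal((m, n_samples))) for _ in range(n_clients)]

def client_loss(S, X, Y):
    R = U @ S @ V.T @ X - Y
    return 0.5 * np.sum(R ** 2) / X.shape[1]

def client_grad(S, X, Y):
    R = U @ S @ V.T @ X - Y
    G = R @ X.T / X.shape[1]   # gradient w.r.t. the full weight matrix
    return U.T @ G @ V         # projected onto the r x r coefficient matrix

def global_loss(S):
    return np.mean([client_loss(S, X, Y) for X, Y in clients])

def federated_round(S, lr=0.1):
    """Each client takes one local step on S; the server averages the results."""
    local = [S - lr * client_grad(S, X, Y) for X, Y in clients]
    return np.mean(local, axis=0)

S_new = federated_round(S)
print(global_loss(S), global_loss(S_new))
print("values sent per client:", S.size, "instead of", m * n)
```

Only the `r x r` matrix travels between clients and server in each round, which is where the communication saving in this sketch comes from.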

Structure-preserving neural networks for the regularized entropy-based closure of the Boltzmann moment system

Apr 26, 2024

Abstract: The main challenge of large-scale numerical simulation of radiation transport is the high memory and computation time requirements of discretization methods for kinetic equations. In this work, we derive and investigate a neural network-based approximation to the entropy closure method to accurately compute the solution of the multi-dimensional moment system with a low memory footprint and competitive computational time. We extend methods developed for the standard entropy-based closure to the context of regularized entropy-based closures. The main idea is to interpret structure-preserving neural network approximations of the regularized entropy closure as a two-stage approximation to the original entropy closure. We conduct a numerical analysis of this approximation and investigate optimal parameter choices. Our numerical experiments demonstrate that the method has a much lower memory footprint than traditional methods with competitive computation times and simulation accuracy. The code and all trained networks are provided on GitHub at https://github.com/ScSteffen/neuralEntropyClosures and https://github.com/CSMMLab/KiT-RT.

Rank-adaptive spectral pruning of convolutional layers during training

May 30, 2023

Abstract: The computing cost and memory demand of deep learning pipelines have grown fast in recent years and thus a variety of pruning techniques have been developed to reduce model parameters. The majority of these techniques focus on reducing inference costs by pruning the network after a pass of full training. A smaller number of methods address the reduction of training costs, mostly based on compressing the network via low-rank layer factorizations. Despite their efficiency for linear layers, these methods fail to effectively handle convolutional filters. In this work, we propose a low-parametric training method that factorizes the convolutions into tensor Tucker format and adaptively prunes the Tucker ranks of the convolutional kernel during training. Leveraging fundamental results from geometric integration theory of differential equations on tensor manifolds, we obtain a robust training algorithm that provably approximates the full baseline performance and guarantees loss descent. A variety of experiments against the full model and alternative low-rank baselines are implemented, showing that the proposed method drastically reduces the training costs, while achieving high performance, comparable to or better than the full baseline, and consistently outperforms competing low-rank approaches.
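A Tucker factorization stores a 4-way convolution kernel as a small core tensor plus one factor matrix per mode. The following minimal NumPy sketch uses a truncated higher-order SVD to obtain such a factorization; it is not the rank-adaptive training algorithm from the paper, and the kernel shape and Tucker ranks are hypothetical.

```python
import numpy as np

def unfold(T, mode):
    """Matricize T so that axis `mode` indexes the rows."""
    return np.moveaxis(T, mode, 0).reshape(T.shape[mode], -1)

def mode_product(T, M, mode):
    """Multiply tensor T by matrix M along axis `mode`."""
    out = np.tensordot(M, T, axes=(1, mode))  # new axis lands in front
    return np.moveaxis(out, 0, mode)

def tucker_to_full(core, factors):
    """Expand a Tucker representation back into the dense tensor."""
    T = core
    for mode, U in enumerate(factors):
        T = mode_product(T, U, mode)
    return T

def hosvd(T, ranks):
    """Truncated higher-order SVD: one orthonormal factor per mode plus a core."""
    factors = []
    for mode, r in enumerate(ranks):
        U, _, _ = np.linalg.svd(unfold(T, mode), full_matrices=False)
        factors.append(U[:, :r])
    core = T
    for mode, U in enumerate(factors):
        core = mode_product(core, U.T, mode)
    return core, factors

rng = np.random.default_rng(0)
shape = (16, 8, 3, 3)   # (out channels, in channels, height, width)
ranks = (4, 3, 3, 3)    # hypothetical Tucker ranks

# Build a kernel that has exactly these Tucker ranks, then factorize it.
true_core = rng.standard_normal(ranks)
true_factors = [rng.standard_normal((n, r)) for n, r in zip(shape, ranks)]
kernel = tucker_to_full(true_core, true_factors)

core, factors = hosvd(kernel, ranks)
rel_err = (np.linalg.norm(tucker_to_full(core, factors) - kernel)
           / np.linalg.norm(kernel))

full_params = kernel.size
tucker_params = core.size + sum(U.size for U in factors)
print(rel_err, full_params, tucker_params)
```

For a trained kernel the Tucker ranks are not known in advance, so the truncation introduces an approximation error that depends on the ranks chosen for each mode.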

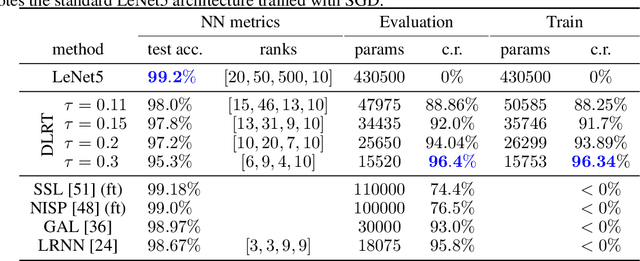

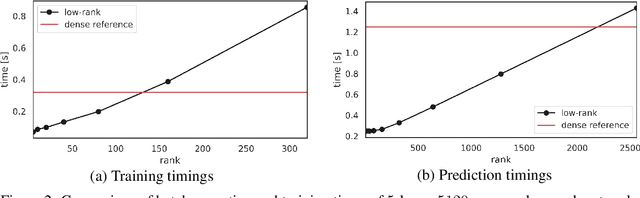

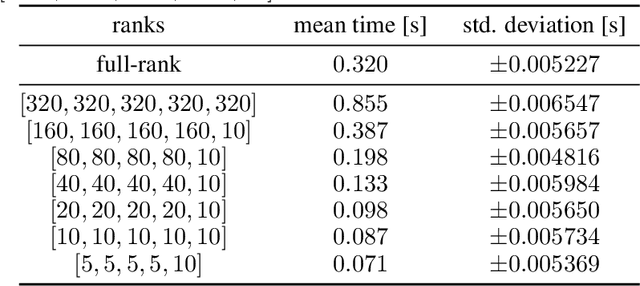

Low-rank lottery tickets: finding efficient low-rank neural networks via matrix differential equations

May 26, 2022

Abstract: Neural networks have achieved tremendous success in a large variety of applications. However, their memory footprint and computational demand can render them impractical in application settings with limited hardware or energy resources. In this work, we propose a novel algorithm to find efficient low-rank subnetworks. Remarkably, these subnetworks are determined and adapted already during the training phase, and the overall time and memory resources required for both training and evaluating them are significantly reduced. The main idea is to restrict the weight matrices to a low-rank manifold and to update the low-rank factors rather than the full matrix during training. To derive training updates that are restricted to the prescribed manifold, we employ techniques from dynamic model order reduction for matrix differential equations. Moreover, our method automatically and dynamically adapts the ranks during training to achieve a desired approximation accuracy. The efficiency of the proposed method is demonstrated through a variety of numerical experiments on fully-connected and convolutional networks.
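The rank adaptation to a desired approximation accuracy can be illustrated with a tolerance-based SVD truncation. This is a minimal NumPy sketch of that single step, assuming a relative Frobenius-norm tolerance `tol`; it does not reproduce the training updates derived from matrix differential equations, and the matrix sizes are hypothetical.

```python
import numpy as np

def truncate_rank(W, tol):
    """Return (U, s, Vt) of the smallest rank r >= 1 such that the discarded
    singular values satisfy sqrt(sum_{i>r} s_i^2) <= tol * ||W||_F."""
    U, s, Vt = np.linalg.svd(W, full_matrices=False)
    # tail[k] is the Frobenius norm of the part discarded when keeping rank k.
    tail = np.sqrt(np.cumsum(s[::-1] ** 2))[::-1]
    r = len(s)
    for k in range(1, len(s)):
        if tail[k] <= tol * tail[0]:
            r = k
            break
    return U[:, :r], s[:r], Vt[:r, :]

rng = np.random.default_rng(0)
m, n = 64, 32

# A weight matrix of exact rank 3, stored densely.
W = rng.standard_normal((m, 3)) @ rng.standard_normal((3, n))

U, s, Vt = truncate_rank(W, tol=1e-8)
r = len(s)
rel_err = np.linalg.norm(U @ np.diag(s) @ Vt - W) / np.linalg.norm(W)

dense_params = m * n
factored_params = r * (m + n) + r * r  # two bases plus an r x r core
print(r, rel_err, dense_params, factored_params)
```

During training, only the factors would be stored and updated, so both memory and compute scale with `r * (m + n)` rather than `m * n`.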
