Stefano Bragaglia

TrialGraph: Machine Intelligence Enabled Insight from Graph Modelling of Clinical Trials

Dec 15, 2021

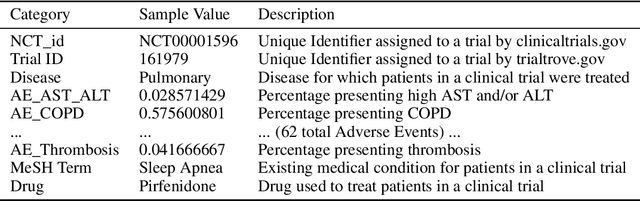

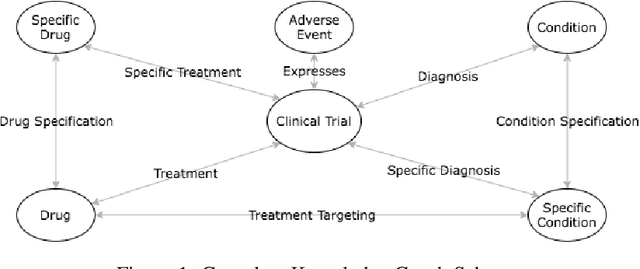

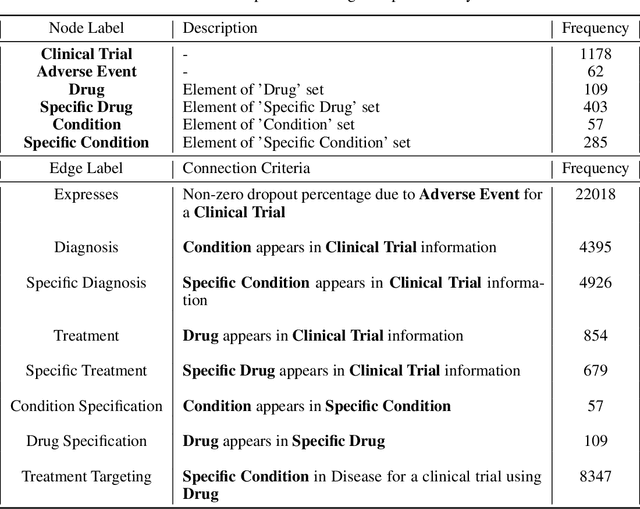

Abstract: A major impediment to successful drug development is the complexity, cost, and scale of clinical trials. The detailed internal structure of clinical trial data can make conventional optimization difficult to achieve. Recent advances in machine learning, specifically graph-structured data analysis, have the potential to enable significant progress in improving clinical trial design. TrialGraph seeks to apply these methodologies to produce a proof-of-concept framework for developing models which can aid drug development and benefit patients. In this work, we first introduce a curated clinical trial data set compiled from the CT.gov, AACT and TrialTrove databases (n=1191 trials; representing one million patients) and describe the conversion of this data to graph-structured formats. We then detail the mathematical basis and implementation of a selection of graph machine learning algorithms, which typically use standard machine learning classifiers on graph data embedded in a low-dimensional feature space. We trained these models to predict side effect information for a clinical trial given information on the disease, existing medical conditions, and treatment. The MetaPath2Vec algorithm performed exceptionally well, with standard Logistic Regression, Decision Tree, Random Forest, Support Vector, and Neural Network classifiers exhibiting typical ROC-AUC scores of 0.85, 0.68, 0.86, 0.80, and 0.77, respectively. Remarkably, the best performing classifiers could only produce typical ROC-AUC scores of 0.70 when trained on equivalent array-structured data. Our work demonstrates that graph modelling can significantly improve prediction accuracy on appropriate datasets. Successive versions of the project that refine modelling assumptions and incorporate more data types can produce excellent predictors with real-world applications in drug development.
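As a rough illustration of the walk-generation step that MetaPath2Vec-style embeddings rely on, the sketch below performs a random walk that only follows edges matching a given sequence of node types. The toy graph, the node names (`trial_A`, `drug_X`, `cond_Y`), the node types, and the function `metapath_walk` are all hypothetical and are not taken from the TrialGraph data set or code; in a full pipeline the resulting walks would typically be passed to a skip-gram model to obtain node embeddings, which is not shown here.

```python
import random
from collections import defaultdict

# Hypothetical toy graph: two trials sharing one drug and one condition.
edges = [
    ("trial_A", "drug_X"),
    ("trial_B", "drug_X"),
    ("trial_A", "cond_Y"),
    ("trial_B", "cond_Y"),
]
node_type = {
    "trial_A": "trial",
    "trial_B": "trial",
    "drug_X": "drug",
    "cond_Y": "condition",
}

# Undirected adjacency list.
adj = defaultdict(list)
for u, v in edges:
    adj[u].append(v)
    adj[v].append(u)


def metapath_walk(adj, node_type, start, metapath, length, rng):
    """Random walk that only steps to neighbours of the next type in the metapath.

    `metapath` is assumed to be cyclic (first type equals last type),
    e.g. ["trial", "drug", "trial"], and `start` must have the first type.
    """
    cycle = metapath[:-1]  # drop the repeated endpoint so the pattern can loop
    walk = [start]
    pos = 0
    while len(walk) < length:
        pos = (pos + 1) % len(cycle)
        wanted = cycle[pos]
        candidates = [n for n in adj[walk[-1]] if node_type[n] == wanted]
        if not candidates:  # dead end: no neighbour of the required type
            break
        walk.append(rng.choice(candidates))
    return walk


walk = metapath_walk(
    adj, node_type, "trial_A", ["trial", "drug", "trial"], 5, random.Random(0)
)
print(walk)
```

Constraining each step by node type is what distinguishes this from a plain random walk: the walk stays on semantically meaningful paths (here, trials linked through a shared drug) rather than drifting across arbitrary edges.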

Named Entity Disambiguation using Deep Learning on Graphs

Oct 22, 2018

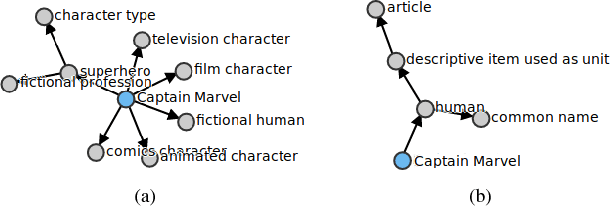

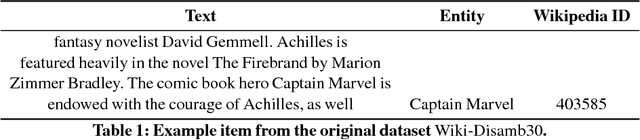

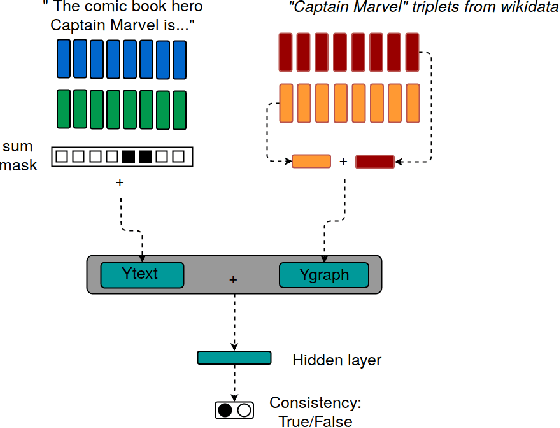

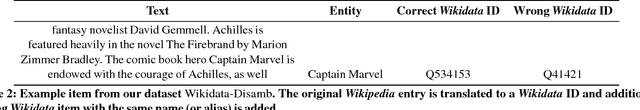

Abstract: We tackle Named Entity Disambiguation (NED) by comparing entities in short sentences with Wikidata graphs. Creating a context vector from graphs through deep learning is a challenging problem that has never been applied to NED. Our main contribution is to present an experimental study of recent neural techniques, as well as a discussion about which graph features are most important for the disambiguation task. In addition, a new dataset (Wikidata-Disamb) is created to allow a clean and scalable evaluation of NED with Wikidata entries, and to be used as a reference in future research. In the end, our results show that a Bi-LSTM encoding of the graph triplets performs best, improving upon the baseline models and scoring an F1 value of 91.6% on the Wikidata-Disamb test set.

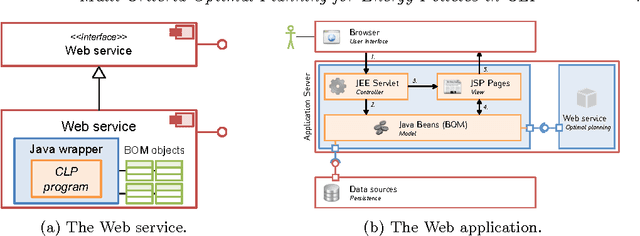

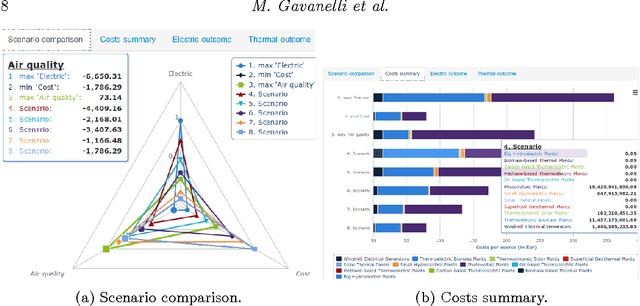

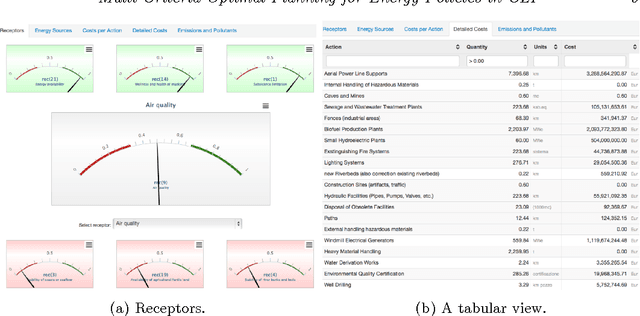

Multi-Criteria Optimal Planning for Energy Policies in CLP

May 15, 2014

Abstract: In the policy-making process, a number of disparate and diverse issues, such as economic development, environmental aspects, and the social acceptance of the policy, need to be considered. A single person might not have all the required expertise, and decision support systems featuring optimization components can help to assess policies. Building on previous work on Strategic Environmental Assessment, we developed a fully-fledged system that is able to provide optimal plans with respect to a given objective, to perform multi-objective optimization and provide sets of Pareto optimal plans, and to visually compare them. Each plan is environmentally assessed and its footprint is evaluated. The heart of the system is an application developed in a popular Constraint Logic Programming system on the Reals sort. It has been equipped with a web service module that can be queried through standard interfaces, and an intuitive graphical user interface.
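To make the notion of a set of Pareto optimal plans concrete, here is a minimal Python sketch that filters a handful of candidate plans down to the non-dominated ones. It is a brute-force illustration only, not the Constraint Logic Programming approach described in the abstract; the plan names, the two objectives (cost and emissions, both minimised), and the numbers are invented for the example.

```python
def dominates(a, b):
    """True if plan `a` is no worse than `b` on every objective and
    strictly better on at least one (all objectives are minimised)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(plans):
    """Return the plans that no other plan dominates."""
    return {
        name: scores
        for name, scores in plans.items()
        if not any(dominates(other, scores) for other in plans.values())
    }


# Hypothetical plans scored as (cost, emissions); lower is better for both.
plans = {
    "plan_a": (100.0, 8.0),
    "plan_b": (120.0, 5.0),
    "plan_c": (130.0, 9.0),  # worse than plan_a on both objectives
    "plan_d": (90.0, 12.0),
}

front = pareto_front(plans)
print(sorted(front))
```

Here `plan_c` is discarded because `plan_a` is both cheaper and cleaner, while the remaining three plans each represent a different trade-off that a decision maker would need to compare.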
