Stefanie Mohr

1-2-3-Go! Policy Synthesis for Parameterized Markov Decision Processes via Decision-Tree Learning and Generalization

Oct 23, 2024

Abstract:Despite the advances in probabilistic model checking, the scalability of the verification methods remains limited. In particular, the state space often becomes extremely large when instantiating parameterized Markov decision processes (MDPs) even with moderate values. Synthesizing policies for such huge MDPs is beyond the reach of available tools. We propose a learning-based approach to obtain a reasonable policy for such huge MDPs. The idea is to generalize optimal policies obtained by model-checking small instances to larger ones using decision-tree learning. Consequently, our method bypasses the need for explicit state-space exploration of large models, providing a practical solution to the state-space explosion problem. We demonstrate the efficacy of our approach by performing extensive experimentation on the relevant models from the quantitative verification benchmark set. The experimental results indicate that our policies perform well, even when the size of the model is orders of magnitude beyond the reach of state-of-the-art analysis tools.
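The generalization idea in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the queue model, the state features `(queue_length, server_busy)`, the action names, and the hand-written "optimal" policy table for the small instance are all hypothetical stand-ins for what a model checker would produce. The sketch fits a small decision tree to state-action pairs from a small instance and then queries it on a state from a much larger instance without exploring that instance.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a list of action labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(rows, labels):
    """Recursively build a tree of (feature, threshold, left, right) tuples; leaves are actions."""
    if len(set(labels)) == 1:
        return labels[0]
    best = None
    for f in range(len(rows[0])):
        # Dropping the largest value keeps both sides of every split non-empty.
        for t in sorted(set(r[f] for r in rows))[:-1]:
            left = [l for r, l in zip(rows, labels) if r[f] <= t]
            right = [l for r, l in zip(rows, labels) if r[f] > t]
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:
        # Identical states with conflicting actions: fall back to the majority action.
        return Counter(labels).most_common(1)[0][0]
    _, f, t = best
    left = [(r, l) for r, l in zip(rows, labels) if r[f] <= t]
    right = [(r, l) for r, l in zip(rows, labels) if r[f] > t]
    return (
        f,
        t,
        build_tree([r for r, _ in left], [l for _, l in left]),
        build_tree([r for r, _ in right], [l for _, l in right]),
    )


def predict(tree, state):
    """Follow threshold tests from the root until an action leaf is reached."""
    while isinstance(tree, tuple):
        f, t, left, right = tree
        tree = left if state[f] <= t else right
    return tree


# Hypothetical policy of a small instance (queue capacity 3):
# serve whenever the queue is non-empty, otherwise idle.
small_states = [(x, b) for x in range(4) for b in (0, 1)]
small_policy = ["idle" if x == 0 else "serve" for x, _ in small_states]

tree = build_tree(small_states, small_policy)

# Query a state from a far larger instance that was never explored.
action_large = predict(tree, (500, 1))
action_empty = predict(tree, (0, 1))
```

The learned tree only transfers if the state features mean the same thing in small and large instances, which is an assumption of this toy setup.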

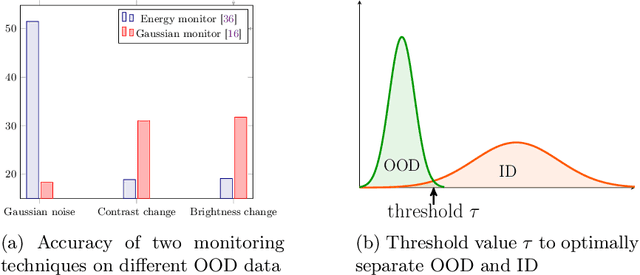

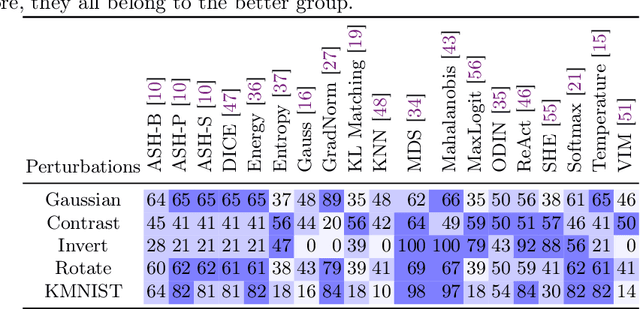

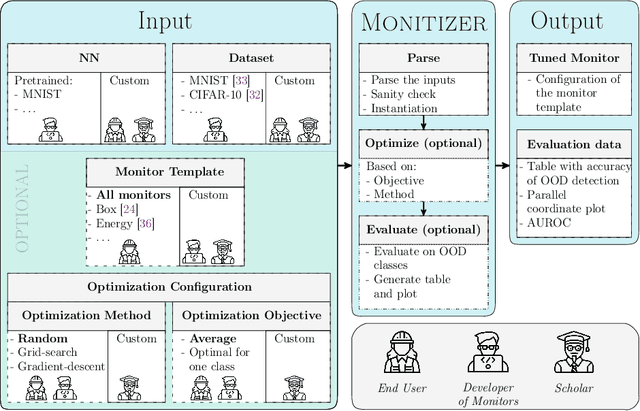

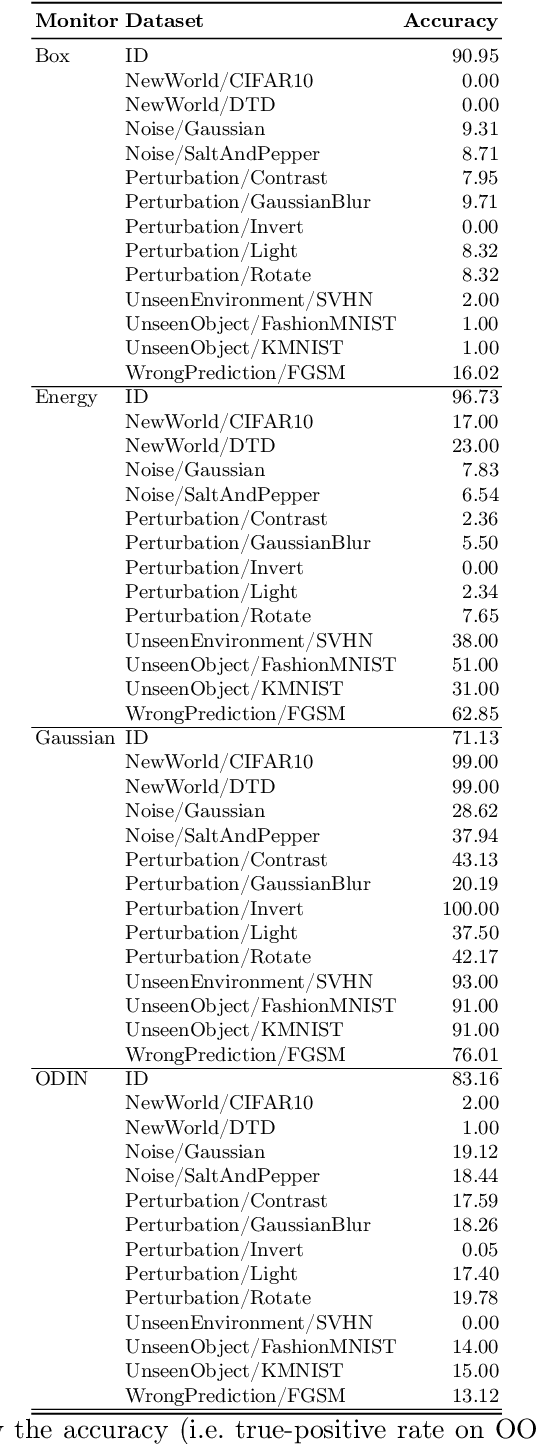

Monitizer: Automating Design and Evaluation of Neural Network Monitors

May 16, 2024

Abstract:The behavior of neural networks (NNs) on previously unseen types of data (out-of-distribution or OOD) is typically unpredictable. This can be dangerous if the network's output is used for decision-making in a safety-critical system. Hence, detecting that an input is OOD is crucial for the safe application of the NN. Verification approaches do not scale to practical NNs, making runtime monitoring more appealing for practical use. While various monitors have been suggested recently, their optimization for a given problem, as well as comparison with each other and reproduction of results, remain challenging. We present a tool for users and developers of NN monitors. It allows for (i) application of various types of monitors from the literature to a given input NN, (ii) optimization of the monitor's hyperparameters, and (iii) experimental evaluation and comparison to other approaches. Besides, it facilitates the development of new monitoring approaches. We demonstrate the tool's usability on several use cases of different types of users as well as on a case study comparing different approaches from recent literature.

Learning Explainable and Better Performing Representations of POMDP Strategies

Jan 20, 2024

Abstract:Strategies for partially observable Markov decision processes (POMDPs) typically require memory. One way to represent this memory is via automata. We present a method to learn an automaton representation of a strategy using a modification of the L*-algorithm. Compared to the tabular representation of a strategy, the resulting automaton is dramatically smaller and thus also more explainable. Moreover, in the learning process, our heuristics may even improve the strategy's performance. In contrast to approaches that synthesize an automaton directly from the POMDP, thereby solving it, our approach is incomparably more scalable.

Syntactic vs Semantic Linear Abstraction and Refinement of Neural Networks

Jul 20, 2023

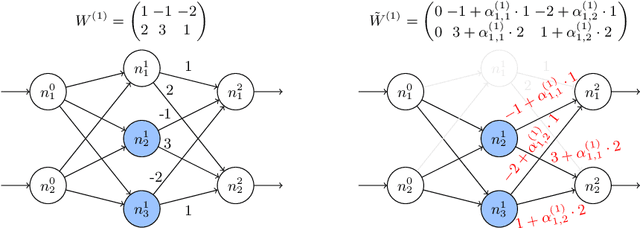

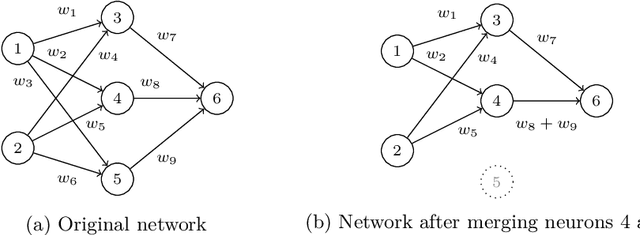

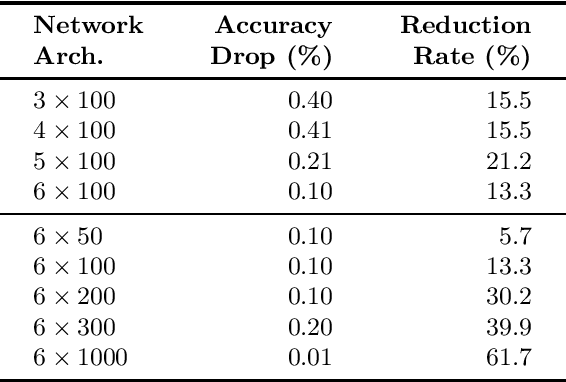

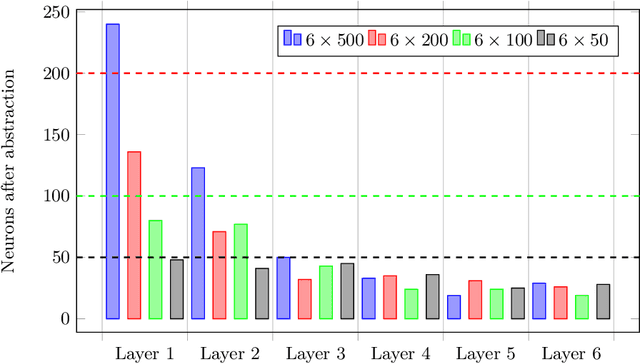

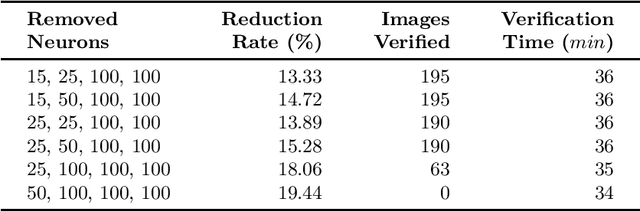

Abstract:Abstraction is a key verification technique to improve scalability. However, its use for neural networks is so far extremely limited. Previous approaches for abstracting classification networks replace several neurons with one of them that is similar enough. We can classify the similarity as defined either syntactically (using quantities on the connections between neurons) or semantically (on the activation values of neurons for various inputs). Unfortunately, the previous approaches only achieve moderate reductions, when implemented at all. In this work, we provide a more flexible framework where a neuron can be replaced with a linear combination of other neurons, improving the reduction. We apply this approach to both syntactic and semantic abstractions, and implement and evaluate them experimentally. Further, we introduce a refinement method for our abstractions, allowing for finding a better balance between reduction and precision.
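As a rough illustration of the semantic variant, the sketch below replaces one neuron by a linear combination of two others. It makes several assumptions that are not from the paper: the activation values are made-up samples, the coefficients are found by ordinary least squares over those samples, and only two basis neurons are used so the normal equations can be solved by hand. The function names are hypothetical.

```python
def fit_two(h1, h2, target):
    """Least-squares coefficients (a, b) minimizing ||a*h1 + b*h2 - target||^2."""
    s11 = sum(x * x for x in h1)
    s12 = sum(x * y for x, y in zip(h1, h2))
    s22 = sum(y * y for y in h2)
    t1 = sum(x * t for x, t in zip(h1, target))
    t2 = sum(y * t for y, t in zip(h2, target))
    det = s11 * s22 - s12 * s12
    if abs(det) < 1e-12:
        raise ValueError("basis activations are linearly dependent")
    # Cramer's rule on the 2x2 normal equations.
    a = (t1 * s22 - t2 * s12) / det
    b = (s11 * t2 - s12 * t1) / det
    return a, b


def fold_outgoing(w_out_1, w_out_2, w_out_target, a, b):
    """Remove the target neuron by redistributing its outgoing weight onto the basis neurons."""
    return w_out_1 + a * w_out_target, w_out_2 + b * w_out_target


# Made-up activation values of three neurons on four sample inputs.
h1 = [1.0, 0.0, 2.0, 1.0]
h2 = [0.0, 1.0, 1.0, 3.0]
h3 = [2.0, 0.5, 4.5, 3.5]  # here exactly 2*h1 + 0.5*h2

a, b = fit_two(h1, h2, h3)

# Outgoing weights of the three neurons into one next-layer neuron.
new_w1, new_w2 = fold_outgoing(1.0, -1.0, 3.0, a, b)
```

In this toy case the third neuron is an exact combination of the other two, so removing it changes nothing on the sampled inputs; in general the fit is approximate and the abstraction introduces error.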

Assessment of Neural Networks for Stream-Water-Temperature Prediction

Oct 08, 2021

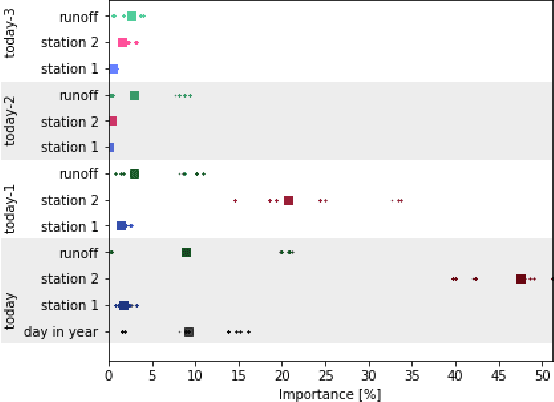

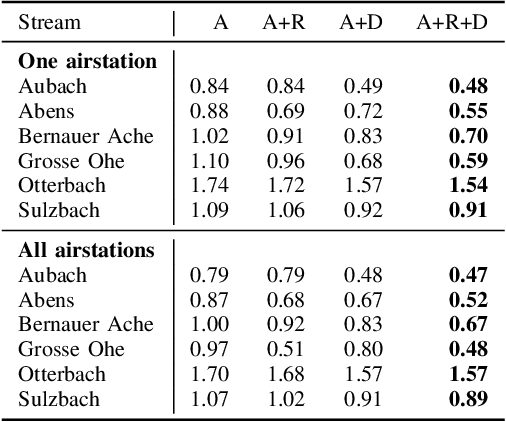

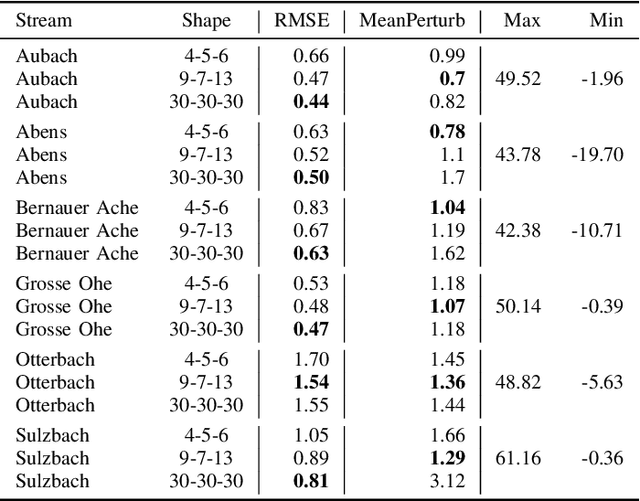

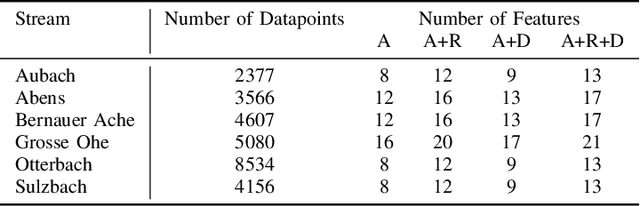

Abstract:Climate change results in altered air and water temperatures. Increases affect physicochemical properties, such as oxygen concentration, and can shift species distribution and survival, with consequences for ecosystem functioning and services. These ecosystem services have integral value for humankind and are forecast to change under climate warming. A mechanistic understanding of the drivers and magnitude of expected changes is essential in identifying system resilience and mitigation measures. In this work, we present a selection of state-of-the-art Neural Networks (NN) for the prediction of water temperatures in six streams in Germany. We show that the use of methods that compare observed and predicted values, exemplified with the Root Mean Square Error (RMSE), is not sufficient for their assessment. Hence we introduce additional analysis methods for our models to complement the state-of-the-art metrics. These analyses evaluate the NN's robustness, possible maximal and minimal values, and the impact of single input parameters on the output. We thus contribute to understanding the processes within the NN and help practitioners choose architectures and input parameters for reliable water temperature prediction models.
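A small sketch of why an aggregate error metric alone may hide differences between models: the temperature values and the two predictors below are invented for illustration and are not data from the paper. Both predictors have the same RMSE, yet one is off by a constant amount and the other has a single larger miss.

```python
import math


def rmse(observed, predicted):
    """Root mean square error between two equally long sequences."""
    if len(observed) != len(predicted) or not observed:
        raise ValueError("sequences must be non-empty and of equal length")
    return math.sqrt(
        sum((o - p) ** 2 for o, p in zip(observed, predicted)) / len(observed)
    )


def max_abs_error(observed, predicted):
    """Largest absolute deviation between observation and prediction."""
    return max(abs(o - p) for o, p in zip(observed, predicted))


# Invented stream temperatures in degrees Celsius.
observed = [10.0, 12.0, 14.0, 16.0]
pred_a = [11.0, 13.0, 15.0, 17.0]  # constant bias of +1
pred_b = [10.0, 12.0, 14.0, 18.0]  # perfect except for one miss of +2

rmse_a = rmse(observed, pred_a)
rmse_b = rmse(observed, pred_b)
worst_a = max_abs_error(observed, pred_a)
worst_b = max_abs_error(observed, pred_b)
```

The maximum absolute error is only one possible complementary check; the abstract's robustness and input-sensitivity analyses are not reproduced here.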

DeepAbstract: Neural Network Abstraction for Accelerating Verification

Jun 24, 2020

Abstract:While abstraction is a classic tool of verification to scale it up, it is not used very often for verifying neural networks. However, it can help with the still open task of scaling existing algorithms to state-of-the-art network architectures. We introduce an abstraction framework applicable to fully-connected feed-forward neural networks based on clustering of neurons that behave similarly on some inputs. For the particular case of ReLU, we additionally provide error bounds incurred by the abstraction. We show how the abstraction reduces the size of the network, while preserving its accuracy, and how verification results on the abstract network can be transferred back to the original network.
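The clustering idea can be sketched for a single hidden ReLU layer with one output. This is a simplified illustration, not the paper's algorithm: it uses a greedy threshold-based grouping instead of a standard clustering method, keeps the first neuron of each group as its representative, sums the outgoing weights of the merged neurons, and works on invented weights and inputs. All function names are hypothetical.

```python
def relu(x):
    return max(0.0, x)


def hidden_activations(w_in, bias, inputs):
    """For each hidden neuron, its ReLU activation on every sample input."""
    return [
        [relu(sum(w * x for w, x in zip(w_in[j], inp)) + bias[j]) for inp in inputs]
        for j in range(len(w_in))
    ]


def greedy_clusters(acts, eps):
    """Group neurons whose activations differ by at most eps from a group's first member."""
    clusters = []
    for j, a in enumerate(acts):
        for c in clusters:
            rep = acts[c[0]]
            if max(abs(x - y) for x, y in zip(a, rep)) <= eps:
                c.append(j)
                break
        else:
            clusters.append([j])
    return clusters


def abstract_layer(w_in, bias, w_out, clusters):
    """Keep each representative's incoming weights and sum the group's outgoing weights."""
    new_w_in = [w_in[c[0]] for c in clusters]
    new_bias = [bias[c[0]] for c in clusters]
    new_w_out = [sum(w_out[j] for j in c) for c in clusters]
    return new_w_in, new_bias, new_w_out


def forward(w_in, bias, w_out, inp):
    """Output of the one-hidden-layer network on a single input."""
    return sum(
        wo * relu(sum(w * x for w, x in zip(wi, inp)) + bj)
        for wi, bj, wo in zip(w_in, bias, w_out)
    )


# Invented network: neurons 0 and 1 behave identically.
w_in = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0]]
bias = [0.0, 0.0, 0.0]
w_out = [0.5, 0.25, 1.0]
samples = [[1.0, 2.0], [0.5, -1.0], [2.0, 0.0]]

clusters = greedy_clusters(hidden_activations(w_in, bias, samples), eps=1e-6)
a_w_in, a_bias, a_w_out = abstract_layer(w_in, bias, w_out, clusters)

original_out = forward(w_in, bias, w_out, [1.0, 2.0])
abstract_out = forward(a_w_in, a_bias, a_w_out, [1.0, 2.0])
```

Because the merged neurons here are exact duplicates, the abstract network reproduces the original output; with merely similar neurons the outputs would differ, which is where error bounds such as those mentioned in the abstract become relevant.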
