Ssu-Rui Lee

Flexible Multiple-Objective Reinforcement Learning for Chip Placement

Apr 13, 2022

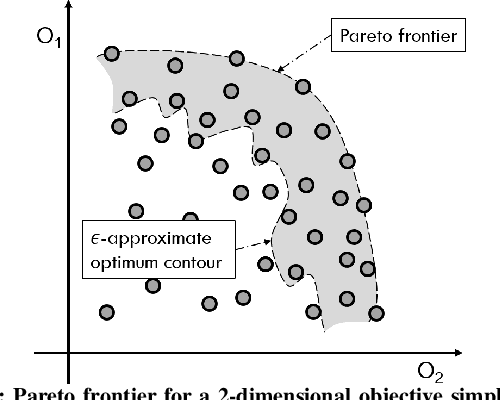

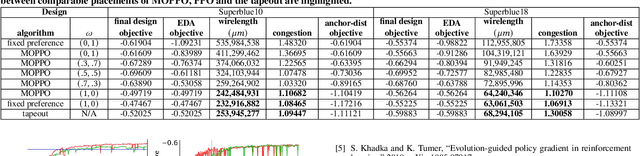

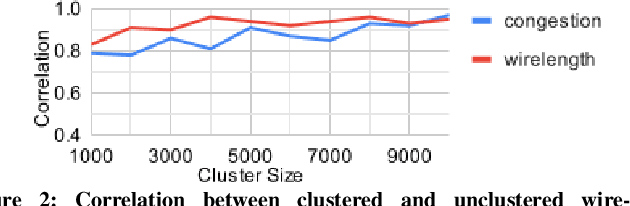

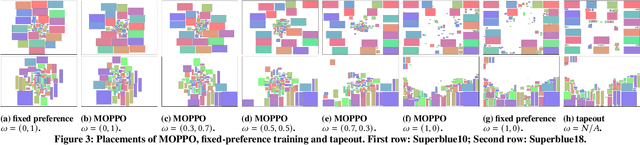

Abstract: Recently, successful applications of reinforcement learning to chip placement have emerged. Pretrained models are necessary to improve efficiency and effectiveness. Currently, the weights of objective metrics (e.g., wirelength, congestion, and timing) are fixed during pretraining. However, fixed-weight models cannot generate the diversity of placements that engineers need to accommodate changing requirements as they arise. This paper proposes flexible multiple-objective reinforcement learning (MORL) to support objective functions with inference-time variable weights using just a single pretrained model. Our macro placement results show that MORL can generate the Pareto frontier of multiple objectives effectively.
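To make the terms "variable weights" and "Pareto frontier" concrete, here is a minimal sketch, not the paper's method. It assumes each candidate placement is summarized by a tuple of cost metrics where lower is better, and that objectives are combined by a plain weighted sum; the function names `scalarize`, `dominates`, and `pareto_front` and the sample numbers are hypothetical.

```python
from typing import List, Sequence, Tuple

Metrics = Tuple[float, ...]  # e.g. (wirelength, congestion); lower is better


def scalarize(metrics: Sequence[float], weights: Sequence[float]) -> float:
    """Combine cost metrics into one score with a weighted sum (an assumption)."""
    if len(metrics) != len(weights):
        raise ValueError("metrics and weights must have the same length")
    return sum(w * m for w, m in zip(weights, metrics))


def dominates(a: Metrics, b: Metrics) -> bool:
    """True if a is no worse than b on every metric and strictly better on one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(points: List[Metrics]) -> List[Metrics]:
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (wirelength, congestion) costs for four candidate placements.
placements = [(1.0, 5.0), (2.0, 3.0), (3.0, 4.0), (4.0, 1.0)]
front = pareto_front(placements)

# Different weight vectors select different points from the same candidate set.
best_wirelength = min(placements, key=lambda p: scalarize(p, (0.9, 0.1)))
best_congestion = min(placements, key=lambda p: scalarize(p, (0.1, 0.9)))
```

In this toy data, changing only the weight vector moves the preferred placement along the frontier, which is the kind of flexibility the abstract describes obtaining from a single pretrained model.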

EmotionX-IDEA: Emotion BERT -- an Affectional Model for Conversation

Aug 17, 2019

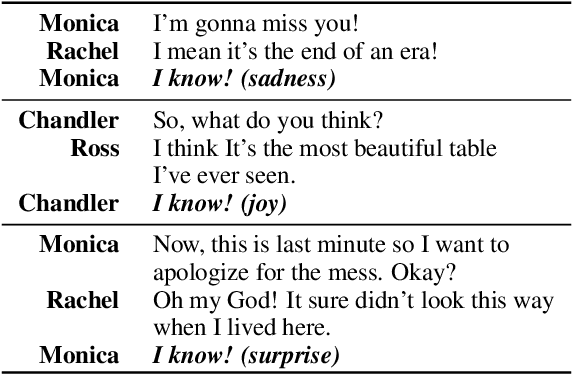

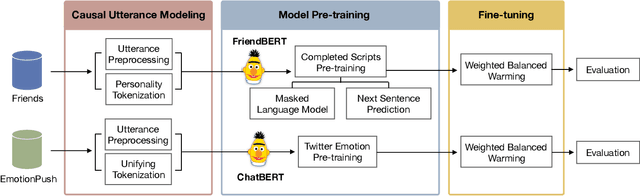

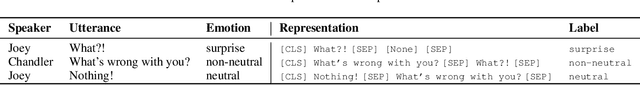

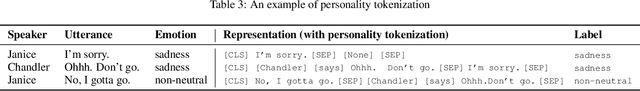

Abstract: In this paper, we investigate the emotion recognition ability of the pre-trained language model BERT. Exploiting BERT's two-sentence input structure, we adapt it to continuous dialogue emotion prediction tasks, which rely heavily on sentence-level, context-aware understanding. The experiments show that by mapping the continuous dialogue into causal utterance pairs, each constructed from an utterance and its reply utterance, models can better capture the emotions of the reply utterance. The present method achieves micro F1 scores of 0.815 and 0.885 on the test sets of Friends and EmotionPush, respectively.
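The pairing step described above can be sketched as follows. This is an illustration under stated assumptions rather than the authors' code: the helper `build_causal_pairs` is hypothetical, the first utterance of a dialogue is simply skipped because it has no preceding utterance, and the sample dialogue and labels are invented.

```python
from typing import List, Tuple


def build_causal_pairs(
    utterances: List[str], emotions: List[str]
) -> List[Tuple[str, str, str]]:
    """Turn a dialogue into (utterance, reply, emotion_of_reply) triples.

    Each reply is paired with the utterance immediately before it, and the
    label is the emotion of the reply. Skipping the first utterance is an
    assumption made for this sketch.
    """
    if len(utterances) != len(emotions):
        raise ValueError("each utterance needs exactly one emotion label")
    pairs = []
    for i in range(1, len(utterances)):
        pairs.append((utterances[i - 1], utterances[i], emotions[i]))
    return pairs


# Invented three-turn dialogue with invented labels.
dialogue = ["I lost my keys.", "Oh no, again?", "Found them, never mind!"]
labels = ["sadness", "surprise", "joy"]
pairs = build_causal_pairs(dialogue, labels)
```

Each triple maps naturally onto a two-segment input, with the utterance as the first segment and the reply as the second, so a sentence-pair classifier can predict the reply's emotion.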

Leveraging Linguistic Characteristics for Bipolar Disorder Recognition with Gender Differences

Jul 17, 2019

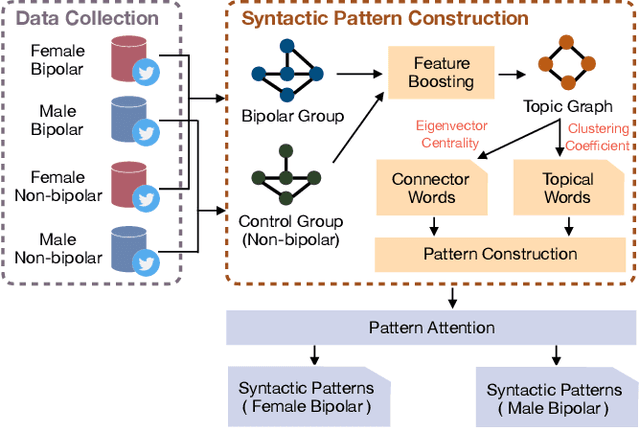

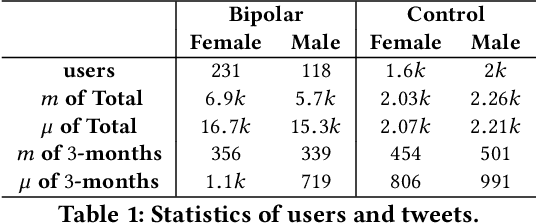

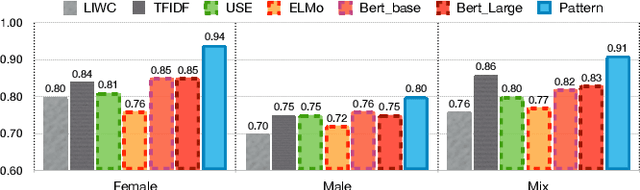

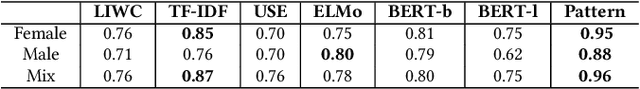

Abstract: Most previous studies on automatic recognition models for bipolar disorder (BD) were based on both social media and linguistic features. The present study investigates the possibility of adopting only language-based features, namely syntax and morpheme collocation. We also examine the effect of gender on the results, since gender has long been recognized as an important modulating factor for mental disorders yet has received little attention in previous linguistic models. The present study collects Twitter posts from the 3 months prior to self-disclosure by 349 BD users (231 female, 118 male). We construct a set of syntactic patterns in terms of word usage, based on graph pattern construction and a pattern attention mechanism. The factors examined are gender differences, syntactic patterns, and bipolar recognition performance. Our F1 scores exceed 91% and outperform several baselines, including those using TF-IDF, LIWC, and pre-trained language models (ELMo and BERT). The contributions of the present study are: (1) The features are contextualized, domain-agnostic, and purely linguistic. (2) The performance of BD recognition is improved by gender-enriched linguistic pattern features, which are constructed with gender differences in language usage.
