Sophie Fischer

Medical Imaging AI Competitions Lack Fairness

Dec 19, 2025

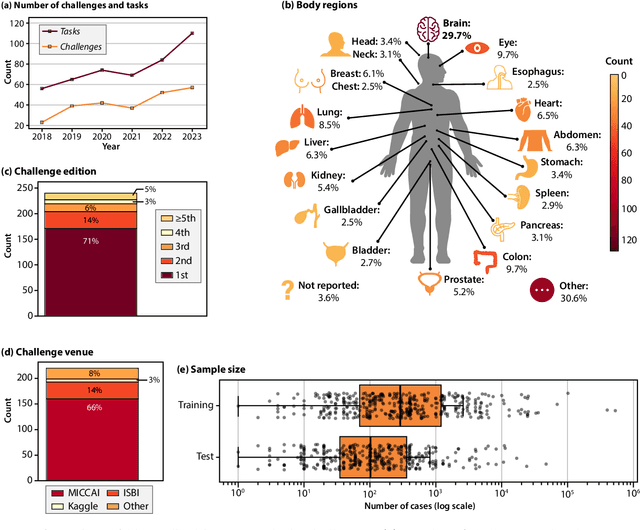

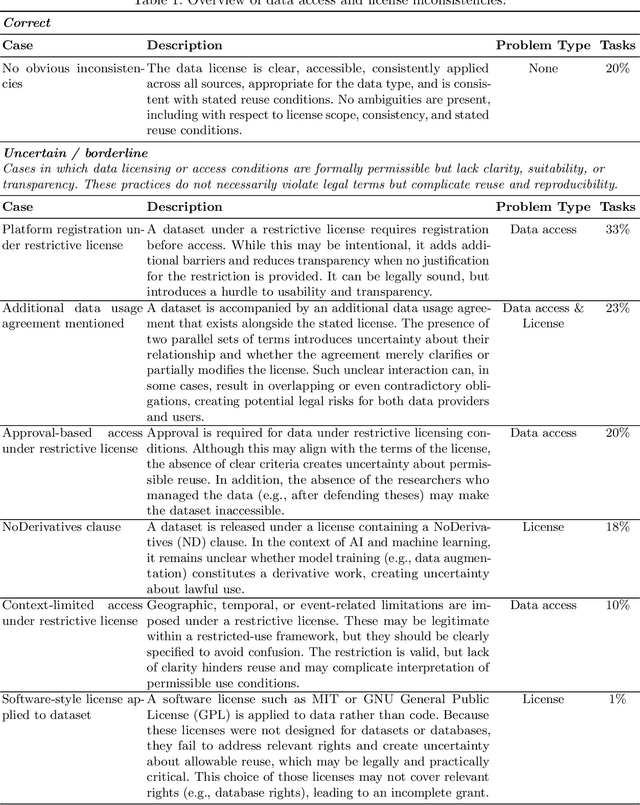

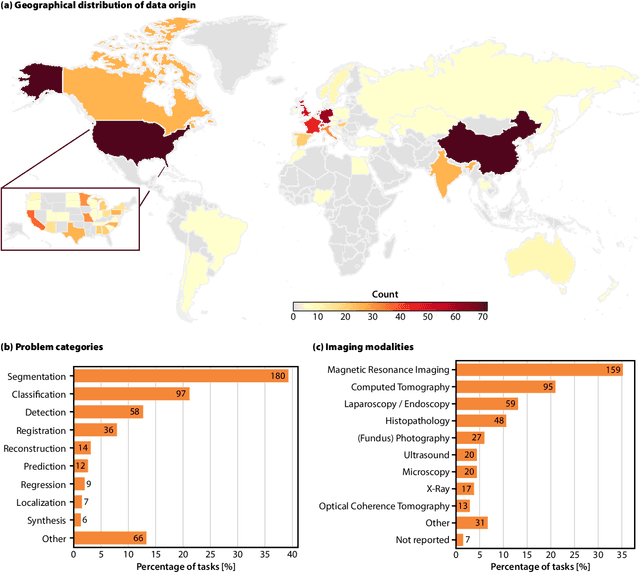

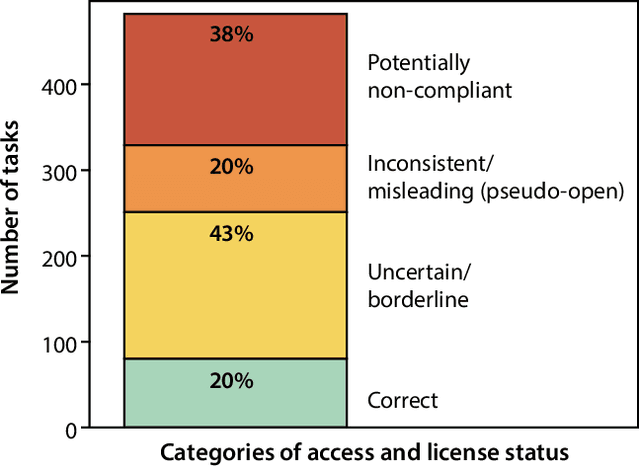

Abstract: Benchmarking competitions are central to the development of artificial intelligence (AI) in medical imaging, defining performance standards and shaping methodological progress. However, it remains unclear whether these benchmarks provide data that are sufficiently representative, accessible, and reusable to support clinically meaningful AI. In this work, we assess fairness along two complementary dimensions: (1) whether challenge datasets are representative of real-world clinical diversity, and (2) whether they are accessible and legally reusable in line with the FAIR principles. To address this question, we conducted a large-scale systematic study of 241 biomedical image analysis challenges comprising 458 tasks across 19 imaging modalities. Our findings show substantial biases in dataset composition, including biases related to geographic location, modality, and problem type, indicating that current benchmarks do not adequately reflect real-world clinical diversity. Despite their widespread influence, challenge datasets were frequently constrained by restrictive or ambiguous access conditions, inconsistent or non-compliant licensing practices, and incomplete documentation, limiting reproducibility and long-term reuse. Together, these shortcomings expose foundational fairness limitations in our benchmarking ecosystem and highlight a disconnect between leaderboard success and clinical relevance.

Open Assistant Toolkit -- version 2

Mar 01, 2024

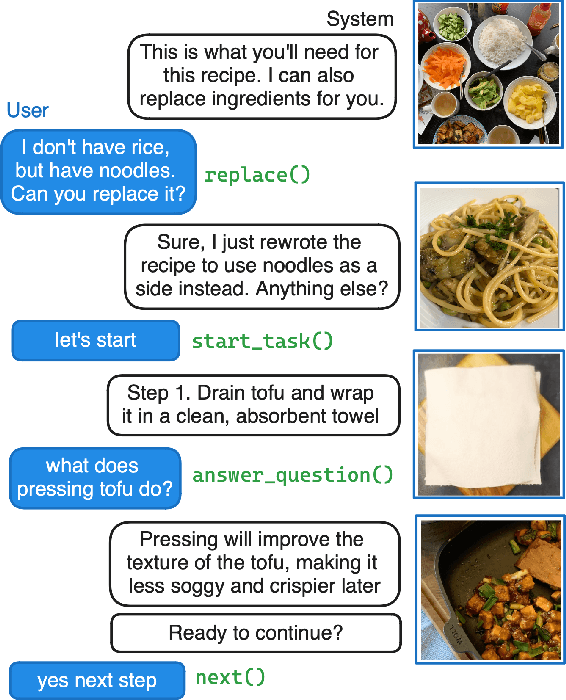

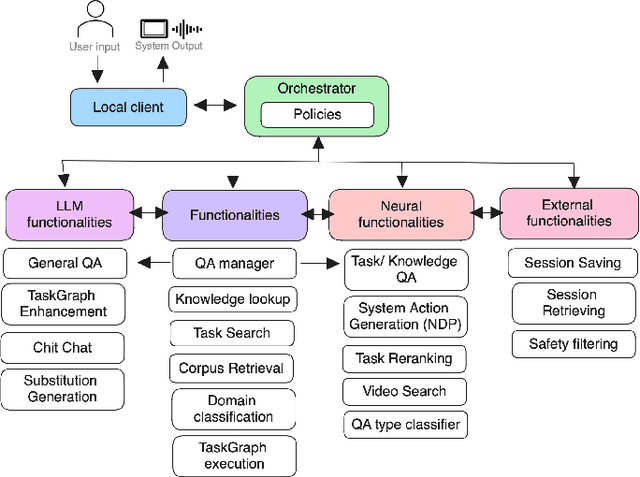

Abstract: We present the second version of the Open Assistant Toolkit (OAT-v2), an open-source task-oriented conversational system for composing generative neural models. OAT-v2 is a scalable and flexible assistant platform supporting multiple domains and modalities of user interaction. It splits the processing of a user utterance into modular system components, including submodules such as action code generation, multimodal content retrieval, and knowledge-augmented response generation. Developed over multiple years of the Alexa TaskBot challenge, OAT-v2 is a proven system that enables scalable and robust experimentation in both experimental and real-world deployments. OAT-v2 provides open models and software for research and commercial applications to enable the future of multimodal virtual assistants across diverse applications and types of rich interaction.

GRILLBot In Practice: Lessons and Tradeoffs Deploying Large Language Models for Adaptable Conversational Task Assistants

Feb 12, 2024

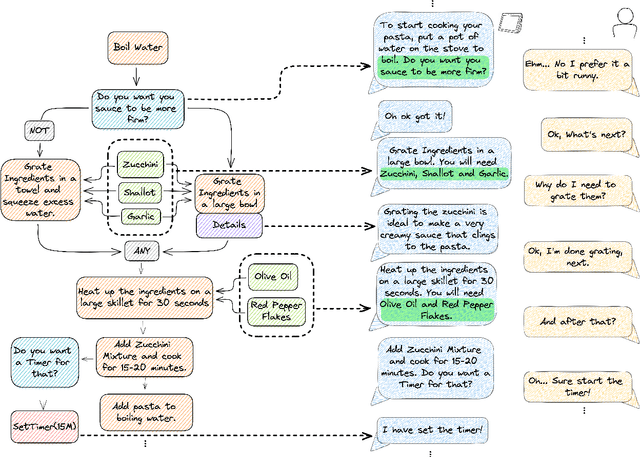

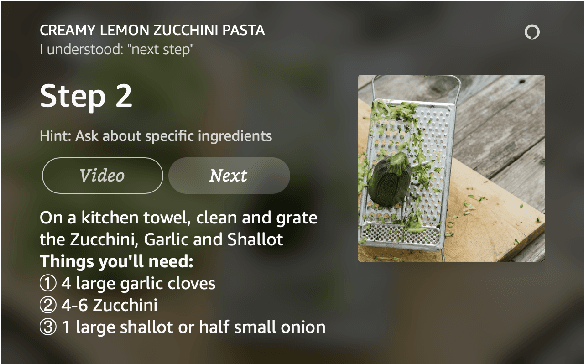

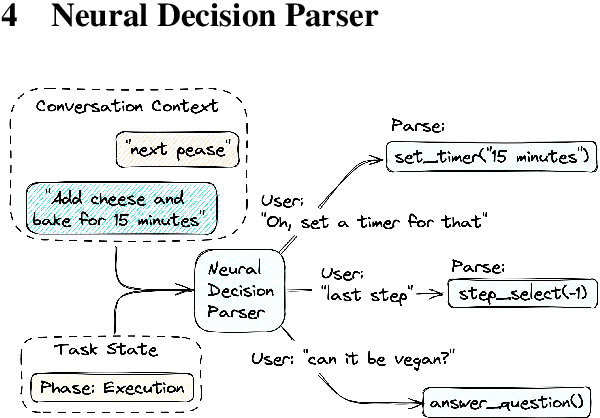

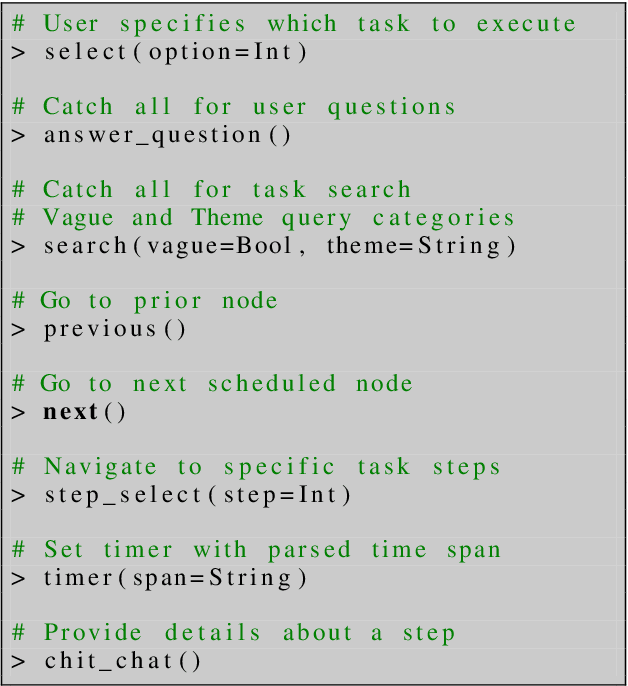

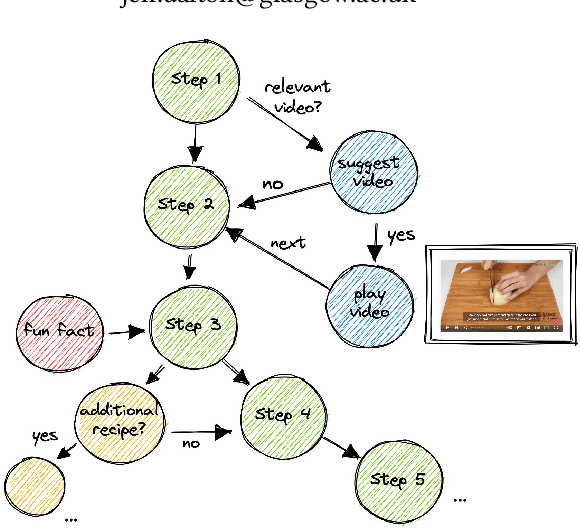

Abstract: We tackle the challenge of building multimodal assistants for complex real-world tasks. We describe the practicalities and challenges of developing and deploying GRILLBot, a leading (first and second prize winning in 2022 and 2023) system deployed in the Alexa Prize TaskBot Challenge. Building on our Open Assistant Toolkit (OAT) framework, we propose a hybrid architecture that leverages Large Language Models (LLMs) and specialised models tuned for specific subtasks requiring very low latency. OAT allows us to define when, how and which LLMs should be used in a structured and deployable manner. For knowledge-grounded question answering and live task adaptations, we show that LLM reasoning abilities over task context and world knowledge outweigh latency concerns. For dialogue state management, we implement a code generation approach and show that specialised smaller models achieve 84% effectiveness with 100x lower latency. Overall, we provide insights and discuss tradeoffs for deploying both traditional models and LLMs to users in complex real-world multimodal environments in the Alexa TaskBot challenge. These experiences will continue to evolve as LLMs become more capable and efficient -- fundamentally reshaping OAT and future assistant architectures.

GRILLBot: An Assistant for Real-World Tasks with Neural Semantic Parsing and Graph-Based Representations

Aug 31, 2022

Abstract: GRILLBot is the winning system in the 2022 Alexa Prize TaskBot Challenge, moving towards the next generation of multimodal task assistants. It is a voice assistant to guide users through complex real-world tasks in the domains of cooking and home improvement. These are long-running and complex tasks that require flexible adjustment and adaptation. The demo highlights the core aspects, including a novel Neural Decision Parser for contextualized semantic parsing, a new "TaskGraph" state representation that supports conditional execution, knowledge-grounded chit-chat, and automatic enrichment of tasks with images and videos.

VILT: Video Instructions Linking for Complex Tasks

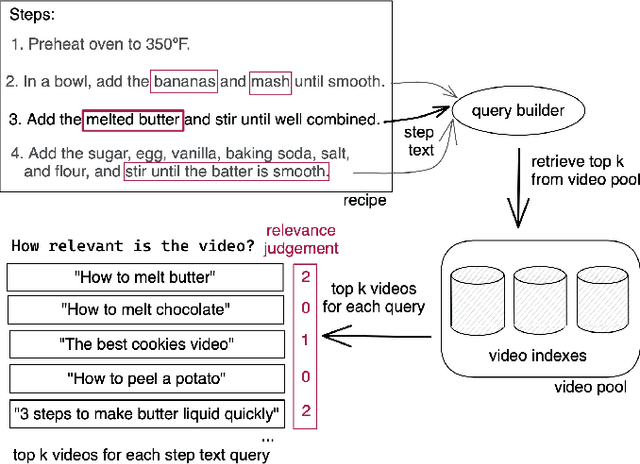

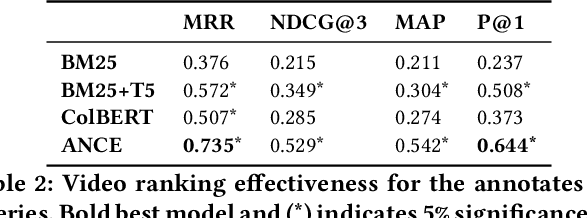

Aug 23, 2022

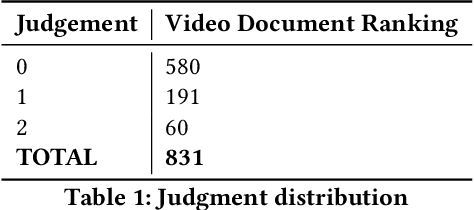

Abstract: This work addresses challenges in developing conversational assistants that support rich multimodal video interactions to accomplish real-world tasks interactively. We introduce the task of automatically linking instructional videos to task steps as "Video Instructions Linking for Complex Tasks" (VILT). Specifically, we focus on the domain of cooking and empowering users to cook meals interactively with a video-enabled Alexa skill. We create a reusable benchmark with 61 queries from recipe tasks and curate a collection of 2,133 instructional "How-To" cooking videos. Studying VILT with state-of-the-art retrieval methods, we find that dense retrieval with ANCE is the most effective, achieving an NDCG@3 of 0.566 and P@1 of 0.644. We also conduct a user study that measures the effect of incorporating videos in a real-world task setting, where 10 participants perform several cooking tasks with varying multimodal experimental conditions using a state-of-the-art Alexa TaskBot system. The users interacting with manually linked videos said they learned something new 64% of the time, 9 percentage points more than with the automatically linked videos (55%), indicating that linked video relevance is important for task learning.
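For readers unfamiliar with the two retrieval metrics quoted above, the following is a minimal sketch of how P@1 and NDCG@k are commonly computed for a single query. It is not taken from the VILT paper: the function names, the linear gain (some evaluations use an exponential gain of 2^rel - 1 instead), and the example relevance grades are all assumptions for illustration. Reported scores such as NDCG@3 are typically the mean of the per-query values over all benchmark queries.

```python
import math


def precision_at_1(ranked_grades):
    """Return 1.0 if the top-ranked item is relevant (grade > 0), else 0.0."""
    return 1.0 if ranked_grades and ranked_grades[0] > 0 else 0.0


def dcg_at_k(grades, k):
    """Discounted cumulative gain over the first k grades (linear gain, assumed)."""
    return sum(
        grade / math.log2(rank + 1)
        for rank, grade in enumerate(grades[:k], start=1)
    )


def ndcg_at_k(ranked_grades, judged_grades, k):
    """Normalise DCG@k by the DCG@k of an ideal ordering of all judged items."""
    ideal = dcg_at_k(sorted(judged_grades, reverse=True), k)
    return dcg_at_k(ranked_grades, k) / ideal if ideal > 0 else 0.0


# Hypothetical example: grades of the videos a system returned for one query,
# in ranked order, and the grades of every judged video for that query.
ranked = [0, 2, 1]
judged = [2, 1, 0, 0]

p_at_1 = precision_at_1(ranked)
ndcg_at_3 = ndcg_at_k(ranked, judged, k=3)
print(f"P@1 = {p_at_1:.1f}, NDCG@3 = {ndcg_at_3:.3f}")
```

Because the ideal ordering is built from all judged items for the query, a system that misses a highly relevant video is penalised even if everything it did return is ranked sensibly.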

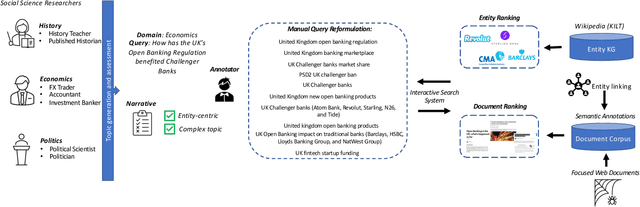

CODEC: Complex Document and Entity Collection

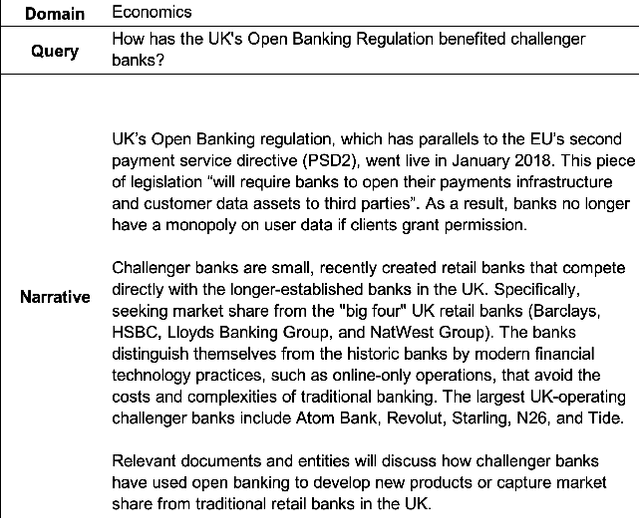

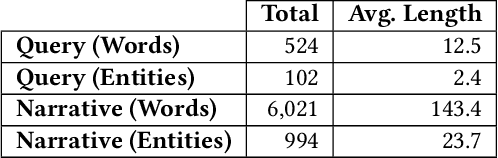

May 17, 2022

Abstract: CODEC is a document and entity ranking benchmark that focuses on complex research topics. We target essay-style information needs of social science researchers, e.g. "How has the UK's Open Banking Regulation benefited Challenger Banks?". CODEC includes 42 topics developed by researchers and a new focused web corpus with semantic annotations including entity links. This resource includes expert judgments on 17,509 documents and entities (416.9 per topic) from diverse automatic and interactive manual runs. The manual runs include 387 query reformulations, providing data for query performance prediction and automatic rewriting evaluation. CODEC includes analysis of state-of-the-art systems, including dense retrieval and neural re-ranking. The results show the topics are challenging with headroom for document and entity ranking improvement. Query expansion with entity information shows significant gains in document ranking, demonstrating the resource's value for evaluating and improving entity-oriented search. We also show that the manual query reformulations significantly improve document ranking and entity ranking performance. Overall, CODEC provides challenging research topics to support the development and evaluation of entity-centric search methods.
