Sophie Burkhardt

Focusing Knowledge-based Graph Argument Mining via Topic Modeling

Feb 03, 2021

Abstract: Decision-making usually takes five steps: identifying the problem, collecting data, extracting evidence, identifying pro and con arguments, and making decisions. Focusing on extracting evidence, this paper presents a hybrid model that combines latent Dirichlet allocation and word embeddings to obtain external knowledge from structured and unstructured data. We study the task of sentence-level argument mining, as arguments mostly require some degree of world knowledge to be identified and understood. Given a topic and a sentence, the goal is to classify whether a sentence represents an argument in regard to the topic. We use a topic model to extract topic- and sentence-specific evidence from the structured knowledge base Wikidata, building a graph based on the cosine similarity between the entity word vectors of Wikidata and the vector of the given sentence. Also, we build a second graph based on topic-specific articles found via Google to tackle the general incompleteness of structured knowledge bases. Combining these graphs, we obtain a graph-based model which, as our evaluation shows, successfully capitalizes on both structured and unstructured data.
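The similarity-based graph construction mentioned in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation: the function names, the toy three-dimensional vectors, the entity labels, and the `threshold` parameter are all assumptions made for illustration, and the topic-model step is omitted.

```python
import math

def cosine(u, v):
    """Cosine similarity of two equal-length vectors; 0.0 if either is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def build_similarity_graph(sentence_vec, entity_vecs, threshold=0.5):
    """Link the sentence to every entity whose vector is similar enough.

    Returns a dict mapping entity name -> edge weight (the cosine similarity).
    The threshold is a hypothetical cut-off, not a value from the paper.
    """
    edges = {}
    for name, vec in entity_vecs.items():
        sim = cosine(sentence_vec, vec)
        if sim >= threshold:
            edges[name] = sim
    return edges

# Toy data (made up): one sentence vector and three entity vectors.
sentence_vec = [1.0, 0.0, 1.0]
entity_vecs = {
    "nuclear power": [1.0, 0.0, 1.0],
    "energy": [1.0, 1.0, 1.0],
    "banana": [0.0, 1.0, 0.0],
}
edges = build_similarity_graph(sentence_vec, entity_vecs)
```

With these toy vectors, only the entities pointing in a similar direction to the sentence vector receive an edge; the orthogonal one is dropped.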

Rule Extraction from Binary Neural Networks with Convolutional Rules for Model Validation

Dec 15, 2020

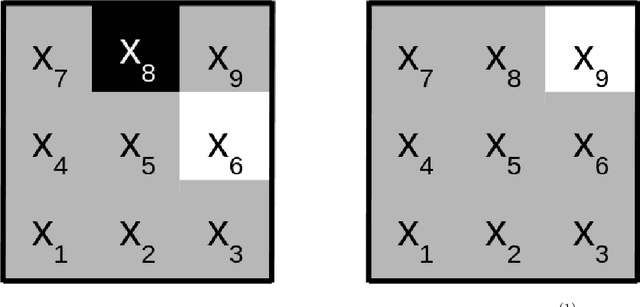

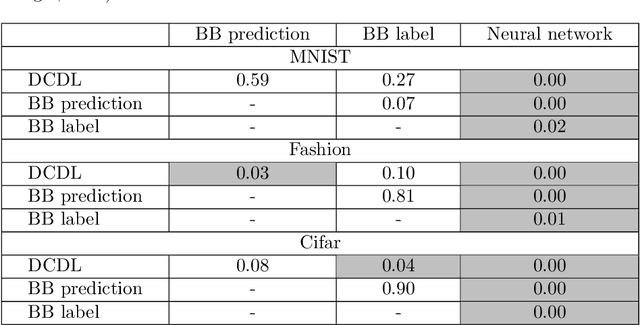

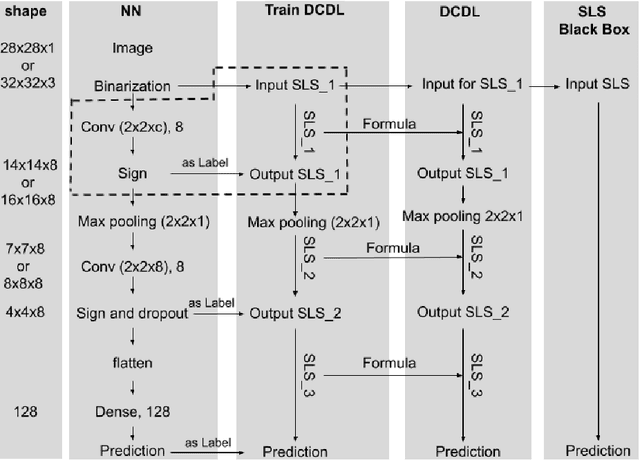

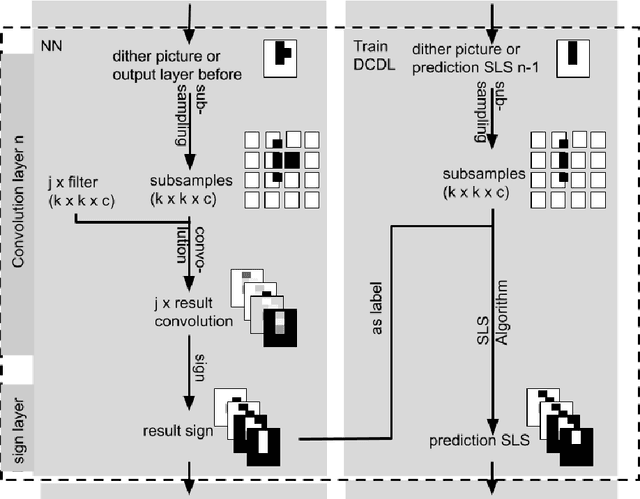

Abstract: Most deep neural networks are considered to be black boxes, meaning their output is hard to interpret. In contrast, logical expressions are considered to be more comprehensible since they use symbols that are semantically close to natural language instead of distributed representations. However, for high-dimensional input data such as images, the individual symbols, i.e. pixels, are not easily interpretable. We introduce the concept of first-order convolutional rules, which are logical rules that can be extracted using a convolutional neural network (CNN), and whose complexity depends on the size of the convolutional filter and not on the dimensionality of the input. Our approach is based on rule extraction from binary neural networks with stochastic local search. We show how to extract rules that are not necessarily short, but characteristic of the input, and easy to visualize. Our experiments show that the proposed approach is able to model the functionality of the neural network while at the same time producing interpretable logical rules.
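To make the idea of a rule whose size depends on the filter rather than the input more concrete, the following sketch evaluates a hand-written logical rule at every position of a binary image. It covers rule evaluation only: the extraction step (stochastic local search over a binary neural network) is not shown, and the rule encoding, function names, and toy image are assumptions for illustration rather than the paper's representation.

```python
def patch_satisfies(image, top, left, rule):
    """Check a rule in disjunctive normal form against one image patch.

    `rule` is a list of conjunctions; each conjunction maps a patch offset
    (dy, dx) to the pixel value (0 or 1) required at that offset.
    """
    return any(
        all(image[top + dy][left + dx] == want for (dy, dx), want in conj.items())
        for conj in rule
    )

def apply_convolutional_rule(image, rule, k):
    """Slide a k x k rule over a binary image, like a convolution without padding."""
    height, width = len(image), len(image[0])
    return [
        [patch_satisfies(image, top, left, rule) for left in range(width - k + 1)]
        for top in range(height - k + 1)
    ]

# Hypothetical 2x2 rule: left column on, right column off (a vertical edge).
vertical_edge = [{(0, 0): 1, (1, 0): 1, (0, 1): 0, (1, 1): 0}]

image = [
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 0],
]
fired = apply_convolutional_rule(image, vertical_edge, k=2)
# fired == [[False, True, False], [False, False, False]]
```

The rule mentions only four pixel offsets regardless of how large the image is, which is the property the abstract highlights.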
