Sonja Kunzmann

An unobtrusive quality supervision approach for medical image annotation

Nov 22, 2022

Abstract: Image annotation is an essential prior step to enable data-driven algorithms. In medical imaging, having large and reliably annotated data sets is crucial to recognize various diseases robustly. However, annotator performance varies immensely, which affects model training. Therefore, multiple annotators should often be employed, which is expensive and resource-intensive. Hence, it is desirable that users annotate unseen data while an automated system unobtrusively rates their performance during this process. We examine such a system based on whole slide images (WSIs) showing lung fluid cells. We evaluate two methods for the generation of synthetic individual cell images: conditional Generative Adversarial Networks and Diffusion Models (DM). For qualitative and quantitative evaluation, we conduct a user study to highlight the suitability of generated cells. Users could not detect 52.12% of the images generated by DM, demonstrating the feasibility of replacing original cells with synthetic cells without being noticed.

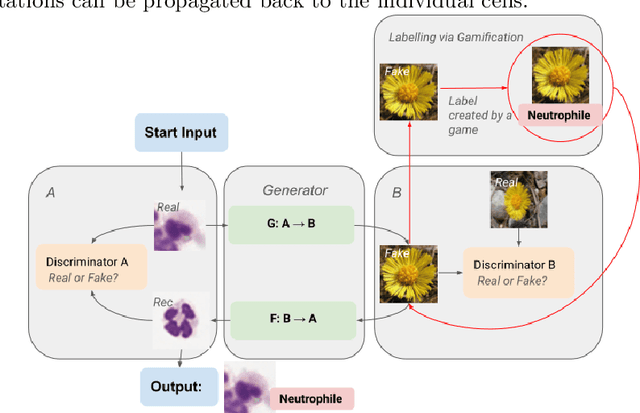

First steps on Gamification of Lung Fluid Cells Annotations in the Flower Domain

Nov 05, 2021

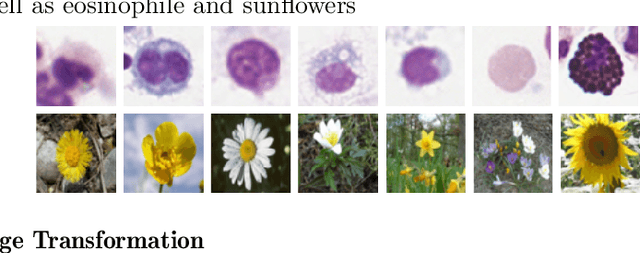

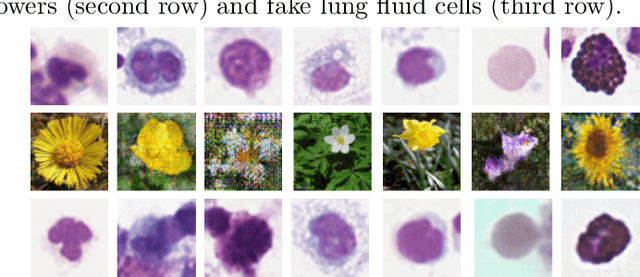

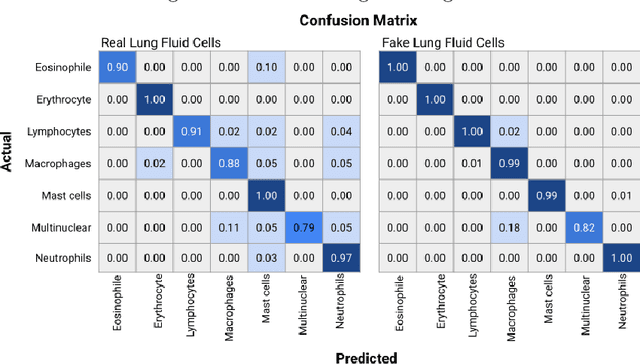

Abstract: Annotating data, especially in the medical domain, requires expert knowledge and a lot of effort. This limits the amount and/or usefulness of available medical data sets for experimentation. Therefore, developing strategies to increase the number of annotations while lowering the needed domain knowledge is of interest. A possible strategy is the use of gamification, that is, transforming the annotation task into a game. We propose an approach to gamify the task of annotating lung fluid cells from pathological whole slide images. As this domain is unknown to non-expert annotators, we transform images of cells detected with a RetinaNet architecture to the domain of flower images. This domain transfer is performed with a CycleGAN architecture for different cell types. In this more assessable domain, non-expert annotators can be (t)asked to annotate different kinds of flowers in a playful setting. To provide a proof of concept, this work shows that the domain transfer is possible by evaluating an image classification network trained on real cell images and tested on the cell images generated by the CycleGAN network. The classification network reaches an accuracy of 97.48% on the original lung fluid cells and 95.16% on the transformed lung fluid cells. With this study, we lay the foundation for future research on gamification using CycleGANs.
