Songpo Li

The Use of Gaze-Derived Confidence of Inferred Operator Intent in Adjusting Safety-Conscious Haptic Assistance

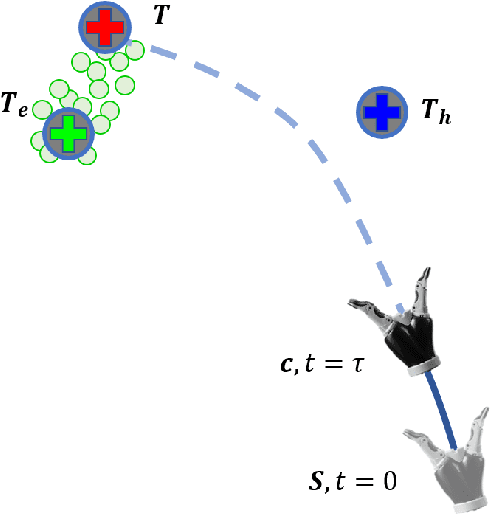

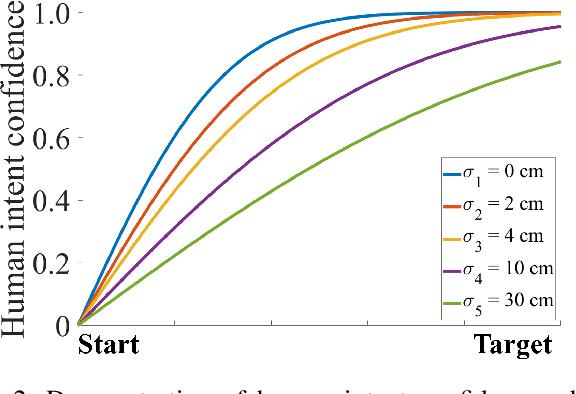

Apr 04, 2025

Abstract:Having humans directly complete tasks in dangerous or hazardous conditions is not always possible, so these tasks are increasingly performed remotely by teleoperated robots. However, teleoperation is difficult because the operator feels disconnected from the robot, owing to missing feedback from several senses, including touch, and the lack of depth in the video feedback presented to the operator. To overcome this problem, the proposed system actively infers the operator's intent and provides assistance based on the predicted intent. Furthermore, a novel method of calculating confidence in the inferred intent modifies the human-in-the-loop control. The operator's gaze is employed to intuitively indicate the target before the manipulation with the robot begins. A potential field method is used to provide a guiding force towards the intended target, and a safety boundary reduces the risk of damage. Modifying these assistances based on the confidence level in the operator's intent makes the control more natural and gives the robot an intuitive understanding of its human master. Initial validation results show the ability of the system to improve accuracy and execution time and to reduce operator error.
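The idea of scaling a potential-field guiding force by confidence in the inferred intent, alongside a safety boundary, can be illustrated with a minimal sketch. This is not the paper's implementation: the linear attractive field, the planar boundary, and every name and parameter here (`guiding_force`, `boundary_force`, `gain`, `max_force`, `margin`, `stiffness`) are assumptions chosen for illustration, and the confidence value is simply taken as a number in [0, 1] rather than derived from gaze data.

```python
import math


def guiding_force(pos, target, confidence, gain=1.0, max_force=5.0):
    """Attractive force toward the target, scaled by intent confidence.

    Hypothetical form: F = gain * confidence * (target - pos), saturated
    at max_force so the assistance never overpowers the operator.
    """
    c = min(max(confidence, 0.0), 1.0)  # clamp confidence to [0, 1]
    force = [gain * c * (t - p) for p, t in zip(pos, target)]
    norm = math.sqrt(sum(f * f for f in force))
    if norm > max_force:
        force = [f * max_force / norm for f in force]
    return force


def boundary_force(pos, plane_point, normal, margin=0.05, stiffness=100.0):
    """Repulsive force that pushes the tool away from a planar safety boundary.

    `normal` is assumed to be a unit vector pointing into the safe region.
    The force is zero when the tool is farther than `margin` from the plane.
    """
    distance = sum((p - q) * n for p, q, n in zip(pos, plane_point, normal))
    if distance >= margin:
        return [0.0 for _ in pos]
    magnitude = stiffness * (margin - distance)
    return [magnitude * n for n in normal]


# Example: half-confident intent gives half the nominal pull toward the target.
pull = guiding_force((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), confidence=0.5)
push = boundary_force((0.0, 0.0, 0.02), (0.0, 0.0, 0.0), (0.0, 0.0, 1.0))
total = [a + b for a, b in zip(pull, push)]
```

With zero confidence the guiding term vanishes and the operator retains full manual control, while the boundary term stays active regardless of confidence. That split is one plausible reading of "safety-conscious" assistance, not a claim about the authors' design.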

Hierarchical Deep Learning for Intention Estimation of Teleoperation Manipulation in Assembly Tasks

Mar 28, 2024

Abstract:In human-robot collaboration, shared control presents an opportunity to teleoperate robotic manipulation to improve the efficiency of manufacturing and assembly processes. Robots are expected to assist in executing the user's intentions. To this end, robust and prompt intention estimation is needed, relying on behavioral observations. The framework presents an intention estimation technique at hierarchical levels, i.e., low-level actions and high-level tasks, by incorporating multi-scale hierarchical information in neural networks. Technically, we employ hierarchical dependency loss to boost overall accuracy. Furthermore, we propose a multi-window method that assigns proper hierarchical prediction windows of input data. An analysis of the predictive power with various inputs demonstrates the superiority of the deep hierarchical model in terms of prediction accuracy and early intention identification. We implement the algorithm on a virtual reality (VR) setup to teleoperate robotic hands in a simulation with various assembly tasks to show the effectiveness of online estimation.

Robust Robot-assisted Tele-grasping Through Intent-Uncertainty-Aware Planning

May 19, 2020

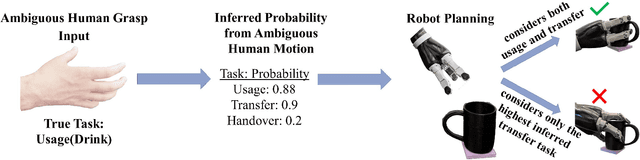

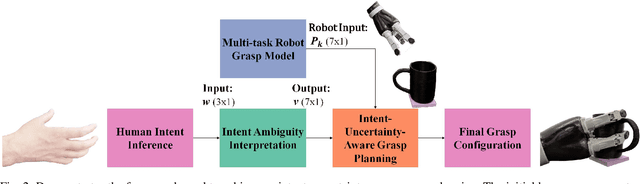

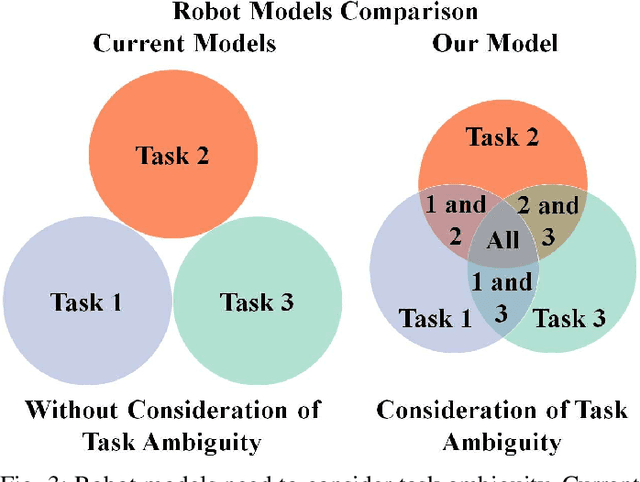

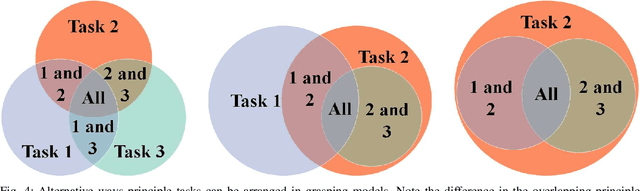

Abstract:In teleoperation, research has mainly focused on target approaching, whereas we address the more challenging object manipulation task by advancing the shared control technique. Appropriately manipulating an object is challenging due to the fine motion constraint requirements for a specific manipulation task. Although these motion constraints are critical for task success, they are often subtle when observing ambiguous human motion. The disembodiment problem and physical discrepancy between the human and robot hands bring additional uncertainty, further exacerbating the complications of the object manipulation task. Moreover, there is a lack of planning and modeling techniques that can effectively combine the human and robot agents' motion input while considering the ambiguity of the human intent. To overcome this challenge, we built a multi-task robot grasping model and developed an intent-uncertainty-aware grasp planner to generate robust grasp poses given the ambiguous human intent inference inputs. With these validated modeling and planning techniques, we expect to extend teleoperated robots' functionality and adoption in practical telemanipulation scenarios.

A General Arbitration Model for Robust Human-Robot Shared Control with Multi-Source Uncertainty Modeling

Mar 11, 2020

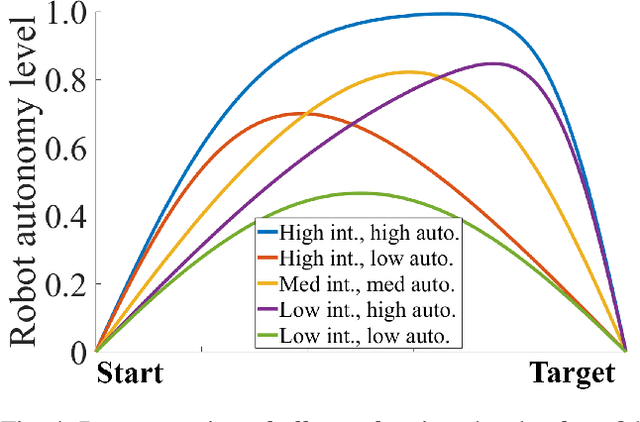

Abstract:Shared control in teleoperation leverages the strengths of both human and robot and has demonstrated great advantages in reducing the difficulty of teleoperating a robot and increasing task performance. One fundamental question in shared control is how to effectively allocate control power between the human and the robot. Researchers have been subjectively defining arbitration policies following conflicting principles, which has resulted in great inconsistency among the policies. We attribute this inconsistency to the failure to consider the multi-source uncertainty in the human-robot system. To fill the gap, we developed a multi-source uncertainty model applicable to various types of uncertainty in the real world, and then developed a general arbitration model to comprehensively fuse the uncertainty and regulate the arbitration weight assigned to the robotic agent. Besides traditional macro performance metrics, we introduced objective and quantitative metrics of robotic helpfulness and friendliness that evaluated the assistive robot's cooperation at micro and macro levels. Results from simulations and experiments showed that the new arbitration model was more effective and friendly than the existing policies and was robust in coping with multi-source uncertainty. With this new arbitration model, we expect increased adoption of human-robot shared control in practical and complex teleoperation tasks.
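To make the notion of an uncertainty-regulated arbitration weight concrete, here is a minimal sketch under stated assumptions. The abstract does not give the fusion rule, so the independence-style fusion `1 - prod(1 - u_i)`, the linear mapping from fused uncertainty to robot weight, and all names (`fuse_uncertainty`, `arbitration_weight`, `blend_commands`, `w_max`) are hypothetical stand-ins rather than the authors' model.

```python
def fuse_uncertainty(uncertainties):
    """Fuse several uncertainty sources, each in [0, 1], into one value.

    Assumed rule: treat sources as independent, so the fused uncertainty
    is 1 minus the product of the individual certainties.
    """
    certainty = 1.0
    for u in uncertainties:
        u = min(max(u, 0.0), 1.0)  # clamp each source to [0, 1]
        certainty *= 1.0 - u
    return 1.0 - certainty


def arbitration_weight(uncertainties, w_max=1.0):
    """Weight given to the robot agent; shrinks as fused uncertainty grows."""
    return w_max * (1.0 - fuse_uncertainty(uncertainties))


def blend_commands(human_cmd, robot_cmd, weight):
    """Linear blending: (1 - w) * human command + w * robot command."""
    return [(1.0 - weight) * h + weight * r
            for h, r in zip(human_cmd, robot_cmd)]


# Example: two uncertainty sources (say, intent inference and perception).
w = arbitration_weight([0.5, 0.5])
command = blend_commands([1.0, 0.0], [0.0, 1.0], w)
```

Under this assumed rule, any single fully uncertain source drives the robot's weight to zero and returns control to the human, which is one simple way to keep the arbitration conservative.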
