Songbai Liu

Clustering-Based Evolutionary Federated Multiobjective Optimization and Learning

Apr 29, 2025

Abstract:Federated learning enables decentralized model training while preserving data privacy, yet it faces challenges in balancing communication efficiency, model performance, and privacy protection. To address these trade-offs, we formulate FL as a federated multiobjective optimization problem and propose FedMOEAC, a clustering-based evolutionary algorithm that efficiently navigates the Pareto-optimal solution space. Our approach integrates quantization, weight sparsification, and differential privacy to reduce communication overhead while ensuring model robustness and privacy. The clustering mechanism enhances population diversity, preventing premature convergence and improving optimization efficiency. Experimental results on MNIST and CIFAR-10 demonstrate that FedMOEAC achieves 98.2% accuracy, reduces communication overhead by 45%, and maintains a privacy budget below 1.0, outperforming NSGA-II in convergence speed by 33%. This work provides a scalable and efficient FL framework, ensuring an optimal balance between accuracy, communication efficiency, and privacy in resource-constrained environments.
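To illustrate what navigating a Pareto-optimal solution space means for the three objectives named above, here is a minimal sketch of nondominated filtering. It is not the FedMOEAC algorithm: the candidate tuples (error rate, communication cost in MB, privacy budget) are hypothetical values invented for illustration, and all three objectives are assumed to be minimized.

```python
def dominates(a, b):
    """Return True if a is no worse than b in every objective and
    strictly better in at least one (all objectives minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def nondominated(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Hypothetical (error rate, communication cost in MB, privacy budget) tuples,
# one per candidate federated learning configuration.
candidates = [
    (0.02, 12.0, 0.9),
    (0.03, 8.0, 0.9),
    (0.03, 12.0, 1.0),  # no better than the first candidate in any objective
    (0.05, 6.0, 0.5),
]

front = nondominated(candidates)
```

The surviving configurations each represent a different trade-off; choosing among them is left to the practitioner.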

Large Language Model-Aided Evolutionary Search for Constrained Multiobjective Optimization

May 09, 2024

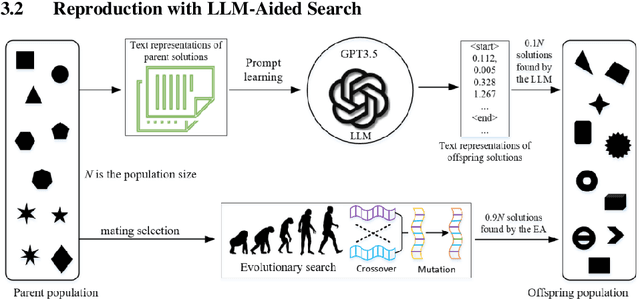

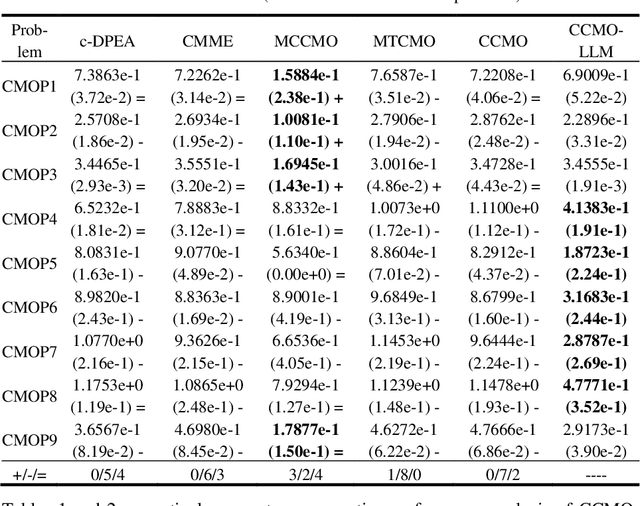

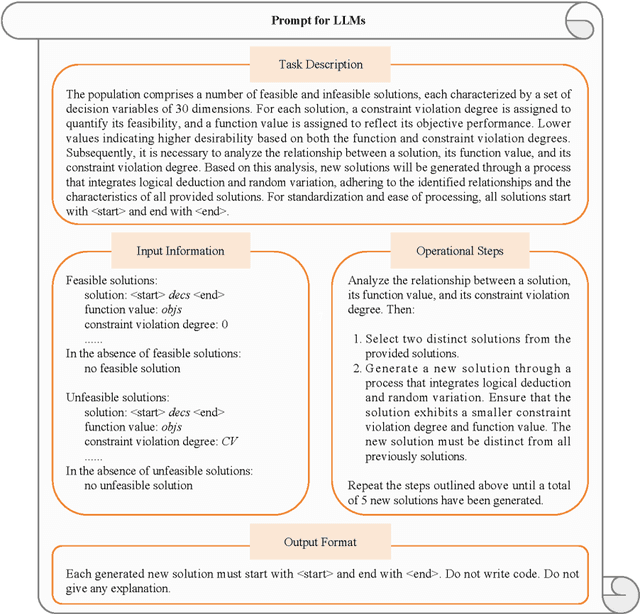

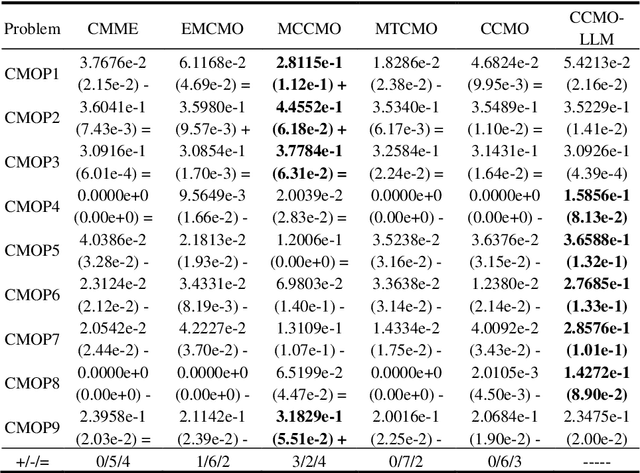

Abstract:Evolutionary algorithms excel in solving complex optimization problems, especially those with multiple objectives. However, their stochastic nature can sometimes hinder rapid convergence to the global optima, particularly in scenarios involving constraints. In this study, we employ a large language model (LLM) to enhance evolutionary search for solving constrained multi-objective optimization problems. Our aim is to speed up the convergence of the evolutionary population. To achieve this, we finetune the LLM through tailored prompt engineering, integrating information concerning both objective values and constraint violations of solutions. This process enables the LLM to grasp the relationship between well-performing and poorly performing solutions based on the provided input data. Solution quality is assessed based on constraint violations and objective-based performance. The refined LLM can then be used as a search operator to generate superior-quality solutions. Experimental evaluations across various test benchmarks illustrate that LLM-aided evolutionary search can significantly accelerate the population's convergence speed and stands out competitively against cutting-edge evolutionary algorithms.
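One common way to rank solutions by both constraint violations and objective values is the feasibility-first (constraint-domination) rule. The sketch below shows that rule only as an assumption about how such an assessment could work; the abstract does not state which rule the paper uses. The function names and the convention that constraints are written as g(x) <= 0 are illustrative choices.

```python
def total_violation(constraints):
    """Sum of violations for constraints expressed as g(x) <= 0."""
    return sum(max(0.0, g) for g in constraints)


def dominates(a, b):
    """Pareto dominance for minimization objectives."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def constrained_better(obj_a, con_a, obj_b, con_b):
    """Return True if solution A is preferred over solution B."""
    va, vb = total_violation(con_a), total_violation(con_b)
    if va == 0.0 and vb == 0.0:
        # Both feasible: fall back to Pareto dominance on the objectives.
        return dominates(obj_a, obj_b)
    if va == 0.0 or vb == 0.0:
        # Exactly one is feasible: the feasible solution wins.
        return va == 0.0
    # Both infeasible: the smaller total violation wins.
    return va < vb
```

Under this rule a feasible solution always beats an infeasible one, so objective values only matter once the constraints are satisfied.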

Towards Multi-Objective High-Dimensional Feature Selection via Evolutionary Multitasking

Jan 03, 2024

Abstract:The evolutionary multitasking (EMT) paradigm, an emerging research topic in evolutionary computation, has recently been applied successfully to high-dimensional feature selection (FS) problems. However, existing EMT-based FS methods suffer from several limitations, such as a single mode of multitask generation, conducting the same generic evolutionary search for all tasks, relying on implicit transfer mechanisms through sole solution encodings, and employing single-objective transformation, which result in inadequate knowledge acquisition, exploitation, and transfer. To this end, this paper develops a novel EMT framework for multiobjective high-dimensional feature selection problems, namely MO-FSEMT. In particular, multiple auxiliary tasks are constructed by distinct formulation methods to provide diverse search spaces and information representations and then simultaneously addressed with the original task through a multi-solver-based multitask optimization scheme. Each task has an independent population with task-specific representations and is solved using separate evolutionary solvers with different biases and search preferences. A task-specific knowledge transfer mechanism is designed to leverage the advantageous information of each task, enabling the discovery and effective transmission of high-quality solutions during the search process. Comprehensive experimental results demonstrate that our MO-FSEMT framework can achieve overall superior performance compared to the state-of-the-art FS methods on 26 datasets. Moreover, the ablation studies verify the contributions of different components of the proposed MO-FSEMT.

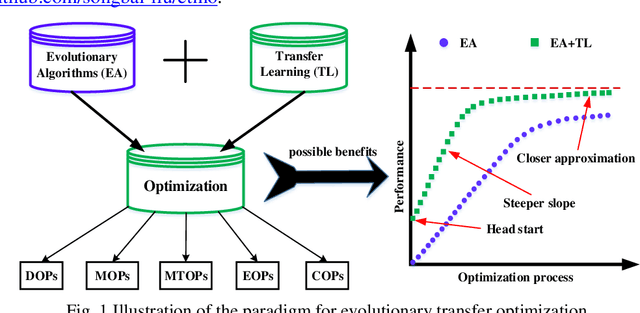

A Survey on Learnable Evolutionary Algorithms for Scalable Multiobjective Optimization

Jun 23, 2022

Abstract:Recent decades have witnessed remarkable advancements in multiobjective evolutionary algorithms (MOEAs) that have been adopted to solve various multiobjective optimization problems (MOPs). However, these progressively improved MOEAs have not necessarily been equipped with sophisticatedly scalable and learnable problem-solving strategies that are able to cope with new and grand challenges brought by the scaling-up MOPs with continuously increasing complexity or scale from diverse aspects, mainly including expensive function evaluations, many objectives, large-scale search space, time-varying environments, and multitask. Under different scenarios, it requires divergent thinking to design new powerful MOEAs for solving them effectively. In this context, research into learnable MOEAs that arm themselves with machine learning techniques for scaling-up MOPs has received extensive attention in the field of evolutionary computation. In this paper, we begin with a taxonomy of scalable MOPs and learnable MOEAs, followed by an analysis of the challenges that scaling up MOPs pose to traditional MOEAs. Then, we synthetically overview recent advances of learnable MOEAs in solving various scaling up MOPs, focusing primarily on three attractive and promising directions (i.e., learnable evolutionary discriminators for environmental selection, learnable evolutionary generators for reproduction, and learnable evolutionary transfer for sharing or reusing optimization experience between different problem domains). The insight into learnable MOEAs held throughout this paper is offered to the readers as a reference to the general track of the efforts in this field.

Benchmark Problems for CEC2021 Competition on Evolutionary Transfer Multiobjective Optimization

Oct 15, 2021

Abstract:Evolutionary transfer multiobjective optimization (ETMO) has become a hot research topic in the field of evolutionary computation, based on the fact that knowledge learning and transfer across related optimization exercises can improve the efficiency of others. Besides, the potential for transfer optimization is deemed invaluable from the standpoint of human-like problem-solving capabilities, where knowledge gathering and reuse are instinctive. To promote the research on ETMO, benchmark problems are of great importance to ETMO algorithm analysis, helping designers and practitioners to better understand the merits and demerits of ETMO algorithms. Therefore, a total of 40 benchmark functions are proposed in this report, covering diverse types and properties in the case of knowledge transfer, such as various formulation models, various PS geometries and PF shapes, large numbers of variables, dynamically changing environments, and so on. All the benchmark functions have been implemented in Java, and the code can be downloaded from the following website: https://github.com/songbai-liu/etmo.
