Slawek Smyl

Probabilistic Multi-Regional Solar Power Forecasting with Any-Quantile Recurrent Neural Networks

Feb 05, 2026

Abstract:The increasing penetration of photovoltaic (PV) generation introduces significant uncertainty into power system operation, necessitating forecasting approaches that extend beyond deterministic point predictions. This paper proposes an any-quantile probabilistic forecasting framework for multi-regional PV power generation based on the Any-Quantile Recurrent Neural Network (AQ-RNN). The model integrates an any-quantile forecasting paradigm with a dual-track recurrent architecture that jointly processes series-specific and cross-regional contextual information, supported by dilated recurrent cells, patch-based temporal modeling, and a dynamic ensemble mechanism. The proposed framework enables the estimation of calibrated conditional quantiles at arbitrary probability levels within a single trained model and effectively exploits spatial dependencies to enhance robustness at the system level. The approach is evaluated using 30 years of hourly PV generation data from 259 European regions and compared against established statistical and neural probabilistic baselines. The results demonstrate consistent improvements in forecast accuracy, calibration, and prediction interval quality, underscoring the suitability of the proposed method for uncertainty-aware energy management and operational decision-making in renewable-dominated power systems.

Forecasting Cryptocurrency Prices using Contextual ES-adRNN with Exogenous Variables

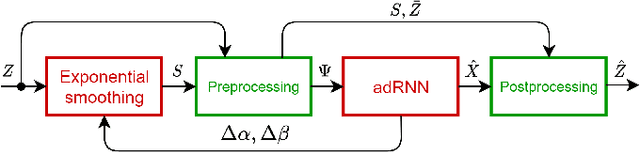

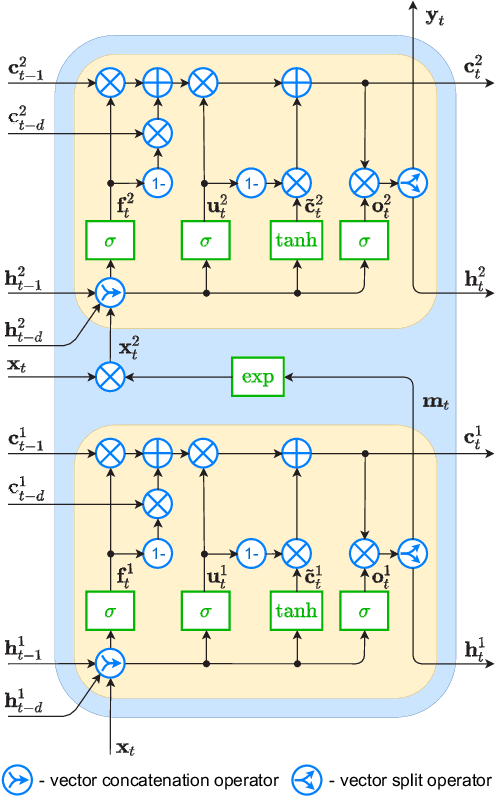

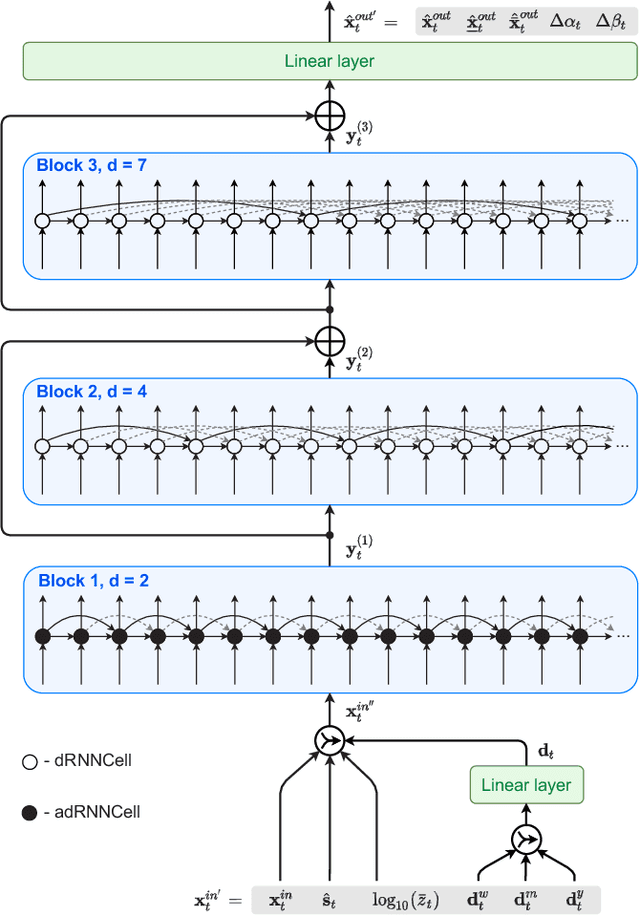

Apr 11, 2025

Abstract:In this paper, we introduce a new approach to multivariate forecasting of cryptocurrency prices using a hybrid contextual model combining exponential smoothing (ES) and a recurrent neural network (RNN). The model consists of two tracks: the context track and the main track. The context track provides additional information to the main track, extracted from representative series. This information, as well as information extracted from exogenous variables, is dynamically adjusted to the individual series forecasted by the main track. The stacked RNN architecture with hierarchical dilations, incorporating recently developed attentive dilated recurrent cells, allows the model to capture short- and long-term dependencies across time series and to dynamically weight input information. The model generates both daily point forecasts and predictive intervals for one-day, one-week and four-week horizons. We apply our model to forecast prices of 15 cryptocurrencies based on 17 input variables and compare its performance with that of baseline models, including both statistical and ML ones.

Predicting Customer Lifetime Value Using Recurrent Neural Net

Dec 28, 2024

Abstract:This paper introduces a recurrent neural network approach for predicting user lifetime value in Software as a Service (SaaS) applications. The approach accounts for three connected time dimensions. These dimensions are the user cohort (the date the user joined), user age-in-system (the time since the user joined the service) and the calendar date the user is an age-in-system (i.e., contemporaneous information). The recurrent neural networks use a multi-cell architecture, where each cell resembles a long short-term memory neural network. The approach is applied to predicting both acquisition (new users) and rolling (existing user) lifetime values for a variety of time horizons. It is found to significantly improve median absolute percent error versus light gradient boost models and Buy Until You Die models.

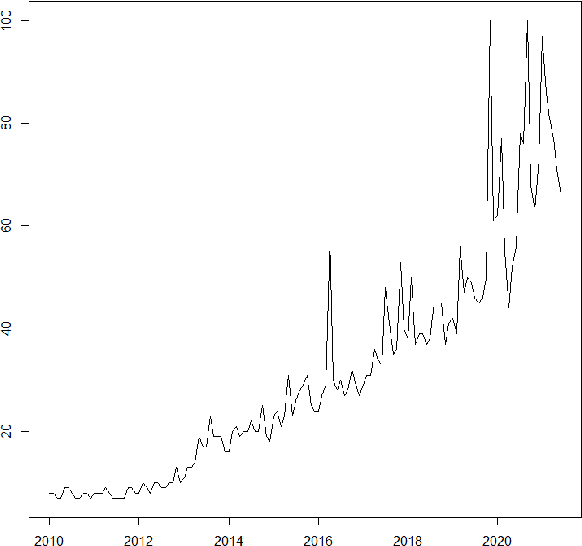

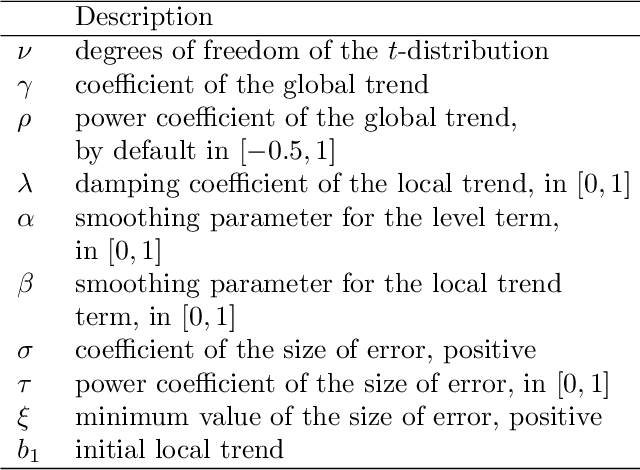

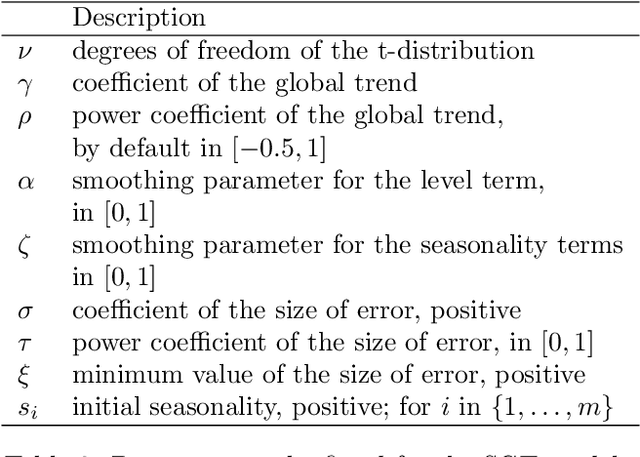

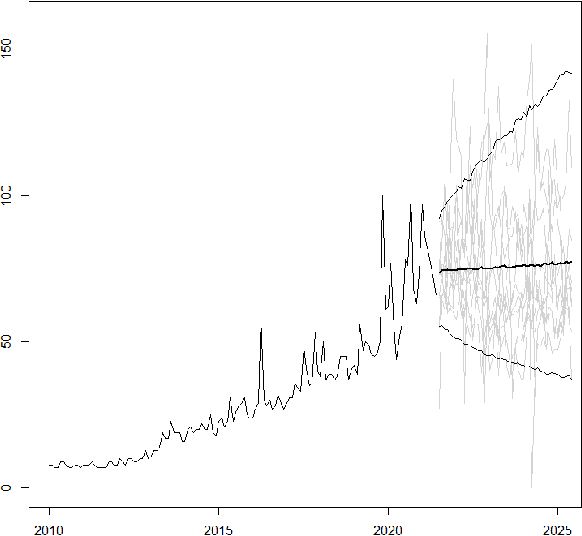

Fast Gibbs sampling for the local and global trend Bayesian exponential smoothing model

Jun 29, 2024

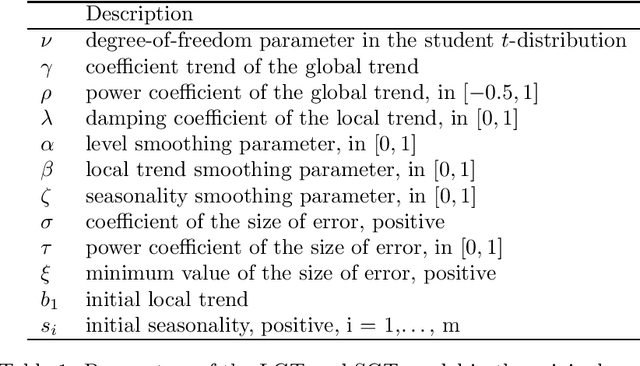

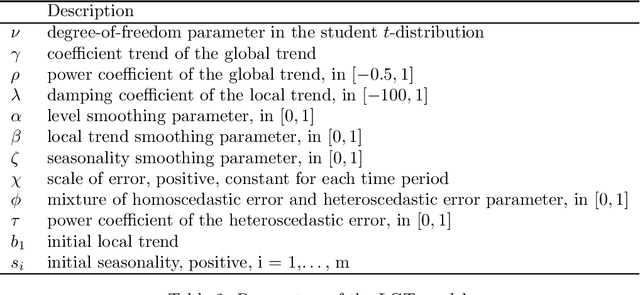

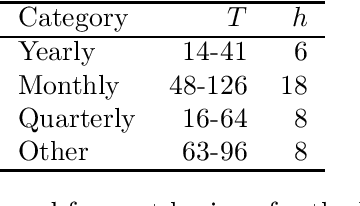

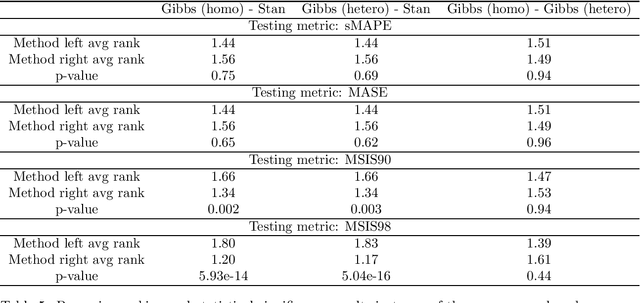

Abstract:In Smyl et al. [Local and global trend Bayesian exponential smoothing models. International Journal of Forecasting, 2024.], a generalised exponential smoothing model was proposed that is able to capture strong trends and volatility in time series. This method achieved state-of-the-art performance in many forecasting tasks, but its fitting procedure, which is based on the NUTS sampler, is very computationally expensive. In this work, we propose several modifications to the original model, as well as a bespoke Gibbs sampler for posterior exploration; these changes improve sampling time by an order of magnitude, thus rendering the model much more practically relevant. The new model, and sampler, are evaluated on the M3 dataset and are shown to be competitive, or superior, in terms of accuracy to the original method, while being substantially faster to run.

Context Neural Networks: A Scalable Multivariate Model for Time Series Forecasting

May 12, 2024

Abstract:Real-world time series often exhibit complex interdependencies that cannot be captured in isolation. Global models that model past data from multiple related time series globally while producing series-specific forecasts locally are now common. However, their forecasts for each individual series remain isolated, failing to account for the current state of its neighbouring series. Multivariate models like multivariate attention and graph neural networks can explicitly incorporate inter-series information, thus addressing the shortcomings of global models. However, these techniques exhibit quadratic complexity per timestep, limiting scalability. This paper introduces the Context Neural Network, an efficient linear complexity approach for augmenting time series models with relevant contextual insights from neighbouring time series without significant computational overhead. The proposed method enriches predictive models by providing the target series with real-time information from its neighbours, addressing the limitations of global models, yet remaining computationally tractable for large datasets.

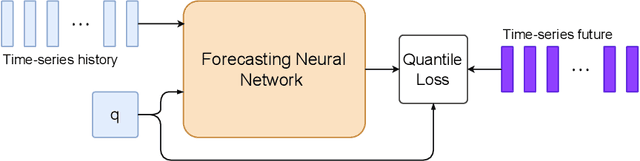

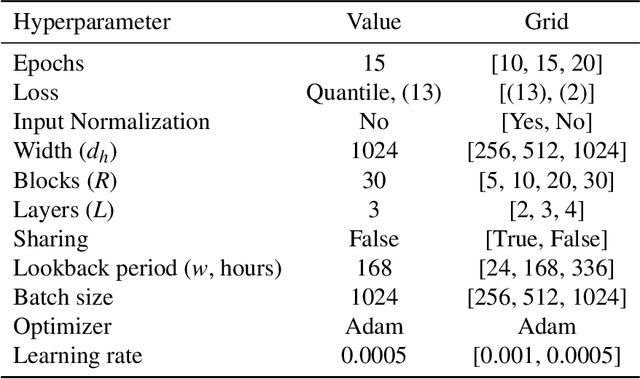

Any-Quantile Probabilistic Forecasting of Short-Term Electricity Demand

Apr 26, 2024

Abstract:Power systems operate under uncertainty originating from multiple factors that are impossible to account for deterministically. Distributional forecasting is used to control and mitigate risks associated with this uncertainty. Recent progress in deep learning has helped to significantly improve the accuracy of point forecasts, while accurate distributional forecasting still presents a significant challenge. In this paper, we propose a novel general approach for distributional forecasting capable of predicting arbitrary quantiles. We show that our general approach can be seamlessly applied to two distinct neural architectures, leading to state-of-the-art distributional forecasting results in the short-term electricity demand forecasting task. We empirically validate our method on 35 hourly electricity demand time series for European countries. Our code is available here: https://github.com/boreshkinai/any-quantile.
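
As a rough illustration of what "predicting arbitrary quantiles" can involve, the sketch below shows the standard quantile (pinball) loss evaluated at quantile levels drawn at random for each sample. This is a generic textbook construction, not the authors' implementation (see the linked repository for that); the function names `pinball_loss` and `sample_quantile_levels` are hypothetical and chosen only for this example.

```python
import numpy as np


def pinball_loss(y_true, y_pred, q):
    """Mean quantile (pinball) loss.

    q is a quantile level in (0, 1), either a scalar or an array
    broadcastable against y_true (one level per sample).
    """
    diff = np.asarray(y_true, dtype=float) - np.asarray(y_pred, dtype=float)
    q = np.asarray(q, dtype=float)
    # Under-prediction (diff > 0) is weighted by q, over-prediction by 1 - q.
    return float(np.mean(np.maximum(q * diff, (q - 1.0) * diff)))


def sample_quantile_levels(batch_size, rng):
    """Draw one quantile level per sample, uniformly from [0, 1).

    Assumption for illustration: a model trained against randomly drawn
    levels (and given the level as an input) can be queried at any level.
    """
    return rng.uniform(0.0, 1.0, size=batch_size)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    y = rng.normal(size=8)
    y_hat = np.zeros(8)  # placeholder forecasts
    q = sample_quantile_levels(8, rng)
    print(pinball_loss(y, y_hat, q))
```

Averaging the pinball loss over many quantile levels approximates the continuous ranked probability score, which is one reason it is a common training and evaluation target for distributional forecasts.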

Local and Global Trend Bayesian Exponential Smoothing Models

Sep 25, 2023

Abstract:This paper describes a family of seasonal and non-seasonal time series models that can be viewed as generalisations of additive and multiplicative exponential smoothing models. Their development is motivated by fast-growing, volatile time series, and facilitated by state-of-the-art Bayesian fitting techniques. When applied to the M3 competition data set, they outperform the best algorithms in the competition as well as other benchmarks, thus achieving, to the best of our knowledge, the best results among univariate methods on this data set in the literature.

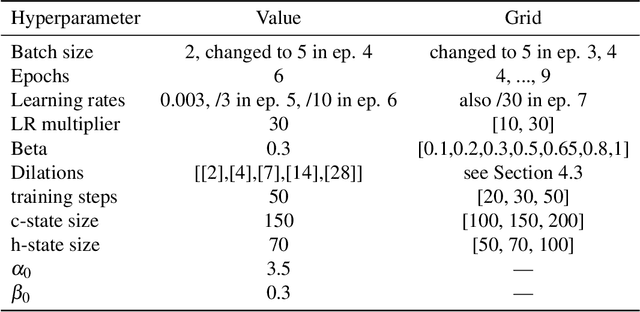

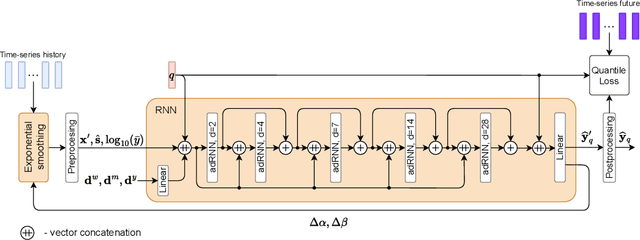

Contextually Enhanced ES-dRNN with Dynamic Attention for Short-Term Load Forecasting

Dec 18, 2022

Abstract:In this paper, we propose a new short-term load forecasting (STLF) model based on a contextually enhanced hybrid and hierarchical architecture combining exponential smoothing (ES) and a recurrent neural network (RNN). The model is composed of two simultaneously trained tracks: the context track and the main track. The context track introduces additional information to the main track. It is extracted from representative series and dynamically modulated to adjust to the individual series forecasted by the main track. The RNN architecture consists of multiple recurrent layers stacked with hierarchical dilations and equipped with recently proposed attentive dilated recurrent cells. These cells enable the model to capture short-term, long-term and seasonal dependencies across time series as well as to dynamically weight the input information. The model produces both point forecasts and predictive intervals. The experimental part of the work, performed on 35 forecasting problems, shows that the proposed model outperforms its predecessor, as well as standard statistical models and state-of-the-art machine learning models, in terms of accuracy.

Recurrent Neural Networks for Forecasting Time Series with Multiple Seasonality: A Comparative Study

Mar 17, 2022

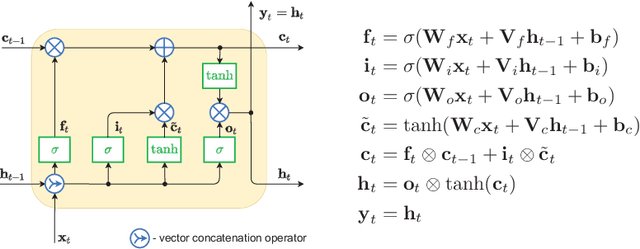

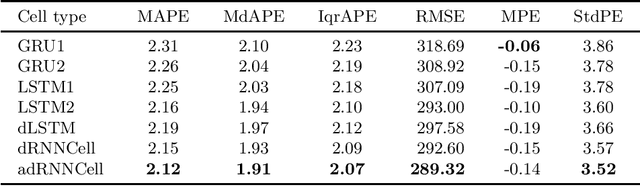

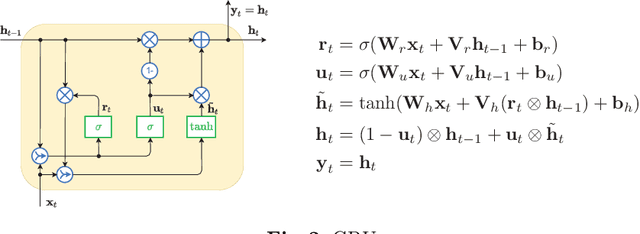

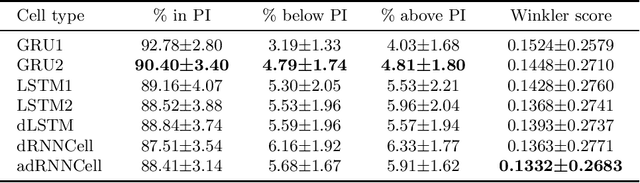

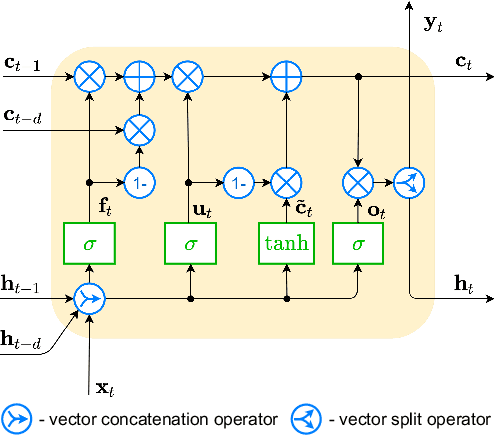

Abstract:This paper compares recurrent neural networks (RNNs) with different types of gated cells for forecasting time series with multiple seasonality. The cells we compare include classical long short-term memory (LSTM), gated recurrent unit (GRU), modified LSTM with dilation, and two new cells we proposed recently, which are equipped with dilation and attention mechanisms. To model the temporal dependencies of different scales, our RNN architecture has multiple dilated recurrent layers stacked with hierarchical dilations. The proposed RNN produces both point forecasts and predictive intervals (PIs) for them. An empirical study concerning short-term electrical load forecasting for 35 European countries confirmed that the new gated cells with dilation and attention performed best.
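
To make "dilated recurrent layers stacked with hierarchical dilations" concrete, here is a minimal NumPy sketch in which each layer's hidden state at step t is computed from its own state at step t - d rather than t - 1. It uses a plain tanh cell purely for brevity, not the gated or attentive cells compared in the paper, and the names `dilated_rnn_layer` and `stacked_dilated_rnn`, the weight shapes, and the dilation values are all assumptions made for this example.

```python
import numpy as np


def dilated_rnn_layer(x, W, U, b, dilation):
    """Plain tanh recurrent layer with a dilated recurrent connection.

    x: (T, n_in) input sequence
    W: (n_in, n_hidden), U: (n_hidden, n_hidden), b: (n_hidden,)
    Returns h of shape (T, n_hidden), where
        h[t] = tanh(x[t] @ W + h[t - dilation] @ U + b)
    and states before the start of the sequence are taken as zeros.
    """
    T = x.shape[0]
    n_hidden = U.shape[0]
    h = np.zeros((T, n_hidden))
    for t in range(T):
        h_prev = h[t - dilation] if t >= dilation else np.zeros(n_hidden)
        h[t] = np.tanh(x[t] @ W + h_prev @ U + b)
    return h


def stacked_dilated_rnn(x, params, dilations):
    """Feed the sequence through layers with increasing dilations."""
    out = x
    for (W, U, b), d in zip(params, dilations):
        out = dilated_rnn_layer(out, W, U, b, d)
    return out


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    T, n_in, n_hidden = 48, 3, 4
    x = rng.normal(size=(T, n_in))
    dilations = (1, 4, 24)  # illustrative values only
    sizes = [n_in, n_hidden, n_hidden]
    params = [
        (rng.normal(size=(s, n_hidden)) * 0.1,
         rng.normal(size=(n_hidden, n_hidden)) * 0.1,
         np.zeros(n_hidden))
        for s in sizes
    ]
    print(stacked_dilated_rnn(x, params, dilations).shape)
```

With larger dilations in the upper layers, a layer with d = 24 on hourly data links each step directly to the same hour of the previous day, which is the intuition behind using dilations for series with several seasonal periods.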

ES-dRNN with Dynamic Attention for Short-Term Load Forecasting

Mar 02, 2022

Abstract:Short-term load forecasting (STLF) is a challenging problem due to the complex nature of the time series expressing multiple seasonality and varying variance. This paper proposes an extension of a hybrid forecasting model combining exponential smoothing and dilated recurrent neural network (ES-dRNN) with a mechanism for dynamic attention. We propose a new gated recurrent cell, the attentive dilated recurrent cell, which implements an attention mechanism for dynamic weighting of input vector components. The most relevant components are assigned greater weights, which are subsequently dynamically fine-tuned. This attention mechanism helps the model to select input information and, along with other mechanisms implemented in ES-dRNN, such as adaptive time series processing, cross-learning, and multiple dilation, leads to a significant improvement in accuracy when compared to well-established statistical and state-of-the-art machine learning forecasting models. This was confirmed in an extensive experimental study concerning STLF for 35 European countries.
