Simon J. C. Soerensen

Bridging the gap between prostate radiology and pathology through machine learning

Dec 03, 2021

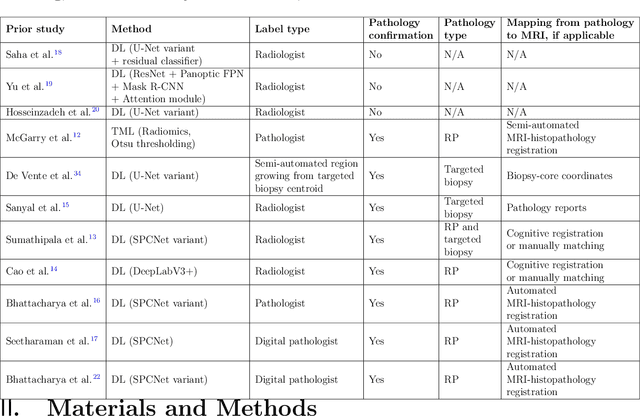

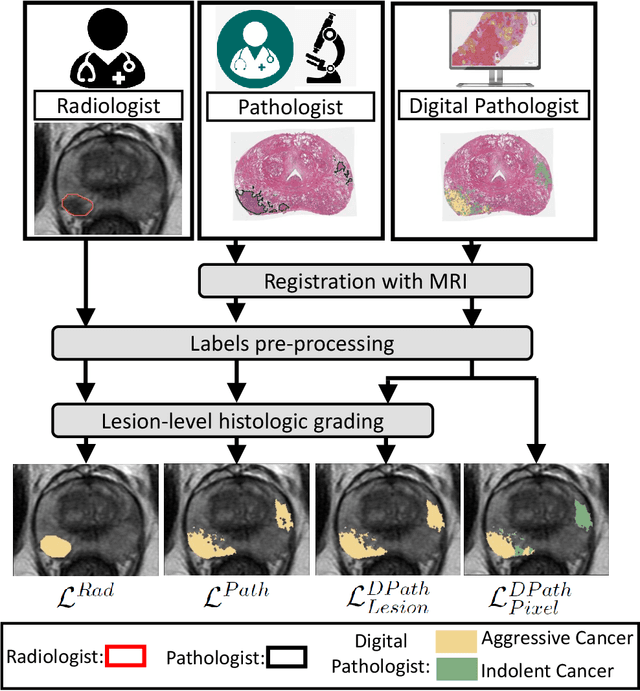

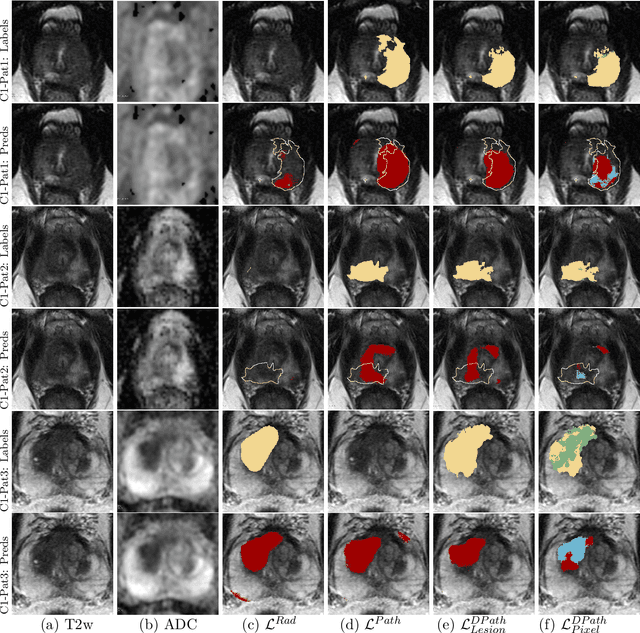

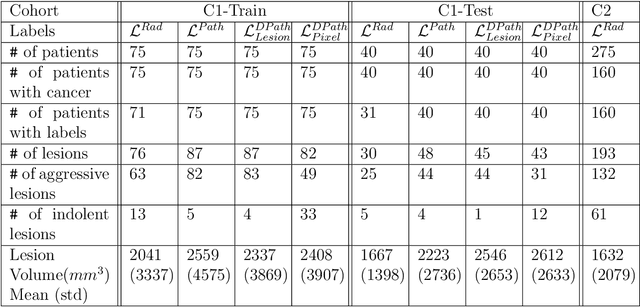

Abstract:Prostate cancer is the second deadliest cancer for American men. While Magnetic Resonance Imaging (MRI) is increasingly used to guide targeted biopsies for prostate cancer diagnosis, its utility remains limited due to high rates of false positives and false negatives as well as low inter-reader agreements. Machine learning methods to detect and localize cancer on prostate MRI can help standardize radiologist interpretations. However, existing machine learning methods vary not only in model architecture, but also in the ground truth labeling strategies used for model training. In this study, we compare different labeling strategies, namely, pathology-confirmed radiologist labels, pathologist labels on whole-mount histopathology images, and lesion-level and pixel-level digital pathologist labels (previously validated deep learning algorithm on histopathology images to predict pixel-level Gleason patterns) on whole-mount histopathology images. We analyse the effects these labels have on the performance of the trained machine learning models. Our experiments show that (1) radiologist labels and models trained with them can miss cancers, or underestimate cancer extent, (2) digital pathologist labels and models trained with them have high concordance with pathologist labels, and (3) models trained with digital pathologist labels achieve the best performance in prostate cancer detection in two different cohorts with different disease distributions, irrespective of the model architecture used. Digital pathologist labels can reduce challenges associated with human annotations, including labor, time, inter- and intra-reader variability, and can help bridge the gap between prostate radiology and pathology by enabling the training of reliable machine learning models to detect and localize prostate cancer on MRI.

Weakly Supervised Registration of Prostate MRI and Histopathology Images

Jun 23, 2021

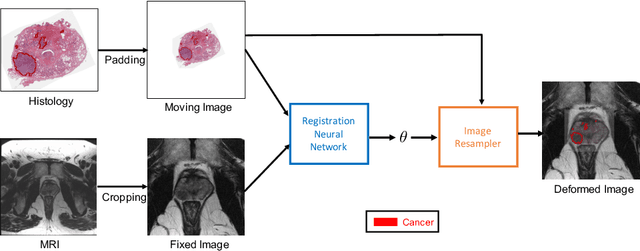

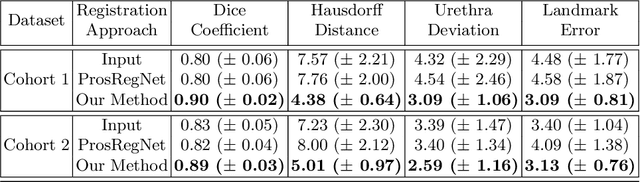

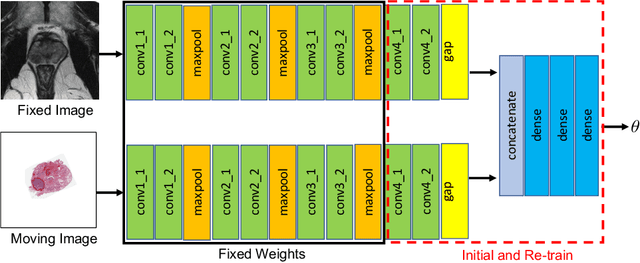

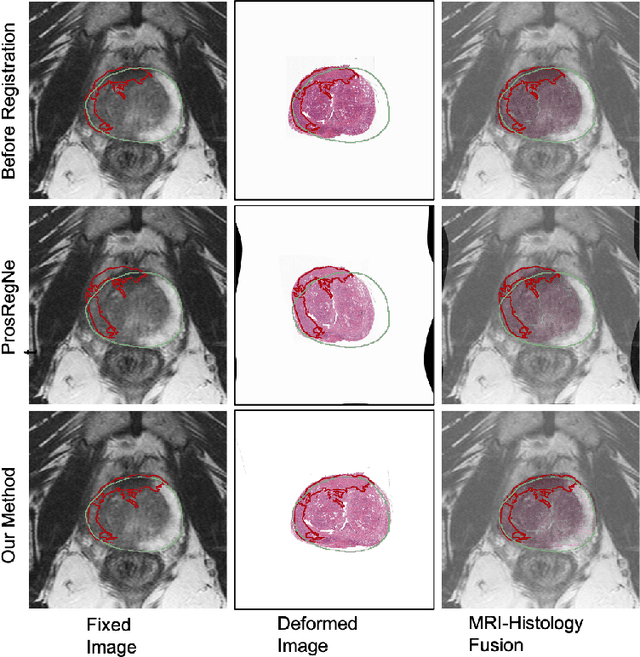

Abstract:The interpretation of prostate MRI suffers from low agreement across radiologists due to the subtle differences between cancer and normal tissue. Image registration addresses this issue by accurately mapping the ground-truth cancer labels from surgical histopathology images onto MRI. Cancer labels achieved by image registration can be used to improve radiologists' interpretation of MRI by training deep learning models for early detection of prostate cancer. A major limitation of current automated registration approaches is that they require manual prostate segmentations, which is a time-consuming task, prone to errors. This paper presents a weakly supervised approach for affine and deformable registration of MRI and histopathology images without requiring prostate segmentations. We used manual prostate segmentations and mono-modal synthetic image pairs to train our registration networks to align prostate boundaries and local prostate features. Although prostate segmentations were used during the training of the network, such segmentations were not needed when registering unseen images at inference time. We trained and validated our registration network with 135 and 10 patients from an internal cohort, respectively. We tested the performance of our method using 16 patients from the internal cohort and 22 patients from an external cohort. The results show that our weakly supervised method has achieved significantly higher registration accuracy than a state-of-the-art method run without prostate segmentations. Our deep learning framework will ease the registration of MRI and histopathology images by obviating the need for prostate segmentations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge