Simon Hangl

A novel Skill-based Programming Paradigm based on Autonomous Playing and Skill-centric Testing

Sep 18, 2017

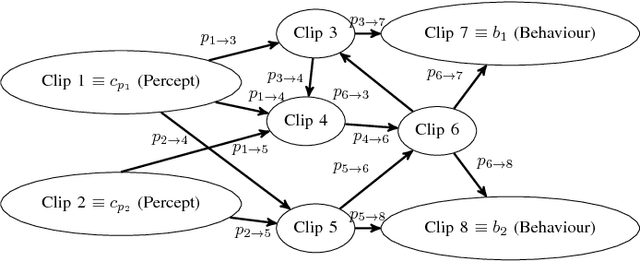

Abstract: We introduce a novel paradigm for robot programming with which we aim to make robot programming more accessible to inexperienced users. To do so, we incorporate two major components into a single framework: autonomous skill acquisition by robotic playing, and visual programming. The user provides simple robot program skeletons, so-called basic behaviours, that solve a task for one specific situation. The robot then learns by autonomous playing how to solve the same task in many different situations, which lowers the barrier for inexperienced robot programmers. Programmers can provide these program skeletons through a mix of visual programming and kinesthetic teaching, implementing parts of the robot program interactively. We further integrate work on experience-based, skill-centric robot software testing, which enables the user to continuously test implemented skills without having to deal with the details of specific components.

Robotic Playing for Hierarchical Complex Skill Learning

Aug 13, 2017

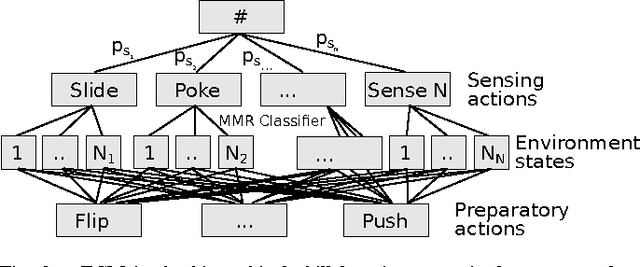

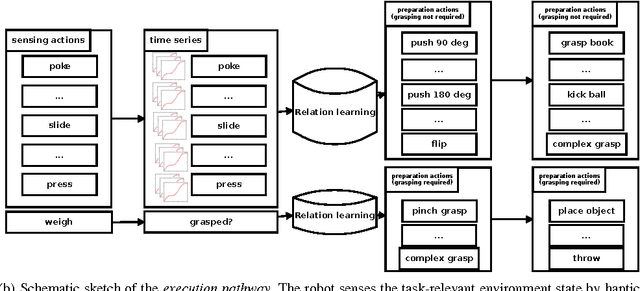

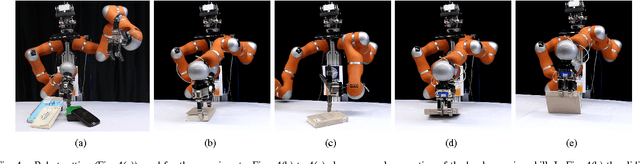

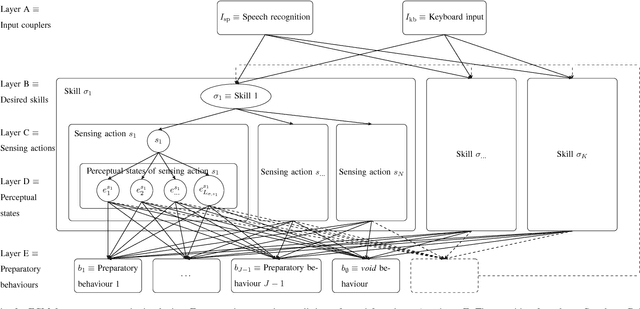

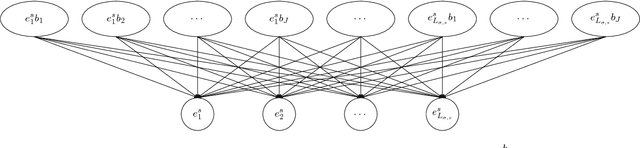

Abstract: In complex manipulation scenarios (e.g. tasks requiring complex interaction of two hands or in-hand manipulation), generalization is a hard problem. Current methods still require a substantial amount of (supervised) training data, strong assumptions about both the environment and the task, or both. With these methods, controllers solving such tasks tend to be complex. We propose a paradigm of maintaining simpler controllers that solve the task in a small number of specific situations. To generalize to novel situations, the robot transforms the environment from a novel situation into a situation where the solution of the task is already known. Our solution to this problem is to play with objects and use previously trained skills (basis skills). These skills can be used either to estimate or to change the current state of the environment, and they are organized in skill hierarchies. The approach is evaluated in pick-and-place scenarios that involve complex manipulation. We further show that these skills can be learned by autonomous playing.
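The central idea above, transforming a novel situation into one the existing controller already solves, can be illustrated as a search over preparatory skills. This is a minimal sketch under assumptions that are not from the paper: skills are modelled as known, deterministic functions on a hashable state, and plain breadth-first search stands in for the learned skill hierarchies the abstract describes. All names (`plan_preparation`, `rotate_90`, `push_to_edge`, `grasp_works`) are hypothetical.

```python
from collections import deque
from typing import Callable, Dict, Hashable, List, Optional


def plan_preparation(
    start: Hashable,
    skills: Dict[str, Callable[[Hashable], Hashable]],
    is_known_situation: Callable[[Hashable], bool],
    max_depth: int = 3,
) -> Optional[List[str]]:
    """Return the shortest sequence of skill names that turns `start` into a
    situation the basic behaviour already solves, or None if no such
    sequence exists within `max_depth` steps.

    Hypothetical sketch: assumes each skill is a deterministic state transition.
    """
    frontier = deque([(start, [])])  # (state, skills applied so far)
    visited = {start}
    while frontier:
        state, plan = frontier.popleft()
        if is_known_situation(state):
            return plan
        if len(plan) >= max_depth:
            continue  # do not expand beyond the depth limit
        for name, apply_skill in skills.items():
            next_state = apply_skill(state)
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, plan + [name]))
    return None


# Hypothetical toy world: state = (object rotation in degrees, pushed to table edge?)
toy_skills = {
    "rotate_90": lambda s: ((s[0] + 90) % 360, s[1]),
    "push_to_edge": lambda s: (s[0], True),
}


def grasp_works(state) -> bool:
    # Assumption: the basic behaviour only succeeds for an upright object at the edge.
    return state == (0, True)


plan = plan_preparation((180, False), toy_skills, grasp_works)
```

Here `plan` evaluates to `['rotate_90', 'rotate_90', 'push_to_edge']`. Breadth-first search was chosen only because it returns the shortest preparatory sequence in this toy setting; with real, stochastic skills the transition model would itself have to be learned, as the abstract suggests it is through playing.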

Autonomous Skill-centric Testing using Deep Learning

Aug 13, 2017

Abstract: Software testing is an important tool for ensuring software quality. This is a hard task in robotics due to dynamic environments and the expensive development and time-consuming execution of test cases. Most testing approaches use model-based and/or simulation-based testing to overcome these problems. We propose model-free skill-centric testing, in which a robot autonomously executes skills in the real world and compares the executions to previous experiences. The skills are selected by maximising the expected information gain on the distribution of erroneous software functions. We use deep learning to model the sensor data observed during previous successful skill executions and to detect irregularities. Sensor data is connected to function call profiles so that observed misbehaviour can be related to specific functions. We evaluate our approach in simulation and in experiments with a KUKA LWR 4+ robot by purposefully introducing bugs into the software. We demonstrate that these bugs can be detected with high accuracy and without the need to implement specific tests or task-specific models.
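The skill-selection criterion above (maximising expected information gain on the distribution over erroneous functions, using function call profiles) can be sketched with a small Bayesian model. This is a hedged illustration, not the paper's implementation: it assumes exactly one function is buggy, and it replaces the deep-learning anomaly detector with two fixed, hypothetical rates, `p_detect` (anomaly reported when the skill calls the buggy function) and `p_false` (false alarm otherwise). Function and skill names are hypothetical.

```python
import math
from typing import Dict, Set, Tuple


def entropy(dist: Dict[str, float]) -> float:
    """Shannon entropy (in bits) of a discrete distribution."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)


def update(prior: Dict[str, float], calls: Set[str], anomaly: bool,
           p_detect: float, p_false: float) -> Tuple[Dict[str, float], float]:
    """Bayes update of P(function is buggy) after one skill execution.

    Returns (posterior, probability of the observed outcome).
    """
    unnorm = {}
    for func, p in prior.items():
        # Assumed likelihood: anomalies are likely only if the skill calls the bug.
        p_anomaly = p_detect if func in calls else p_false
        unnorm[func] = p * (p_anomaly if anomaly else 1.0 - p_anomaly)
    p_obs = sum(unnorm.values())
    if p_obs == 0:
        return dict(prior), 0.0
    return {func: v / p_obs for func, v in unnorm.items()}, p_obs


def expected_information_gain(prior: Dict[str, float], calls: Set[str],
                              p_detect: float = 0.9, p_false: float = 0.05) -> float:
    """Prior entropy minus the expected posterior entropy over both outcomes."""
    gain = entropy(prior)
    for anomaly in (True, False):
        posterior, p_obs = update(prior, calls, anomaly, p_detect, p_false)
        gain -= p_obs * entropy(posterior)
    return gain


def select_skill(prior: Dict[str, float], call_profiles: Dict[str, Set[str]]) -> str:
    """Pick the skill whose execution is expected to be most informative."""
    return max(call_profiles,
               key=lambda s: expected_information_gain(prior, call_profiles[s]))


# Hypothetical example: exactly one of three functions is assumed to be buggy.
prior = {"grasp_planner": 1 / 3, "ik_solver": 1 / 3, "gripper_driver": 1 / 3}
call_profiles = {
    "pick_object": {"grasp_planner", "ik_solver", "gripper_driver"},
    "move_arm": {"ik_solver"},
    "open_close_gripper": {"gripper_driver"},
}
```

In this toy setup `pick_object` has essentially zero expected information gain: it calls every function, so an anomaly cannot discriminate between them. `select_skill` therefore prefers a skill with a narrower call profile, which is the intuition behind relating sensor irregularities to function call profiles.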

Skill Learning by Autonomous Robotic Playing using Active Learning and Creativity

Jun 26, 2017

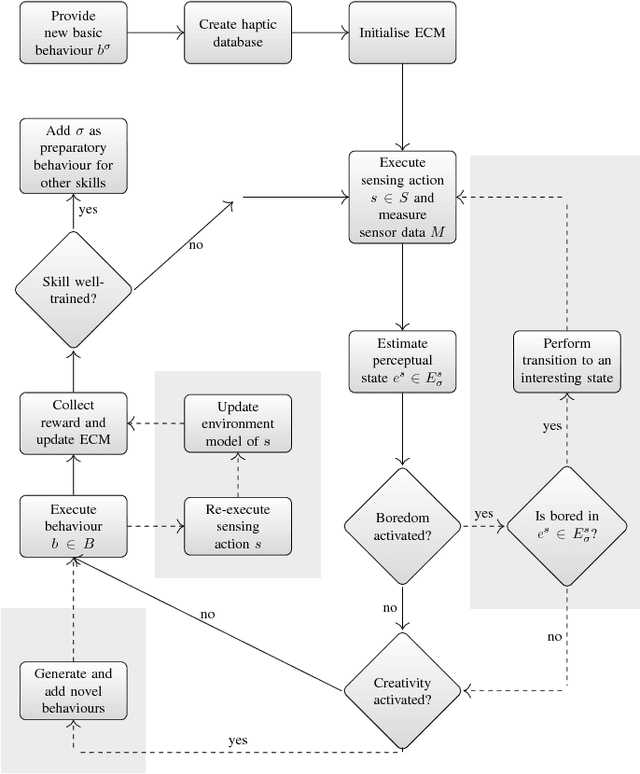

Abstract: We treat the problem of autonomous acquisition of manipulation skills where problem-solving strategies are initially available only for a narrow range of situations. We propose to extend the range of solvable situations by autonomous playing with the object. By applying previously trained skills and behaviours, the robot learns how to prepare situations for which a successful strategy is already known. The information gathered during autonomous play is additionally used to learn an environment model. This model is exploited for active learning and for the creative generation of novel preparatory behaviours. We apply our approach to a wide range of manipulation tasks, e.g. book grasping, grasping of objects of different sizes by selecting different grasping strategies, placement on shelves, and tower disassembly. We show that the creative behaviour generation mechanism enables the robot to solve previously unsolvable tasks, e.g. tower disassembly. We use success statistics gained during real-world experiments to simulate the convergence behaviour of our system. Experiments show that active learning improves the learning speed by around 9 percent in the book grasping scenario.
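To make the playing loop with active learning more concrete, here is a minimal sketch that keeps per-situation success statistics for each preparatory behaviour and decides what to try next. The selection rule is a swapped-in, standard active-learning heuristic (uncertainty sampling on a Beta posterior over success probability); the paper's environment model and selection criterion may differ. Class, method, situation, and behaviour names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, Tuple


@dataclass
class SuccessStats:
    successes: int = 0
    failures: int = 0

    def mean(self) -> float:
        # Posterior mean of Beta(1 + successes, 1 + failures), i.e. a uniform prior.
        return (self.successes + 1) / (self.successes + self.failures + 2)

    def variance(self) -> float:
        # Variance of the same Beta posterior; large when few trials were made.
        a, b = self.successes + 1, self.failures + 1
        return a * b / ((a + b) ** 2 * (a + b + 1))


class PlayingModel:
    """Hypothetical success model over (situation, preparatory behaviour) pairs."""

    def __init__(self, behaviours: Iterable[str]):
        self.behaviours = list(behaviours)
        self.stats: Dict[Tuple[str, str], SuccessStats] = {}

    def _get(self, situation: str, behaviour: str) -> SuccessStats:
        return self.stats.setdefault((situation, behaviour), SuccessStats())

    def record(self, situation: str, behaviour: str, success: bool) -> None:
        # Store the outcome of "behaviour, then the basic behaviour" in this situation.
        s = self._get(situation, behaviour)
        if success:
            s.successes += 1
        else:
            s.failures += 1

    def next_to_try(self, situation: str) -> str:
        # Active learning: explore the behaviour whose success is most uncertain.
        return max(self.behaviours, key=lambda b: self._get(situation, b).variance())

    def best(self, situation: str) -> str:
        # Exploitation: the behaviour with the highest estimated success rate.
        return max(self.behaviours, key=lambda b: self._get(situation, b).mean())


model = PlayingModel(["rotate", "push", "flip"])
for _ in range(5):
    model.record("book_flat", "rotate", True)
    model.record("book_flat", "push", False)
```

After these recorded trials, `next_to_try("book_flat")` returns the untried `flip` (highest uncertainty), while `best("book_flat")` returns `rotate`. Separating exploration from exploitation like this is one simple way an environment model gathered during play could guide which experiments the robot runs next.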
