Siew-Chong Tan

Context-Aware Transformer for 3D Point Cloud Automatic Annotation

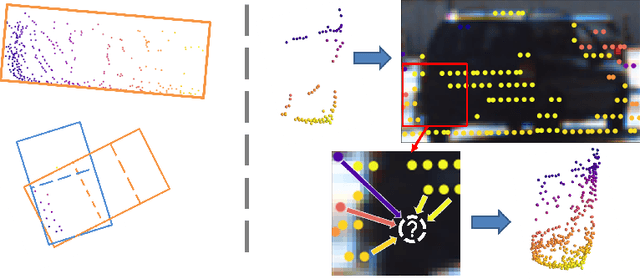

Mar 27, 2023

Abstract: 3D automatic annotation has received increased attention since manually annotating 3D point clouds is laborious. However, existing methods are usually complicated, e.g., pipelined training for 3D foreground/background segmentation, cylindrical object proposals, and point completion. Furthermore, they often overlook the inter-object feature relation that is particularly informative to hard samples for 3D annotation. To this end, we propose a simple yet effective end-to-end Context-Aware Transformer (CAT) as an automated 3D-box labeler to generate precise 3D box annotations from 2D boxes, trained with a small number of human annotations. We adopt the general encoder-decoder architecture, where the CAT encoder consists of an intra-object encoder (local) and an inter-object encoder (global), performing self-attention along the sequence and batch dimensions, respectively. The former models intra-object interactions among points, and the latter extracts feature relations among different objects, thus boosting scene-level understanding. Via local and global encoders, CAT can generate high-quality 3D box annotations with a streamlined workflow, allowing it to outperform existing state-of-the-art by up to 1.79% 3D AP on the hard task of the KITTI test set.
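The difference between attending along the sequence dimension and along the batch dimension can be illustrated with a minimal sketch. This is not the CAT implementation: it assumes a feature tensor stored as nested lists `x[b][n][d]` (object `b`, point token `n`, channel `d`), uses a single head with identity query/key/value projections, and omits residual connections and normalization. The function names `self_attention`, `intra_object`, and `inter_object` are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens):
    """Single-head self-attention with identity Q/K/V projections (an assumption)."""
    d = len(tokens[0])
    out = []
    for q in tokens:
        # Scaled dot-product score of this query against every token.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in tokens]
        w = softmax(scores)
        # Weighted sum of the value vectors (here the tokens themselves).
        out.append([sum(wi * v[j] for wi, v in zip(w, tokens)) for j in range(d)])
    return out


def intra_object(x):
    """Local encoder sketch: attend among the points of each object (sequence dim)."""
    return [self_attention(obj) for obj in x]


def inter_object(x):
    """Global encoder sketch: attend among objects at each token position (batch dim)."""
    num_objects, num_tokens = len(x), len(x[0])
    per_position = [
        self_attention([x[b][n] for b in range(num_objects)])
        for n in range(num_tokens)
    ]
    # Transpose back to the x[b][n][d] layout.
    return [[per_position[n][b] for n in range(num_tokens)] for b in range(num_objects)]
```

In this sketch, `intra_object` leaves each object's features independent of the other objects in the batch, while `inter_object` mixes information across objects, which is the property the abstract attributes to the global encoder.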

Multimodal Transformer for Automatic 3D Annotation and Object Detection

Jul 20, 2022

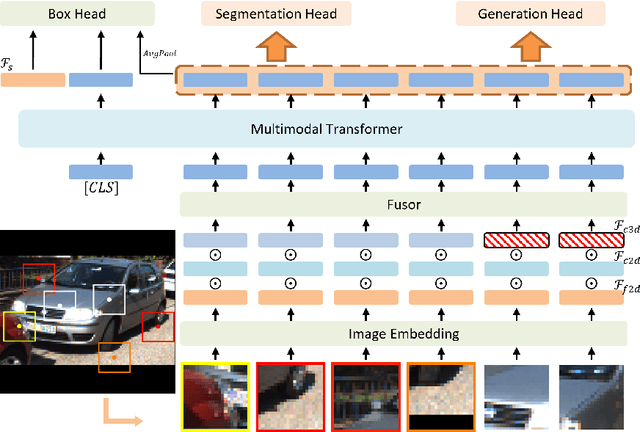

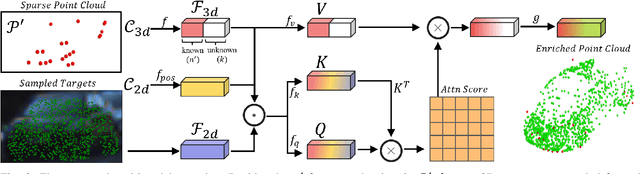

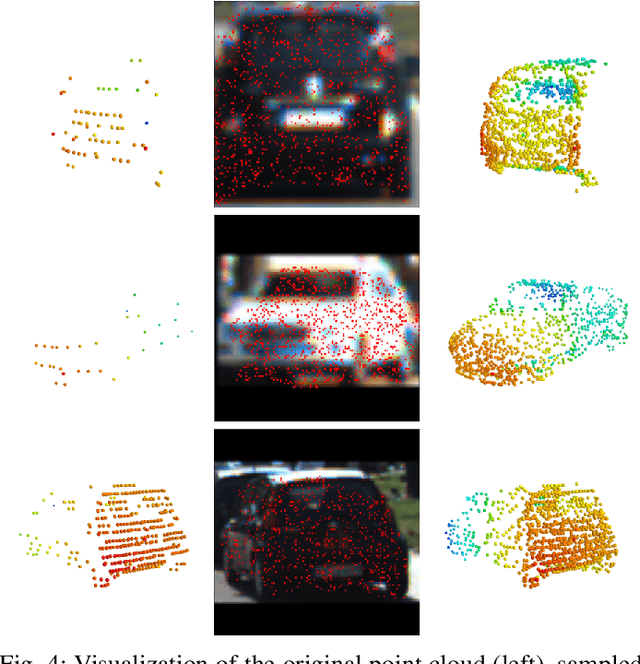

Abstract: Despite a growing number of datasets being collected for training 3D object detection models, significant human effort is still required to annotate 3D boxes on LiDAR scans. To automate the annotation and facilitate the production of various customized datasets, we propose an end-to-end multimodal transformer (MTrans) autolabeler, which leverages both LiDAR scans and images to generate precise 3D box annotations from weak 2D bounding boxes. To alleviate the pervasive sparsity problem that hinders existing autolabelers, MTrans densifies the sparse point clouds by generating new 3D points based on 2D image information. With a multi-task design, MTrans segments the foreground/background, densifies LiDAR point clouds, and regresses 3D boxes simultaneously. Experimental results verify the effectiveness of MTrans in improving the quality of the generated labels. By enriching the sparse point clouds, our method achieves 4.48% and 4.03% better 3D AP on KITTI moderate and hard samples, respectively, versus the state-of-the-art autolabeler. MTrans can also be extended to improve the accuracy of 3D object detection, resulting in a remarkable 89.45% AP on KITTI hard samples. Code is available at https://github.com/Cliu2/MTrans.

MAP-Gen: An Automated 3D-Box Annotation Flow with Multimodal Attention Point Generator

Mar 29, 2022

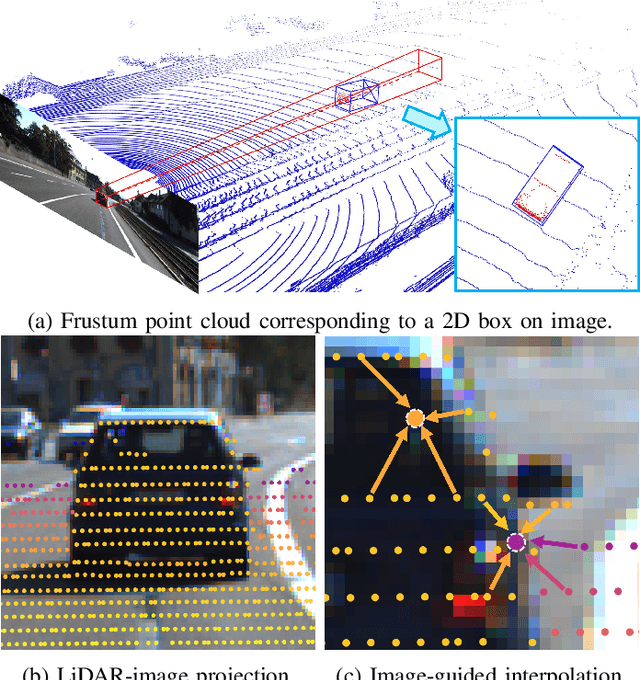

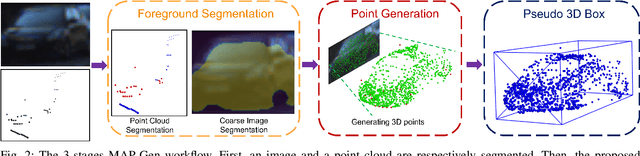

Abstract: Manually annotating 3D point clouds is laborious and costly, limiting the training data preparation for deep learning in real-world object detection. While a few previous studies tried to automatically generate 3D bounding boxes from weak labels such as 2D boxes, the quality is sub-optimal compared to human annotators. This work proposes a novel autolabeler, called multimodal attention point generator (MAP-Gen), that generates high-quality 3D labels from weak 2D boxes. It leverages dense image information to tackle the sparsity issue of 3D point clouds, thus improving label quality. For each 2D pixel, MAP-Gen predicts its corresponding 3D coordinates by referencing context points based on their 2D semantic or geometric relationships. The generated 3D points densify the original sparse point clouds, followed by an encoder to regress 3D bounding boxes. Using MAP-Gen, object detection networks that are weakly supervised by 2D boxes can achieve 94-99% of the performance of those fully supervised by 3D annotations. We hope that the newly proposed MAP-Gen autolabeling flow can shed new light on utilizing multimodal information for enriching sparse point clouds.
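The idea of predicting a pixel's 3D coordinates by referencing context points can be illustrated with a toy sketch. It is not the MAP-Gen model: the learned multimodal attention is replaced here by a hand-crafted softmax over negative squared 2D pixel distances, so only the geometric relationship is used. The function `generate_point`, its `temperature` parameter, and the `((u, v), (x, y, z))` layout of the context points are all assumptions made for illustration.

```python
import math


def generate_point(query_uv, context, temperature=1.0):
    """Estimate 3D coordinates for a pixel as an attention-weighted mix of context points.

    context is a list of ((u, v), (x, y, z)) pairs: a LiDAR point's image
    projection and its 3D position. Weights come from a softmax over negative
    squared pixel distance, a stand-in for learned attention (an assumption).
    """
    scores = [
        -((query_uv[0] - u) ** 2 + (query_uv[1] - v) ** 2) / temperature
        for (u, v), _ in context
    ]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Weighted sum of the context points' 3D coordinates, one axis at a time.
    return tuple(
        sum(w * xyz[axis] for w, (_, xyz) in zip(weights, context))
        for axis in range(3)
    )


# Two context points with known 3D positions; the query pixel lies midway.
context = [((0, 0), (0.0, 0.0, 10.0)), ((10, 0), (1.0, 0.0, 12.0))]
midpoint = generate_point((5, 0), context)
```

Because the weights form a convex combination, every generated point lies between the context points it references; a learned attention module would instead derive the weights from image and point features.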
