Shunji Yang

Deep Reinforcement Learning-Assisted Federated Learning for Robust Short-term Utility Demand Forecasting in Electricity Wholesale Markets

Jun 23, 2022

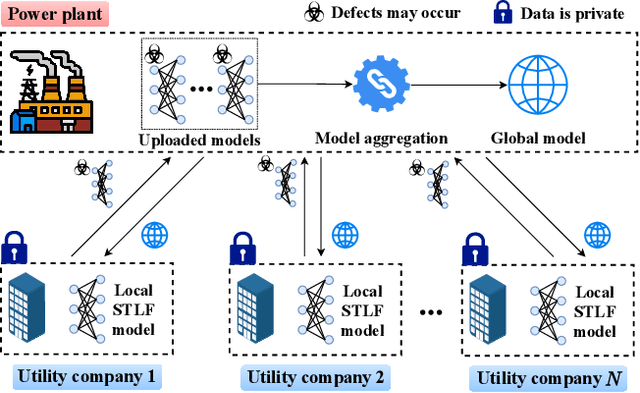

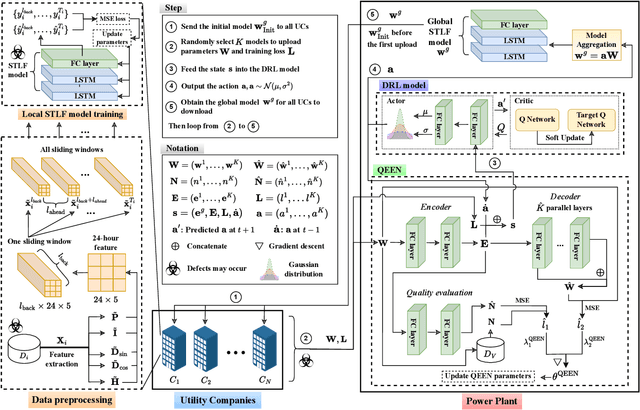

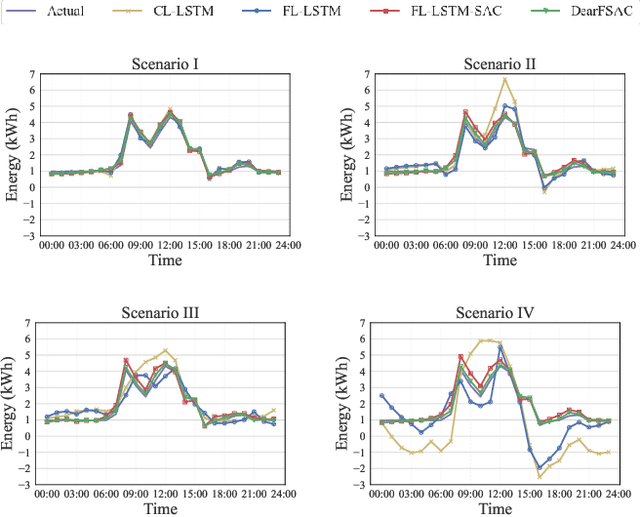

Abstract:Short-term load forecasting (STLF) plays a significant role in the operation of electricity trading markets. Considering the growing concern of data privacy, federated learning (FL) is increasingly adopted to train STLF models for utility companies (UCs) in recent research. Inspiringly, in wholesale markets, as it is not realistic for power plants (PPs) to access UCs' data directly, FL is definitely a feasible solution of obtaining an accurate STLF model for PPs. However, due to FL's distributed nature and intense competition among UCs, defects increasingly occur and lead to poor performance of the STLF model, indicating that simply adopting FL is not enough. In this paper, we propose a DRL-assisted FL approach, DEfect-AwaRe federated soft actor-critic (DearFSAC), to robustly train an accurate STLF model for PPs to forecast precise short-term utility electricity demand. Firstly. we design a STLF model based on long short-term memory (LSTM) using just historical load data and time data. Furthermore, considering the uncertainty of defects occurrence, a deep reinforcement learning (DRL) algorithm is adopted to assist FL by alleviating model degradation caused by defects. In addition, for faster convergence of FL training, an auto-encoder is designed for both dimension reduction and quality evaluation of uploaded models. In the simulations, we validate our approach on real data of Helsinki's UCs in 2019. The results show that DearFSAC outperforms all the other approaches no matter if defects occur or not.

DearFSAC: An Approach to Optimizing Unreliable Federated Learning via Deep Reinforcement Learning

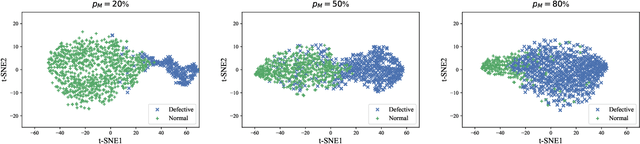

Jan 30, 2022Abstract:In federated learning (FL), model aggregation has been widely adopted for data privacy. In recent years, assigning different weights to local models has been used to alleviate the FL performance degradation caused by differences between local datasets. However, when various defects make the FL process unreliable, most existing FL approaches expose weak robustness. In this paper, we propose the DEfect-AwaRe federated soft actor-critic (DearFSAC) to dynamically assign weights to local models to improve the robustness of FL. The deep reinforcement learning algorithm soft actor-critic is adopted for near-optimal performance and stable convergence. Besides, an auto-encoder is trained to output low-dimensional embedding vectors that are further utilized to evaluate model quality. In the experiments, DearFSAC outperforms three existing approaches on four datasets for both independent and identically distributed (IID) and non-IID settings under defective scenarios.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge