Shubham Trehan

FSP-DETR: Few-Shot Prototypical Parasitic Ova Detection

Oct 10, 2025

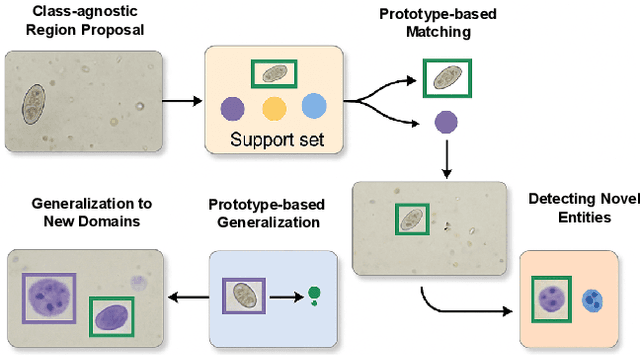

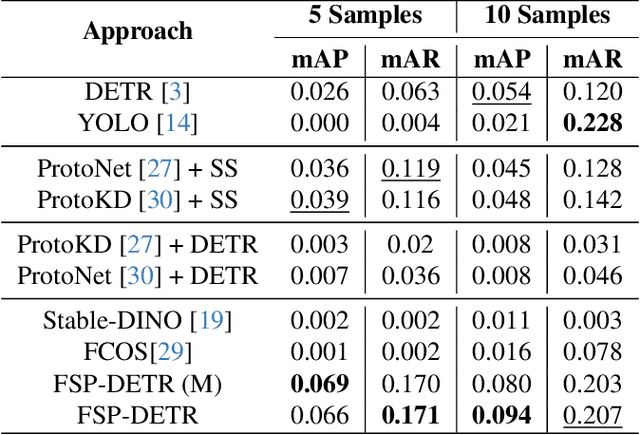

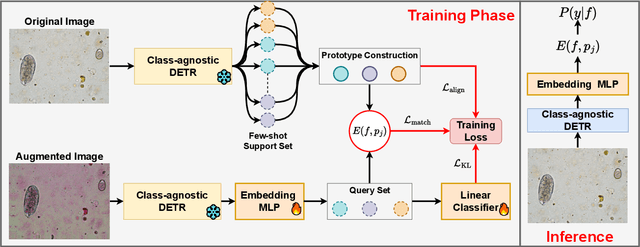

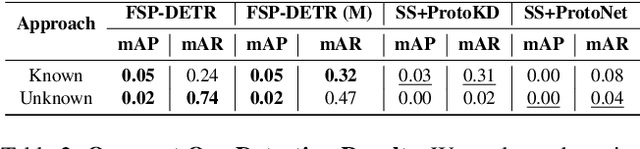

Abstract: Object detection in biomedical settings is fundamentally constrained by the scarcity of labeled data and the frequent emergence of novel or rare categories. We present FSP-DETR, a unified detection framework that enables robust few-shot detection, open-set recognition, and generalization to unseen biomedical tasks within a single model. Built upon a class-agnostic DETR backbone, our approach constructs class prototypes from original support images and learns an embedding space using augmented views and a lightweight transformer decoder. Training jointly optimizes a prototype matching loss, an alignment-based separation loss, and a KL divergence regularization to improve discriminative feature learning and calibration under scarce supervision. Unlike prior work that tackles these tasks in isolation, FSP-DETR enables inference-time flexibility to support unseen class recognition, background rejection, and cross-task adaptation without retraining. We also introduce a new ova species detection benchmark with 20 parasite classes and establish standardized evaluation protocols. Extensive experiments across ova, blood cell, and malaria detection tasks demonstrate that FSP-DETR significantly outperforms prior few-shot and prototype-based detectors, especially in low-shot and open-set scenarios.
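
To make the prototype idea in the abstract above concrete, here is a minimal sketch of generic prototype matching with background rejection. It is not the FSP-DETR implementation: the function names, the use of cosine similarity, the mean-pooled prototypes, and the `reject_below` threshold are all illustrative assumptions, and the embeddings are stand-ins for whatever a detector would produce per region.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-12):
    """Scale vectors to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def build_prototypes(support_embeddings, support_labels):
    """Average the support embeddings of each class into one prototype.

    Mean pooling is an assumption borrowed from prototypical networks;
    the abstract does not say how prototypes are aggregated.
    """
    classes = np.unique(support_labels)
    prototypes = np.stack(
        [support_embeddings[support_labels == c].mean(axis=0) for c in classes]
    )
    return classes, l2_normalize(prototypes)


def match_queries(query_embeddings, classes, prototypes, reject_below=0.5):
    """Assign each query to its most similar prototype, or -1 if none is close.

    `reject_below` is a hypothetical similarity threshold standing in for
    background / unknown-class rejection.
    """
    sims = l2_normalize(query_embeddings) @ prototypes.T
    best = sims.argmax(axis=1)
    best_sim = sims.max(axis=1)
    labels = np.where(best_sim >= reject_below, classes[best], -1)
    return labels, best_sim


# Toy 2-D embeddings: two support examples for each of two classes.
support = np.array([[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]])
support_labels = np.array([0, 0, 1, 1])
classes, prototypes = build_prototypes(support, support_labels)

queries = np.array([[0.9, 0.1], [0.1, 0.8], [-1.0, -1.0]])
labels, scores = match_queries(queries, classes, prototypes)
print(labels)
```

Because new classes only require new prototypes, a scheme like this can accept unseen categories at inference time without retraining the embedding model, which is the kind of flexibility the abstract describes.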

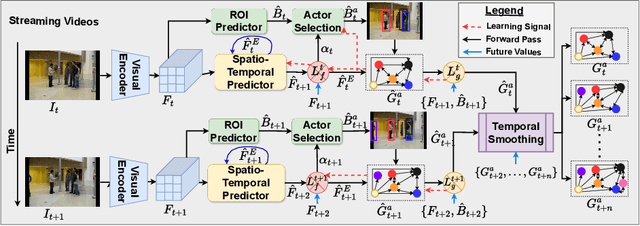

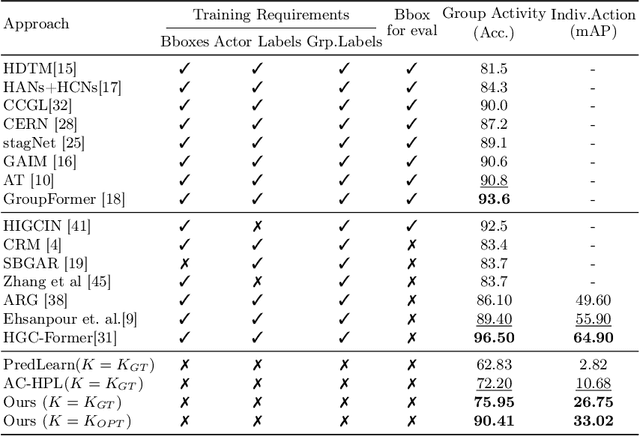

Self-supervised Multi-actor Social Activity Understanding in Streaming Videos

Jun 20, 2024

Abstract: This work addresses the problem of Social Activity Recognition (SAR), a critical component in real-world tasks like surveillance and assistive robotics. Unlike traditional event understanding approaches, SAR necessitates modeling individual actors' appearance and motions and contextualizing them within their social interactions. Traditional action localization methods fall short due to their single-actor, single-action assumption. Previous SAR research has relied heavily on densely annotated data, but privacy concerns limit their applicability in real-world settings. In this work, we propose a self-supervised approach based on multi-actor predictive learning for SAR in streaming videos. Using a visual-semantic graph structure, we model social interactions, enabling relational reasoning for robust performance with minimal labeled data. The proposed framework achieves competitive performance on standard group activity recognition benchmarks. Evaluation on three publicly available action localization benchmarks demonstrates its generalizability to arbitrary action localization.

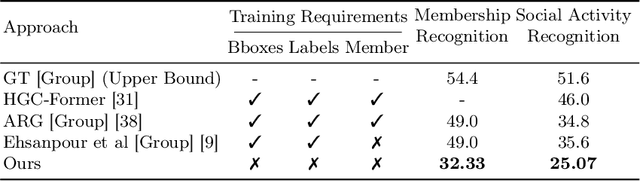

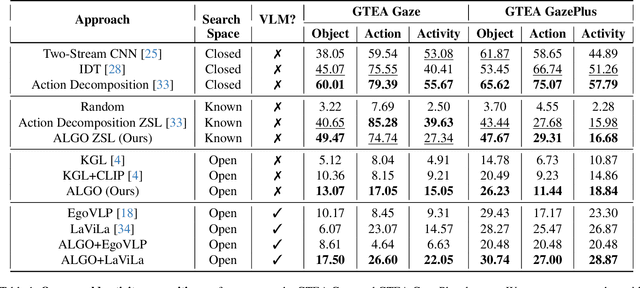

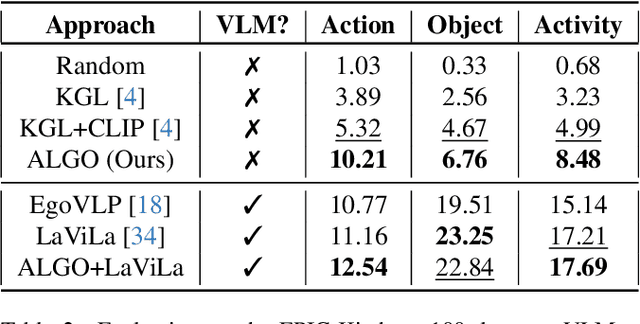

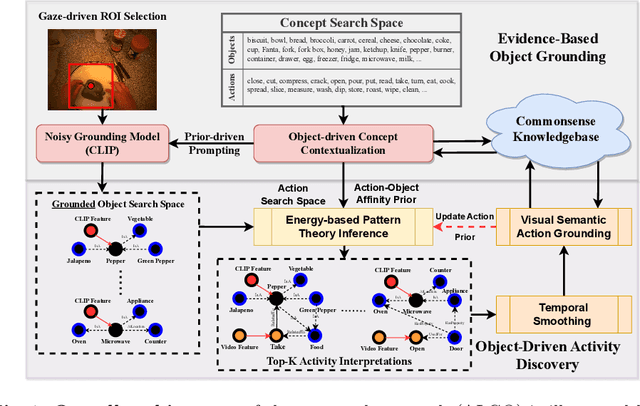

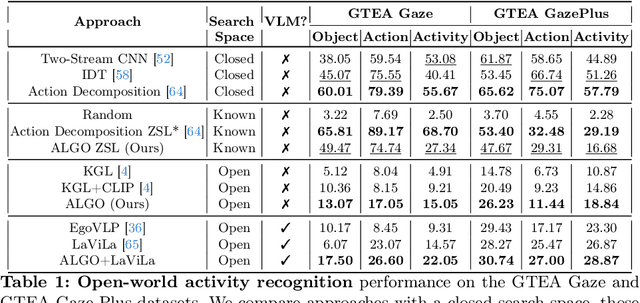

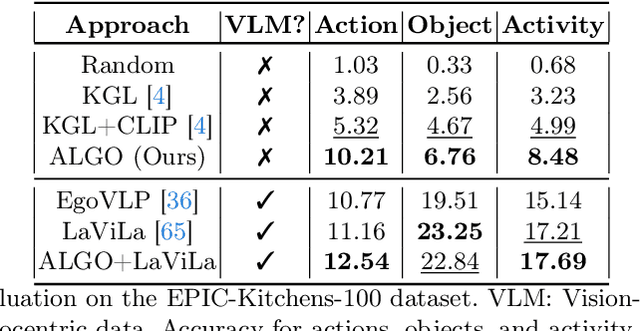

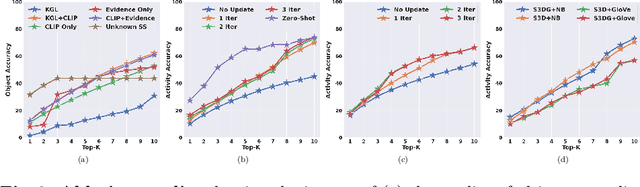

ALGO: Object-Grounded Visual Commonsense Reasoning for Open-World Egocentric Action Recognition

Jun 09, 2024

Abstract: Learning to infer labels in an open world, i.e., in an environment where the target "labels" are unknown, is an important characteristic for achieving autonomy. Foundation models pre-trained on enormous amounts of data have shown remarkable generalization skills through prompting, particularly in zero-shot inference. However, their performance is limited by the correctness of the target label's search space. In an open world, this target search space can be unknown or exceptionally large, which severely restricts the performance of such models. To tackle this challenging problem, we propose a neuro-symbolic framework called ALGO - Action Learning with Grounded Object recognition that uses symbolic knowledge stored in large-scale knowledge bases to infer activities in egocentric videos with limited supervision using two steps. First, we propose a neuro-symbolic prompting approach that uses object-centric vision-language models as a noisy oracle to ground objects in the video through evidence-based reasoning. Second, driven by prior commonsense knowledge, we discover plausible activities through an energy-based symbolic pattern theory framework and learn to ground knowledge-based action (verb) concepts in the video. Extensive experiments on four publicly available datasets (EPIC-Kitchens, GTEA Gaze, GTEA Gaze Plus) demonstrate its performance on open-world activity inference.

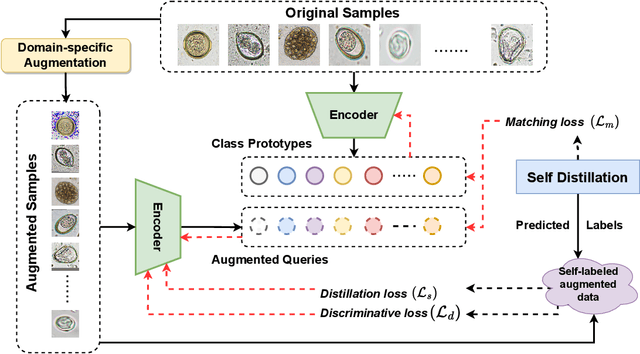

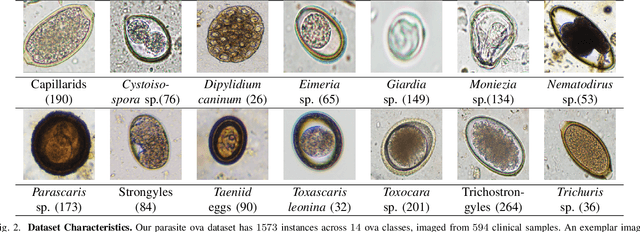

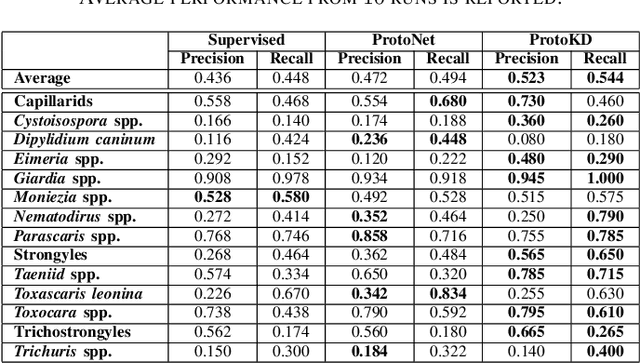

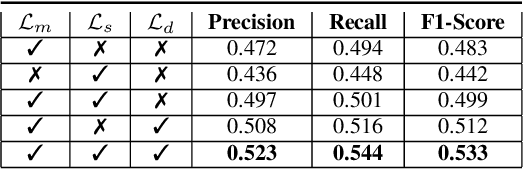

ProtoKD: Learning from Extremely Scarce Data for Parasite Ova Recognition

Sep 18, 2023

Abstract: Developing reliable computational frameworks for early parasite detection, particularly at the ova (or egg) stage, is crucial for advancing healthcare and effectively managing potential public health crises. While deep learning has significantly assisted human workers in various tasks, its application in diagnostics has been constrained by the need for extensive datasets. The ability to learn from an extremely scarce training dataset, i.e., when fewer than 5 examples per class are present, is essential for scaling deep learning models in biomedical applications where large-scale data collection and annotation can be expensive or impossible (as in the case of novel or unknown infectious agents). In this study, we introduce ProtoKD, one of the first approaches to tackle the problem of multi-class parasitic ova recognition using extremely scarce data. Combining the principles of prototypical networks and self-distillation, we can learn robust representations from only one sample per class. Furthermore, we establish a new benchmark to drive research in this critical direction and validate that the proposed ProtoKD framework achieves state-of-the-art performance. Additionally, we evaluate the framework's generalizability to other downstream tasks by assessing its performance on a large-scale taxonomic profiling task based on metagenomes sequenced from real-world clinical data.

Discovering Novel Actions in an Open World with Object-Grounded Visual Commonsense Reasoning

May 26, 2023

Abstract: Learning to infer labels in an open world, i.e., in an environment where the target "labels" are unknown, is an important characteristic for achieving autonomy. Foundation models pre-trained on enormous amounts of data have shown remarkable generalization skills through prompting, particularly in zero-shot inference. However, their performance is restricted to the correctness of the target label's search space. In an open world where these labels are unknown, the search space can be exceptionally large. It can require reasoning over several combinations of elementary concepts to arrive at an inference, which severely restricts the performance of such models. To tackle this challenging problem, we propose a neuro-symbolic framework called ALGO - novel Action Learning with Grounded Object recognition that can use symbolic knowledge stored in large-scale knowledge bases to infer activities (verb-noun combinations) in egocentric videos with limited supervision using two steps. First, we propose a novel neuro-symbolic prompting approach that uses object-centric vision-language foundation models as a noisy oracle to ground objects in the video through evidence-based reasoning. Second, driven by prior commonsense knowledge, we discover plausible activities through an energy-based symbolic pattern theory framework and learn to ground knowledge-based action (verb) concepts in the video. Extensive experiments on two publicly available datasets (GTEA Gaze and GTEA Gaze Plus) demonstrate its performance on open-world activity inference and its generalization to unseen actions in an unknown search space. We show that ALGO can be extended to zero-shot settings and demonstrate its competitive performance to multimodal foundation models.

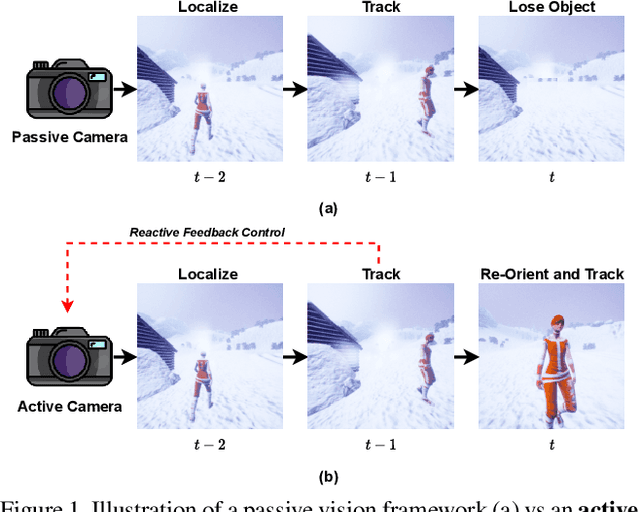

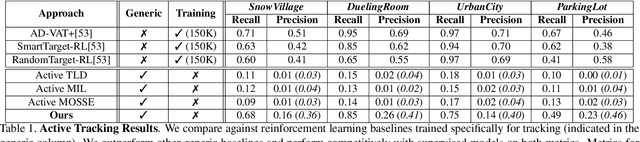

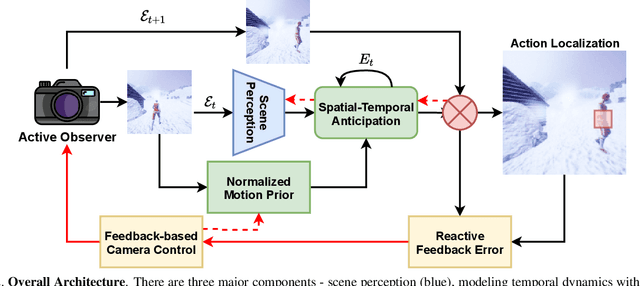

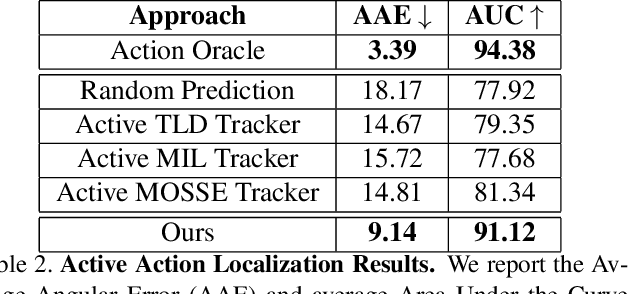

Towards Active Vision for Action Localization with Reactive Control and Predictive Learning

Nov 09, 2021

Abstract: Visual event perception tasks such as action localization have primarily focused on supervised learning settings under a static observer, i.e., the camera is static and cannot be controlled by an algorithm. They are often restricted by the quality, quantity, and diversity of annotated training data and do not often generalize to out-of-domain samples. In this work, we tackle the problem of active action localization where the goal is to localize an action while controlling the geometric and physical parameters of an active camera to keep the action in the field of view without training data. We formulate an energy-based mechanism that combines predictive learning and reactive control to perform active action localization without rewards, which can be sparse or non-existent in real-world environments. We perform extensive experiments in both simulated and real-world environments on two tasks - active object tracking and active action localization. We demonstrate that the proposed approach can generalize to different tasks and environments in a streaming fashion, without explicit rewards or training. We show that the proposed approach outperforms unsupervised baselines and obtains competitive performance compared to those trained with reinforcement learning.
