Shrisha Rao

The Limits of AI Explainability: An Algorithmic Information Theory Approach

Apr 29, 2025

Abstract:This paper establishes a theoretical foundation for understanding the fundamental limits of AI explainability through algorithmic information theory. We formalize explainability as the approximation of complex models by simpler ones, quantifying both approximation error and explanation complexity using Kolmogorov complexity. Our key theoretical contributions include: (1) a complexity gap theorem proving that any explanation significantly simpler than the original model must differ from it on some inputs; (2) precise bounds showing that explanation complexity grows exponentially with input dimension but polynomially with error tolerance for Lipschitz functions; and (3) a characterization of the gap between local and global explainability, demonstrating that local explanations can be significantly simpler while maintaining accuracy in relevant regions. We further establish a regulatory impossibility theorem proving that no governance framework can simultaneously pursue unrestricted AI capabilities, human-interpretable explanations, and negligible error. These results highlight considerations likely to be relevant to the design, evaluation, and oversight of explainable AI systems.
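
As a rough illustration of why a bound of the kind in contribution (2) is plausible, here is a standard grid-covering sketch. It is not the paper's statement or proof; the domain $[0,1]^d$, the sup-norm Lipschitz constant $L$, the grid side $h$, and the piecewise-constant approximant $g_h$ are all assumptions introduced here.

```latex
Let $f : [0,1]^d \to \mathbb{R}$ satisfy
\[
  |f(x) - f(y)| \le L \,\|x - y\|_\infty .
\]
Partition $[0,1]^d$ into cubes of side at most $h$ and let $g_h$ equal the
value of $f$ at the center of each cube. Every point lies within $h/2$ of
its cube's center in the sup norm, so
\[
  \sup_{x \in [0,1]^d} |f(x) - g_h(x)| \le \frac{L h}{2} .
\]
Choosing $h = 2\varepsilon / L$ gives error at most $\varepsilon$ with
\[
  N(\varepsilon) = \left\lceil \frac{L}{2\varepsilon} \right\rceil^{d}
\]
cubes: exponential in the dimension $d$, polynomial in $1/\varepsilon$ for
fixed $d$.
```

The number of cubes only bounds the size of one particular lookup-table explanation from above; the paper's bounds are stated in terms of Kolmogorov complexity.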

Analyzing Value Functions of States in Parametric Markov Chains

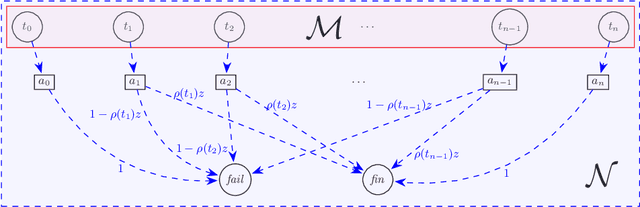

Apr 23, 2025

Abstract:Parametric Markov chains (pMC) are used to model probabilistic systems with unknown or partially known probabilities. Although (universal) pMC verification for reachability properties is known to be coETR-complete, there have been efforts to approach it using potentially easier-to-check properties such as asking whether the pMC is monotonic in certain parameters. In this paper, we first reduce monotonicity to asking whether the reachability probability from a given state is never less than that of another given state. Recent results for the latter property imply an efficient algorithm to collapse same-value equivalence classes, which in turn preserves verification results and monotonicity. We implement our algorithm to collapse "trivial" equivalence classes in the pMC and show empirical evidence for the following: First, the collapse gives reductions in size for some existing benchmarks and significant reductions on some custom benchmarks; Second, the collapse speeds up existing algorithms to check monotonicity and parameter lifting, and hence can be used as a fast pre-processing step in practice.

Deontic Temporal Logic for Formal Verification of AI Ethics

Jan 10, 2025

Abstract:Ensuring ethical behavior in Artificial Intelligence (AI) systems amidst their increasing ubiquity and influence is a major concern the world over. The use of formal methods in AI ethics is a potentially crucial approach for specifying and verifying the ethical behavior of AI systems. Contributing to this goal, this paper proposes a formalization based on deontic logic to define and evaluate the ethical behavior of AI systems, focusing on system-level specifications. It introduces axioms and theorems to capture ethical requirements related to fairness and explainability. The formalization incorporates temporal operators to reason about the ethical behavior of AI systems over time. The authors evaluate the effectiveness of this formalization by assessing the ethics of the real-world COMPAS and loan prediction AI systems. Various ethical properties of the COMPAS and loan prediction systems are encoded using deontic logical formulas, allowing the use of an automated theorem prover to verify whether these systems satisfy the defined properties. The formal verification reveals that both systems fail to fulfill certain key ethical properties related to fairness and non-discrimination, demonstrating the effectiveness of the proposed formalization in identifying potential ethical issues in real-world AI applications.

Dynamic Pricing for Electric Vehicle Charging

Aug 26, 2024

Abstract:Dynamic pricing is a promising strategy to address the challenges of smart charging, as traditional time-of-use (ToU) rates and stationary pricing (SP) do not dynamically react to changes in operating conditions, reducing revenue for charging station (CS) vendors and affecting grid stability. Previous studies evaluated single objectives or linear combinations of objectives for EV CS pricing solutions, simplifying trade-offs and preferences among objectives. We develop a novel formulation for the dynamic pricing problem by addressing multiple conflicting objectives efficiently instead of solely focusing on one objective or metric, as in earlier works. We find optimal trade-offs or Pareto solutions efficiently using Non-dominated Sorting Genetic Algorithms (NSGA) II and NSGA III. A dynamic pricing model quantifies the relationship between demand and price while simultaneously solving multiple conflicting objectives, such as revenue, quality of service (QoS), and peak-to-average ratios (PAR). No single method comprehensively addresses all of these aspects of dynamic pricing. We therefore present a three-part dynamic pricing approach using a Bayesian model, multi-objective optimization, and multi-criteria decision-making (MCDM) using pseudo-weight vectors. To address the research gap in CS pricing, our method selects solutions using revenue, QoS, and PAR metrics simultaneously. We validate our approach on real-world data from two California charging sites.
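
The notion of a Pareto (non-dominated) solution used above can be illustrated with a minimal sketch. This is not the authors' NSGA-II/III implementation; it is a brute-force filter over a handful of made-up candidate pricing outcomes, and the names `dominates`, `pareto_front`, and `to_minimization`, as well as all numbers, are hypothetical. It assumes revenue and QoS are maximized and PAR is minimized.

```python
from typing import List, Tuple

Outcome = Tuple[float, float, float]  # (revenue, qos, par), hypothetical units


def to_minimization(o: Outcome) -> Outcome:
    """Negate revenue and QoS so that every objective is minimized."""
    revenue, qos, par = o
    return (-revenue, -qos, par)


def dominates(a: Outcome, b: Outcome) -> bool:
    """True if a is no worse than b in every objective and strictly better in one."""
    ma, mb = to_minimization(a), to_minimization(b)
    no_worse = all(x <= y for x, y in zip(ma, mb))
    strictly_better = any(x < y for x, y in zip(ma, mb))
    return no_worse and strictly_better


def pareto_front(outcomes: List[Outcome]) -> List[Outcome]:
    """Keep the outcomes that no other outcome dominates (O(n^2) brute force)."""
    return [p for p in outcomes if not any(dominates(q, p) for q in outcomes)]


# Made-up candidate outcomes for four pricing schedules.
candidates = [
    (100.0, 0.90, 1.5),
    (120.0, 0.80, 1.7),
    (90.0, 0.85, 1.6),   # worse than the first candidate on all three metrics
    (120.0, 0.80, 1.9),  # same revenue and QoS as the second, higher PAR
]
front = pareto_front(candidates)
```

Here `front` keeps the first two candidates, which trade revenue against QoS and PAR. A decision-making step, such as the pseudo-weight MCDM the abstract mentions, would then choose one point from the front.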

Near-optimal Differentially Private Client Selection in Federated Settings

Oct 13, 2023

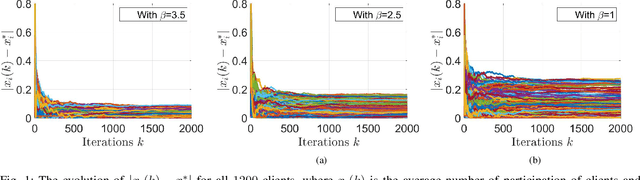

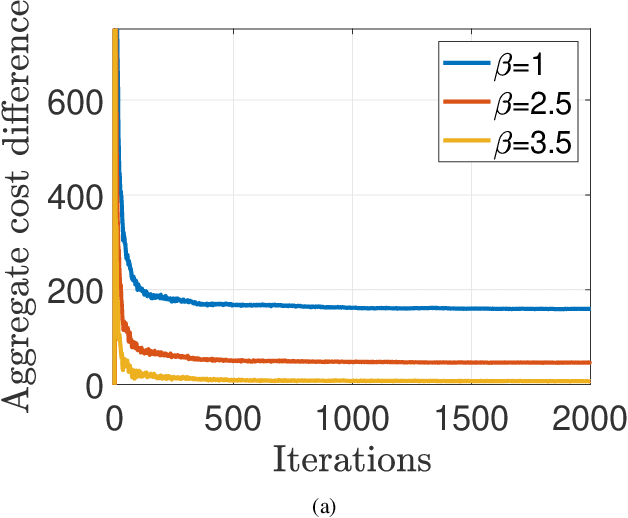

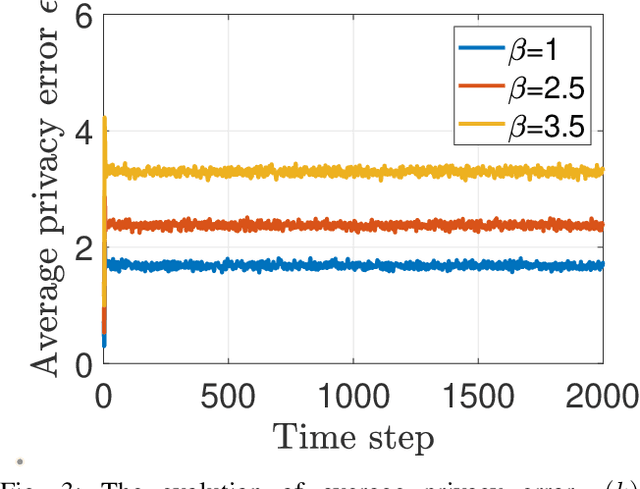

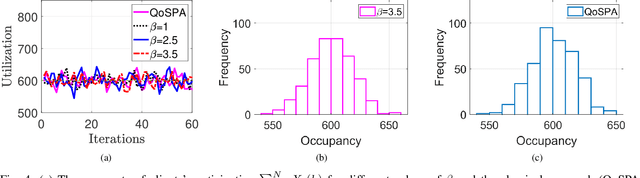

Abstract:We develop an iterative differentially private algorithm for client selection in federated settings. We consider a federated network wherein clients coordinate with a central server to complete a task; however, the clients decide whether to participate or not at a time step based on their preferences -- local computation and probabilistic intent. The algorithm does not require client-to-client information exchange. The developed algorithm provides near-optimal values to the clients over long-term average participation with a certain differential privacy guarantee. Finally, we present the experimental results to check the algorithm's efficacy.

3HAN: A Deep Neural Network for Fake News Detection

Jun 21, 2023

Abstract:The rapid spread of fake news is a serious problem calling for AI solutions. We employ a deep-learning-based automated detector through a three-level hierarchical attention network (3HAN) for fast, accurate detection of fake news. 3HAN has three levels, one each for words, sentences, and the headline, and constructs a news vector, an effective representation of an input news article, by processing the article in a hierarchical bottom-up manner. The headline is known to be a distinguishing feature of fake news, and furthermore, relatively few words and sentences in an article are more important than the rest. 3HAN gives differential importance to parts of an article on account of its three layers of attention. By experiments on a large real-world data set, we observe the effectiveness of 3HAN with an accuracy of 96.77%. Unlike some other deep learning models, 3HAN provides an understandable output through the attention weights given to different parts of an article, which can be visualized through a heatmap to enable further manual fact checking.

Graph-Based Reductions for Parametric and Weighted MDPs

May 09, 2023

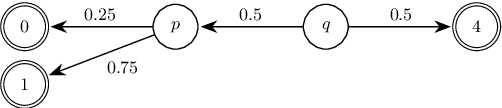

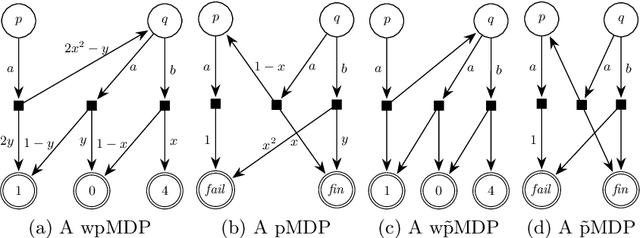

Abstract:We study the complexity of reductions for weighted reachability in parametric Markov decision processes. That is, we say a state p is never worse than q if for all valuations of the polynomial indeterminates it is the case that the maximal expected weight that can be reached from p is greater than the same value from q. In terms of computational complexity, we establish that determining whether p is never worse than q is coETR-complete. On the positive side, we give a polynomial-time algorithm to compute the equivalence classes of the order we study for Markov chains. Additionally, we describe and implement two inference rules to under-approximate the never-worse relation and empirically show that it can be used as an efficient preprocessing step for the analysis of large Markov decision processes.

A Robust Classification Framework for Byzantine-Resilient Stochastic Gradient Descent

Jan 16, 2023

Abstract:This paper proposes a Robust Gradient Classification Framework (RGCF) for Byzantine fault tolerance in distributed stochastic gradient descent. The framework consists of a pattern recognition filter which we train to be able to classify individual gradients as Byzantine by using their direction alone. This filter is robust to an arbitrary number of Byzantine workers for convex as well as non-convex optimisation settings, which is a significant improvement on the prior work that is robust to Byzantine faults only when up to 50% of the workers are Byzantine. This solution does not require an estimate of the number of Byzantine workers; its running time is not dependent on the number of workers and can scale up to training instances with a large number of workers without a loss in performance. We validate our solution by training convolutional neural networks on the MNIST dataset in the presence of Byzantine workers.

Bio-inspired Rhythmic Locomotion in a Six-Legged Robot

Jul 27, 2021

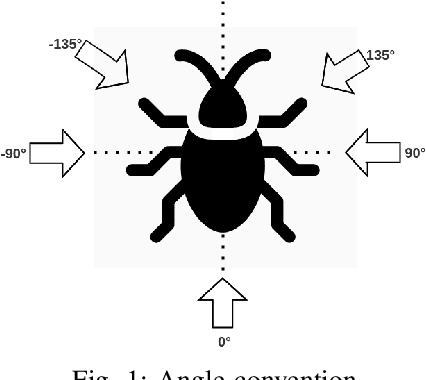

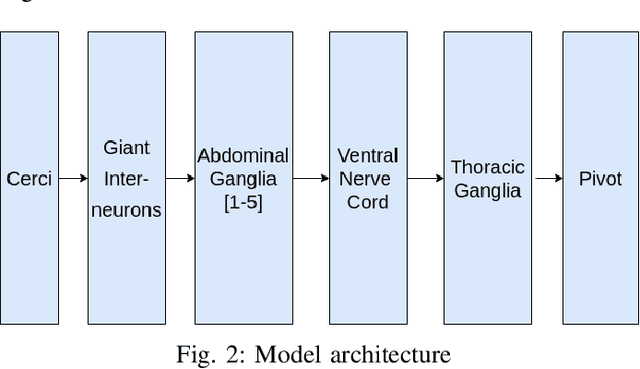

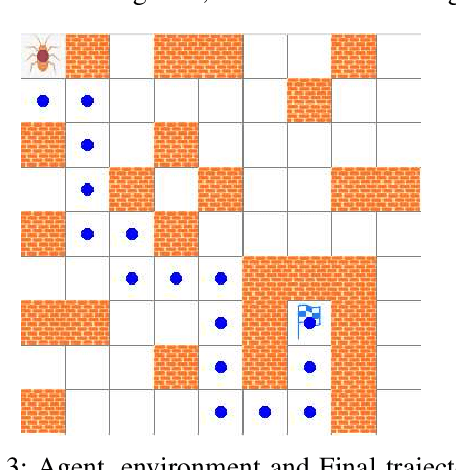

Abstract:Developing a framework for the locomotion of a six-legged robot or a hexapod is a complex task that has extensive hardware and computational requirements. In this paper, we present a bio-inspired framework for the locomotion of a hexapod. Our locomotion model draws inspiration from the structure of a cockroach, with its fairly simple central nervous system, and results in our model being computationally inexpensive with simpler control mechanisms. We consider the limb morphology for a hexapod, the corresponding central pattern generators for its limbs, and the inter-limb coordination required to generate appropriate patterns in its limbs. We also designed two experiments to validate our locomotion model. Our first experiment models the predator-prey dynamics between a cockroach and its predator. Our second experiment makes use of a reinforcement learning-based algorithm, putting forward a realization of our locomotion model. These experiments suggest that this model will help realize practical hexapod robot designs.

Modeling Influencer Marketing Campaigns In Social Networks

Jun 03, 2021

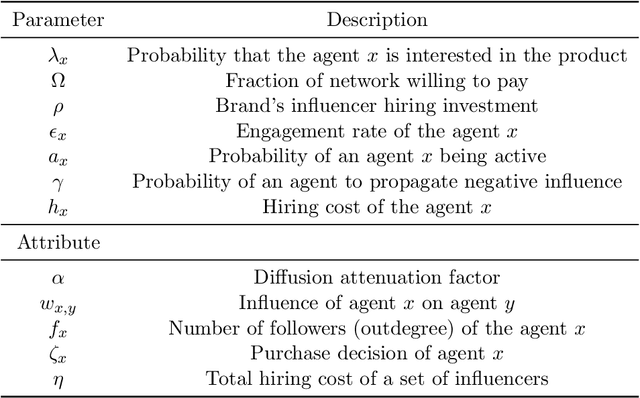

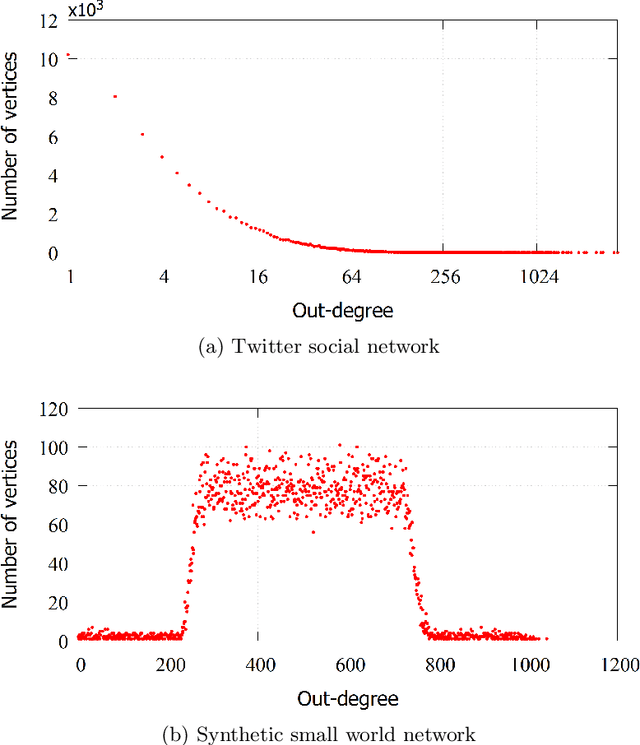

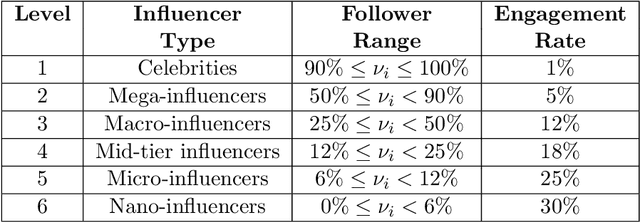

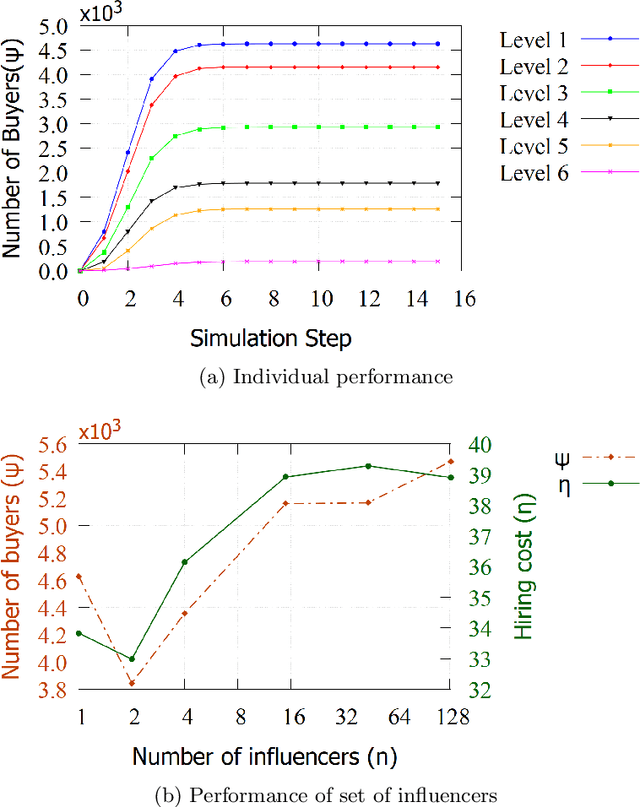

Abstract:In the present day, more than 3.8 billion people around the world actively use social media. The effectiveness of social media in facilitating quick and easy sharing of information has attracted brands and advertisers who wish to use the platform to market products via the influencers in the network. Influencers, owing to their massive popularity, provide a huge potential customer base generating higher returns on investment in a very short period. However, it is not straightforward to decide which influencers should be selected for an advertising campaign that can generate maximum returns with minimum investment. In this work, we present an agent-based model (ABM) that can simulate the dynamics of influencer advertising campaigns in a variety of scenarios and can help to discover the best influencer marketing strategy. Our system is a probabilistic graph-based model that incorporates real-world factors such as customers' interest in a product, customer behavior, the willingness to pay, a brand's investment cap, influencers' engagement with influence diffusion, and the nature of the product being advertised, viz. luxury and non-luxury.
