Shreeyash Gowaikar

COSMIR: Chain Orchestrated Structured Memory for Iterative Reasoning over Long Context

Oct 06, 2025

Abstract: Reasoning over very long inputs remains difficult for large language models (LLMs). Common workarounds either shrink the input via retrieval (risking missed evidence), enlarge the context window (straining selectivity), or stage multiple agents to read in pieces. In staged pipelines (e.g., Chain of Agents, CoA), free-form summaries passed between agents can discard crucial details and amplify early mistakes. We introduce COSMIR (Chain Orchestrated Structured Memory for Iterative Reasoning), a chain-style framework that replaces ad hoc messages with a structured memory. A Planner agent first turns a user query into concrete, checkable sub-questions. Worker agents then process chunks via a fixed micro-cycle (Extract, Infer, Refine), writing all updates to the shared memory. A Manager agent then Synthesizes the final answer directly from the memory. This preserves step-wise read-then-reason benefits while changing both the communication medium (structured memory) and the worker procedure (fixed micro-cycle), yielding higher faithfulness, better long-range aggregation, and auditability. On long-context QA from the HELMET suite, COSMIR reduces propagation-stage information loss and improves accuracy over a CoA baseline.
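The control flow described in this abstract (plan, then a per-chunk Extract, Infer, Refine cycle writing to shared memory, then synthesis from memory) can be illustrated with a toy sketch. Everything below is an assumption made for illustration: the `Memory` layout, the function names, and the keyword-matching stand-ins for what would be LLM calls are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Memory:
    """Hypothetical structured memory: one slot per sub-question."""
    evidence: dict[str, list[str]] = field(default_factory=dict)
    inferences: dict[str, str] = field(default_factory=dict)


def tokens(text: str) -> set[str]:
    return {w.strip(".,?!").lower() for w in text.split()}


def keywords(sub_question: str) -> set[str]:
    # Crude stand-in for query understanding: keep longer words only.
    return {w for w in tokens(sub_question) if len(w) > 3}


def plan(query: str) -> list[str]:
    # Toy Planner: split on " and ". A real Planner would be an LLM call.
    return [part.strip() for part in query.split(" and ") if part.strip()]


def worker_step(chunk: str, sub_questions: list[str], memory: Memory) -> None:
    """One fixed micro-cycle over a single chunk."""
    sentences = [s.strip() for s in chunk.split(".") if s.strip()]
    for q in sub_questions:
        # Extract: sentences sharing a keyword with the sub-question.
        hits = [s for s in sentences if keywords(q) & tokens(s)]
        if not hits:
            continue
        memory.evidence.setdefault(q, []).extend(hits)
        # Infer: toy rule, the newest evidence becomes the tentative answer.
        memory.inferences[q] = hits[-1]
        # Refine: drop duplicate evidence while preserving order.
        memory.evidence[q] = list(dict.fromkeys(memory.evidence[q]))


def synthesize(sub_questions: list[str], memory: Memory) -> str:
    # Toy Manager: reads only the memory, never the raw chunks.
    return "; ".join(
        f"{q}: {memory.inferences.get(q, 'unknown')}" for q in sub_questions
    )


def run(query: str, chunks: list[str]) -> tuple[str, Memory]:
    memory = Memory()
    sub_questions = plan(query)
    for chunk in chunks:
        worker_step(chunk, sub_questions, memory)
    return synthesize(sub_questions, memory), memory
```

The point of the sketch is the data flow: workers never pass free-form summaries to one another, and the final answer is built from the memory alone, which is what makes each intermediate step inspectable.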

An Agentic Approach to Automatic Creation of P&ID Diagrams from Natural Language Descriptions

Dec 17, 2024

Abstract: Piping and Instrumentation Diagrams (P&IDs) are foundational to the design, construction, and operation of workflows in the engineering and process industries. However, their manual creation is often labor-intensive and error-prone, and it lacks robust mechanisms for error detection and correction. While recent advancements in Generative AI, particularly Large Language Models (LLMs) and Vision-Language Models (VLMs), have demonstrated significant potential across various domains, their application in automating the generation of engineering workflows remains underexplored. In this work, we introduce a novel copilot for automating the generation of P&IDs from natural language descriptions. Leveraging a multi-step agentic workflow, our copilot provides a structured and iterative approach to diagram creation directly from natural language prompts. We demonstrate the feasibility of the generation process by evaluating the soundness and completeness of the workflow, and show improved results compared to vanilla zero-shot and few-shot generation approaches.

AI-EDI-SPACE: A Co-designed Dataset for Evaluating the Quality of Public Spaces

Nov 01, 2024

Abstract: Advancements in AI heavily rely on large-scale datasets meticulously curated and annotated for training. However, concerns persist regarding the transparency and context of data collection methodologies, especially when sourced through crowdsourcing platforms. Crowdsourcing often employs low-wage workers with poor working conditions and lacks consideration for the representativeness of annotators, leading to algorithms that fail to represent diverse views and perpetuate biases against certain groups. To address these limitations, we propose a methodology involving a co-design model that actively engages stakeholders at key stages, integrating principles of Equity, Diversity, and Inclusion (EDI) to ensure diverse viewpoints. We apply this methodology to develop a dataset and AI model for evaluating public space quality using street view images, demonstrating its effectiveness in capturing diverse perspectives and fostering higher-quality data.

From Efficiency to Equity: Measuring Fairness in Preference Learning

Oct 24, 2024

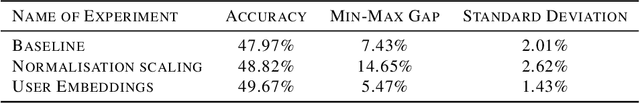

Abstract: As AI systems, particularly generative models, increasingly influence decision-making, ensuring that they are able to fairly represent diverse human preferences becomes crucial. This paper introduces a novel framework for evaluating epistemic fairness in preference learning models inspired by economic theories of inequality and Rawlsian justice. We propose metrics adapted from the Gini Coefficient, Atkinson Index, and Kuznets Ratio to quantify fairness in these models. We validate our approach using two datasets: a custom visual preference dataset (AI-EDI-Space) and the Jester Jokes dataset. Our analysis reveals variations in model performance across users, highlighting potential epistemic injustices. We explore pre-processing and in-processing techniques to mitigate these inequalities, demonstrating a complex relationship between model efficiency and fairness. This work contributes to AI ethics by providing a framework for evaluating and improving epistemic fairness in preference learning models, offering insights for developing more inclusive AI systems in contexts where diverse human preferences are crucial.
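As a rough illustration of how inequality measures can be applied to per-user model performance, the sketch below computes the textbook Gini coefficient and Atkinson index over a list of per-user scores. The abstract says only that the paper's metrics are adapted from these indices, so the exact definitions used there may differ; the function names and the sample scores are hypothetical.

```python
from typing import Sequence


def gini(values: Sequence[float]) -> float:
    """Textbook Gini coefficient of non-negative values (0 = perfectly equal)."""
    xs = sorted(values)
    n = len(xs)
    total = sum(xs)
    if n == 0 or total == 0:
        return 0.0
    # Rank-weighted form: G = 2 * sum(i * x_i) / (n * sum(x)) - (n + 1) / n
    weighted = sum(i * x for i, x in enumerate(xs, start=1))
    return 2.0 * weighted / (n * total) - (n + 1) / n


def atkinson(values: Sequence[float], epsilon: float = 0.5) -> float:
    """Textbook Atkinson index for 0 <= epsilon < 1 (0 = perfectly equal)."""
    if not 0 <= epsilon < 1:
        raise ValueError("this sketch only covers 0 <= epsilon < 1")
    n = len(values)
    mean = sum(values) / n if n else 0.0
    if mean == 0:
        return 0.0
    # Equally-distributed-equivalent score, compared against the plain mean.
    ede = (sum(x ** (1 - epsilon) for x in values) / n) ** (1 / (1 - epsilon))
    return 1.0 - ede / mean


# Hypothetical per-user accuracies of a preference model (made-up numbers).
per_user_accuracy = [0.90, 0.85, 0.40, 0.95]
print(f"Gini: {gini(per_user_accuracy):.3f}")
print(f"Atkinson (eps=0.5): {atkinson(per_user_accuracy):.3f}")
```

Both indices are 0 when every user is served equally well and grow as performance concentrates on a subset of users, which is why they can flag unequal treatment that an average accuracy would hide.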
