Shivkumar Kalyanaraman

LABELING COPILOT: A Deep Research Agent for Automated Data Curation in Computer Vision

Sep 26, 2025

Abstract: Curating high-quality, domain-specific datasets is a major bottleneck for deploying robust vision systems, requiring complex trade-offs between data quality, diversity, and cost when researching vast, unlabeled data lakes. We introduce Labeling Copilot, the first data curation deep research agent for computer vision. A central orchestrator agent, powered by a large multimodal language model, uses multi-step reasoning to execute specialized tools across three core capabilities: (1) Calibrated Discovery sources relevant, in-distribution data from large repositories; (2) Controllable Synthesis generates novel data for rare scenarios with robust filtering; and (3) Consensus Annotation produces accurate labels by orchestrating multiple foundation models via a novel consensus mechanism incorporating non-maximum suppression and voting. Our large-scale validation proves the effectiveness of Labeling Copilot's components. The Consensus Annotation module excels at object discovery: on the dense COCO dataset, it averages 14.2 candidate proposals per image, nearly double the 7.4 ground-truth objects, achieving a final annotation mAP of 37.1%. On the web-scale Open Images dataset, it navigated extreme class imbalance to discover 903 new bounding box categories, expanding its capability to over 1500 total. Concurrently, our Calibrated Discovery tool, tested at a 10-million sample scale, features an active learning strategy that is up to 40x more computationally efficient than alternatives with equivalent sample efficiency. These experiments validate that an agentic workflow with optimized, scalable tools provides a robust foundation for curating industrial-scale datasets.
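The abstract names non-maximum suppression and voting as the ingredients of the consensus mechanism but does not spell out how they are combined. The following is only a minimal sketch of one plausible combination, not the paper's method: boxes from several detectors are grouped greedily by IoU, as in NMS, and a group is kept only if enough distinct models contributed to it. The function names, the `(x1, y1, x2, y2)` box format, and the thresholds `iou_thr` and `min_votes` are all assumptions made for illustration.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def consensus(detections, iou_thr=0.5, min_votes=2):
    """Hypothetical NMS-plus-voting merge.

    detections: list of (model_name, label, score, box) tuples.
    Returns a list of (label, box, votes) for groups that at least
    `min_votes` distinct models agree on.
    """
    # Process boxes from highest to lowest score, as in greedy NMS.
    remaining = sorted(detections, key=lambda d: d[2], reverse=True)
    kept = []
    while remaining:
        best = remaining.pop(0)
        group, rest = [best], []
        for d in remaining:
            # Same label and enough overlap: absorb into the current group.
            if d[1] == best[1] and iou(d[3], best[3]) >= iou_thr:
                group.append(d)
            else:
                rest.append(d)
        remaining = rest
        voters = {d[0] for d in group}  # count each model at most once
        if len(voters) >= min_votes:
            kept.append((best[1], best[3], len(voters)))
    return kept


if __name__ == "__main__":
    dets = [
        ("model_a", "cat", 0.9, (0, 0, 10, 10)),
        ("model_b", "cat", 0.8, (1, 1, 10, 10)),
        ("model_c", "dog", 0.7, (50, 50, 60, 60)),
    ]
    print(consensus(dets))
```

In this sketch the highest-scoring box represents each group; averaging the coordinates of the group members would be an equally reasonable choice.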

Grammars of Formal Uncertainty: When to Trust LLMs in Automated Reasoning Tasks

May 26, 2025

Abstract: Large language models (LLMs) show remarkable promise for democratizing automated reasoning by generating formal specifications. However, a fundamental tension exists: LLMs are probabilistic, while formal verification demands deterministic guarantees. This paper addresses this epistemological gap by comprehensively investigating failure modes and uncertainty quantification (UQ) in LLM-generated formal artifacts. Our systematic evaluation of five frontier LLMs reveals Satisfiability Modulo Theories (SMT) based autoformalization's domain-specific impact on accuracy (from +34.8% on logical tasks to -44.5% on factual ones), with known UQ techniques like the entropy of token probabilities failing to identify these errors. We introduce a probabilistic context-free grammar (PCFG) framework to model LLM outputs, yielding a refined uncertainty taxonomy. We find uncertainty signals are task-dependent (e.g., grammar entropy for logic, AUROC>0.93). Finally, a lightweight fusion of these signals enables selective verification, drastically reducing errors (14-100%) with minimal abstention, transforming LLM-driven formalization into a reliable engineering discipline.
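The abstract mentions grammar entropy as an uncertainty signal without defining it. As a rough illustration only, and assuming the signal is something like the Shannon entropy of an empirical distribution over production rules, the sketch below estimates a per-nonterminal entropy from a handful of sampled derivations. The `rule_entropy` name and the `(lhs, rhs)` encoding of rule applications are hypothetical and are not taken from the paper.

```python
import math
from collections import Counter, defaultdict


def rule_entropy(samples):
    """Per-nonterminal Shannon entropy (in bits) of observed rule choices.

    samples: list of derivations, each a list of (lhs, rhs) rule
    applications, e.g. ("S", "A B"). Rule probabilities are estimated
    by relative frequency across all samples.
    """
    counts = defaultdict(Counter)
    for derivation in samples:
        for lhs, rhs in derivation:
            counts[lhs][rhs] += 1

    entropy = {}
    for lhs, rhs_counts in counts.items():
        total = sum(rhs_counts.values())
        entropy[lhs] = -sum(
            (n / total) * math.log2(n / total) for n in rhs_counts.values()
        )
    return entropy


if __name__ == "__main__":
    # Two sampled outputs that agree on the top-level rule but differ below it.
    samples = [
        [("S", "A B"), ("A", "a")],
        [("S", "A B"), ("A", "b")],
    ]
    print(rule_entropy(samples))
```

A nonterminal whose expansion is the same in every sample gets entropy zero, while one that splits evenly between two expansions gets one bit, so higher values flag the parts of the output where the sampled generations disagree.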

AI Greenferencing: Routing AI Inferencing to Green Modular Data Centers with Heron

May 15, 2025

Abstract: AI power demand is growing at an unprecedented rate thanks to the high power density of AI compute and the emerging inferencing workload. On the supply side, abundant wind power is waiting for grid access in interconnection queues. In this light, this paper argues for bringing AI workload to modular compute clusters co-located in wind farms. Our deployment right-sizing strategy makes it economically viable to deploy more than 6 million high-end GPUs today that could consume cheap, green power at its source. We built Heron, a cross-site software router that could efficiently leverage the complementarity of power generation across wind farms by routing AI inferencing workload around power drops. Using one week of coding and conversation production traces from Azure and (real) variable wind power traces, we show how Heron improves aggregate goodput of AI compute by up to 80% compared to the state-of-the-art.

An Agentic Approach to Automatic Creation of P&ID Diagrams from Natural Language Descriptions

Dec 17, 2024

Abstract: Piping and Instrumentation Diagrams (P&IDs) are foundational to the design, construction, and operation of workflows in the engineering and process industries. However, their manual creation is often labor-intensive and error-prone, and it lacks robust mechanisms for error detection and correction. While recent advancements in Generative AI, particularly Large Language Models (LLMs) and Vision-Language Models (VLMs), have demonstrated significant potential across various domains, their application in automating the generation of engineering workflows remains underexplored. In this work, we introduce a novel copilot for automating the generation of P&IDs from natural language descriptions. Leveraging a multi-step agentic workflow, our copilot provides a structured and iterative approach to diagram creation directly from natural language prompts. We demonstrate the feasibility of the generation process by evaluating the soundness and completeness of the workflow, and show improved results compared to vanilla zero-shot and few-shot generation approaches.

Proof of Thought : Neurosymbolic Program Synthesis allows Robust and Interpretable Reasoning

Sep 25, 2024

Abstract: Large Language Models (LLMs) have revolutionized natural language processing, yet they struggle with inconsistent reasoning, particularly in novel domains and complex logical sequences. This research introduces Proof of Thought, a framework that enhances the reliability and transparency of LLM outputs. Our approach bridges LLM-generated ideas with formal logic verification, employing a custom interpreter to convert LLM outputs into First Order Logic constructs for theorem prover scrutiny. Central to our method is an intermediary JSON-based Domain-Specific Language, which by design balances precise logical structures with intuitive human concepts. This hybrid representation enables both rigorous validation and accessible human comprehension of LLM reasoning processes. Key contributions include a robust type system with sort management for enhanced logical integrity, explicit representation of rules for clear distinction between factual and inferential knowledge, and a flexible architecture that allows for easy extension to various domain-specific applications. We demonstrate Proof of Thought's effectiveness through benchmarking on StrategyQA and a novel multimodal reasoning task, showing improved performance in open-ended scenarios. By providing verifiable and interpretable results, our technique addresses critical needs for AI system accountability and sets a foundation for human-in-the-loop oversight in high-stakes domains.

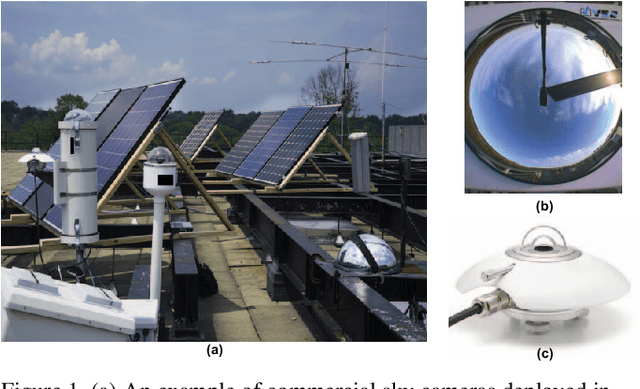

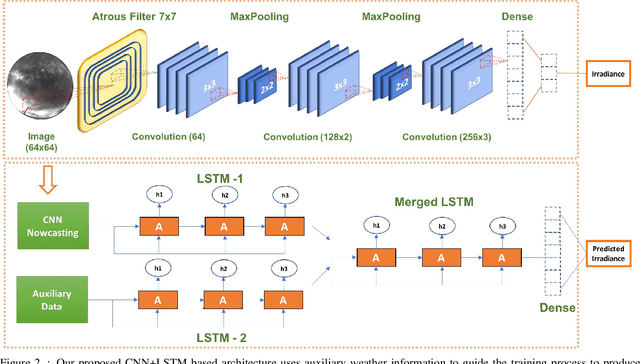

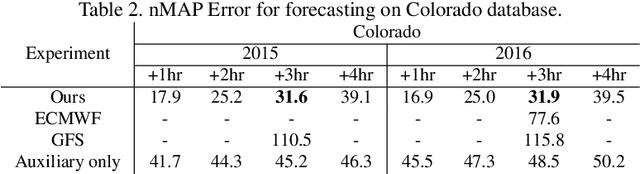

A deep learning approach to solar-irradiance forecasting in sky-videos

Jan 15, 2019

Abstract: Ahead-of-time forecasting of incident solar irradiance on a panel is indicative of expected energy yield and is essential for efficient grid distribution and planning. Traditionally, these forecasts are based on meteorological physics models whose parameters are tuned by coarse-grained radiometric tiles sensed from geo-satellites. This research presents a novel application of a deep neural network approach to observe and estimate short-term weather effects from videos. Specifically, we use time-lapsed videos (sky-videos) obtained from upward-facing wide-lensed cameras (sky-cameras) to directly estimate and forecast solar irradiance. We introduce and present results on two large publicly available datasets obtained from weather stations in two regions of North America using relatively inexpensive optical hardware. These datasets contain over a million images that span 1 and 12 years respectively, the largest such collection to our knowledge. Compared to satellite-based approaches, the proposed deep learning approach significantly reduces the normalized mean-absolute-percentage error for both nowcasting, i.e. prediction of the solar irradiance at the instant the frame is captured, and forecasting, i.e. ahead-of-time irradiance prediction for a duration of up to 4 hours.

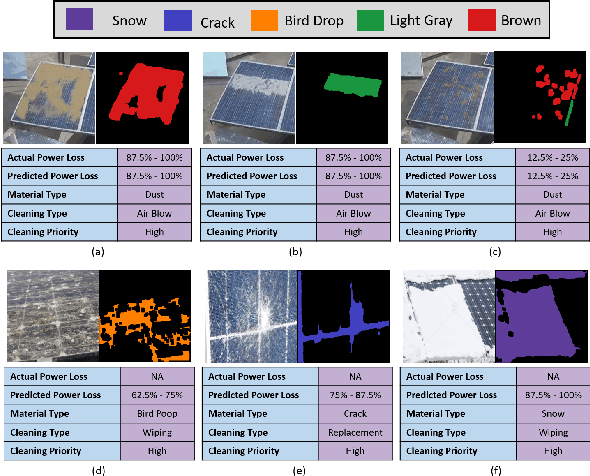

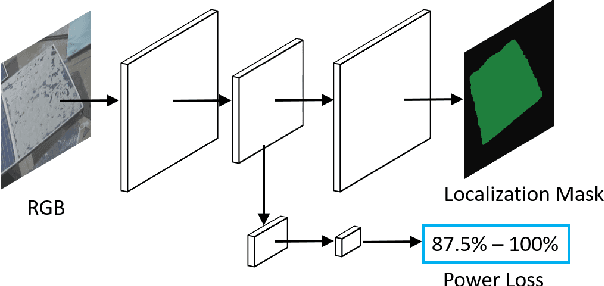

DeepSolarEye: Power Loss Prediction and Weakly Supervised Soiling Localization via Fully Convolutional Networks for Solar Panels

Mar 18, 2018

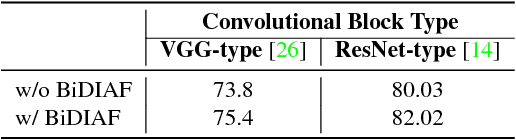

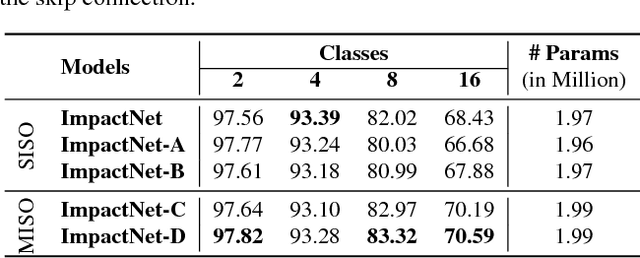

Abstract: The impact of soiling on solar panels is an important and well-studied problem in the renewable energy sector. In this paper, we present the first convolutional neural network (CNN) based approach for solar panel soiling and defect analysis. Our approach takes an RGB image of a solar panel and environmental factors as inputs to predict power loss, soiling localization, and soiling type. In computer vision, localization is a complex task that typically requires manually labeled training data such as bounding boxes or segmentation masks. Our proposed approach consists of four specialized stages that completely avoid localization ground truth and need only panel images with power loss labels for training. The regions of impact obtained from the predicted localization masks are classified into soiling types using webly supervised learning. To improve the localization capabilities of CNNs, we introduce a novel bi-directional input-aware fusion (BiDIAF) block that reinforces the input at different levels of the CNN to learn input-specific feature maps. Our empirical study shows that BiDIAF improves the power loss prediction accuracy by about 3% and localization accuracy by about 4%. Our end-to-end model yields a further improvement of about 24% on localization when learned in a weakly supervised manner. Our approach is generalizable and showed promising results on web-crawled solar panel images. Our system has a frame rate of 22 fps (including all steps) on an NVIDIA TitanX GPU. Additionally, we collected a first-of-its-kind dataset for solar panel image analysis consisting of 45,000+ images.
