Shivesh Khaitan

Leveraging State Space Models in Long Range Genomics

Apr 07, 2025

Abstract: Long-range dependencies are critical for understanding genomic structure and function, yet most conventional methods struggle with them. Widely adopted transformer-based models, while excelling at short-context tasks, are limited by the attention module's quadratic computational complexity and inability to extrapolate to sequences longer than those seen in training. In this work, we explore State Space Models (SSMs) as a promising alternative by benchmarking two SSM-inspired architectures, Caduceus and Hawk, on long-range genomics modeling tasks under conditions parallel to a 50M parameter transformer baseline. We discover that SSMs match transformer performance and exhibit impressive zero-shot extrapolation across multiple tasks, handling contexts 10 to 100 times longer than those seen during training, indicating more generalizable representations better suited for modeling the long and complex human genome. Moreover, we demonstrate that these models can efficiently process sequences of 1M tokens on a single GPU, allowing for modeling entire genomic regions at once, even in labs with limited compute. Our findings establish SSMs as efficient and scalable for long-context genomic analysis.
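The contrast the abstract draws between quadratic attention and SSMs can be illustrated with a toy example. The sketch below is not Caduceus or Hawk; it is a scalar linear state space recurrence with made-up parameters (`a`, `b`, `c`) and hypothetical function names, meant only to show why the cost of a recurrence grows linearly with sequence length and why the same parameters apply to sequences of any length.

```python
def ssm_scan(inputs, a=0.5, b=1.0, c=1.0):
    """Toy scalar linear state space recurrence (illustrative only).

    h_t = a * h_{t-1} + b * x_t
    y_t = c * h_t

    The state h is a single number, so memory stays constant and the
    work is one update per token, regardless of sequence length.
    """
    h = 0.0
    outputs = []
    for x in inputs:
        h = a * h + b * x      # update the hidden state
        outputs.append(c * h)  # read out the current output
    return outputs


def attention_pair_count(n):
    """Number of query-key pairs full self-attention scores for n tokens."""
    return n * n


# The same (a, b, c) work for a short and a much longer sequence.
short = ssm_scan([1.0, 1.0, 1.0])
long_run = ssm_scan([1.0] * 1000)
```

Here `ssm_scan` performs 1,000 state updates for 1,000 tokens, whereas `attention_pair_count(1000)` is 1,000,000 pairwise scores. Real SSM layers use vector-valued states and learned or input-dependent parameters, which this sketch omits.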

Reasoning with Latent Diffusion in Offline Reinforcement Learning

Sep 12, 2023

Abstract: Offline reinforcement learning (RL) holds promise as a means to learn high-reward policies from a static dataset, without the need for further environment interactions. However, a key challenge in offline RL lies in effectively stitching portions of suboptimal trajectories from the static dataset while avoiding extrapolation errors arising due to a lack of support in the dataset. Existing approaches use conservative methods that are tricky to tune and struggle with multi-modal data (as we show) or rely on noisy Monte Carlo return-to-go samples for reward conditioning. In this work, we propose a novel approach that leverages the expressiveness of latent diffusion to model in-support trajectory sequences as compressed latent skills. This facilitates learning a Q-function while avoiding extrapolation error via batch-constraining. The latent space is also expressive and gracefully copes with multi-modal data. We show that the learned temporally-abstract latent space encodes richer task-specific information for offline RL tasks as compared to raw state-actions. This improves credit assignment and facilitates faster reward propagation during Q-learning. Our method demonstrates state-of-the-art performance on the D4RL benchmarks, particularly excelling in long-horizon, sparse-reward tasks.

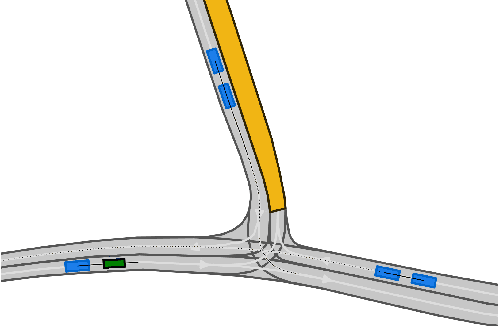

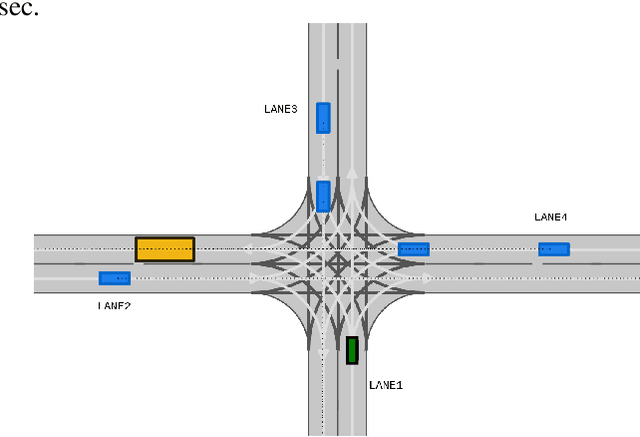

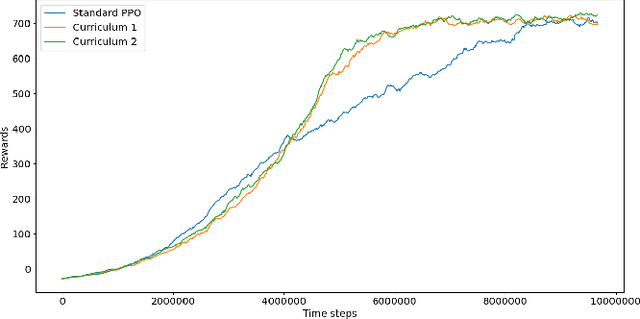

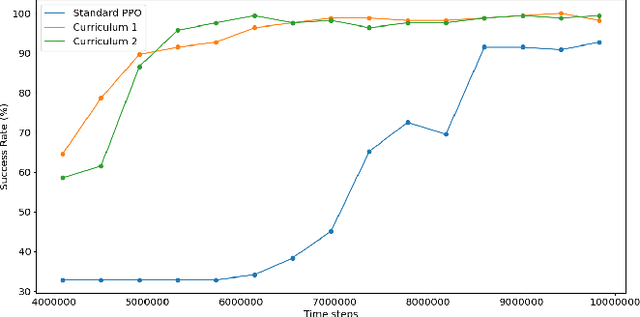

State Dropout-Based Curriculum Reinforcement Learning for Self-Driving at Unsignalized Intersections

Jul 10, 2022

Abstract: Traversing intersections is a challenging problem for autonomous vehicles, especially when the intersections do not have traffic control. Recently, deep reinforcement learning has received massive attention due to its success in dealing with autonomous driving tasks. In this work, we address the problem of traversing unsignalized intersections using a novel curriculum for deep reinforcement learning. The proposed curriculum leads to: 1) a faster training process for the reinforcement learning agent, and 2) better performance compared to an agent trained without a curriculum. Our main contribution is twofold: 1) presenting a unique curriculum for training deep reinforcement learning agents, and 2) showing the application of the proposed curriculum to the unsignalized intersection traversal task. The framework expects processed observations of the surroundings from the perception system of the autonomous vehicle. We test our method in the CommonRoad motion planning simulator on T-intersections and four-way intersections.

Safe planning and control under uncertainty for self-driving

Oct 21, 2020

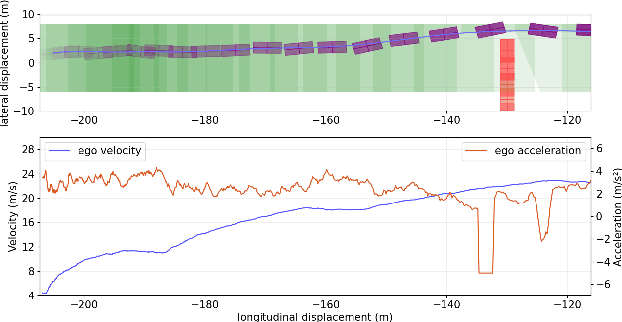

Abstract: Motion planning under uncertainty is critical for safe self-driving. In this paper, we propose a unified obstacle avoidance framework that deals with 1) uncertainty in ego-vehicle motion; and 2) prediction uncertainty of dynamic obstacles from the environment. A two-stage traffic participant trajectory predictor comprising short-term and long-term prediction is used in the planning layer to generate safe but not over-conservative trajectories for the ego vehicle. The prediction module cooperates well with existing planning approaches. Our work showcases its effectiveness in a Frenet frame planner. A robust controller using tube MPC guarantees safe execution of the trajectory in the presence of state noise and dynamic model uncertainty. A Gaussian process regression model is used for online identification of the uncertainty bound. We demonstrate the effectiveness, safety, and real-time performance of our framework in the CARLA simulator.
