Shima Kamyab

Deep-MDS Framework for Recovering the 3D Shape of 2D Landmarks from a Single Image

Oct 27, 2022

Abstract: In this paper, a low-parameter deep learning framework utilizing the Non-metric Multi-Dimensional Scaling (NMDS) method is proposed to recover the 3D shape of 2D landmarks on a human face from a single input image. The NMDS approach is used for the first time to establish a mapping from a 2D landmark space to the corresponding 3D shape space. A deep neural network learns the pairwise dissimilarity among 2D landmarks used by the NMDS approach; its objective is to learn the pairwise 3D Euclidean distances of the corresponding 2D landmarks on the input image. This scheme results in a symmetric dissimilarity matrix with rank larger than 2, which leads the NMDS approach toward appropriately recovering the 3D shape of the corresponding 2D landmarks. For posed images and complex image formation processes such as perspective projection, which causes occlusion in the input image, we include an autoencoder component in the proposed framework as an occlusion removal part, which turns different input views of the human face into a profile view. A performance evaluation on synthetic and real-world human face datasets, including the Basel Face Model (BFM), CelebA, CoMA - FLAME, and CASIA-3D, indicates that the proposed framework, despite its small number of trainable parameters, performs comparably to related state-of-the-art 3D reconstruction methods from the literature in terms of efficiency and accuracy.
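The step from a pairwise distance matrix to 3D coordinates can be illustrated with a minimal sketch. Note the assumptions: this uses classical (metric) MDS as a simpler stand-in for the non-metric MDS named in the abstract, it takes exact 3D distances as input rather than dissimilarities predicted by a network, and the 68-landmark point set is synthetic. The names `classical_mds` and `pairwise_distances` are hypothetical helpers, not part of the paper.

```python
import numpy as np


def pairwise_distances(points):
    """Return the symmetric matrix of Euclidean distances between rows."""
    diff = points[:, None, :] - points[None, :, :]
    return np.linalg.norm(diff, axis=-1)


def classical_mds(dist, dim=3):
    """Embed points in `dim` dimensions from pairwise Euclidean distances."""
    n = dist.shape[0]
    # Double-center the squared distances to obtain a Gram matrix.
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (dist ** 2) @ centering
    # Keep the `dim` largest eigenpairs of the Gram matrix.
    eigvals, eigvecs = np.linalg.eigh(gram)
    top = np.argsort(eigvals)[::-1][:dim]
    # Clip tiny negative eigenvalues caused by floating-point error.
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0.0, None))


# Synthetic stand-in for 68 facial landmarks in 3D (an assumption).
rng = np.random.default_rng(0)
landmarks_3d = rng.normal(size=(68, 3))

dist = pairwise_distances(landmarks_3d)
recovered = classical_mds(dist, dim=3)

# The embedding matches the original only up to rotation, reflection, and
# translation, so compare pairwise distances instead of raw coordinates.
max_error = np.abs(pairwise_distances(recovered) - dist).max()
print(f"largest distance mismatch: {max_error:.2e}")
```

Because the distance matrix here comes from genuinely 3D points, its Gram matrix has rank 3 and a three-dimensional embedding reproduces the distances; this is the property the abstract refers to when it requires a dissimilarity matrix of rank larger than 2.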

Survey of Deep Learning Methods for Inverse Problems

Nov 13, 2021

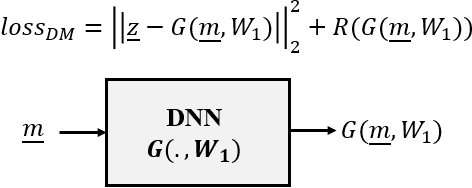

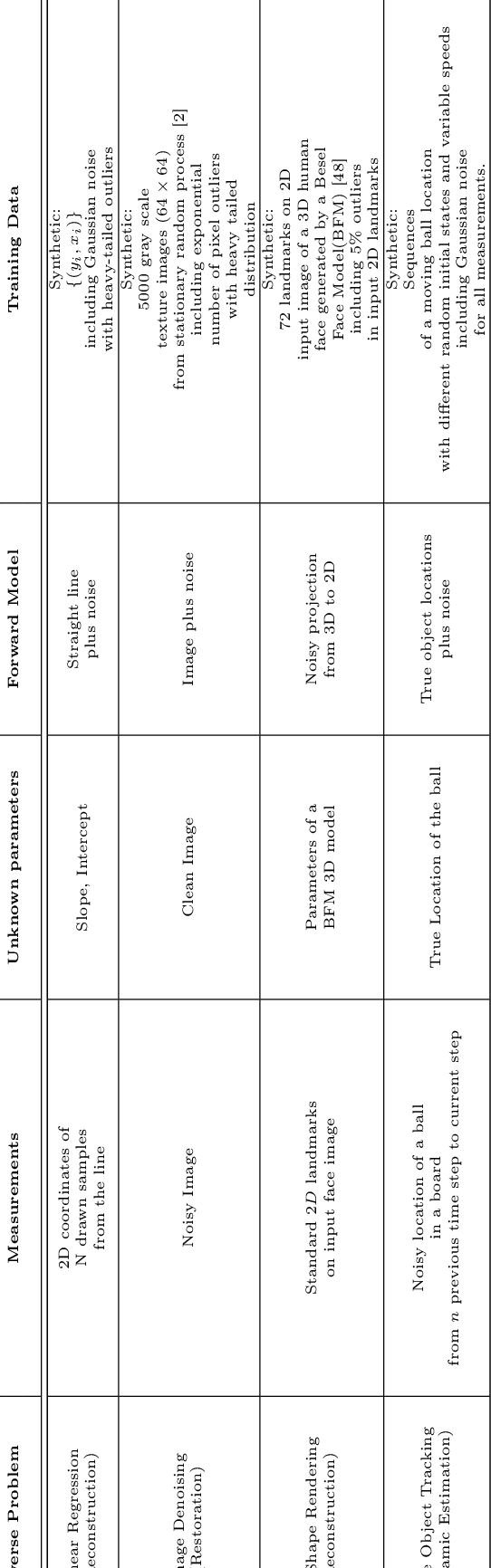

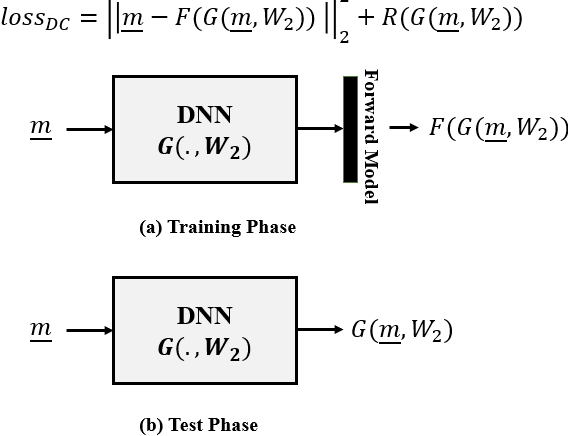

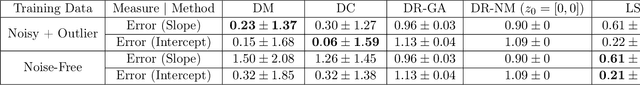

Abstract: In this paper we investigate a variety of deep learning strategies for solving inverse problems. We classify existing deep learning solutions for inverse problems into three categories: Direct Mapping, Data Consistency Optimizer, and Deep Regularizer. We choose a sample of each inverse problem type, so as to compare the robustness of the three categories, and report a statistical analysis of their differences. We perform extensive experiments on the classic problem of linear regression and three well-known inverse problems in computer vision, namely image denoising, 3D human face inverse rendering, and object tracking, selected as representative prototypes for each class of inverse problems. The overall results and the statistical analyses show that the robustness of each solution category depends on the type of inverse problem domain, and specifically on whether or not the problem includes measurement outliers. Based on our experimental results, we conclude by proposing the most robust solution category for each inverse problem class.

Meta-heuristic for non-homogeneous peak density spaces and implementation on 2 real-world parameter learning/tuning applications

Jun 13, 2019

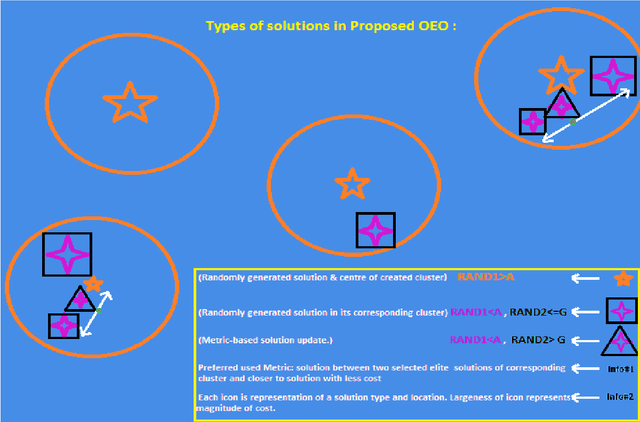

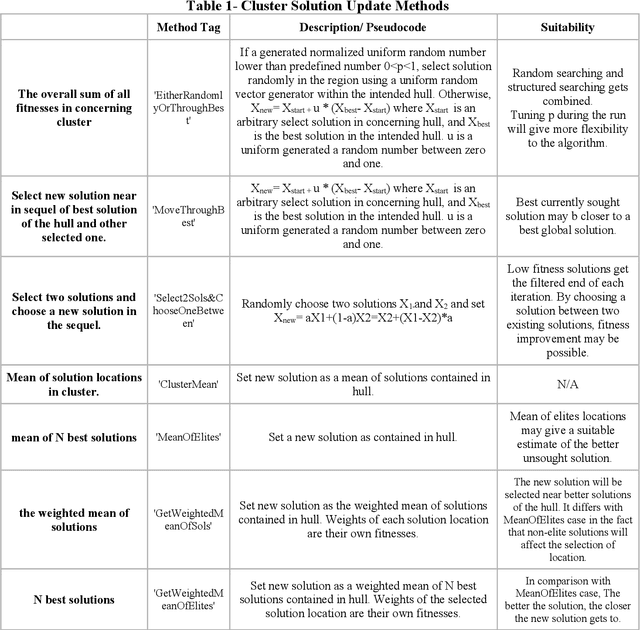

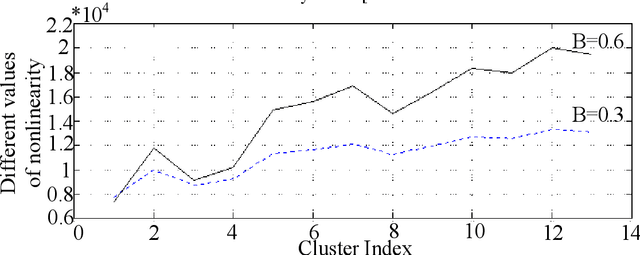

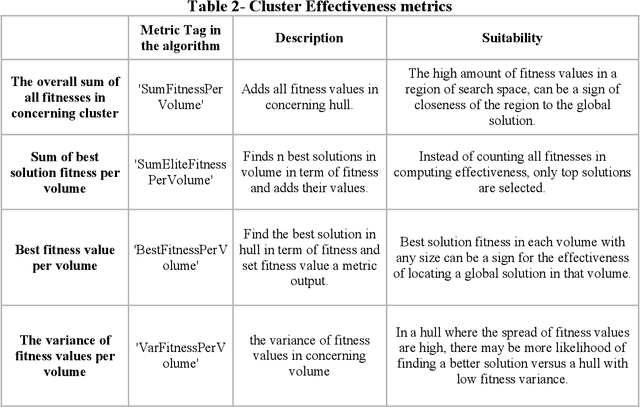

Abstract: The observer effect in physics (or psychology) refers to bias in measurement (or perception) caused by the interference of the instrument (or prior knowledge). Based on these concepts, a new meta-heuristic algorithm is proposed for controlling memory usage per locality without resorting to Tabu-like cut-off approaches. In this paper, variations of the observer effect are first explained across different branches of science, from physics to psychology. Then, a meta-heuristic algorithm is proposed based on observer effect concepts, and the metrics used are explained. The performance of the derived optimizer is compared on, first, non-homogeneous-peak-density functions and, second, homogeneous-peak-density functions, to verify that the algorithm performs better in the first setting. Finally, the novel algorithms are analyzed on two real-world engineering applications, Electroencephalogram feature learning and Distributed Generator parameter tuning, each of which is characterized by nonlinearity and complex multi-modal peak distributions. The effect of version improvement is also assessed. The performance analysis against other optimizers in the same context suggests that the proposed algorithm is useful both on its own and in hybrid Gradient Descent settings where the problem's search space is non-homogeneous in terms of local peak density.

Deep Generative Models: Deterministic Prediction with an Application in Inverse Rendering

Mar 11, 2019

Abstract: Deep generative models are stochastic neural networks capable of learning the distribution of data so as to generate new samples. The Conditional Variational Autoencoder (CVAE) is a powerful deep generative model that aims to maximize a lower bound on the log-likelihood of the training data. The CVAE structure includes an appropriate regularizer, which makes it suitable for constraining the solution space when solving ill-posed problems and gives it high generalization power. Because CVAE predictions are stochastic, it is desirable, depending on the problem at hand, to be able to control the uncertainty in those predictions. Therefore, in this paper we analyze the impact of the CVAE's condition on the diversity of solutions given by our designed CVAE in 3D shape inverse rendering as a prediction problem. The experimental results on the ModelNet10 and ShapeNet datasets show the appropriate performance of our designed CVAE and verify the hypothesis: "The more informative the conditions in terms of object pose are, the less diverse the CVAE predictions are".

End-to-end 3D shape inverse rendering of different classes of objects from a single input image

Nov 11, 2017

Abstract: In this paper, a semi-supervised deep framework is proposed for the problem of 3D shape inverse rendering from a single 2D input image. The main structure of the proposed framework consists of unsupervised pre-trained components, which significantly reduce the need for labeled data when training the whole framework. Using labeled data has the advantage of achieving accurate results without predefined assumptions about the image formation process. Three main components are used in the proposed network: an encoder that maps the 2D input image to a representation space, a 3D decoder that decodes a representation into a 3D structure, and a mapping component that maps the 2D representation to the 3D representation. The only part that needs labels for training is the mapping part, which has relatively few parameters. The other components in the network can be pre-trained in an unsupervised manner using only 2D images or 3D data, respectively. The decoder's way of reconstructing 3D shapes, inspired by model-based methods for 3D reconstruction, maps a low-dimensional representation to the 3D shape space. It has the advantage of extracting the basis vectors of the shape space from the training data itself and is not restricted to a small set of examples, as predefined models are. The proposed framework therefore deals directly with the coordinate values of the point cloud representation, which leads to dense 3D shapes in the output. The experimental results on several benchmark datasets of objects and human faces, together with comparisons against recent similar methods, show the power of the proposed network in recovering more details from single 2D images.

Deep Structure for end-to-end inverse rendering

Aug 25, 2017

Abstract: Inverse rendering in a 3D format denotes recovering the 3D properties of a scene given 2D input image(s) and is typically done from single-view images using 3D Morphable Model (3DMM) based methods. These models formulate each face as a weighted combination of basis vectors extracted from the training data. In this paper, a deep framework is proposed in which the coefficients and basis vectors are computed by training an autoencoder network and a Convolutional Neural Network (CNN) simultaneously. The idea is to find a common cause that can be mapped to both the 3D structure and the corresponding 2D image using deep networks. The empirical results on synthetic datasets of human faces verify the power of the deep framework in finding accurate 3D shapes of human faces from their corresponding 2D images.
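The "weighted combination of basis vectors" formulation can be sketched in a few lines. This is an illustration under stated assumptions, not the paper's method: the basis is extracted with PCA (via SVD) rather than learned by an autoencoder and CNN, and the training shapes, the sizes `n_faces`, `n_vertices`, `n_basis`, and all variable names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
n_faces, n_vertices, n_basis = 50, 100, 5

# Synthetic training set (an assumption): each row is one face, stored as a
# flattened (x, y, z) vertex list generated from a hidden low-rank model.
hidden_basis = rng.normal(size=(n_basis, 3 * n_vertices))
hidden_mean = rng.normal(size=3 * n_vertices)
faces = rng.normal(size=(n_faces, n_basis)) @ hidden_basis + hidden_mean

# Extract a mean shape and orthonormal basis vectors from the training data.
mean_shape = faces.mean(axis=0)
_, _, vt = np.linalg.svd(faces - mean_shape, full_matrices=False)
basis = vt[:n_basis]  # shape: (n_basis, 3 * n_vertices)

# Express one face as the mean shape plus a weighted sum of basis vectors.
target = faces[0]
coefficients = (target - mean_shape) @ basis.T
reconstruction = mean_shape + coefficients @ basis

error = np.abs(reconstruction - target).max()
print(f"coefficients: {coefficients.shape}, max error: {error:.2e}")
```

In this linear setting a face is fully described by `n_basis` coefficients; the framework in the abstract replaces the fixed PCA step with networks that learn the coefficients and the basis jointly.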
