Shijie Jiang

Multi-Stage Patient Role-Playing Framework for Realistic Clinical Interactions

Jan 16, 2026

Abstract: The simulation of realistic clinical interactions plays a pivotal role in advancing clinical Large Language Models (LLMs) and supporting medical diagnostic education. Existing approaches and benchmarks rely on generic or LLM-generated dialogue data, which limits the authenticity and diversity of doctor-patient interactions. In this work, we propose the first Chinese patient simulation dataset (Ch-PatientSim), constructed from realistic clinical interaction scenarios to comprehensively evaluate the performance of models in emulating patient behavior. Patients are simulated based on a five-dimensional persona structure. To address persona class imbalance, a portion of the dataset is augmented using few-shot generation, followed by manual verification. We evaluate various state-of-the-art LLMs and find that most produce overly formal responses that lack individual personality. To address this limitation, we propose a training-free Multi-Stage Patient Role-Playing (MSPRP) framework, which decomposes interactions into three stages to ensure both personalization and realism in model responses. Experimental results demonstrate that our approach significantly improves model performance across multiple dimensions of patient simulation.

VERHallu: Evaluating and Mitigating Event Relation Hallucination in Video Large Language Models

Jan 15, 2026

Abstract: Video Large Language Models (VideoLLMs) exhibit various types of hallucinations. Existing research has primarily focused on hallucinations involving the presence of events, objects, and scenes in videos, while largely neglecting event relation hallucination. In this paper, we introduce VERHallu, a novel benchmark for evaluating video event relation hallucination. This benchmark focuses on causal, temporal, and subevent relations between events, encompassing three types of tasks: relation classification, question answering, and counterfactual question answering, for a comprehensive evaluation of event relation hallucination. Additionally, it features counterintuitive video scenarios that deviate from typical pretraining distributions, with each sample accompanied by human-annotated candidates covering both vision-language and pure language biases. Our analysis reveals that current state-of-the-art VideoLLMs struggle with dense-event relation reasoning, often relying on prior knowledge due to insufficient use of frame-level cues. Although these models demonstrate strong grounding capabilities for key events, they often overlook the surrounding subevents, leading to an incomplete and inaccurate understanding of event relations. To tackle this, we propose a Key-Frame Propagating (KFP) strategy, which reallocates frame-level attention within intermediate layers to enhance multi-event understanding. Experiments show it effectively mitigates event relation hallucination without affecting inference speed.

Applications of Explainable artificial intelligence in Earth system science

Jun 12, 2024

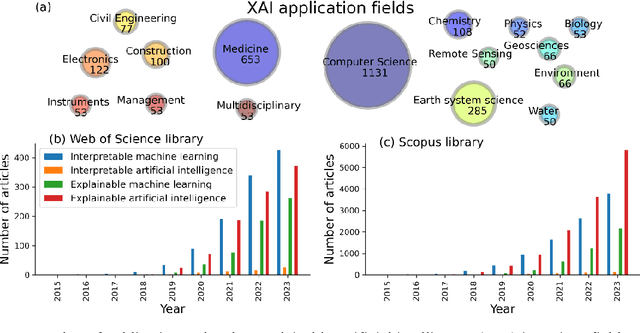

Abstract: In recent years, artificial intelligence (AI) has rapidly expanded its influence and is expected to promote the development of Earth system science (ESS) if properly harnessed. In applying AI to ESS, a significant hurdle is the interpretability conundrum, an inherent problem arising from the black-box nature and complexity of AI algorithms. To address this, explainable AI (XAI) offers a set of powerful tools that make the models more transparent. The purpose of this review is twofold: first, to provide ESS scholars, especially newcomers, with a foundational understanding of XAI, serving as a primer to inspire future research advances; second, to encourage ESS professionals to embrace the benefits of AI, free from preconceived biases due to its lack of interpretability. We begin by elucidating the concept of XAI, along with typical methods. We then review XAI applications in the ESS literature, highlighting the important role that XAI has played in facilitating communication with AI model decisions, improving model diagnosis, and uncovering scientific insights. We identify four significant challenges that XAI faces within ESS and propose solutions. Furthermore, we provide a comprehensive illustration of multifaceted perspectives. Given the unique challenges in ESS, an interpretable hybrid approach that seamlessly integrates AI with domain-specific knowledge appears to be a promising way to enhance the utility of AI in ESS. A visionary outlook for ESS envisions a harmonious blend where process-based models govern the known, AI models explore the unknown, and XAI bridges the gap by providing explanations.
