Applications of Explainable artificial intelligence in Earth system science

Paper and Code

Jun 12, 2024

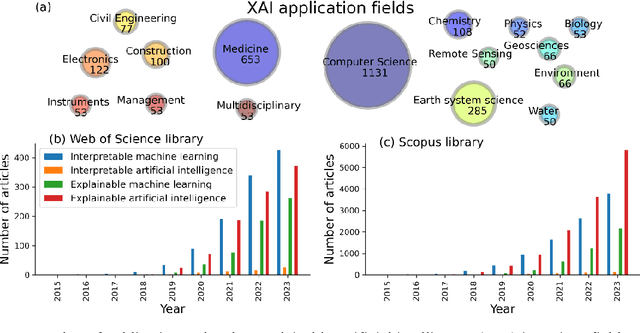

In recent years, artificial intelligence (AI) has rapidly expanded its influence and, if properly harnessed, is expected to advance Earth system science (ESS). A significant hurdle in applying AI to ESS is interpretability: the complexity of AI algorithms makes them inherently black boxes. To address this, explainable AI (XAI) offers a set of powerful tools that make models more transparent. The purpose of this review is twofold: first, to provide ESS scholars, especially newcomers, with a foundational understanding of XAI, serving as a primer to inspire future research advances; second, to encourage ESS professionals to embrace the benefits of AI, free from preconceived biases due to its lack of interpretability. We begin by elucidating the concept of XAI, along with typical methods. We then review XAI applications in the ESS literature, highlighting the important role that XAI has played in communicating AI model decisions, improving model diagnosis, and uncovering scientific insights. We identify four significant challenges that XAI faces within ESS and propose solutions. Furthermore, we provide a comprehensive illustration of multifaceted perspectives. Given the unique challenges in ESS, an interpretable hybrid approach that seamlessly integrates AI with domain-specific knowledge appears to be a promising way to enhance the utility of AI in ESS. Our outlook for ESS is a harmonious blend in which process-based models govern the known, AI models explore the unknown, and XAI bridges the gap by providing explanations.
