Shaohua Zheng

COTR: Convolution in Transformer Network for End to End Polyp Detection

May 23, 2021

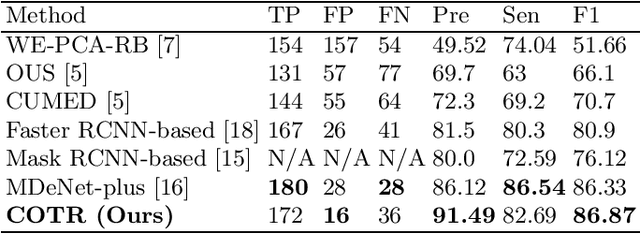

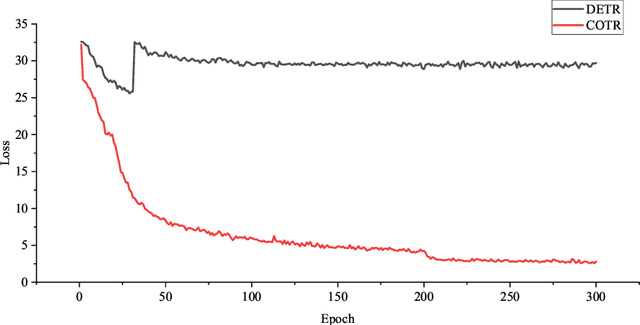

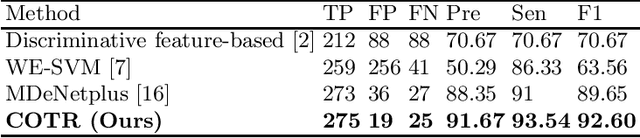

Abstract: Purpose: Colorectal cancer (CRC) is the second most common cause of cancer mortality worldwide. Colonoscopy is a widely used technique for colon screening and polyp lesion diagnosis. Nevertheless, manual screening using colonoscopy suffers from a substantial miss rate of polyps and is an overwhelming burden for endoscopists. Computer-aided diagnosis (CAD) for polyp detection has the potential to reduce human error and human burden. However, current polyp detection methods based on object detection frameworks need many handcrafted pre-processing and post-processing operations or user guidance that require domain-specific knowledge. Methods: In this paper, we propose a convolution in transformer (COTR) network for end-to-end polyp detection. Motivated by the detection transformer (DETR), COTR consists of a CNN for feature extraction, transformer encoder layers interleaved with convolutional layers for feature encoding and recalibration, transformer decoder layers for object querying, and a feed-forward network for detection prediction. To address the slow convergence of DETR, COTR embeds convolution layers into the transformer encoder for feature reconstruction and convergence acceleration. Results: Experimental results on two public polyp datasets show that COTR achieved 91.49% precision, 82.69% sensitivity, and 86.87% F1-score on ETIS-LARIB, and 91.67% precision, 93.54% sensitivity, and 92.60% F1-score on CVC-ColonDB. Conclusion: This study proposed an end-to-end detection method based on the detection transformer for colorectal polyp detection. Experimental results on the ETIS-LARIB and CVC-ColonDB datasets demonstrated that the proposed model achieved performance comparable to state-of-the-art methods.
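The F1-scores quoted in this abstract can be cross-checked from the reported precision and sensitivity, since F1 is their harmonic mean. The short sketch below is not from the paper; the function name `f1_score` and the dictionary layout are illustrative assumptions, and only the percentages come from the abstract.

```python
def f1_score(precision: float, sensitivity: float) -> float:
    """Harmonic mean of precision and sensitivity (recall), both given in [0, 1]."""
    if precision + sensitivity == 0:
        return 0.0
    return 2 * precision * sensitivity / (precision + sensitivity)


# Precision and sensitivity as reported in the abstract, converted to fractions.
reported = {
    "ETIS-LARIB": (0.9149, 0.8269),
    "CVC-ColonDB": (0.9167, 0.9354),
}

for name, (p, s) in reported.items():
    print(f"{name}: F1 = {100 * f1_score(p, s):.2f}%")
# ETIS-LARIB: F1 = 86.87%
# CVC-ColonDB: F1 = 92.60%
```

Both values agree with the F1-scores stated in the abstract to two decimal places.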

Automatic Pulmonary Artery and Vein Separation Algorithm Based on Multitask Classification Network and Topology Reconstruction in Chest CT Images

Mar 22, 2021

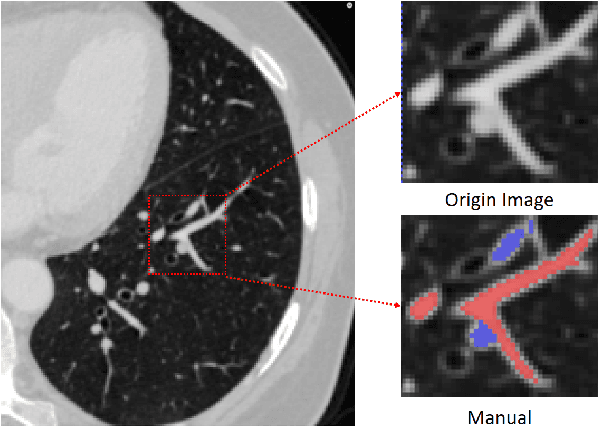

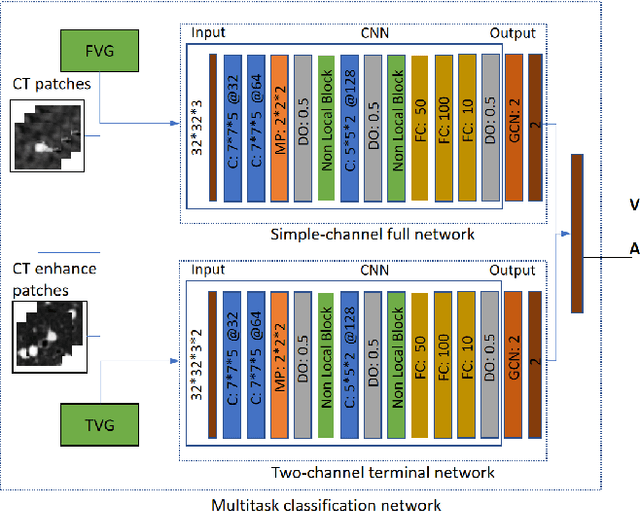

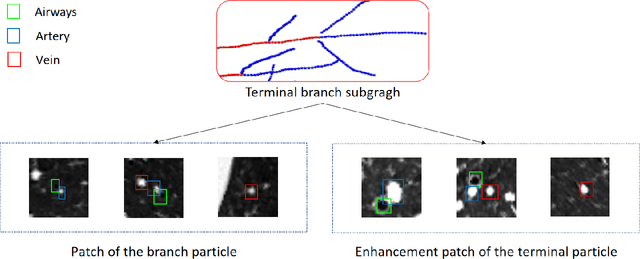

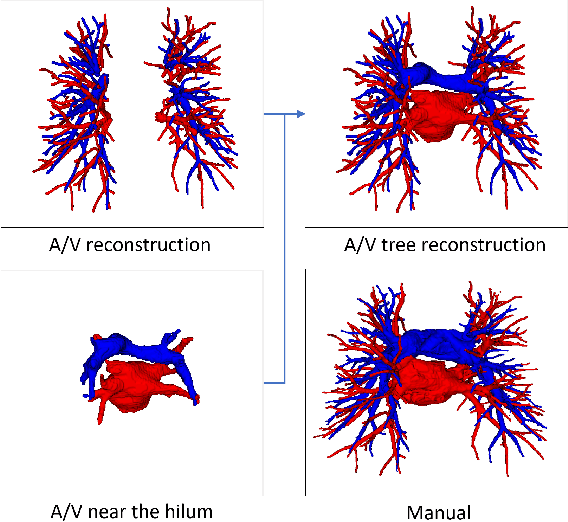

Abstract: With the development of medical computer-aided diagnostic systems, pulmonary artery-vein (A/V) reconstruction plays a crucial role in assisting doctors in preoperative planning for lung cancer surgery. However, distinguishing arterial from venous irrigation in chest CT images remains a challenge due to the similarity and complex structure of the arteries and veins. We propose a novel method for automatic separation of pulmonary arteries and veins from chest CT images. The method consists of three parts. First, global connection information and local feature information are used to construct a complete topological tree and ensure the continuity of vessel reconstruction. Second, the proposed multitask classification network automatically learns the differences between arteries and veins at different scales to reduce classification errors caused by changes in terminal vessel characteristics. Finally, the topology optimizer considers interbranch and intrabranch topological relationships to maintain spatial consistency and avoid the misclassification of A/V irrigations. We validate the performance of the method on chest CT images. Compared with manual classification, the proposed method achieves an average accuracy of 96.2% on noncontrast chest CT. In addition, the method generalizes well: accuracies of 93.8% and 94.8% are obtained for CT scans from other devices and other modes, respectively. The pulmonary artery-vein reconstruction obtained by the proposed method can provide better assistance for preoperative planning of lung cancer surgery.

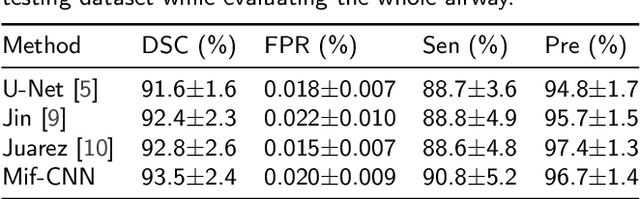

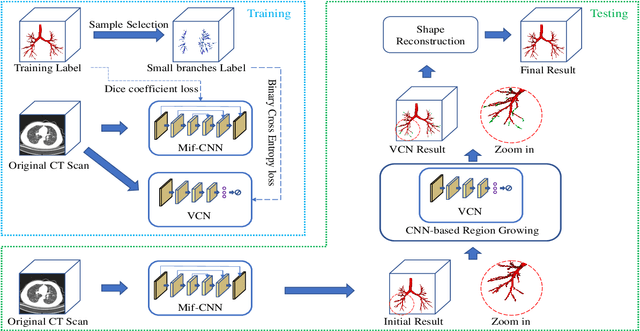

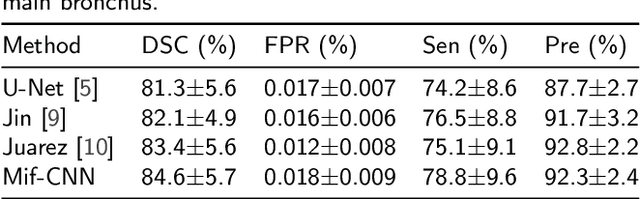

Coarse-to-fine Airway Segmentation Using Multi information Fusion Network and CNN-based Region Growing

Feb 25, 2021

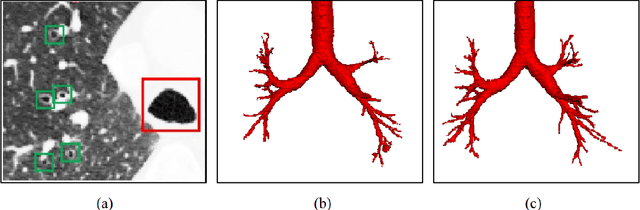

Abstract: Automatic airway segmentation from chest computed tomography (CT) scans plays an important role in pulmonary disease diagnosis and computer-assisted therapy. However, low contrast at peripheral branches and complex tree-like structures remain the two main challenges for airway segmentation. Recent research has shown that deep learning methods perform well in segmentation tasks. Motivated by these works, a coarse-to-fine segmentation framework is proposed to obtain a complete airway tree. Our framework segments the overall airway and small branches via the multi-information fusion convolutional neural network (Mif-CNN) and CNN-based region growing, respectively. In Mif-CNN, atrous spatial pyramid pooling (ASPP) is integrated into a U-shaped network to expand the receptive field and capture multi-scale information. Meanwhile, boundary and location information are incorporated into semantic information. This information is fused to help Mif-CNN utilize additional context knowledge and useful features. To improve the segmentation result, the CNN-based region growing method is designed to focus on obtaining small branches. A voxel classification network (VCN), which can fully capture the rich information around each voxel, is applied to classify the voxels into airway and non-airway. In addition, a shape reconstruction method is used to refine the airway tree.
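The region growing step described in this abstract can be pictured with a minimal sketch: starting from a seed voxel, neighbouring voxels are added whenever a classifier accepts them. In the paper that classifier is the VCN; here it is replaced by a plain intensity threshold purely for illustration. The function `region_grow`, the predicate `is_airway`, the 6-connectivity, and the toy volume are all assumptions, not details from the paper.

```python
from collections import deque


def region_grow(volume, seed, is_airway):
    """Grow a 6-connected region from `seed`, keeping voxels accepted by `is_airway`.

    `volume` is a nested list indexed as volume[z][y][x]. `is_airway` is a
    hypothetical stand-in for a learned voxel classifier such as the VCN.
    The seed is assumed to lie inside the airway.
    """
    depth, height, width = len(volume), len(volume[0]), len(volume[0][0])
    offsets = [(1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1)]
    region = {seed}
    queue = deque([seed])
    while queue:
        z, y, x = queue.popleft()
        for dz, dy, dx in offsets:
            nz, ny, nx = z + dz, y + dy, x + dx
            if (nz, ny, nx) in region:
                continue
            if not (0 <= nz < depth and 0 <= ny < height and 0 <= nx < width):
                continue
            if is_airway(volume[nz][ny][nx]):
                region.add((nz, ny, nx))
                queue.append((nz, ny, nx))
    return region


# Toy single-slice volume with made-up intensities (low values mimic air).
toy_volume = [[
    [-950, -940, 40],
    [30, -930, 50],
    [20, 35, -960],
]]

grown = region_grow(toy_volume, seed=(0, 0, 0), is_airway=lambda v: v < -900)
print(sorted(grown))
# [(0, 0, 0), (0, 0, 1), (0, 1, 1)]
```

The air-like voxel at `(0, 2, 2)` is left out because it is not connected to the seed, which is the property that lets region growing follow thin branches without picking up unrelated low-intensity regions.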

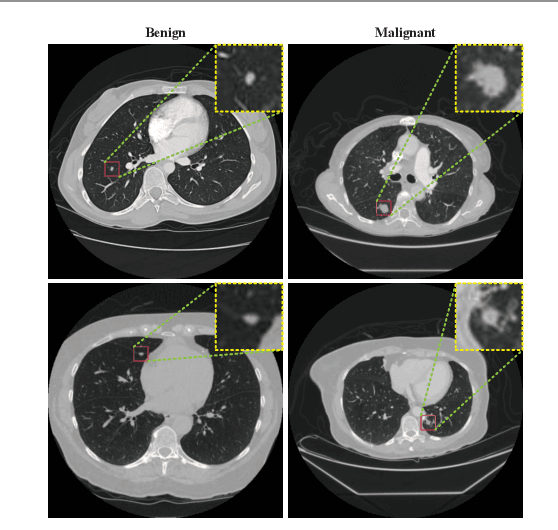

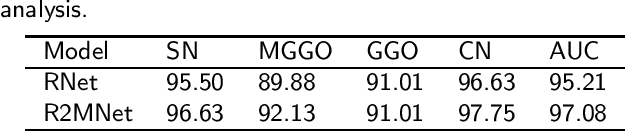

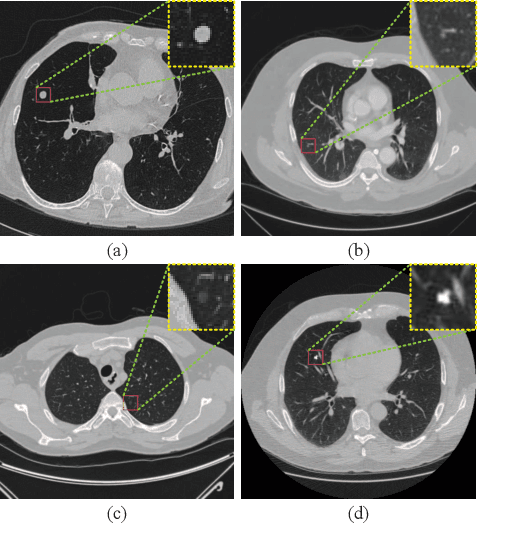

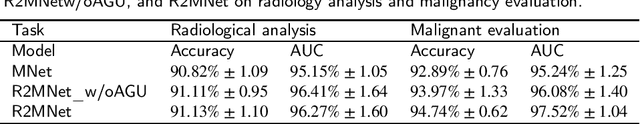

Interpretative Computer-aided Lung Cancer Diagnosis: from Radiology Analysis to Malignancy Evaluation

Feb 22, 2021

Abstract: Background and Objective: Computer-aided diagnosis (CAD) systems promote diagnosis effectiveness and alleviate pressure on radiologists. A CAD system for lung cancer diagnosis includes nodule candidate detection and nodule malignancy evaluation. Recently, deep learning-based pulmonary nodule detection has reached satisfactory performance ready for clinical application. However, deep learning-based nodule malignancy evaluation depends on heuristic inference from low-dose computed tomography volume to malignant probability, which lacks clinical cognition. Methods: In this paper, we propose a joint radiology analysis and malignancy evaluation network (R2MNet) to evaluate pulmonary nodule malignancy via radiology characteristics analysis. Radiological features are extracted as channel descriptors to highlight specific regions of the input volume that are critical for nodule malignancy evaluation. In addition, for model explanations, we propose channel-dependent activation mapping (CDAM) to visualize the features and shed light on the decision process of the deep neural network (DNN). Results: Experimental results on the LIDC-IDRI dataset demonstrate that the proposed method achieved an area under the curve (AUC) of 96.27% on nodule radiology analysis and an AUC of 97.52% on nodule malignancy evaluation. In addition, explanations of CDAM features showed that the shape and density of nodule regions were two critical factors that influence a nodule to be inferred as malignant, which conforms with the diagnosis cognition of experienced radiologists. Conclusion: By incorporating radiology analysis with nodule malignancy evaluation, the network inference process conforms to the diagnostic procedure of radiologists and increases the confidence of evaluation results. Besides, model interpretation with CDAM features sheds light on the regions that DNNs focus on when they estimate nodule malignancy probabilities.
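For readers unfamiliar with the metric, the AUC reported above can be read as the probability that a randomly chosen malignant case receives a higher score than a randomly chosen benign one. The sketch below computes it that way on made-up scores; the function `auc` and the toy labels and scores are illustrative assumptions and have no connection to the LIDC-IDRI results.

```python
def auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positives and negatives.

    Each (positive, negative) pair counts 1 if the positive scores higher,
    0.5 on a tie, and 0 otherwise; the AUC is the mean over all pairs.
    """
    positives = [s for l, s in zip(labels, scores) if l == 1]
    negatives = [s for l, s in zip(labels, scores) if l == 0]
    if not positives or not negatives:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


# Made-up example: 1 = malignant, 0 = benign.
toy_labels = [1, 1, 0, 0, 1, 0]
toy_scores = [0.9, 0.8, 0.7, 0.3, 0.6, 0.2]
print(f"AUC = {auc(toy_labels, toy_scores):.4f}")
# AUC = 0.8889
```

The pairwise form is quadratic in the number of samples, which is fine for illustration; rank-based implementations give the same value more efficiently on large datasets.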
