Shalutha Rajapakshe

Giving Sense to Inputs: Toward an Accessible Control Framework for Shared Autonomy

Jan 28, 2025

Abstract: While shared autonomy offers significant potential for assistive robotics, key questions remain about how to effectively map 2D control inputs to 6D robot motions. An intuitive framework should allow users to input commands effortlessly, with the robot responding as expected, without users needing to anticipate the impact of their inputs. In this article, we propose a dynamic input mapping framework that links joystick movements to motions on control frames defined along a trajectory encoded with canal surfaces. We evaluate our method in a user study with 20 participants, demonstrating that our input mapping framework reduces workload and improves usability compared to a baseline mapping with similar motion encoding. To prepare for deployment in assistive scenarios, we built on developments from the accessible gaming community to select an accessible control interface. We then tested the system in an exploratory study, where three wheelchair users controlled the robot for both daily living activities and a creative painting task, demonstrating its feasibility for users closer to our target population.

GeoSACS: Geometric Shared Autonomy via Canal Surfaces

Apr 15, 2024

Abstract: We introduce GeoSACS, a geometric framework for shared autonomy (SA). In variable environments, SA methods can be used to combine robotic capabilities with real-time human input in a way that offloads the physical task from the human. To remain intuitive, it can be helpful to simplify the requirements for human input (i.e., reduce the dimensionality), which creates the challenge of mapping low-dimensional human inputs to the higher-dimensional control space of robots without requiring large amounts of data. We built GeoSACS on canal surfaces, a geometric framework that represents potential robot trajectories as a canal from as few as two demonstrations. GeoSACS maps user corrections onto the cross-sections of this canal to provide an efficient SA framework. We extend canal surfaces to consider orientation and update the control frames to support intuitive mapping from user input to robot motions. Finally, we demonstrate GeoSACS in two preliminary studies, including a complex manipulation task where a robot loads laundry into a washer.

Collaborative Ground-Aerial Multi-Robot System for Disaster Response Missions with a Low-Cost Drone Add-On for Off-the-Shelf Drones

Apr 14, 2023

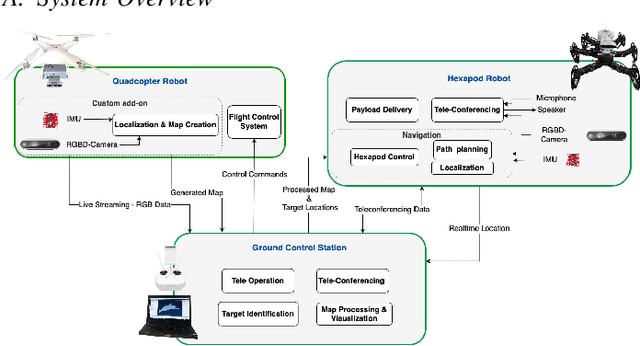

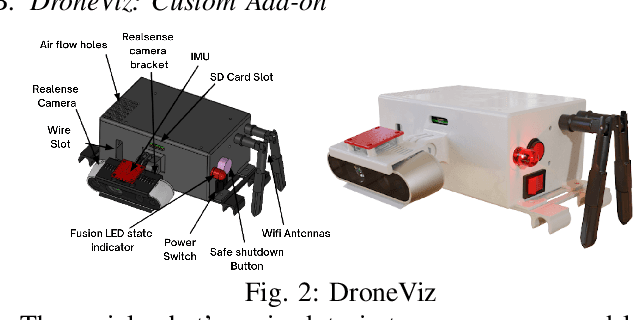

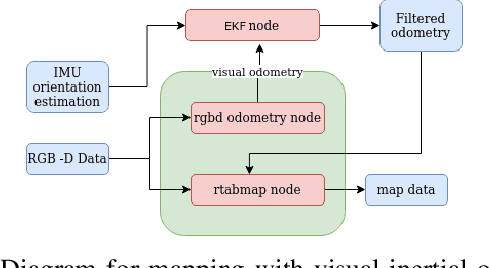

Abstract: In disaster-stricken environments, it is vital to assess the damage quickly, analyse the stability of the environment, and allocate resources to the most vulnerable areas where victims might be present. These missions are difficult and dangerous for humans to conduct directly. We investigate a collaborative approach that uses the complementary capabilities of aerial and ground robots to address this problem. With an increased field of view, faster speed, and compact size, the aerial robot explores the area and creates a 3D feature-based map graph of the environment while providing a live video stream to the ground control station. Once the aerial robot finishes the exploration run, the ground control station processes the map and sends it to the ground robot. The ground robot, with its longer operation time, static stability, payload delivery and tele-conference capabilities, can then autonomously navigate to identified high-vulnerability locations. We have conducted experiments using a quadcopter and a hexapod robot in an indoor modelled environment with obstacles and uneven ground. Additionally, we have developed a low-cost drone add-on with value-added capabilities, such as victim detection, that can be attached to an off-the-shelf drone. The system was assessed for cost-effectiveness, energy efficiency, and scalability.

Design and Development of a Research Oriented Low Cost Robotics Platform with a Novel Dynamic Global Path Planning Approach

Mar 19, 2022

Abstract: Autonomous navigation systems based on computer vision sensors often require sophisticated robotics platforms, which are very expensive. This poses a barrier to the implementation and testing of complex localization, mapping, and navigation algorithms that are vital in robotics applications. To address this issue, this work compares mobile robotics platforms supported by the Robot Operating System (ROS) and presents an end-to-end implementation of an autonomous navigation system on a low-cost educational robotics platform, AlphaBot2, integrated with the Intel RealSense D435 camera. Furthermore, a novel approach to implementing dynamic path planners as global path planners in the ROS framework is presented. We evaluate the performance of this approach and highlight the improvements that could be achieved through a dynamic global path planner. This low-cost modified AlphaBot2 robotics platform, along with the proposed dynamic global path planning approach, will be useful for researchers and students seeking hands-on experience with computer vision-based navigation systems.
