SeungHwan An

Causally Disentangled Generative Variational AutoEncoder

Feb 23, 2023

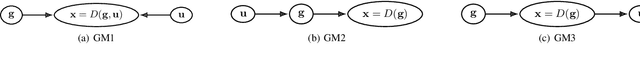

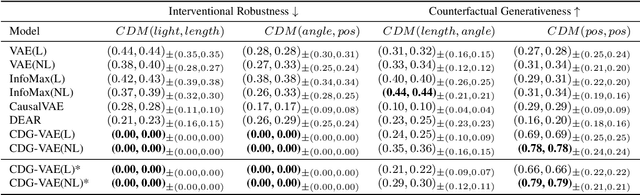

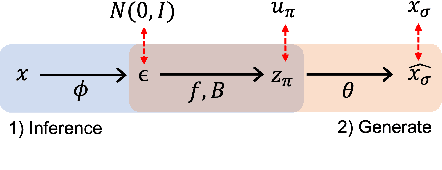

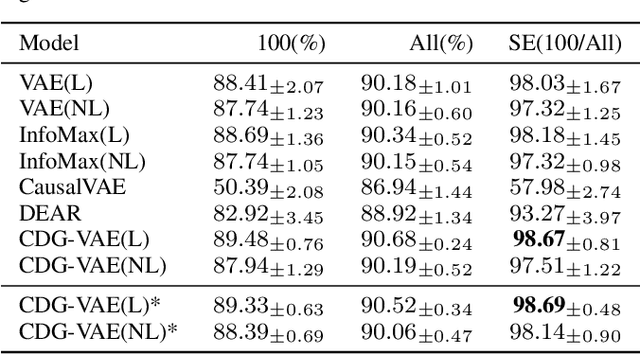

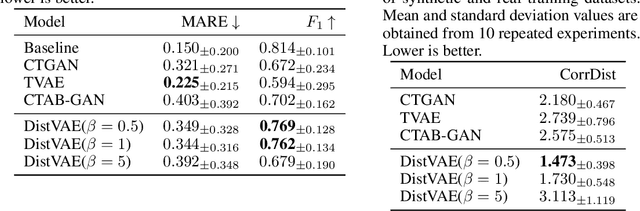

Abstract: We propose a new supervised learning method for the Variational AutoEncoder (VAE) that learns a causally disentangled representation and simultaneously achieves causally disentangled generation (CDG). In this paper, CDG is defined as the ability of a generative model to decode an output precisely according to the causally disentangled representation. We found that supervised regularization of the encoder alone is not enough to obtain a generative model with CDG. We therefore explore necessary and sufficient conditions on the decoder and the causal effect for achieving CDG. Moreover, we propose a generalized metric that measures how causally disentangled generative a model is. Numerical results on image and tabular datasets corroborate our arguments.
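
The abstract does not say how the causal effect among latent factors is parameterized. As an illustration only, the sketch below assumes a linear structural causal model (SCM) over the latent vector, `z = A^T z + eps`, where `A` is a hypothetical weighted adjacency matrix of a DAG. This is a common modelling choice for causal latent spaces, not necessarily the one used in this paper. It shows the behaviour that generation "according to the causally disentangled representation" has to respect: intervening on a cause changes its descendants, while intervening on an effect leaves its causes untouched.

```python
import numpy as np

# Assumption (not from the paper): latent factors follow a linear SCM
#   z = A^T z + eps,  with A[i, j] != 0 meaning factor i directly causes factor j.

def linear_scm(eps, A):
    """Solve z = A^T z + eps, i.e. z = (I - A^T)^{-1} eps.

    A must describe a DAG so that (I - A^T) is invertible.
    """
    d = A.shape[0]
    return np.linalg.solve(np.eye(d) - A.T, eps)

def intervene(eps, A, index, value):
    """Apply do(z[index] = value) and propagate the effect to descendants."""
    A_do = A.copy()
    A_do[:, index] = 0.0   # cut all edges pointing into the intervened factor
    eps_do = eps.copy()
    eps_do[index] = value  # with no parents left, z[index] equals its noise term
    return linear_scm(eps_do, A_do)

# Hypothetical two-factor example: z0 -> z1 with weight 2.
A = np.array([[0.0, 2.0],
              [0.0, 0.0]])
eps = np.array([1.0, 0.5])
z = linear_scm(eps, A)            # z1 = 2 * 1.0 + 0.5 = 2.5
z_cause = intervene(eps, A, 0, 3.0)   # changing the cause moves z1 to 2 * 3.0 + 0.5 = 6.5
z_effect = intervene(eps, A, 1, 0.0)  # changing the effect leaves z0 at 1.0
```

A decoder that is causally disentangled generative in the abstract's sense would have to map `z_cause` and `z_effect` to outputs that reflect these propagated (or non-propagated) changes, which is a constraint on the decoder and not only on the encoder.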

Distributional Variational AutoEncoder To Infinite Quantiles and Beyond Gaussianity

Feb 22, 2023

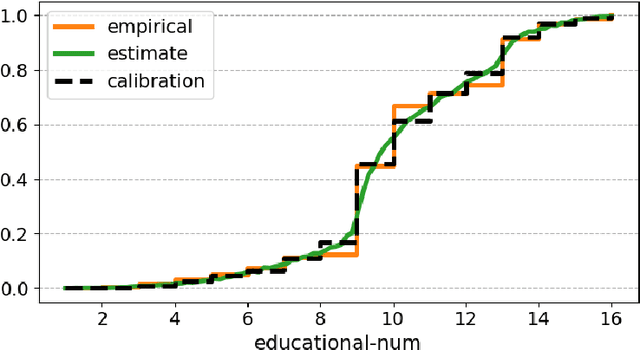

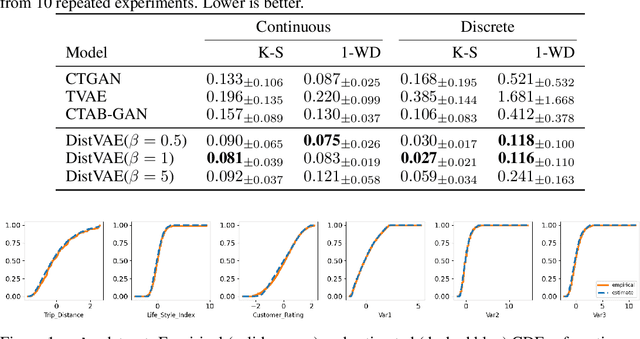

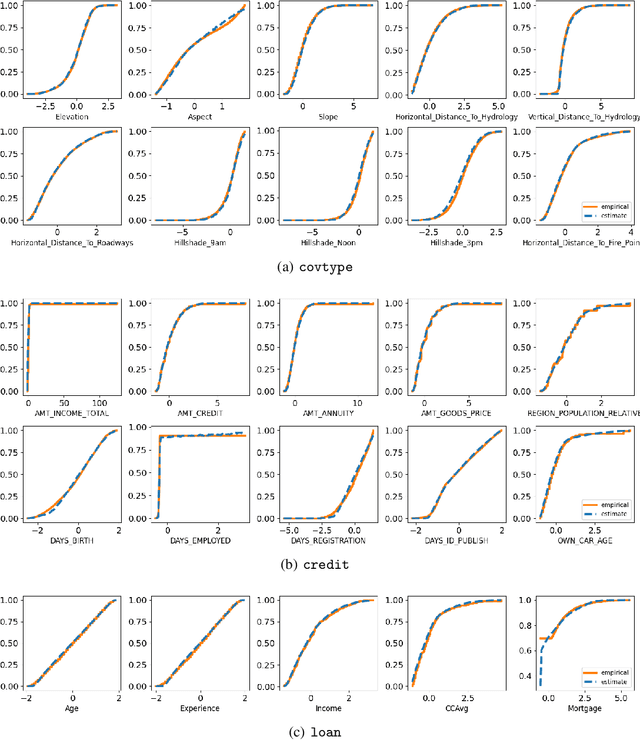

Abstract: The Gaussianity assumption has been pointed out as the main limitation of the Variational AutoEncoder (VAE), despite its computational convenience. To improve the distributional capacity (i.e., the expressive power of the distributional family) of the VAE, we propose a new VAE learning method with a nonparametric distributional assumption on its generative model. By estimating an infinite number of conditional quantiles, our proposed VAE model directly estimates the conditional cumulative distribution function; we call this approach distributional learning of the VAE. Furthermore, by adopting the continuous ranked probability score (CRPS) loss, our proposed learning method becomes computationally tractable. To evaluate how well the underlying distribution of the dataset is captured, we apply our model to synthetic data generation based on inverse transform sampling. Numerical results on real tabular datasets corroborate our arguments.
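
A minimal sketch of the two computational ideas named in the abstract, under stated assumptions. First, the CRPS of a distribution F at an observation y can be written as an integral of the quantile (pinball) loss over all quantile levels, `CRPS(F, y) = 2 * integral over alpha in (0, 1) of rho_alpha(y - F^{-1}(alpha))`, with `rho_alpha(u) = u * (alpha - 1{u < 0})`; this is why fitting "infinitely many" quantiles collapses into a single tractable loss. Second, inverse transform sampling draws from F by evaluating its quantile function at uniform random levels. The quantile function `q_fn` is a hypothetical piecewise-linear stand-in for the decoder's conditional quantile function, and the midpoint-rule grid is an illustrative choice; the paper's actual parameterization may differ.

```python
import numpy as np

def pinball_loss(y, q, alpha):
    """Quantile (pinball) loss rho_alpha(y - q) = u * (alpha - 1{u < 0})."""
    u = y - q
    return u * (alpha - (u < 0).astype(float))

def crps_from_quantiles(y, quantile_fn, n_grid=1000):
    """Approximate CRPS(F, y) = 2 * int_0^1 rho_alpha(y - F^{-1}(alpha)) d alpha
    with a midpoint rule over a uniform grid of quantile levels."""
    alphas = (np.arange(n_grid) + 0.5) / n_grid
    return 2.0 * np.mean(pinball_loss(y, quantile_fn(alphas), alphas))

def inverse_transform_sample(quantile_fn, n, rng):
    """Draw n samples by pushing U ~ Uniform(0, 1) through the quantile function."""
    return quantile_fn(rng.uniform(0.0, 1.0, size=n))

# Hypothetical monotone quantile function (piecewise linear in alpha),
# standing in for a decoder output conditioned on one latent code.
knots_alpha = np.array([0.0, 0.5, 1.0])
knots_value = np.array([-1.0, 0.0, 3.0])

def q_fn(alpha):
    return np.interp(alpha, knots_alpha, knots_value)

rng = np.random.default_rng(0)
loss = crps_from_quantiles(0.3, q_fn)               # training signal for one observation
samples = inverse_transform_sample(q_fn, 5, rng)    # synthetic values in [-1, 3]
```

As a sanity check on the identity, a point mass at m (a constant quantile function) gives `CRPS = |y - m|`, and the standard uniform distribution at y = 0.5 gives 1/12.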

EXoN: EXplainable encoder Network

May 28, 2021

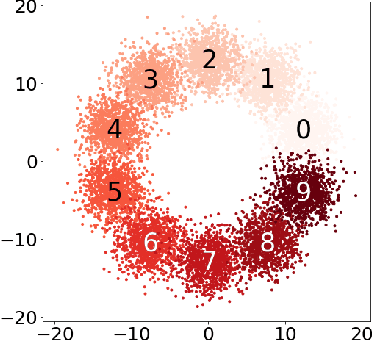

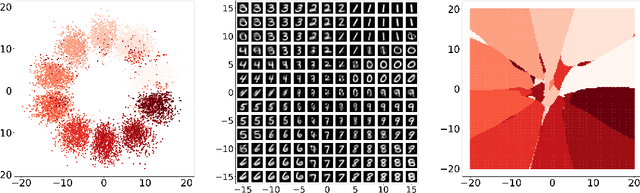

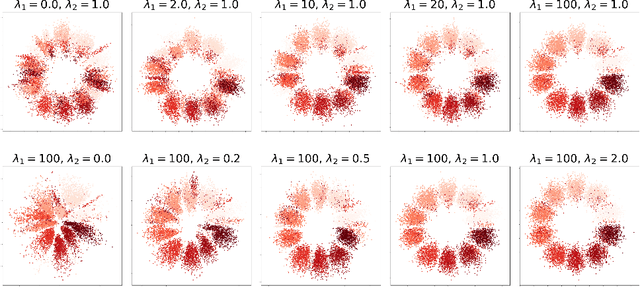

Abstract: We propose a new semi-supervised learning method for the Variational AutoEncoder (VAE) that yields an explainable latent space through the EXplainable encoder Network (EXoN). The EXoN provides two useful tools for implementing a VAE. First, we can freely assign a conceptual center of the latent distribution to a specific label. The latent space of the VAE is then separated according to the labels of observations via the multi-modal property of the Gaussian mixture distribution. Second, we can easily investigate the latent subspace through simple statistics obtained from the EXoN. We found that both the negative cross-entropy and the Kullback-Leibler divergence play a crucial role in constructing an explainable latent space, and that the variability of samples generated by our proposed model depends on a specific subspace, called the 'activated latent subspace'. With the MNIST and CIFAR-10 datasets, we show that the EXoN can produce an explainable latent space that effectively represents the labels and characteristics of the images.
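
To make "assign a conceptual center of the latent distribution for a specific label" concrete, here is a sketch of the two ingredients the abstract names, under assumptions that are not taken from the paper: a diagonal-Gaussian encoder output, a Gaussian mixture prior with one user-chosen center per label and a shared isotropic variance, and equal mixture weights. The KL term pulls a labeled observation toward its label's center, and the cross-entropy term uses the mixture's posterior over components as a classifier. The centers and variance below are hypothetical, and EXoN's exact objective is not reproduced here.

```python
import numpy as np

def kl_diag_gaussians(mu_q, var_q, mu_p, var_p):
    """KL( N(mu_q, diag(var_q)) || N(mu_p, diag(var_p)) ), summed over dimensions."""
    return 0.5 * np.sum(np.log(var_p / var_q)
                        + (var_q + (mu_q - mu_p) ** 2) / var_p - 1.0)

def component_responsibilities(z, centers, var, weights):
    """Posterior p(label | z) under a Gaussian mixture with shared isotropic variance."""
    # log N(z; c_k, var * I) up to a constant that is the same for every component
    log_lik = -0.5 * np.sum((z - centers) ** 2, axis=1) / var
    logits = np.log(weights) + log_lik
    logits -= logits.max()  # numerical stability before exponentiating
    probs = np.exp(logits)
    return probs / probs.sum()

# Hypothetical setup: 2 labels in a 2-D latent space, centers chosen by the user.
centers = np.array([[-3.0, 0.0], [3.0, 0.0]])
prior_var = 1.0
weights = np.array([0.5, 0.5])

# Hypothetical encoder output for one labeled observation with label 0.
mu, var, label = np.array([-2.5, 0.2]), np.array([0.5, 0.5]), 0
kl_term = kl_diag_gaussians(mu, var, centers[label], np.full(2, prior_var))
resp = component_responsibilities(mu, centers, prior_var, weights)
ce_term = -np.log(resp[label])  # cross-entropy against the known label
```

Fixing the centers in advance is what makes each label's region of the latent space interpretable: the mixture component, and therefore its mean, is known before training.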
