EXoN: EXplainable encoder Network


May 28, 2021

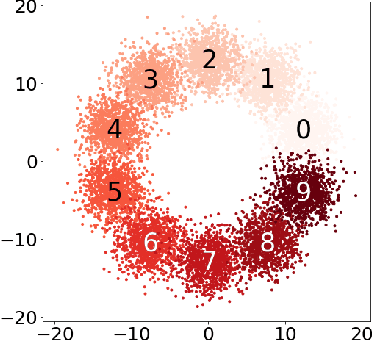

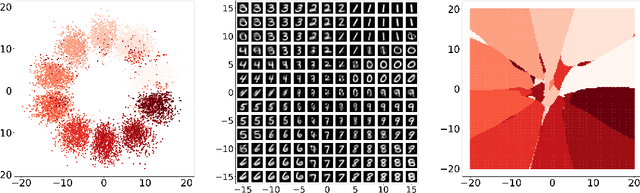

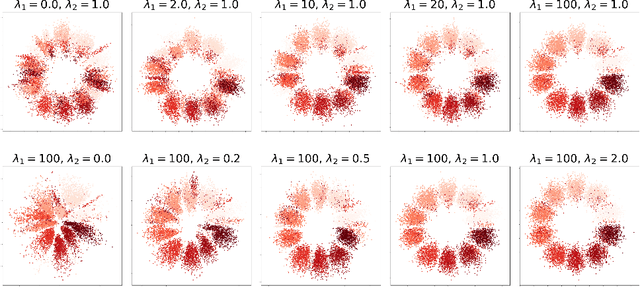

We propose a new semi-supervised learning method for the Variational AutoEncoder (VAE) that yields an explainable latent space through an EXplainable encoder Network (EXoN). EXoN provides two useful tools for implementing a VAE. First, we can freely assign a conceptual center of the latent distribution to a specific label. The latent space of the VAE is then separated according to the labels of the observations, giving it the multi-modal structure of a Gaussian mixture distribution. Second, we can easily investigate the latent subspace using a simple statistic obtained from EXoN. We found that both the negative cross-entropy and the Kullback-Leibler divergence play a crucial role in constructing an explainable latent space, and that the variability of samples generated by our proposed model depends on a specific subspace, which we call the "activated latent subspace". On the MNIST and CIFAR-10 datasets, we show that EXoN can produce an explainable latent space that effectively represents the labels and characteristics of the images.
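To make the idea of a user-assigned label center more concrete, here is a minimal sketch. It is not the authors' code and not EXoN's exact objective. It assumes that the encoder outputs a diagonal Gaussian q(z|x) = N(mu, diag(exp(logvar))) and that each label y has a Gaussian prior component N(c_y, I) whose center c_y is chosen by hand. Under those assumptions, the closed-form Kullback-Leibler divergence below is the term that pulls a labeled observation's latent code toward its assigned center. The names `CENTERS`, `PRIOR_LOGVAR`, `kl_diag_gaussians`, and `labeled_kl`, as well as the unit prior variance, are hypothetical choices made for illustration.

```python
import math
from typing import Sequence

# Hypothetical user-assigned label centers in a 2-D latent space (illustrative assumption).
CENTERS = {0: [-2.0, 0.0], 1: [2.0, 0.0]}
# Assumed unit prior variance in every latent dimension (log-variance 0).
PRIOR_LOGVAR = [0.0, 0.0]


def kl_diag_gaussians(mu_q: Sequence[float], logvar_q: Sequence[float],
                      mu_p: Sequence[float], logvar_p: Sequence[float]) -> float:
    """Closed-form KL( N(mu_q, diag(exp(logvar_q))) || N(mu_p, diag(exp(logvar_p))) )."""
    if not (len(mu_q) == len(logvar_q) == len(mu_p) == len(logvar_p)):
        raise ValueError("all parameter vectors must have the same length")
    kl = 0.0
    for mq, lq, mp, lp in zip(mu_q, logvar_q, mu_p, logvar_p):
        var_q, var_p = math.exp(lq), math.exp(lp)
        # Per-dimension term: 0.5 * (log var_p - log var_q + (var_q + (mq - mp)^2) / var_p - 1)
        kl += 0.5 * (lp - lq + (var_q + (mq - mp) ** 2) / var_p - 1.0)
    return kl


def labeled_kl(mu: Sequence[float], logvar: Sequence[float], label: int) -> float:
    """KL from the encoder's posterior to the prior component centered at the label's center."""
    return kl_diag_gaussians(mu, logvar, CENTERS[label], PRIOR_LOGVAR)


if __name__ == "__main__":
    # A latent code sitting exactly on label 0's center with unit variance costs nothing...
    print(labeled_kl([-2.0, 0.0], [0.0, 0.0], 0))  # 0.0
    # ...but the same code is heavily penalized if the observation is labeled 1.
    print(labeled_kl([-2.0, 0.0], [0.0, 0.0], 1))  # 8.0
```

Because the penalty grows with the squared distance to the assigned center, the labeled codes cluster around their chosen centers, which yields the multi-modal, mixture-like latent space described above. How EXoN handles unlabeled observations and combines this term with the cross-entropy term is described in the paper and is not reproduced here.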
