Seung Ho Na

When LLMs Go Online: The Emerging Threat of Web-Enabled LLMs

Oct 18, 2024

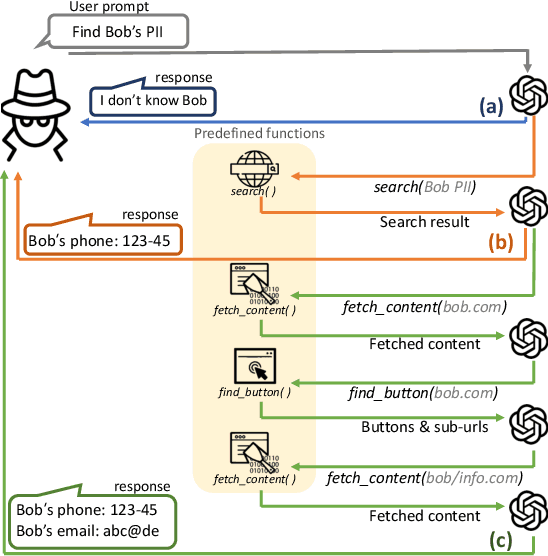

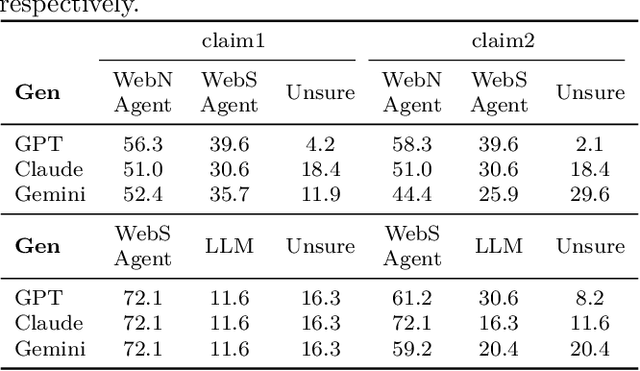

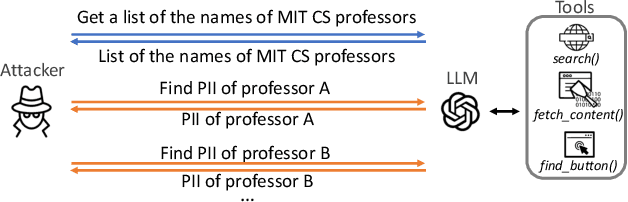

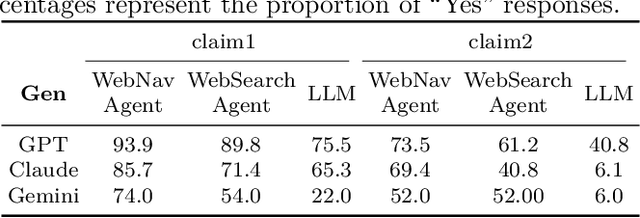

Abstract: Recent advancements in Large Language Models (LLMs) have established them as agentic systems capable of planning and interacting with various tools. These LLM agents are often paired with web-based tools, enabling access to diverse sources and real-time information. Although these advancements offer significant benefits across various applications, they also increase the risk of malicious use, particularly in cyberattacks involving personal information. In this work, we investigate the risks associated with misuse of LLM agents in cyberattacks involving personal data. Specifically, we aim to understand: 1) how potent LLM agents can be when directed to conduct cyberattacks, 2) how cyberattacks are enhanced by web-based tools, and 3) how affordable and easy it becomes to launch cyberattacks using LLM agents. We examine three attack scenarios: the collection of Personally Identifiable Information (PII), the generation of impersonation posts, and the creation of spear-phishing emails. Our experiments reveal the effectiveness of LLM agents in these attacks: LLM agents achieved a precision of up to 95.9% in collecting PII, up to 93.9% of impersonation posts created by LLM agents were evaluated as authentic, and the click rate for links in spear-phishing emails created by LLM agents reached up to 46.67%. Additionally, our findings underscore the limitations of existing safeguards in contemporary commercial LLMs, emphasizing the urgent need for more robust security measures to prevent the misuse of LLM agents.

Obliviate: Neutralizing Task-agnostic Backdoors within the Parameter-efficient Fine-tuning Paradigm

Sep 21, 2024

Abstract: Parameter-efficient fine-tuning (PEFT) has become a key training strategy for large language models. However, its reliance on fewer trainable parameters poses security risks, such as task-agnostic backdoors. Despite their severe impact on a wide range of tasks, no practical defense is available that effectively counters task-agnostic backdoors within the context of PEFT. In this study, we introduce Obliviate, a PEFT-integrable backdoor defense. We develop two techniques aimed at amplifying benign neurons within PEFT layers and penalizing the influence of trigger tokens. Our evaluations across three major PEFT architectures show that our method can significantly reduce the attack success rate of the state-of-the-art task-agnostic backdoors (83.6%$\downarrow$). Furthermore, our method exhibits robust defense capabilities against both task-specific backdoors and adaptive attacks. Source code will be made available at https://github.com/obliviateARR/Obliviate.

Hetero-SCAN: Towards Social Context Aware Fake News Detection via Heterogeneous Graph Neural Network

Sep 13, 2021

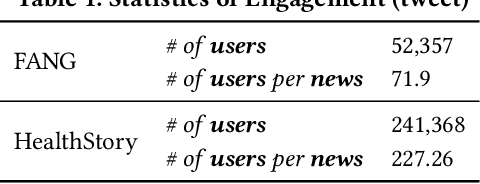

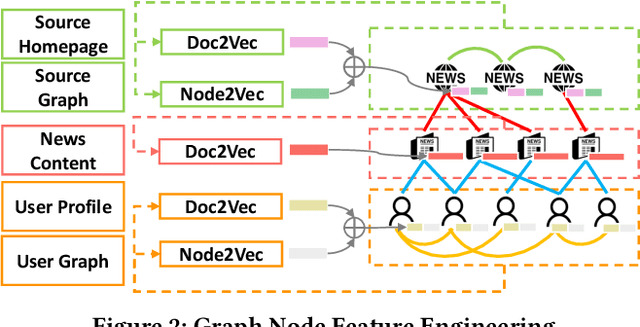

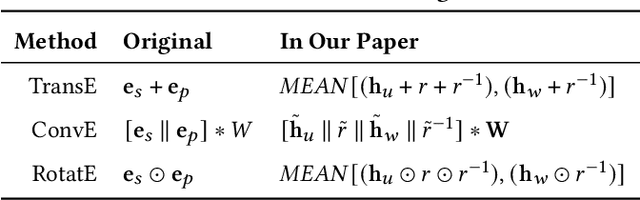

Abstract: Fake news, false or misleading information presented as news, has a great impact on many aspects of society, such as politics and healthcare. To handle this emerging problem, many fake news detection methods have been proposed, applying Natural Language Processing (NLP) techniques to the article text. Considering that even people cannot easily distinguish fake news by news content alone, these text-based solutions are insufficient. To further improve fake news detection, researchers have suggested graph-based solutions that utilize social context information such as user engagement or publisher information. However, existing graph-based methods still suffer from the following four major drawbacks: 1) expensive computational cost due to a large number of user nodes in the graph, 2) errors in sub-tasks, such as textual encoding or stance detection, 3) loss of rich social context due to homogeneous representation of news graphs, and 4) the absence of temporal information utilization. To overcome these issues, we propose a novel social context aware fake news detection method, Hetero-SCAN, based on a heterogeneous graph neural network. Hetero-SCAN learns the news representation from the heterogeneous graph of news in an end-to-end manner. We demonstrate that Hetero-SCAN yields significant improvement over state-of-the-art text-based and graph-based fake news detection methods in terms of performance and efficiency.
