Sergio Vitale

An Efficient Machine Learning Framework for Forest Height Estimation from Multi-Polarimetric Multi-Baseline SAR data

Jul 28, 2025

Abstract:Accurate forest height estimation is crucial for climate change monitoring and carbon cycle assessment. Synthetic Aperture Radar (SAR), particularly in multi-channel configurations, has long supported 3D forest structure reconstruction through model-based techniques. More recently, data-driven approaches using Machine Learning (ML) and Deep Learning (DL) have enabled new opportunities for forest parameter retrieval. This paper introduces FGump, a forest height estimation framework based on gradient boosting that uses multi-channel SAR processing with LiDAR profiles as Ground Truth (GT). Unlike typical ML and DL approaches that require large datasets and complex architectures, FGump ensures a strong balance between accuracy and computational efficiency, using a limited set of hand-designed features and avoiding heavy preprocessing (e.g., calibration and/or quantization). Evaluated under both classification and regression paradigms, the proposed framework demonstrates that the regression formulation enables fine-grained, continuous estimates and avoids quantization artifacts, yielding more precise measurements. Experimental results confirm that FGump outperforms State-of-the-Art (SOTA) AI-based and classical methods, achieving higher accuracy and significantly lower training and inference times.
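To make the idea of height regression by gradient boosting concrete, here is a minimal sketch in plain Python. It is not the FGump implementation: the two synthetic features, the linear height model, the use of depth-1 trees (stumps), and all function names are assumptions chosen only for illustration. Each boosting round fits a stump to the current residuals and adds a shrunken copy of it to the prediction.

```python
import random

def fit_stump(X, residuals):
    """Least-squares stump: pick the feature/threshold split that best fits the residuals."""
    best = None
    n = len(X)
    for f in range(len(X[0])):
        order = sorted(range(n), key=lambda i: X[i][f])
        for k in range(1, n):
            left = [residuals[i] for i in order[:k]]
            right = [residuals[i] for i in order[k:]]
            mean_l = sum(left) / len(left)
            mean_r = sum(right) / len(right)
            sse = (sum((r - mean_l) ** 2 for r in left)
                   + sum((r - mean_r) ** 2 for r in right))
            if best is None or sse < best[0]:
                thr = 0.5 * (X[order[k - 1]][f] + X[order[k]][f])
                best = (sse, f, thr, mean_l, mean_r)
    return best[1:]

def stump_predict(stump, row):
    f, thr, mean_l, mean_r = stump
    return mean_l if row[f] <= thr else mean_r

def fit_boosting(X, y, rounds=30, lr=0.3):
    """Gradient boosting for squared loss: every round fits a stump to the residuals."""
    base = sum(y) / len(y)
    pred = [base] * len(y)
    stumps = []
    for _ in range(rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        pred = [pi + lr * stump_predict(stump, row) for pi, row in zip(pred, X)]
    return base, lr, stumps

def predict(model, row):
    base, lr, stumps = model
    return base + lr * sum(stump_predict(s, row) for s in stumps)

def rmse(y_true, y_pred):
    return (sum((a - b) ** 2 for a, b in zip(y_true, y_pred)) / len(y_true)) ** 0.5

def make_data(n, rng):
    # Hypothetical data: feature 0 drives the height (metres), feature 1 is irrelevant.
    X = [[rng.random(), rng.random()] for _ in range(n)]
    y = [10.0 + 20.0 * row[0] + rng.gauss(0.0, 0.5) for row in X]
    return X, y

rng = random.Random(0)
X_train, y_train = make_data(120, rng)
X_test, y_test = make_data(60, rng)

model = fit_boosting(X_train, y_train)
rmse_boost = rmse(y_test, [predict(model, row) for row in X_test])
rmse_mean = rmse(y_test, [model[0]] * len(y_test))  # constant-mean baseline
print(f"boosted RMSE: {rmse_boost:.2f} m, mean-only RMSE: {rmse_mean:.2f} m")
```

Because the model outputs a real-valued height, there is no height-bin rounding of the kind a classification formulation would introduce.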

Assessment of Spectral based Solutions for the Detection of Floating Marine Debris

Aug 19, 2024

Abstract:Typically, the detection of marine debris relies on in-situ campaigns that are characterized by huge human effort and limited spatial coverage. Following the need for a rapid solution for the detection of floating plastic, methods based on remote sensing data have been proposed recently. Their main limitation is the lack of a general reference for evaluating performance. Recently, the Marine Debris Archive (MARIDA) has been released as a standard dataset to develop and evaluate Machine Learning (ML) algorithms for the detection of Marine Plastic Debris. The MARIDA dataset has been created to simplify the comparison between detection solutions, with the aim of stimulating research in the field of marine environment preservation. In this work, an assessment of spectral-based solutions is proposed by evaluating performance on the MARIDA dataset. The outcome highlights the need for a precise reference for fair evaluation.

CATSNet: a context-aware network for Height Estimation in a Forested Area based on Pol-TomoSAR data

Mar 29, 2024

Abstract:Tropical forests are a key component of the global carbon cycle. With plans for upcoming space-borne missions like BIOMASS to monitor forestry, several airborne missions, including the TropiSAR and AfriSAR campaigns, have been successfully carried out. Typical Synthetic Aperture Radar Tomography (TomoSAR) methods involve complex models with low accuracy and high computation costs. In recent years, deep learning methods have also gained attention in the TomoSAR framework, showing interesting performance. Recently, a solution based on a fully connected Tomographic Neural Network (TSNN) has demonstrated its effectiveness in accurately estimating forest and ground heights by exploiting the pixel-wise elements of the covariance matrix derived from TomoSAR data. This work instead goes beyond the pixel-wise approach to define a context-aware deep learning-based solution named CATSNet. A convolutional neural network is considered to leverage patch-based information and extract features from a neighborhood rather than focus on a single pixel. The training is conducted by considering TomoSAR data as the input and Light Detection and Ranging (LiDAR) values as the ground truth. The experimental results show striking advantages in both performance and generalization ability by leveraging context information within Multiple Baselines (MB) TomoSAR data across different polarimetric modalities, surpassing existing techniques.

Pansharpening by convolutional neural networks in the full resolution framework

Nov 16, 2021

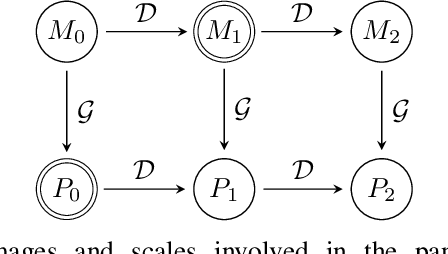

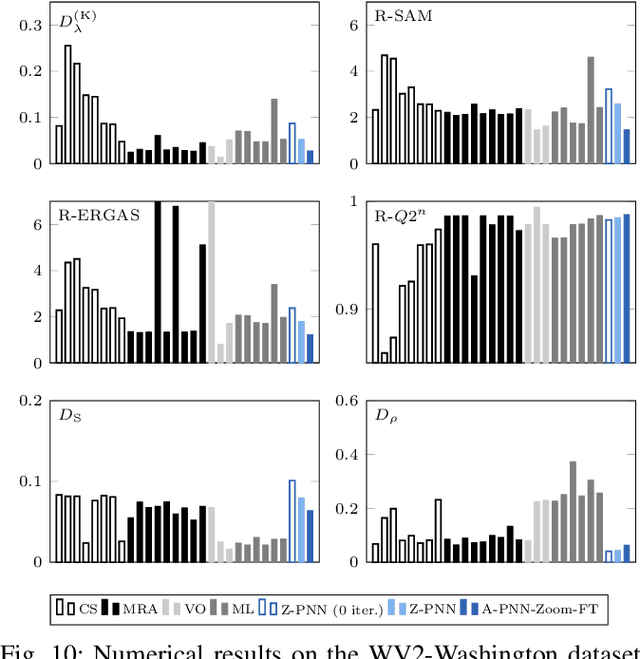

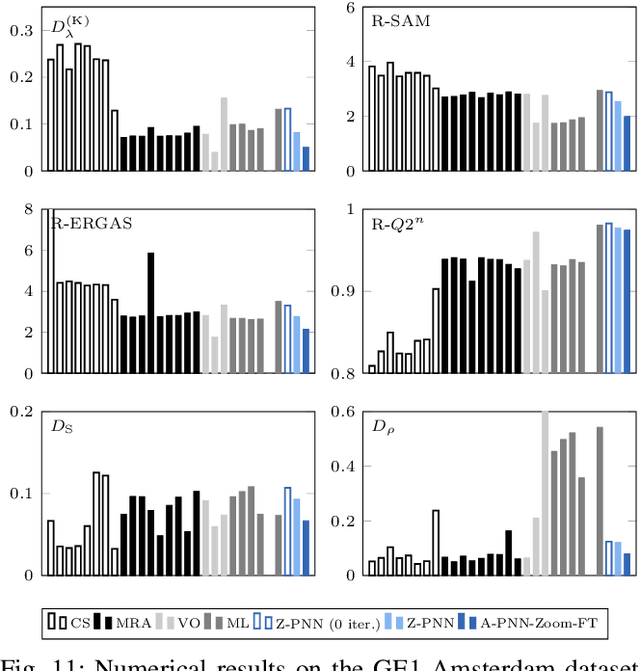

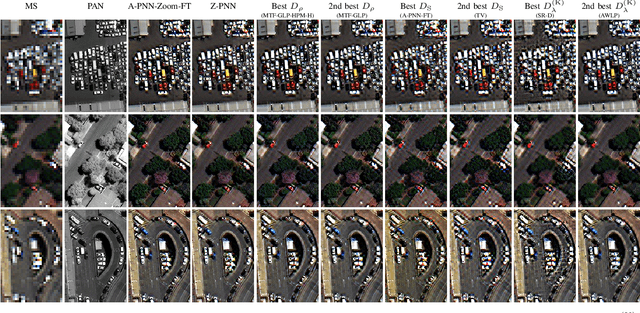

Abstract:In recent years, there has been a growing interest in deep learning-based pansharpening. Research has mainly focused on architectures. However, lacking a ground truth, model training is also a major issue. A popular approach is to train networks in a reduced resolution domain, using the original data as ground truths. The trained networks are then used on full resolution data, relying on an implicit scale invariance hypothesis. Results are generally good at reduced resolution, but more questionable at full resolution. Here, we propose a full-resolution training framework for deep learning-based pansharpening. Training takes place in the high resolution domain, relying only on the original data, with no loss of information. To ensure spectral and spatial fidelity, suitable losses are defined, which force the pansharpened output to be consistent with the available panchromatic and multispectral input. Experiments carried out on WorldView-3, WorldView-2, and GeoEye-1 images show that methods trained with the proposed framework guarantee an excellent performance in terms of both full-resolution numerical indexes and visual quality. The framework is fully general, and can be used to train and fine-tune any deep learning-based pansharpening network.

Multi-Objective CNN Based Algorithm for SAR Despeckling

Jun 16, 2020

Abstract:Deep learning (DL) in remote sensing has become an effective operational tool: it is widely used in applications such as change detection, image restoration, segmentation, detection and classification. In the synthetic aperture radar (SAR) domain, the application of DL techniques is not straightforward due to the non-trivial interpretation of SAR images, caused especially by the presence of speckle. Several deep learning solutions for SAR despeckling have been proposed in the last few years. Most of these solutions focus on the definition of different network architectures with similar cost functions that do not involve SAR image properties. In this paper, a convolutional neural network (CNN) with a multi-objective cost function that accounts for the spatial and statistical properties of the SAR image is proposed. This is achieved by defining a dedicated loss function obtained as the weighted combination of three different terms. Each of these terms is dedicated mainly to one of the following SAR image characteristics: spatial details, speckle statistical properties and strong scatterers preservation. Their combination makes it possible to balance these effects. Moreover, a specifically designed architecture is proposed to effectively extract distinctive features within the considered framework. Experiments on simulated and real SAR images show the accuracy of the proposed method compared to state-of-the-art despeckling algorithms, from both a quantitative and a qualitative point of view. Considering such SAR properties in the cost function is crucial for correct noise rejection and object preservation in different underlying scenarios, such as homogeneous, heterogeneous and extremely heterogeneous.
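The weighted combination of three terms described in this abstract can be written schematically as below. The symbols are assumptions introduced here for illustration (the abstract gives neither the notation nor the form of the individual terms): each term targets one of the three listed characteristics, and the non-negative weights set their relative importance.

```latex
\mathcal{L} \;=\; \lambda_{1}\,\mathcal{L}_{\text{spatial}}
          \;+\; \lambda_{2}\,\mathcal{L}_{\text{speckle}}
          \;+\; \lambda_{3}\,\mathcal{L}_{\text{scatterers}},
\qquad \lambda_{1},\lambda_{2},\lambda_{3} \ge 0
```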

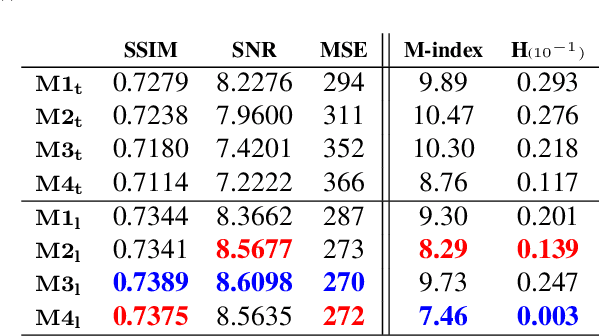

Complexity Analysis of an Edge Preserving CNN SAR Despeckling Algorithm

Apr 17, 2020

Abstract:SAR images are affected by multiplicative noise that impairs their interpretation. In the last decades several methods for SAR denoising have been proposed, and in recent years great attention has moved towards deep learning based solutions. Based on our last proposed convolutional neural network for SAR despeckling, here we explore the effect of the complexity of the network. More precisely, once a dataset has been fixed, we carry out an analysis of the network performance with respect to the number of layers and the number of features the network is composed of. Evaluations on simulated and real data are carried out. The results show that deeper networks generalize better on both simulated and real images.

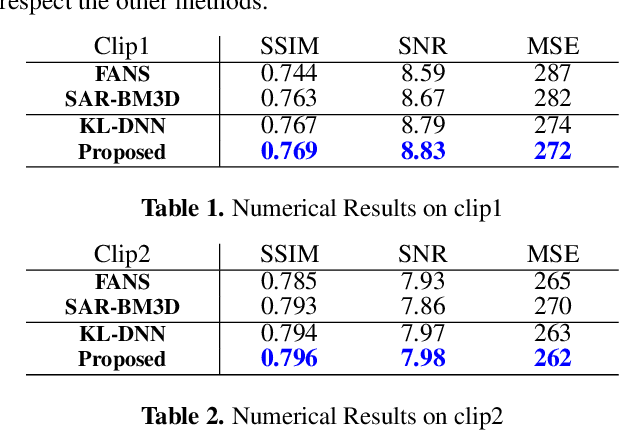

Edge Preserving CNN SAR Despeckling Algorithm

Feb 05, 2020

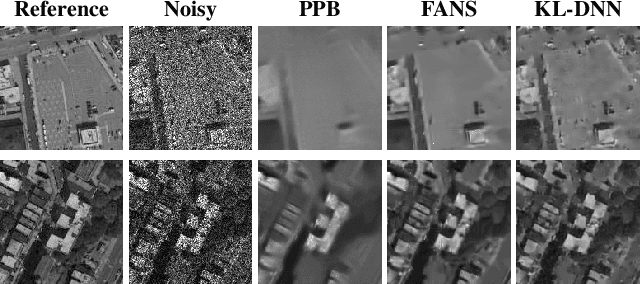

Abstract:SAR despeckling is a key tool for Earth Observation. Interpretation of SAR images is impaired by speckle, a multiplicative noise related to interference of backscattering from the illuminated scene towards the sensor. Reducing the noise is a crucial task for the understanding of the scene. Based on the results of our previous solution KL-DNN, in this work we define a new cost function for training a convolutional neural network for despeckling. The aim is to control the edge preservation and to better filter man-made structures and urban areas, which are very challenging for KL-DNN. The results show a clear improvement in non-homogeneous areas while keeping the good results in homogeneous ones. Results on both simulated and real data are shown in the paper.

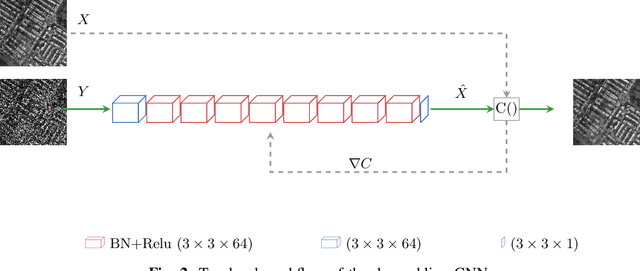

A Novel Cost Function for Despeckling using Convolutional Neural Networks

Jun 11, 2019

Abstract:Removing speckle noise from SAR images is still an open issue. It is well known that the interpretation of SAR images is very challenging and that despeckling algorithms are necessary to improve the ability to extract information. An urban environment makes this task harder due to the different structures and the different object scales. Following the recent spread of deep learning methods in several remote sensing applications, in this work a convolutional neural network-based algorithm for despeckling is proposed. The network is trained on simulated SAR data. The paper is mainly focused on the implementation of a cost function that takes into account both the spatial consistency of the image and the statistical properties of the noise.
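As an illustration of what training on simulated SAR data can involve, the sketch below applies multiplicative speckle to a clean intensity signal. It assumes the standard fully developed speckle model for L-look intensity data (unit-mean Gamma noise with shape L); the abstract does not state which simulation procedure the authors used, and the function names are hypothetical.

```python
import random

def add_speckle(clean_intensity, looks, rng):
    """Multiply each clean intensity by unit-mean Gamma noise (shape=looks, scale=1/looks)."""
    return [x * rng.gammavariate(looks, 1.0 / looks) for x in clean_intensity]

def equivalent_number_of_looks(samples):
    """ENL estimate on a homogeneous area: squared mean divided by variance."""
    n = len(samples)
    mean = sum(samples) / n
    var = sum((s - mean) ** 2 for s in samples) / (n - 1)
    return mean * mean / var

rng = random.Random(42)
looks = 4
clean = [5.0] * 20000            # homogeneous area with constant reflectivity
noisy = add_speckle(clean, looks, rng)

mean_noisy = sum(noisy) / len(noisy)
enl = equivalent_number_of_looks(noisy)
print(f"mean intensity: {mean_noisy:.2f}, estimated ENL: {enl:.2f}")
```

On a homogeneous area the noisy mean stays close to the clean value while the estimated ENL is close to the chosen number of looks, which is the statistical behavior a noise-aware cost function can exploit.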

A New Ratio Image Based CNN Algorithm For SAR Despeckling

Jun 10, 2019

Abstract:In the SAR domain many applications, such as classification, detection and segmentation, are impaired by speckle. Hence, despeckling of SAR images is key for scene understanding. Despeckling filters usually face a trade-off between speckle suppression and information preservation. In recent years deep learning solutions for speckle reduction have been proposed. One of the biggest issues for these methods is how to train a network given the lack of a reference. In this work we propose a convolutional neural network based solution trained on simulated data. We propose the use of a cost function taking into account both spatial and statistical properties. The aim is twofold: to overcome the trade-off between speckle suppression and detail preservation, and to find a suitable cost function for despeckling in unsupervised learning. The algorithm is validated on both real and simulated data, showing interesting performance.

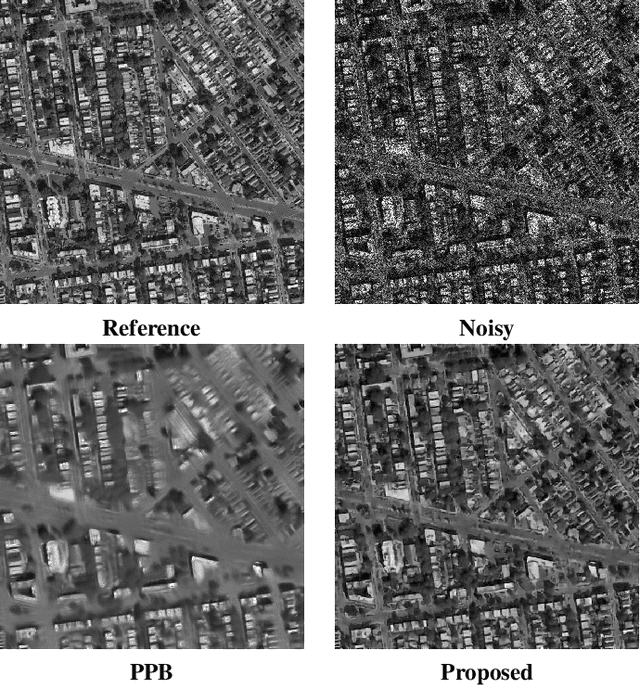

Guided patch-wise nonlocal SAR despeckling

Nov 28, 2018

Abstract:We propose a new method for SAR image despeckling which leverages information drawn from co-registered optical imagery. Filtering is performed by plain patch-wise nonlocal means, operating exclusively on SAR data. However, the filtering weights are computed by also taking into account the optical guide, which is much cleaner than the SAR data, and hence more discriminative. To avoid injecting optical-domain information into the filtered image, a SAR-domain statistical test is preliminarily performed to reject right away any risky predictor. Experiments on two SAR-optical datasets show that the proposed method suppresses speckle very effectively, preserving structural details without introducing visible filtering artifacts. Overall, the proposed method compares favourably with all state-of-the-art despeckling filters, and also with our own previous optical-guided filter.
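The mechanism described here (averaging SAR values only, with weights driven by a cleaner guide and a SAR-domain rejection test) can be sketched on a 1D signal as follows. This is a simplified illustration, not the authors' filter: it is pixel-wise rather than patch-wise in its output, the weights use only guide patch distances, and the rejection test is a plain ratio of local SAR means. The parameters `search`, `radius`, `h` and `max_ratio` are hypothetical.

```python
import math
import random

def patch(signal, center, radius):
    """Return the patch around `center`, clamping indices at the borders."""
    last = len(signal) - 1
    return [signal[min(max(center + k, 0), last)] for k in range(-radius, radius + 1)]

def guided_nlm(sar, guide, search=10, radius=2, h=0.05, max_ratio=4.0):
    out = []
    size = 2 * radius + 1
    for i in range(len(sar)):
        guide_i = patch(guide, i, radius)
        mean_i = sum(patch(sar, i, radius)) / size
        num = den = 0.0
        for j in range(max(0, i - search), min(len(sar), i + search + 1)):
            # SAR-domain test: discard predictors whose local SAR mean differs too much.
            mean_j = sum(patch(sar, j, radius)) / size
            if max(mean_i, mean_j) / min(mean_i, mean_j) > max_ratio:
                continue
            # Weight from the guide: similar guide patches get weights close to 1.
            dist = sum((a - b) ** 2 for a, b in zip(guide_i, patch(guide, j, radius)))
            w = math.exp(-dist / h)
            num += w * sar[j]     # only SAR values are averaged
            den += w
        out.append(num / den)     # den > 0: j == i always passes with weight 1
    return out

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

rng = random.Random(1)
clean = [1.0] * 100 + [10.0] * 100            # step edge in SAR intensity
guide = [0.2] * 100 + [0.8] * 100             # co-registered, noise-free guide
sar = [x * rng.gammavariate(1.0, 1.0) for x in clean]   # single-look speckle

filtered = guided_nlm(sar, guide)
print(f"MSE noisy: {mse(sar, clean):.2f}, MSE filtered: {mse(filtered, clean):.2f}")
```

Since the guide only shapes the weights, the filtered output remains a weighted average of SAR samples, which is the property the abstract emphasizes.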
