Serge Massar

Equilibrium Propagation for Non-Conservative Systems

Feb 03, 2026

Abstract:Equilibrium Propagation (EP) is a physics-inspired learning algorithm that uses stationary states of a dynamical system both for inference and learning. In its original formulation it is limited to conservative systems, i.e. to dynamics which derive from an energy function. Given their importance in applications, it is important to extend EP to nonconservative systems, i.e. systems with non-reciprocal interactions. Previous attempts to generalize EP to such systems failed to compute the exact gradient of the cost function. Here we propose a framework that extends EP to arbitrary nonconservative systems, including feedforward networks. We keep the key property of equilibrium propagation, namely the use of stationary states both for inference and learning. However, we modify the dynamics in the learning phase by a term proportional to the non-reciprocal part of the interaction so as to obtain the exact gradient of the cost function. This algorithm can also be derived using a variational formulation that generates the learning dynamics through an energy function defined over an augmented state space. Numerical experiments using the MNIST database show that this algorithm achieves better performance and learns faster than previous proposals.

Equilibrium Propagation for Learning in Lagrangian Dynamical Systems

May 13, 2025

Abstract:We propose a method for training dynamical systems governed by Lagrangian mechanics using Equilibrium Propagation. Our approach extends Equilibrium Propagation -- initially developed for energy-based models -- to dynamical trajectories by leveraging the principle of action extremization. Training is achieved by gently nudging trajectories toward desired targets and measuring how the variables conjugate to the parameters to be trained respond. This method is particularly suited to systems with periodic boundary conditions or fixed initial and final states, enabling efficient parameter updates without requiring explicit backpropagation through time. In the case of periodic boundary conditions, this approach yields the semiclassical limit of Quantum Equilibrium Propagation. Applications to systems with dissipation are also discussed.

Equilibrium Propagation: the Quantum and the Thermal Cases

May 14, 2024

Abstract:Equilibrium propagation is a recently introduced method to use and train artificial neural networks in which the network is at the minimum (more generally extremum) of an energy functional. Equilibrium propagation has shown good performance on a number of benchmark tasks. Here we extend equilibrium propagation in two directions. First we show that there is a natural quantum generalization of equilibrium propagation in which a quantum neural network is taken to be in the ground state (more generally any eigenstate) of the network Hamiltonian, with a similar training mechanism that exploits the fact that the mean energy is extremal on eigenstates. Second we extend the analysis of equilibrium propagation at finite temperature, showing that thermal fluctuations allow one to naturally train the network without having to clamp the output layer during training. We also study the low temperature limit of equilibrium propagation.
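As a rough illustration of the classical, zero-temperature mechanism this abstract builds on, the sketch below applies the standard two-phase equilibrium propagation estimate to a toy scalar model. The model is an assumption made purely for illustration and is not taken from the paper: energy E(theta, s) = s^2/2 - theta*x*s, cost C(s) = (s - y)^2/2, and a nudging strength `beta`. The function names `relax`, `ep_gradient`, and `exact_gradient` are hypothetical. The state relaxes to a minimum of E + beta*C, and the gradient of the cost is estimated from the change in dE/dtheta between the free (beta = 0) and nudged states.

```python
def relax(theta, x, y, beta, steps=500, lr=0.1):
    """Relax the state s to the minimum of E + beta * C by gradient descent.

    Toy model (an assumption for illustration):
      E(theta, s) = 0.5 * s**2 - theta * x * s
      C(s)        = 0.5 * (s - y)**2
    """
    s = 0.0
    for _ in range(steps):
        grad_s = (s - theta * x) + beta * (s - y)  # d(E + beta*C)/ds
        s -= lr * grad_s
    return s


def ep_gradient(theta, x, y, beta=1e-3):
    """Two-phase estimate of dC/dtheta from free and nudged stationary states."""
    s_free = relax(theta, x, y, beta=0.0)    # free phase: minimum of E alone
    s_nudged = relax(theta, x, y, beta=beta)  # nudged phase: minimum of E + beta*C
    dE_dtheta_free = -x * s_free
    dE_dtheta_nudged = -x * s_nudged
    return (dE_dtheta_nudged - dE_dtheta_free) / beta


def exact_gradient(theta, x, y):
    """Analytic gradient of C at the free stationary state s = theta * x."""
    return (theta * x - y) * x


if __name__ == "__main__":
    print(ep_gradient(0.5, 2.0, 3.0), exact_gradient(0.5, 2.0, 3.0))
```

For this toy model the estimate equals the exact gradient divided by (1 + beta), so the two agree in the limit of small nudging.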

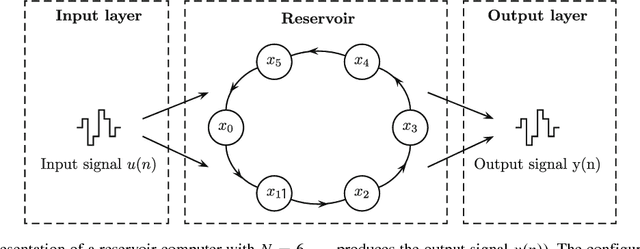

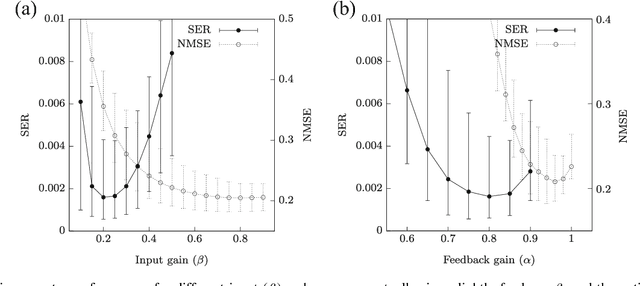

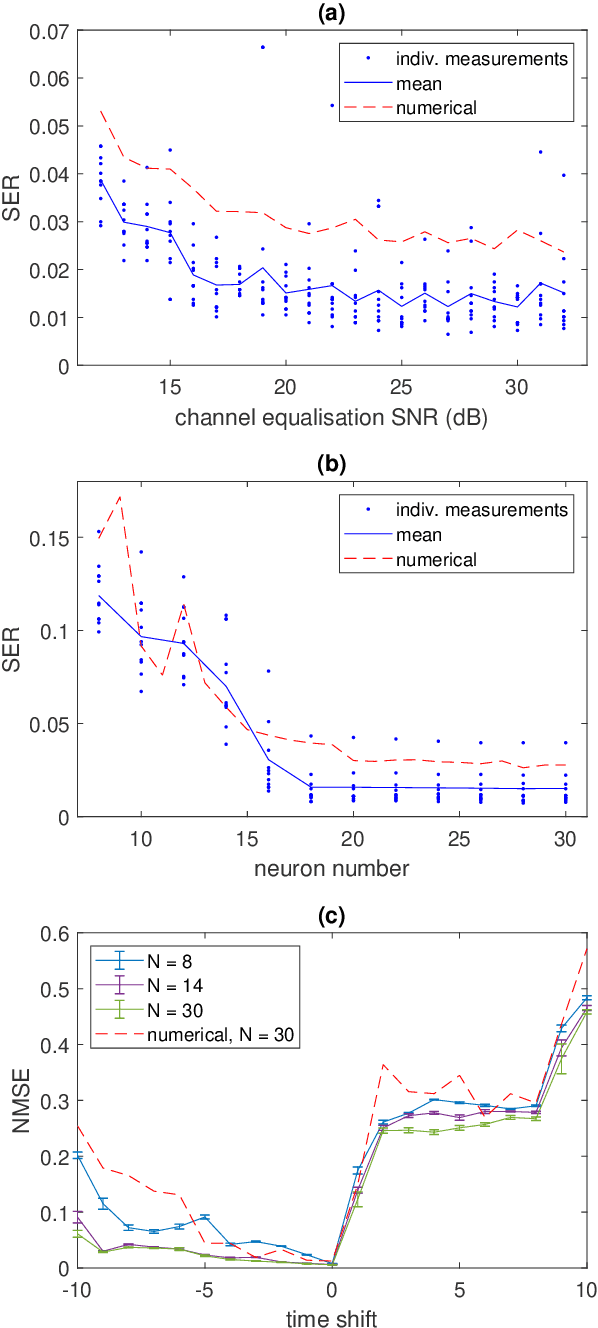

Efficient Optimisation of Physical Reservoir Computers using only a Delayed Input

Jan 25, 2024

Abstract:We present an experimental validation of a recently proposed optimization technique for reservoir computing, using an optoelectronic setup. Reservoir computing is a robust framework for signal processing applications, and the development of efficient optimization approaches remains a key challenge. The technique we address leverages solely a delayed version of the input signal to identify the optimal operational region of the reservoir, simplifying the traditionally time-consuming task of hyperparameter tuning. We verify the effectiveness of this approach on different benchmark tasks and reservoir operating conditions.

Weak Kerr Nonlinearity Boosts the Performance of Frequency-Multiplexed Photonic Extreme Learning Machines: A Multifaceted Approach

Dec 19, 2023

Abstract:We provide a theoretical, numerical, and experimental investigation of the impact of the Kerr nonlinearity on the performance of a frequency-multiplexed Extreme Learning Machine (ELM). In such an ELM, the neuron signals are encoded in the lines of a frequency comb. The Kerr nonlinearity facilitates the randomized neuron connections, allowing for efficient information mixing. A programmable spectral filter applies the output weights. The system operates in a continuous-wave regime. Even at low input peak powers, the resulting weak Kerr nonlinearity is sufficient to significantly boost the performance on several tasks. This boost already arises when one uses only the very small Kerr nonlinearity present in a 20-meter long erbium-doped fiber amplifier. In contrast, a subsequent propagation in 540 meters of a single-mode fiber improves the performance only slightly, whereas additional information mixing with a phase modulator does not result in a further improvement at all. We introduce a model to show that, in frequency-multiplexed ELMs, the Kerr nonlinearity mixes information via four-wave mixing, rather than via self- or cross-phase modulation. At low powers, this effect is quartic in the comb-line amplitudes. Numerical simulations validate our experimental results and interpretation.

Deep Photonic Reservoir Computer for Speech Recognition

Dec 11, 2023

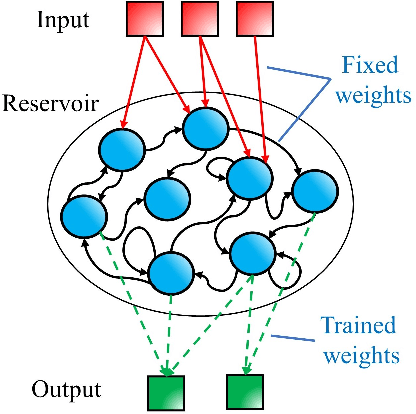

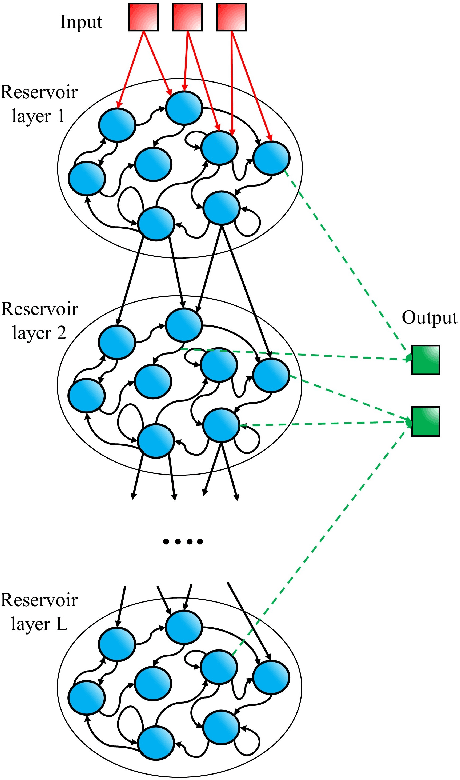

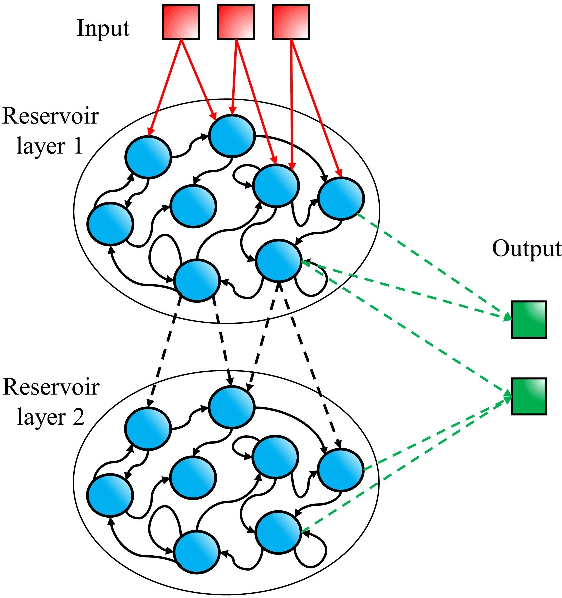

Abstract:Speech recognition is a critical task in the field of artificial intelligence and has witnessed remarkable advancements thanks to large and complex neural networks, whose training process typically requires massive amounts of labeled data and computationally intensive operations. An alternative paradigm, reservoir computing, is energy efficient and is well adapted to implementation in physical substrates, but exhibits limitations in performance when compared to more resource-intensive machine learning algorithms. In this work we address this challenge by investigating different architectures of interconnected reservoirs, all falling under the umbrella of deep reservoir computing. We propose a photonic-based deep reservoir computer and evaluate its effectiveness on different speech recognition tasks. We show specific design choices that aim to simplify the practical implementation of a reservoir computer while simultaneously achieving high-speed processing of high-dimensional audio signals. Overall, with the present work we hope to help the advancement of low-power and high-performance neuromorphic hardware.

High Speed Human Action Recognition using a Photonic Reservoir Computer

May 24, 2023

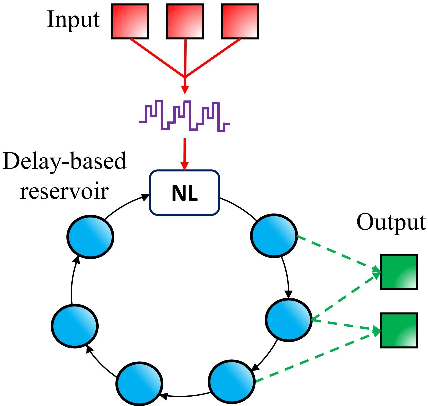

Abstract:The recognition of human actions in videos is one of the most active research fields in computer vision. The canonical approach consists of more or less complex preprocessing stages applied to the raw video data, followed by a relatively simple classification algorithm. Here we address recognition of human actions using the reservoir computing algorithm, which allows us to focus on the classifier stage. We introduce a new training method for the reservoir computer, based on "Timesteps Of Interest", which combines in a simple way short and long time scales. We study the performance of this algorithm on the well-known KTH dataset, using both numerical simulations and a photonic implementation based on a single non-linear node and a delay line. We solve the task with high accuracy and speed, to the point of allowing for processing multiple video streams in real time. The present work is thus an important step towards developing efficient dedicated hardware for video processing.

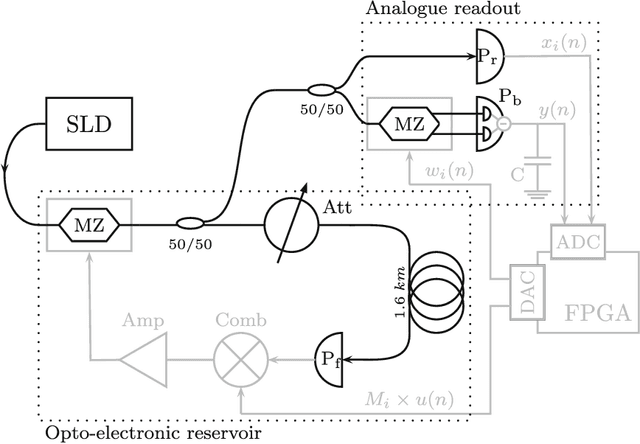

Random pattern and frequency generation using a photonic reservoir computer with output feedback

Dec 19, 2020

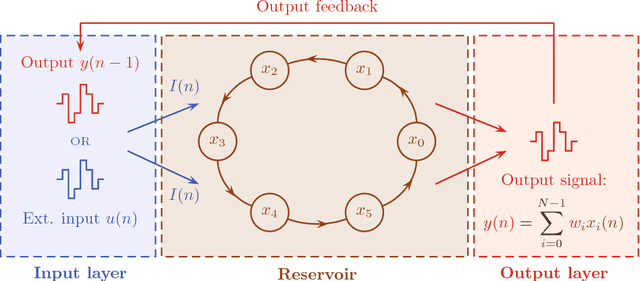

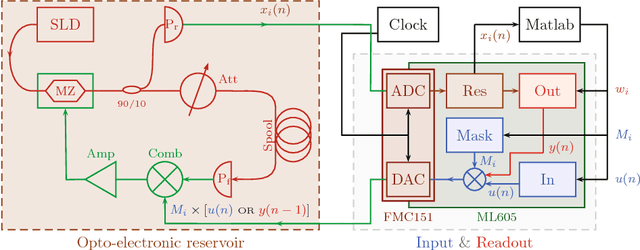

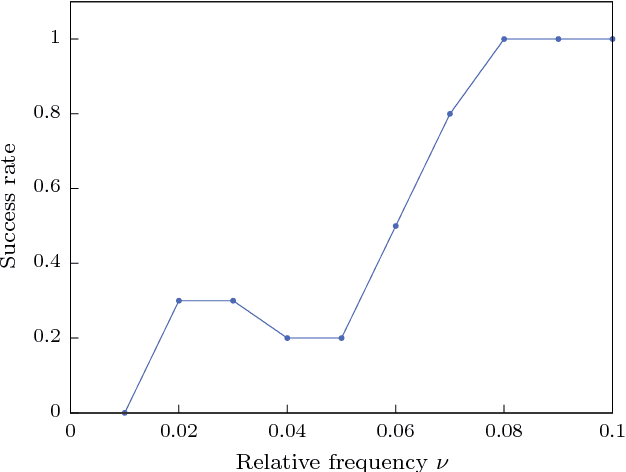

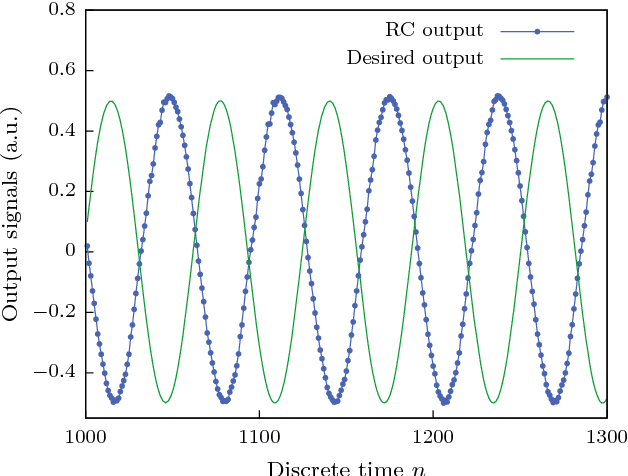

Abstract:Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its analogue implementations matches that of digital algorithms on a series of benchmark tasks. Their potential can be further increased by feeding the output signal back into the reservoir, which would allow the algorithm to be applied to time series generation. This requires, in principle, implementing a sufficiently fast readout layer for real-time output computation. Here we achieve this with a digital output layer driven by an FPGA chip. We demonstrate the first opto-electronic reservoir computer with output feedback and test it on two examples of time series generation tasks: frequency and random pattern generation. We obtain very good results on the first task, similar to idealised numerical simulations. The performance on the second one, however, suffers from the experimental noise. We illustrate this point with a detailed investigation of the consequences of noise on the performance of a physical reservoir computer with output feedback. Our work thus opens new possible applications for analogue reservoir computing and brings new insights into the impact of noise on the output feedback.

* 15 pages, 9 figures. arXiv admin note: substantial text overlap with arXiv:1802.02026

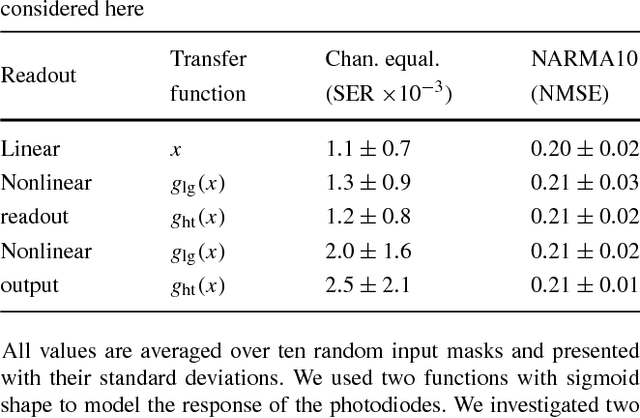

Online training for high-performance analogue readout layers in photonic reservoir computers

Dec 19, 2020

Abstract:Introduction. Reservoir Computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its hardware implementations is comparable to state-of-the-art digital algorithms on a series of benchmark tasks. The major bottleneck of these implementations is the readout layer, based on slow offline post-processing. Few analogue solutions have been proposed, but all suffered from a noticeable decrease in performance due to the added complexity of the setup. Methods. Here we propose the use of online training to solve these issues. We study the applicability of this method using numerical simulations of an experimentally feasible reservoir computer with an analogue readout layer. We also consider a nonlinear output layer, which would be very difficult to train with traditional methods. Results. We show numerically that online learning makes it possible to circumvent the added complexity of the analogue layer and obtain the same level of performance as with a digital layer. Conclusion. This work paves the way to high-performance fully-analogue reservoir computers through the use of online training of the output layers.

* 11 pages, 5 figures
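To make the idea of online readout training concrete, here is a minimal sketch in which only the output weights of a small simulated reservoir are updated, one sample at a time, with a least-mean-squares (gradient descent) rule. Everything specific in it is an assumption chosen for illustration rather than the setup studied in the paper: the ring-shaped reservoir with `tanh` nodes, the random input mask, the sine input, the one-step-delay target, the learning rate, and the hypothetical function name `run_online_readout`.

```python
import math
import random


def run_online_readout(n_nodes=20, n_steps=5000, lr=0.02, seed=0):
    """Train a linear readout online (LMS rule) on a toy ring reservoir.

    Task (illustrative assumption): reproduce the previous input sample.
    Returns the final readout weights and the squared error at every step.
    """
    rng = random.Random(seed)
    mask = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]  # fixed input mask
    state = [0.0] * n_nodes
    weights = [0.0] * (n_nodes + 1)  # one weight per node plus a bias term
    sq_errors = []
    prev_u = 0.0
    for n in range(n_steps):
        u = math.sin(0.2 * n)
        # Ring topology: node i is driven by node i-1 (index -1 wraps around).
        state = [math.tanh(0.5 * state[i - 1] + mask[i] * u)
                 for i in range(n_nodes)]
        features = state + [1.0]
        target = prev_u
        output = sum(w * f for w, f in zip(weights, features))
        err = target - output
        # Online update: one gradient step on the squared error of this sample.
        weights = [w + lr * err * f for w, f in zip(weights, features)]
        sq_errors.append(err * err)
        prev_u = u
    return weights, sq_errors


if __name__ == "__main__":
    _, errs = run_online_readout()
    print(sum(errs[:200]) / 200, sum(errs[-200:]) / 200)
```

Because each update uses only the current reservoir state and the current error, no stored history or offline matrix inversion is required, which is the property that makes this kind of rule attractive for an analogue readout.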

Parallel photonic reservoir computing based on frequency multiplexing of neurons

Aug 25, 2020

Abstract:Photonic implementations of reservoir computing can achieve state-of-the-art performance on a number of benchmark tasks, but are predominantly based on sequential data processing. Here we report a parallel implementation that uses frequency domain multiplexing of neuron states, with the potential of significantly reducing the computation time compared to sequential architectures. We illustrate its performance on two standard benchmark tasks. The present work represents an important advance towards high-speed, low-footprint, all-optical photonic information processing.
