Marc Haelterman

Random pattern and frequency generation using a photonic reservoir computer with output feedback

Dec 19, 2020

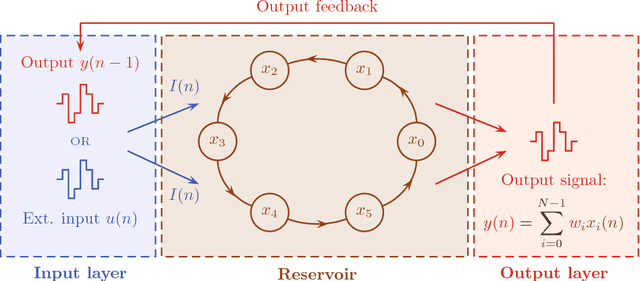

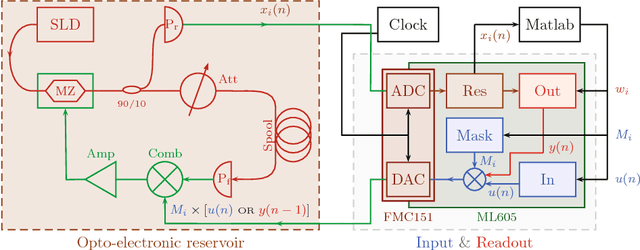

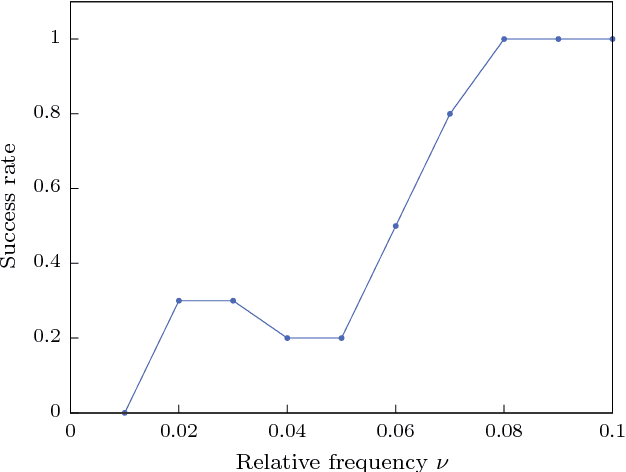

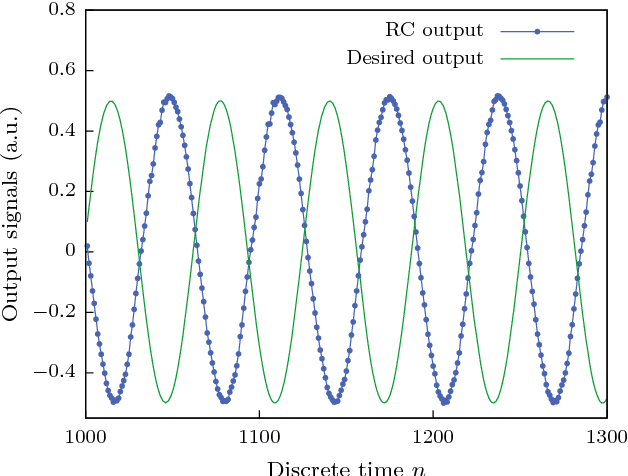

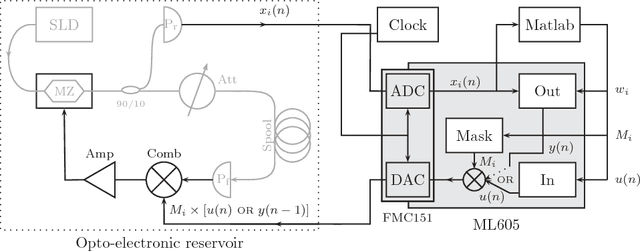

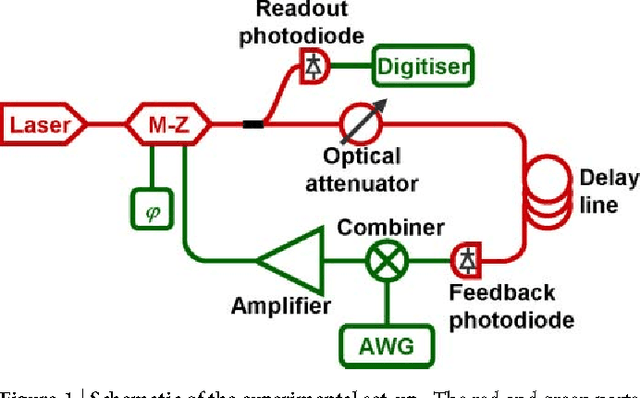

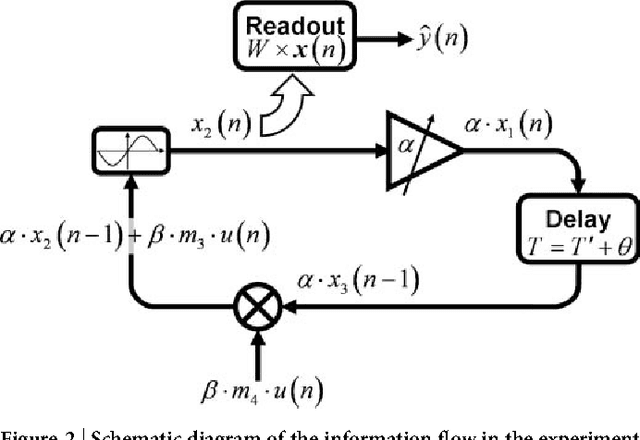

Abstract: Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its analogue implementations matches that of other digital algorithms on a series of benchmark tasks. Their potential can be further increased by feeding the output signal back into the reservoir, which would allow the algorithm to be applied to time series generation. This requires, in principle, implementing a sufficiently fast readout layer for real-time output computation. Here we achieve this with a digital output layer driven by an FPGA chip. We demonstrate the first opto-electronic reservoir computer with output feedback and test it on two examples of time series generation tasks: frequency and random pattern generation. We obtain very good results on the first task, similar to idealised numerical simulations. The performance on the second one, however, suffers from the experimental noise. We illustrate this point with a detailed investigation of the effects of noise on the performance of a physical reservoir computer with output feedback. Our work thus opens new possible applications for analogue reservoir computing and brings new insights into the impact of noise on output feedback.

* 15 pages, 9 figures. arXiv admin note: substantial text overlap with arXiv:1802.02026
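
The following is a minimal numerical sketch of the output-feedback idea, not the authors' experimental system. It assumes a simplified discrete-time ring reservoir with a sine nonlinearity, x_i(n) = sin(α x_{i-1}(n-1) + β m_i u(n-1)) with indices taken modulo N, where u is the signal fed back into the reservoir. During training the target sine wave is fed back in place of the output (teacher forcing) and a linear readout is fitted offline by ridge regression; the loop is then closed so that the reservoir is driven by its own output, as in the frequency generation task. Function names and all parameter values (`n_nodes`, `alpha`, `beta`, `lam`, a period of 20 steps) are hypothetical choices for illustration.

```python
import math
import random


def reservoir_step(x, u, mask, alpha, beta):
    """Simplified ring reservoir (an assumption, not the hardware model):
    x_i(n) = sin(alpha * x_{i-1}(n-1) + beta * mask_i * u(n-1)), indices mod N."""
    return [math.sin(alpha * x[i - 1] + beta * mask[i] * u) for i in range(len(x))]


def solve(a, b):
    """Solve a @ w = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        w[r] = (m[r][n] - sum(m[r][c] * w[c] for c in range(r + 1, n))) / m[r][r]
    return w


def ridge_readout(states, targets, lam):
    """Ridge regression: w = (X^T X + lam I)^{-1} X^T d."""
    n = len(states[0])
    gram = [[sum(s[i] * s[j] for s in states) + (lam if i == j else 0.0)
             for j in range(n)] for i in range(n)]
    rhs = [sum(s[i] * t for s, t in zip(states, targets)) for i in range(n)]
    return solve(gram, rhs)


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


def train_and_generate(n_nodes=30, period=20.0, train_len=400, washout=100,
                       gen_len=200, alpha=0.9, beta=0.1, lam=1e-3, seed=1):
    rng = random.Random(seed)
    mask = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    target = [math.sin(2 * math.pi * k / period) for k in range(train_len + 1)]

    # Teacher forcing: the target d(n-1) is fed back instead of the output y(n-1).
    x = [0.0] * n_nodes
    states, targets = [], []
    for k in range(1, train_len + 1):
        x = reservoir_step(x, target[k - 1], mask, alpha, beta)
        if k > washout:  # discard the initial transient
            states.append(x)
            targets.append(target[k])
    w = ridge_readout(states, targets, lam)

    # Normalised RMS error of the readout on the training data.
    err = sum((dot(w, s) - t) ** 2 for s, t in zip(states, targets))
    nrmse = math.sqrt(err / sum(t * t for t in targets))

    # Closed loop: the reservoir is now driven by its own output y(n-1).
    y = dot(w, x)
    generated = []
    for _ in range(gen_len):
        x = reservoir_step(x, y, mask, alpha, beta)
        y = dot(w, x)
        generated.append(y)
    return nrmse, generated


nrmse, generated = train_and_generate()
print(f"training NRMSE: {nrmse:.4f}")
print("first generated samples:", [round(v, 3) for v in generated[:5]])
```

In the paper the readout has to be computed in real time by an FPGA, whereas this sketch runs entirely in software and without noise, so whether the closed-loop output stays locked to the training frequency depends on the parameters chosen. Adding a noise term inside `reservoir_step` is one simple way to explore the noise sensitivity discussed in the abstract.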

Online training for high-performance analogue readout layers in photonic reservoir computers

Dec 19, 2020

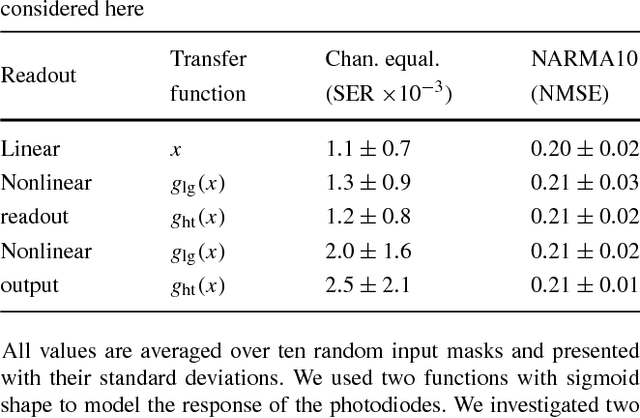

Abstract: Introduction. Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its hardware implementations is comparable to that of state-of-the-art digital algorithms on a series of benchmark tasks. The major bottleneck of these implementations is the readout layer, which relies on slow offline post-processing. A few analogue solutions have been proposed, but all suffered from a noticeable decrease in performance due to the added complexity of the setup. Methods. Here we propose the use of online training to solve these issues. We study the applicability of this method using numerical simulations of an experimentally feasible reservoir computer with an analogue readout layer. We also consider a nonlinear output layer, which would be very difficult to train with traditional methods. Results. We show numerically that online learning allows one to circumvent the added complexity of the analogue layer and to obtain the same level of performance as with a digital layer. Conclusion. This work paves the way to high-performance fully analogue reservoir computers through the use of online training of the output layers.

* 11 pages, 5 figures

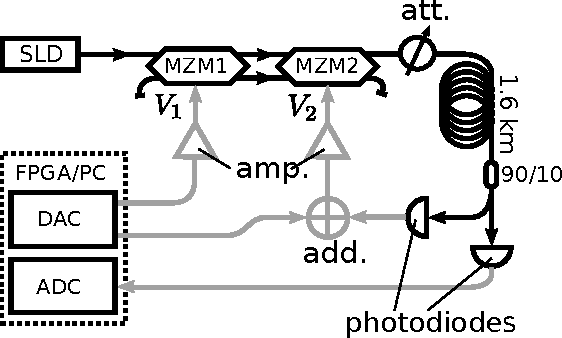

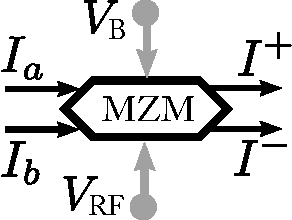

Parallel photonic reservoir computing based on frequency multiplexing of neurons

Aug 25, 2020

Abstract: Photonic implementations of reservoir computing can achieve state-of-the-art performance on a number of benchmark tasks, but are predominantly based on sequential data processing. Here we report a parallel implementation that uses frequency-domain multiplexing of neuron states, with the potential to significantly reduce the computation time compared to sequential architectures. We illustrate its performance on two standard benchmark tasks. The present work represents an important advance towards high-speed, low-footprint, all-optical photonic information processing.

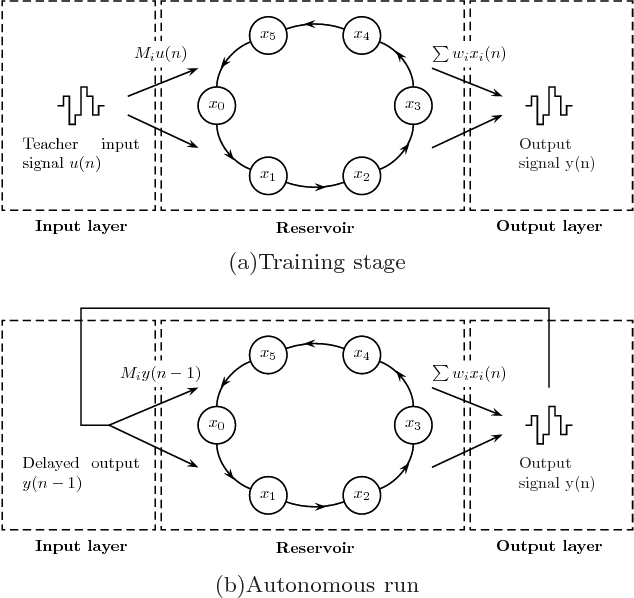

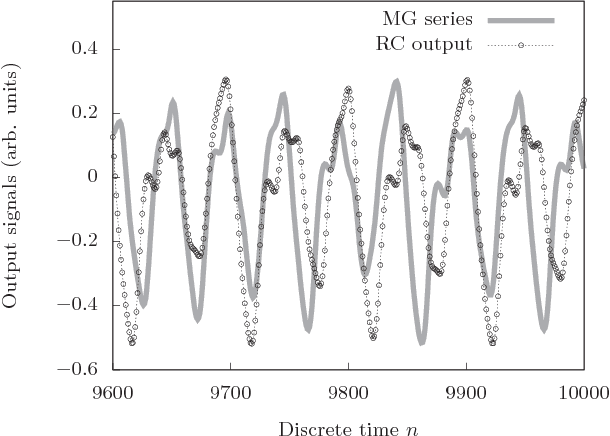

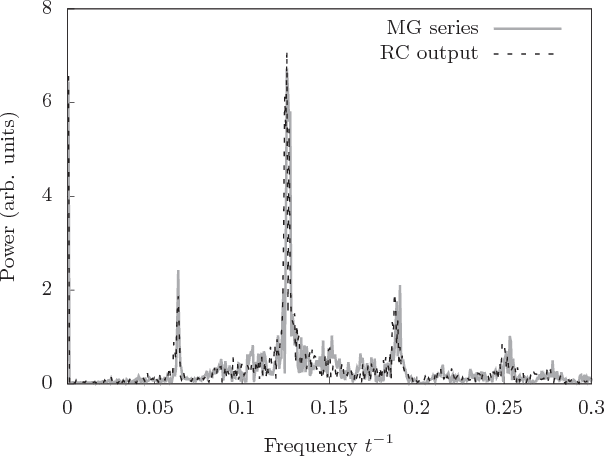

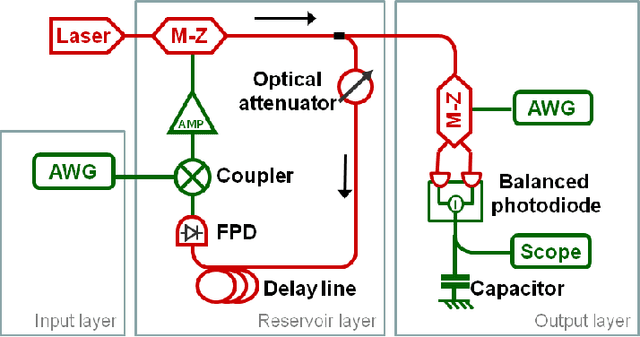

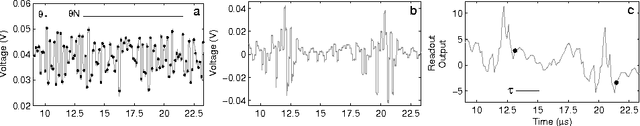

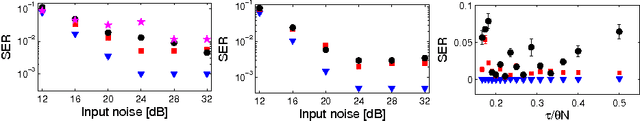

Brain-inspired photonic signal processor for periodic pattern generation and chaotic system emulation

Feb 06, 2018

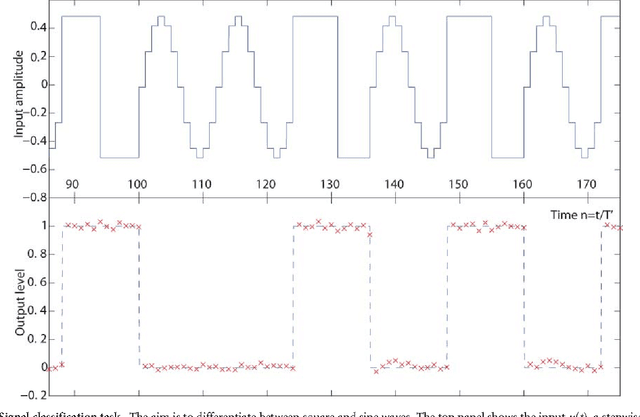

Abstract: Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. Its hardware implementations have received much attention because of their simplicity and remarkable performance on a series of benchmark tasks. In previous experiments, the output was uncoupled from the system and in most cases simply computed offline on a post-processing computer. However, numerical investigations have shown that feeding the output back into the reservoir would open the possibility of long-horizon time series forecasting. Here we present a photonic reservoir computer with output feedback, and demonstrate its capacity to generate periodic time series and to emulate chaotic systems. We study in detail the effect of experimental noise on system performance. In the case of chaotic systems, this leads us to introduce several metrics, based on standard signal processing techniques, to evaluate the quality of the emulation. Our work significantly enlarges the range of tasks that can be solved by hardware reservoir computers, and therefore the range of applications they could potentially tackle. It also raises novel questions in nonlinear dynamics and chaos theory.

* 16 pages, 18 figures

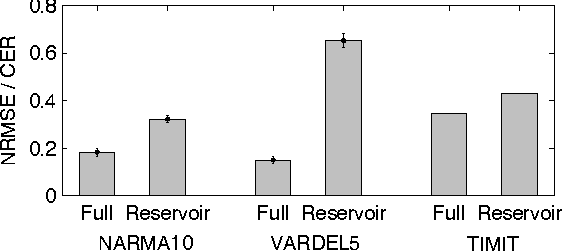

Embodiment of Learning in Electro-Optical Signal Processors

Oct 27, 2016

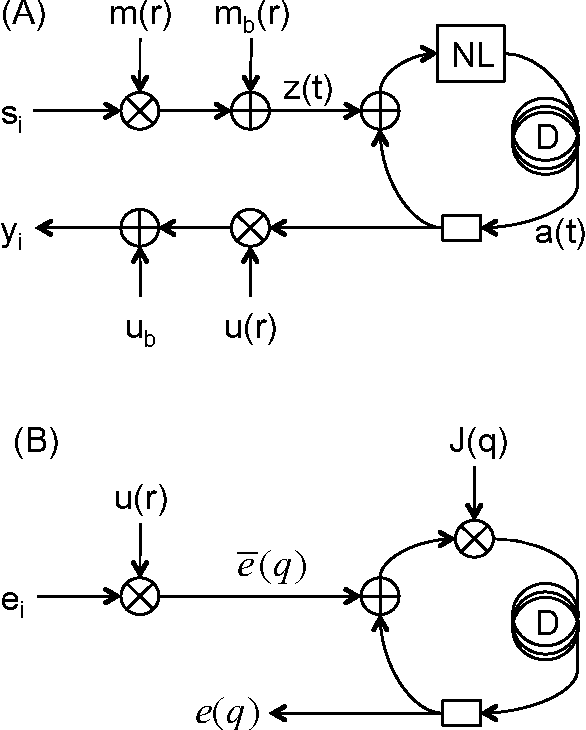

Abstract: Delay-coupled electro-optical systems have received much attention for their dynamical properties and their potential use in signal processing. In particular, it has recently been demonstrated, using the artificial intelligence algorithm known as reservoir computing, that photonic implementations of such systems solve complex tasks such as speech recognition. Here we show how the backpropagation algorithm can be physically implemented on the same electro-optical delay-coupled architecture used for computation, with only minor changes to the original design. We find that, compared with the case where the backpropagation algorithm is not used, the error rate of the resulting computing device, evaluated on three benchmark tasks, decreases considerably. This demonstrates that electro-optical analog computers can embody a large part of their own training process, allowing them to be applied to new, more difficult tasks.

* Main text (5 pages, 2 figures) merged with the supplementary material (8 pages, 5 figures)

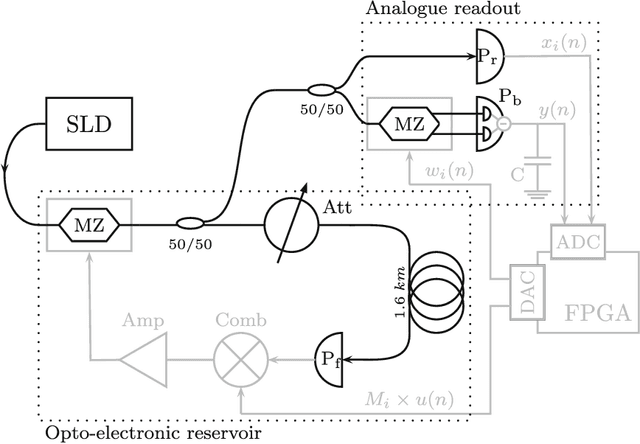

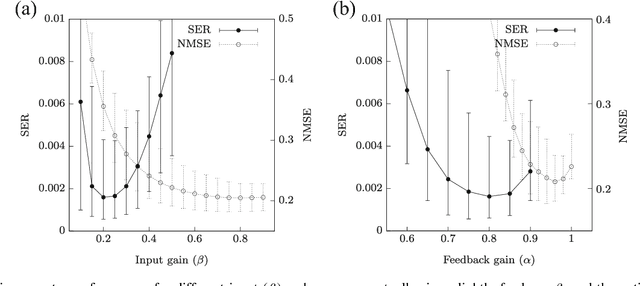

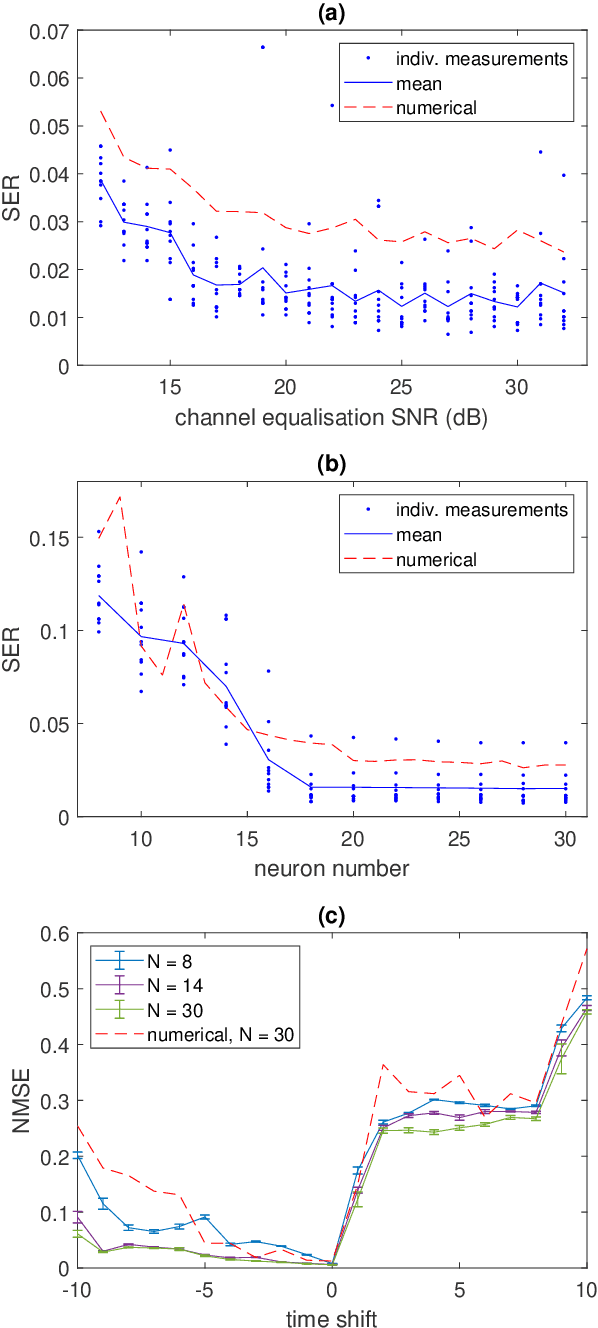

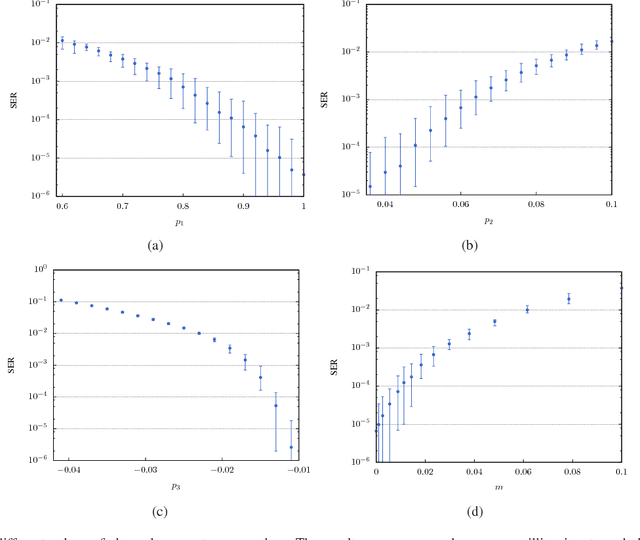

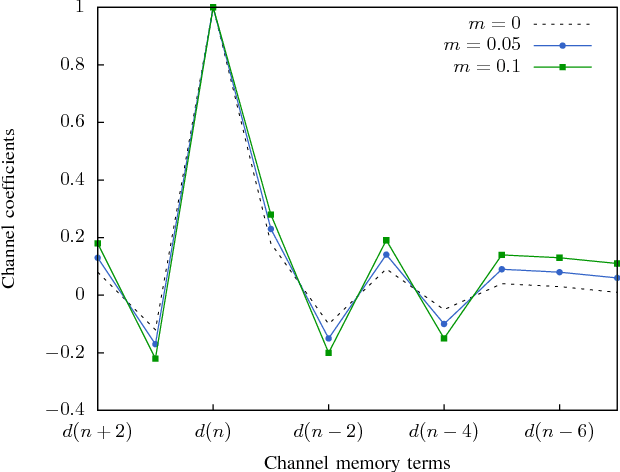

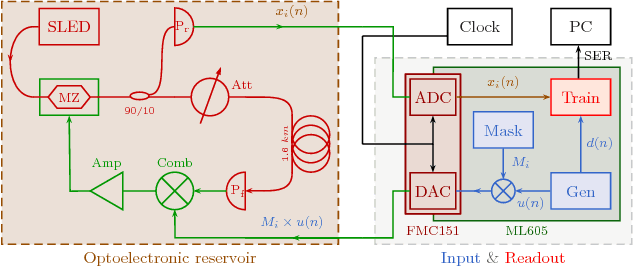

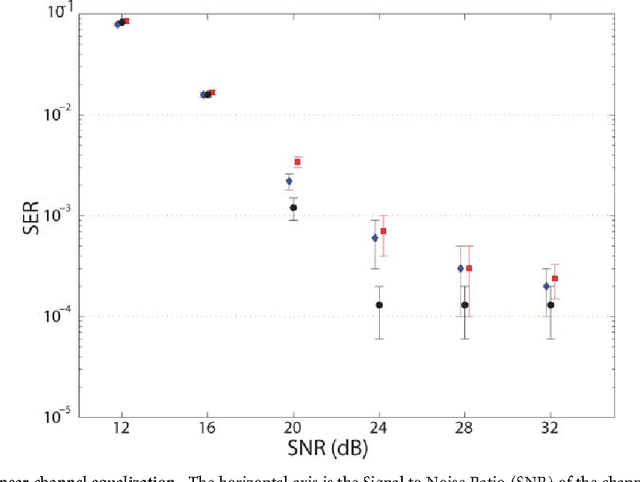

Online Training of an Opto-Electronic Reservoir Computer Applied to Real-Time Channel Equalisation

Oct 20, 2016

Abstract: Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its analogue implementations is comparable to that of other state-of-the-art algorithms for tasks such as speech recognition or chaotic time series prediction, but it is often constrained by the offline training methods commonly employed. Here we investigated the online learning approach by training an opto-electronic reservoir computer using a simple gradient descent algorithm, programmed on an FPGA chip. Our system was applied to wireless communications, a quickly growing domain with an increasing demand for fast analogue devices to equalise nonlinearly distorted channels. We report error rates up to two orders of magnitude lower than those of previous implementations on this task. We show that our system is particularly well suited to realistic channel equalisation by testing it on a drifting and a switching channel, on which it performs well.

* 13 pages, 10 figures
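
Online training with a simple gradient descent rule can be sketched with the least-mean-squares (LMS) update w <- w + η (d(n) - y(n)) z(n), applied one sample at a time. The sketch below assumes a simplified ring reservoir as a software stand-in for the opto-electronic hardware, a constant bias input to the readout, and the nonlinear channel widely used as an equalisation benchmark in the reservoir computing literature (symbols d(n) in {-3, -1, 1, 3}, a 10-tap linear channel followed by a cubic distortion; tap values as commonly quoted, to be checked against the paper before reuse). The noise level, learning rate `eta`, reservoir parameters and function names are hypothetical. The weights are adapted online on a training segment, then frozen to estimate the symbol error rate.

```python
import math
import random

# Nonlinear benchmark channel (values as commonly quoted, treat as an assumption):
# q(n) = sum_k TAPS[k] * d(n - k);  u(n) = q + 0.036 q^2 - 0.011 q^3 + noise.
TAPS = {-2: 0.08, -1: -0.12, 0: 1.0, 1: 0.18, 2: -0.1,
        3: 0.091, 4: -0.05, 5: 0.04, 6: 0.03, 7: 0.01}
SYMBOLS = (-3, -1, 1, 3)


def channel(n_steps, noise_std, rng):
    """Return the transmitted symbols d(n) and the received signal u(n)."""
    sym = [rng.choice(SYMBOLS) for _ in range(n_steps + 10)]
    d = sym[7:7 + n_steps]  # d(n) = sym[n + 7], leaving room for all taps
    u = []
    for n in range(n_steps):
        q = sum(c * sym[n + 7 - k] for k, c in TAPS.items())
        u.append(q + 0.036 * q ** 2 - 0.011 * q ** 3 + rng.gauss(0.0, noise_std))
    return d, u


def equalise(n_train=5000, n_test=1000, n_nodes=30, alpha=0.5, beta=0.2,
             eta=0.01, noise_std=0.05, seed=3):
    """Train a linear readout online (LMS), then return the symbol error rate."""
    rng = random.Random(seed)
    mask = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    d, u = channel(n_train + n_test, noise_std, rng)
    x = [0.0] * n_nodes
    w = [0.0] * (n_nodes + 1)  # last weight multiplies a constant bias input
    errors = 0
    for n in range(n_train + n_test):
        # Input-driven ring reservoir (simplified stand-in for the hardware).
        x = [math.sin(alpha * x[i - 1] + beta * mask[i] * u[n]) for i in range(n_nodes)]
        z = x + [1.0]
        y = sum(wi * zi for wi, zi in zip(w, z))
        if n < n_train:
            # Gradient step on (d - y)^2 / 2 using the current sample only.
            e = d[n] - y
            w = [wi + eta * e * zi for wi, zi in zip(w, z)]
        else:
            # Weights frozen: decide on the nearest symbol and count errors.
            decided = min(SYMBOLS, key=lambda s: abs(s - y))
            if decided != d[n]:
                errors += 1
    return errors / n_test


ser = equalise()
print(f"symbol error rate on the test segment: {ser:.3f}")
```

Because each update uses only the current sample, the same rule keeps adapting if training is never stopped, which is what makes online learning attractive for drifting or switching channels.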

Analog readout for optical reservoir computers

Sep 14, 2012

Abstract: Reservoir computing is a new, powerful and flexible machine learning technique that is easily implemented in hardware. Recently, by using a time-multiplexed architecture, hardware reservoir computers have reached performance comparable to digital implementations. Operating speeds allowing real-time information processing have been reached using optoelectronic systems. At present the main performance bottleneck is the readout layer, which uses slow digital post-processing. We have designed an analog readout suitable for time-multiplexed optoelectronic reservoir computers, capable of working in real time. The readout has been built and tested experimentally on a standard benchmark task. Its performance is better than that of non-reservoir methods, with ample room for further improvement. The present work thereby overcomes one of the major limitations for the future development of hardware reservoir computers.

* to appear in NIPS 2012

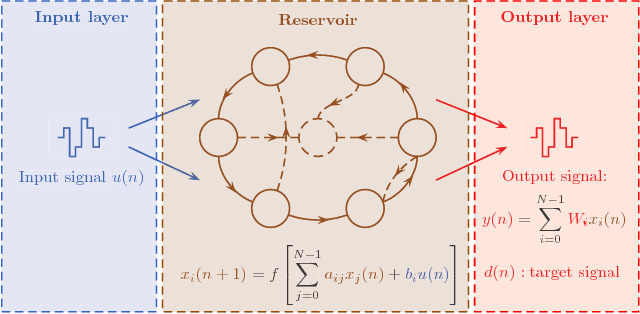

Optoelectronic Reservoir Computing

Nov 30, 2011

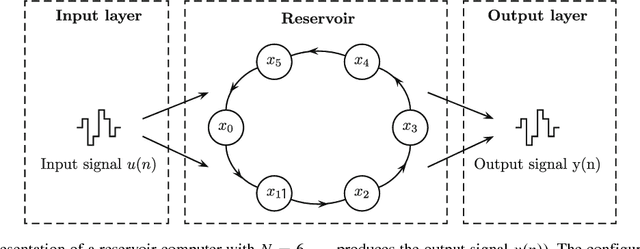

Abstract: Reservoir computing is a recently introduced, highly efficient bio-inspired approach for processing time-dependent data. The basic scheme of reservoir computing consists of a nonlinear recurrent dynamical system coupled to a single input layer and a single output layer. Within these constraints many implementations are possible. Here we report an opto-electronic implementation of reservoir computing based on a recently proposed architecture consisting of a single nonlinear node and a delay line. Our implementation is sufficiently fast for real-time information processing. We illustrate its performance on tasks of practical importance such as nonlinear channel equalization and speech recognition, and obtain results comparable to those of state-of-the-art digital implementations.

* Contains main paper and two Supplementary Materials
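
The single-node-plus-delay-line architecture can be illustrated by time multiplexing: each input sample u(n) is held for N time slots and multiplied by a mask value m_i in slot i, and the signal circulating in the delay line then plays the role of N "virtual" nodes. The sketch assumes a sine nonlinearity and a delay line of N + 1 slots for an input period of N slots (one desynchronisation choice among others), and checks that the slot-by-slot simulation reproduces the equivalent ring-coupled map. The mask values, `alpha`, `beta` and function names are hypothetical.

```python
import math
import random
from collections import deque


def serial_reservoir(inputs, mask, alpha, beta):
    """Single nonlinear node plus delay line, simulated one time slot at a time.

    Each input u(n) is held for N slots and multiplied by the mask value of the
    current slot. The delay line is assumed to hold N + 1 slots, so the node
    receives its own output from N + 1 slots earlier.
    """
    n_nodes = len(mask)
    delay = deque([0.0] * (n_nodes + 1))  # FIFO of N + 1 slots, initially zeros
    states = []
    for u in inputs:
        row = []
        for i in range(n_nodes):
            delayed = delay.popleft()  # output produced N + 1 slots ago
            out = math.sin(alpha * delayed + beta * mask[i] * u)
            delay.append(out)
            row.append(out)  # virtual node i at input step n
        states.append(row)
    return states


def ring_reservoir(inputs, mask, alpha, beta):
    """Equivalent discrete-time map implied by the (N + 1)-slot delay:
    x_i(n) = sin(alpha * x_{i-1}(n-1) + beta * m_i * u(n)) for i >= 1,
    x_0(n) = sin(alpha * x_{N-1}(n-2) + beta * m_0 * u(n)).
    """
    n_nodes = len(mask)
    zeros = [0.0] * n_nodes
    states = []
    for n, u in enumerate(inputs):
        prev = states[n - 1] if n >= 1 else zeros
        prev2 = states[n - 2] if n >= 2 else zeros
        row = [math.sin(alpha * prev2[n_nodes - 1] + beta * mask[0] * u)]
        row += [math.sin(alpha * prev[i - 1] + beta * mask[i] * u)
                for i in range(1, n_nodes)]
        states.append(row)
    return states


rng = random.Random(0)
N = 8
mask = [rng.choice((-0.1, 0.1)) for _ in range(N)]  # hypothetical binary mask
inputs = [rng.uniform(-1.0, 1.0) for _ in range(20)]
serial = serial_reservoir(inputs, mask, alpha=0.8, beta=1.0)
ring = ring_reservoir(inputs, mask, alpha=0.8, beta=1.0)
print(max(abs(a - b) for ra, rb in zip(serial, ring) for a, b in zip(ra, rb)))
```

In this discrete model a delay of exactly N slots would couple each virtual node only to itself; the extra slot is what links node i to node i - 1 and closes the ring through node 0, with a one-step lag.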
