Piotr Antonik

High Speed Human Action Recognition using a Photonic Reservoir Computer

May 24, 2023

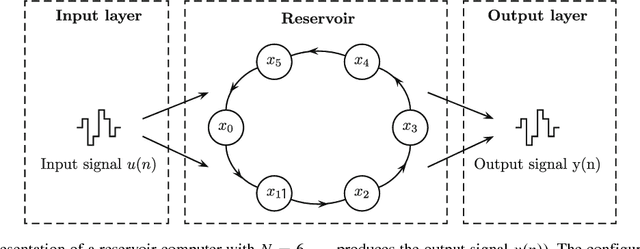

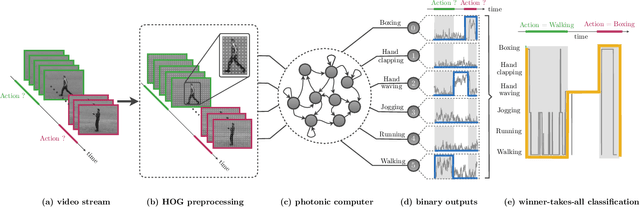

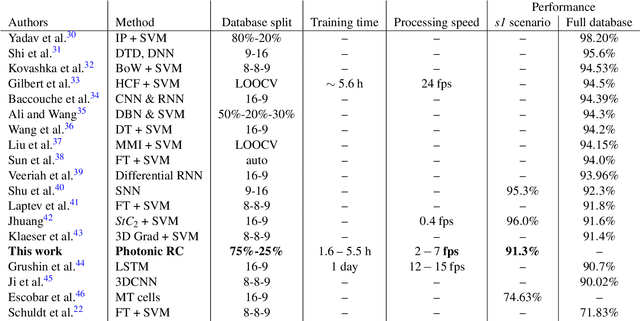

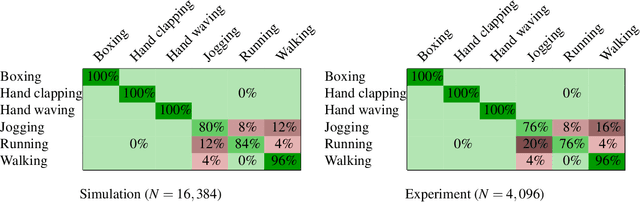

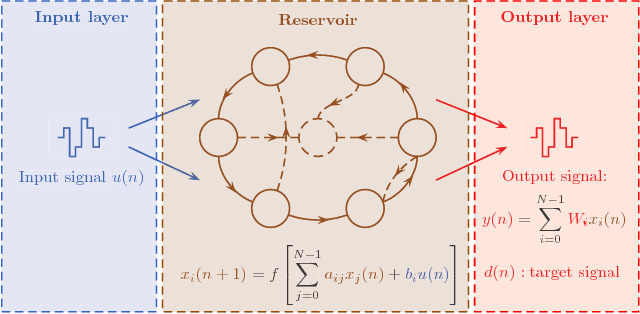

Abstract: The recognition of human actions in videos is one of the most active research fields in computer vision. The canonical approach consists of more or less complex preprocessing stages applied to the raw video data, followed by a relatively simple classification algorithm. Here we address the recognition of human actions using the reservoir computing algorithm, which allows us to focus on the classifier stage. We introduce a new training method for the reservoir computer, based on "Timesteps Of Interest", which combines short and long time scales in a simple way. We study the performance of this algorithm on the well-known KTH dataset, using both numerical simulations and a photonic implementation based on a single non-linear node and a delay line. We solve the task with high accuracy and speed, to the point of allowing multiple video streams to be processed in real time. The present work is thus an important step towards developing efficient dedicated hardware for video processing.

Random pattern and frequency generation using a photonic reservoir computer with output feedback

Dec 19, 2020

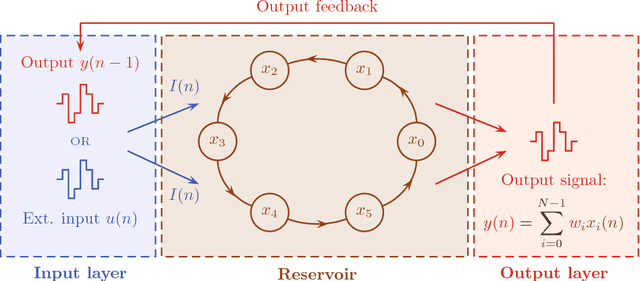

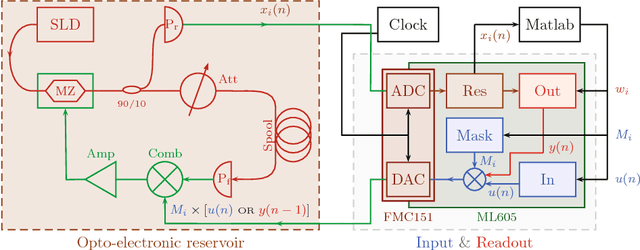

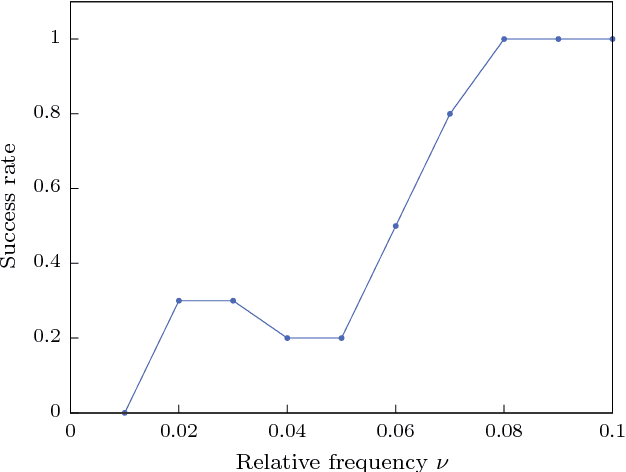

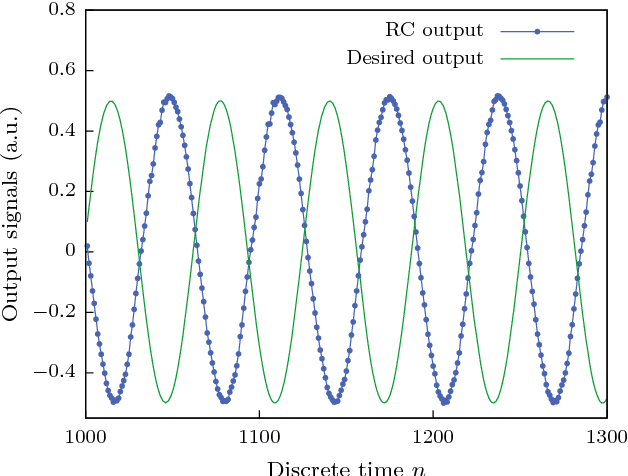

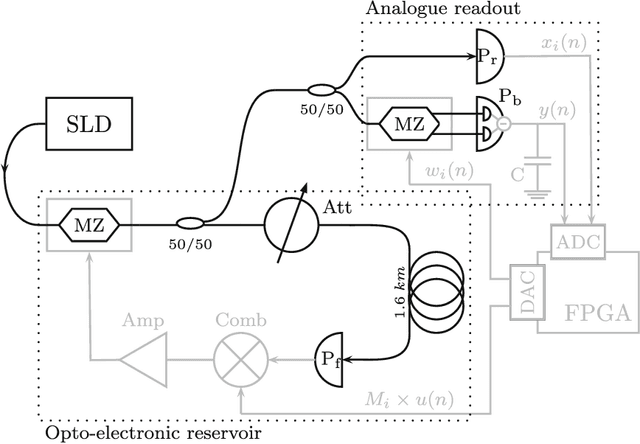

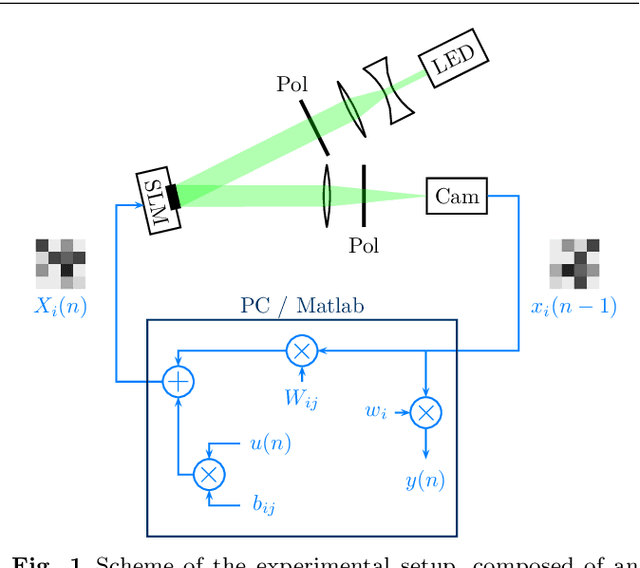

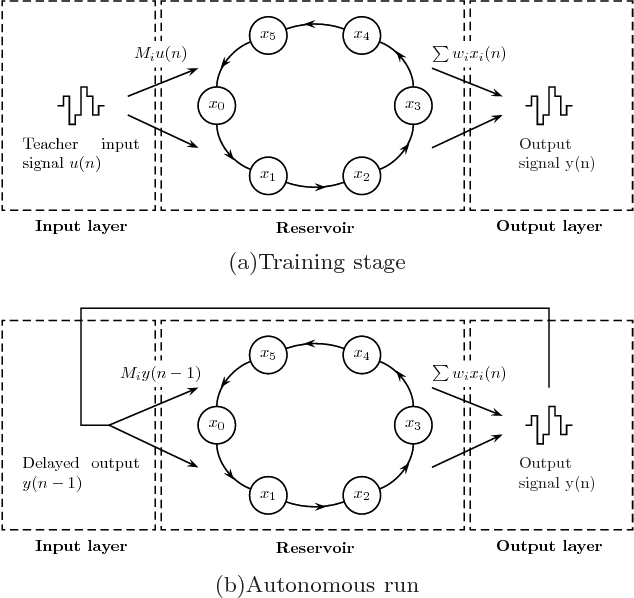

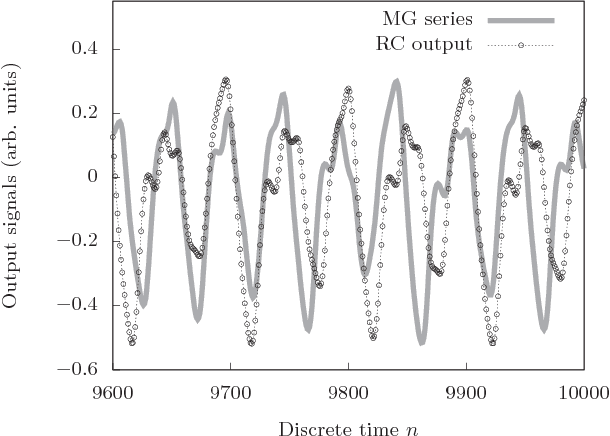

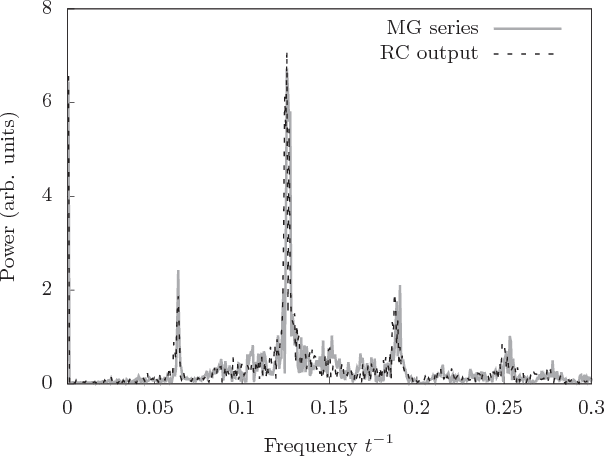

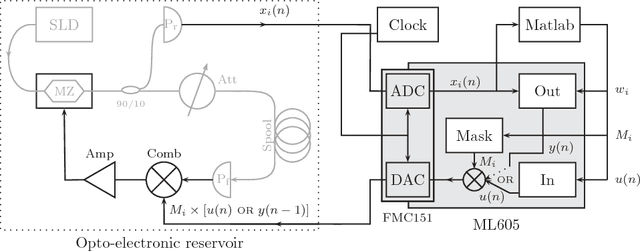

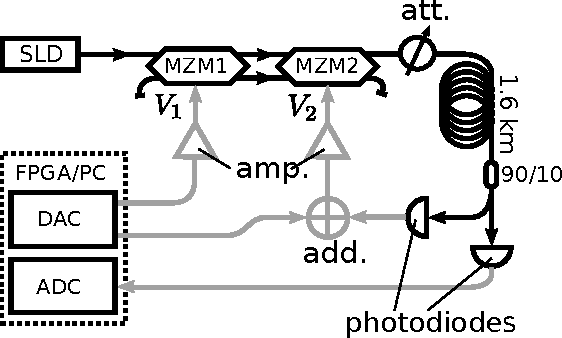

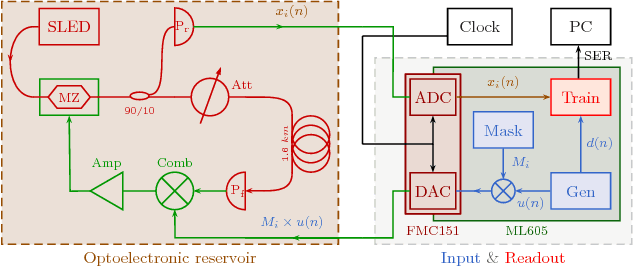

Abstract: Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its analogue implementations matches that of digital algorithms on a series of benchmark tasks. Their potential can be further increased by feeding the output signal back into the reservoir, which would allow the algorithm to be applied to time series generation. This requires, in principle, implementing a sufficiently fast readout layer for real-time output computation. Here we achieve this with a digital output layer driven by an FPGA chip. We demonstrate the first opto-electronic reservoir computer with output feedback and test it on two examples of time series generation tasks: frequency and random pattern generation. We obtain very good results on the first task, similar to idealised numerical simulations. The performance on the second one, however, suffers from experimental noise. We illustrate this point with a detailed investigation of the consequences of noise on the performance of a physical reservoir computer with output feedback. Our work thus opens new possible applications for analogue reservoir computing and brings new insights into the impact of noise on the output feedback.

* 15 pages, 9 figures. arXiv admin note: substantial text overlap with arXiv:1802.02026

Online training for high-performance analogue readout layers in photonic reservoir computers

Dec 19, 2020

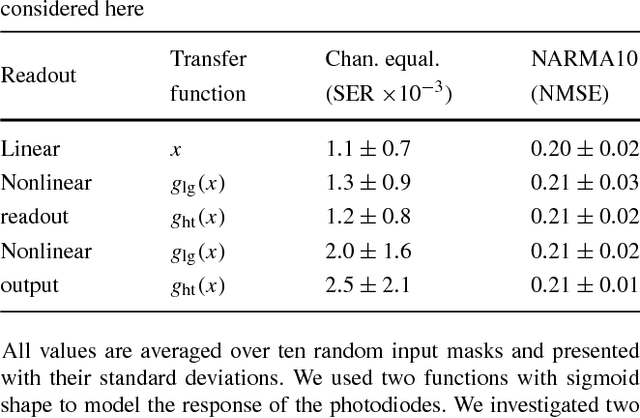

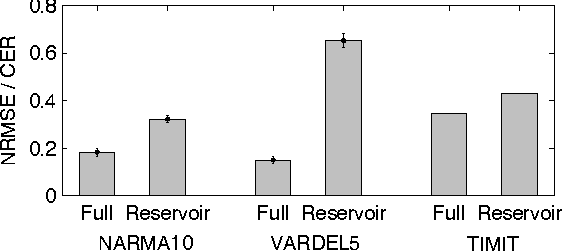

Abstract: Introduction. Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its hardware implementations is comparable to state-of-the-art digital algorithms on a series of benchmark tasks. The major bottleneck of these implementations is the readout layer, based on slow offline post-processing. A few analogue solutions have been proposed, but all suffered from a noticeable decrease in performance due to the added complexity of the setup. Methods. Here we propose the use of online training to solve these issues. We study the applicability of this method using numerical simulations of an experimentally feasible reservoir computer with an analogue readout layer. We also consider a nonlinear output layer, which would be very difficult to train with traditional methods. Results. We show numerically that online learning makes it possible to circumvent the added complexity of the analogue layer and obtain the same level of performance as with a digital layer. Conclusion. This work paves the way to high-performance fully-analogue reservoir computers through the use of online training of the output layers.

* 11 pages, 5 figures

Human action recognition with a large-scale brain-inspired photonic computer

Apr 06, 2020

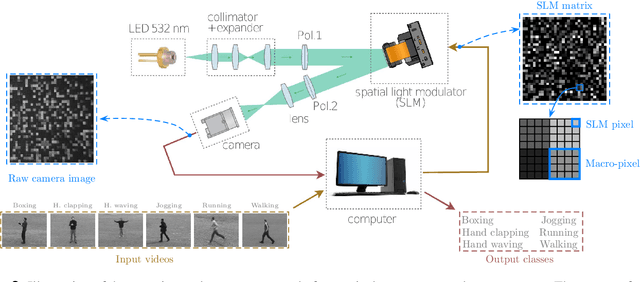

Abstract: The recognition of human actions in video streams is a challenging task in computer vision, with cardinal applications in, e.g., brain-computer interfaces and surveillance. Deep learning has shown remarkable results recently, but can be hard to use in practice, as its training requires large datasets and special-purpose, energy-consuming hardware. In this work, we propose a scalable photonic neuro-inspired architecture based on the reservoir computing paradigm, capable of recognising video-based human actions with state-of-the-art accuracy. Our experimental optical setup comprises off-the-shelf components, and implements a large parallel recurrent neural network that is easy to train and can be scaled up to hundreds of thousands of nodes. This work paves the way towards simply reconfigurable and energy-efficient photonic information processing systems for real-time video processing.

* Authors' version before final reviews (12 pages, 4 figures)

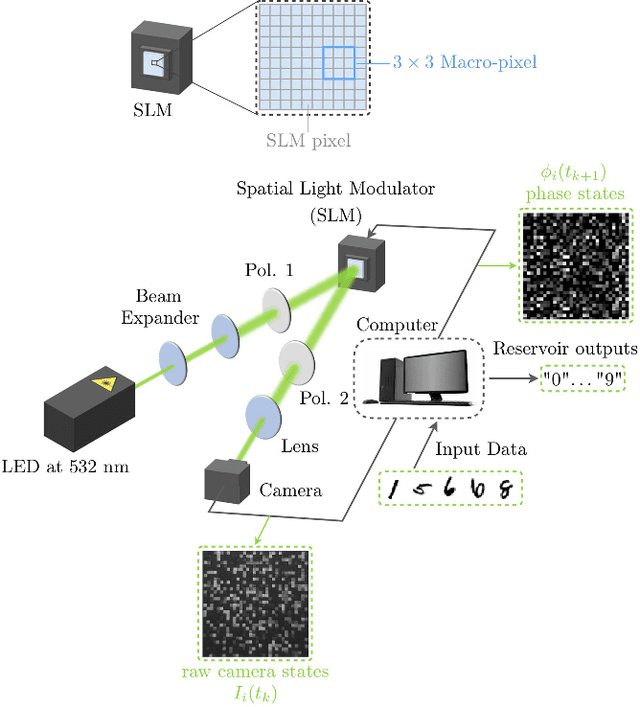

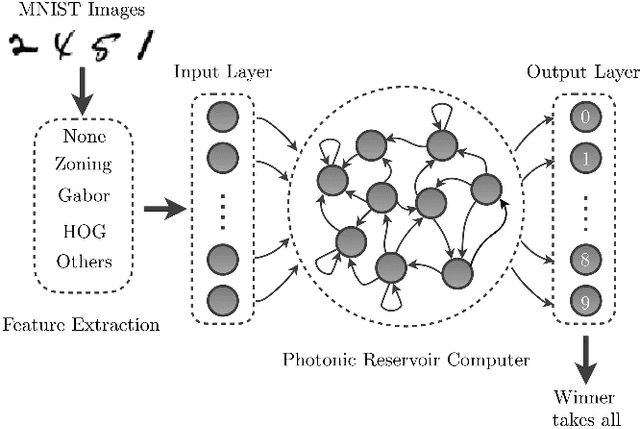

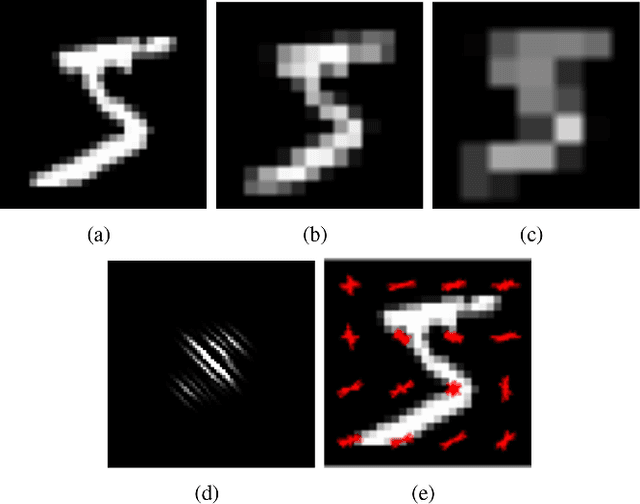

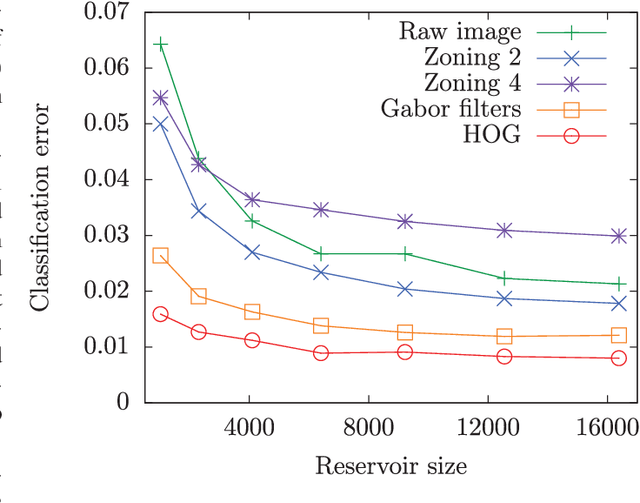

Large-scale spatiotemporal photonic reservoir computer for image classification

Apr 06, 2020

Abstract: We propose a scalable photonic architecture for the implementation of feedforward and recurrent neural networks to perform the classification of handwritten digits from the MNIST database. Our experiment exploits off-the-shelf optical and electronic components to currently achieve a network size of 16,384 nodes. Both network types are designed within the reservoir computing paradigm with randomly weighted input and hidden layers. Using various feature extraction techniques (e.g. histograms of oriented gradients, zoning, Gabor filters) and a simple training procedure consisting of linear regression and a winner-takes-all decision strategy, we demonstrate numerically and experimentally that a feedforward network achieves a classification error rate of 1%, which is at the state of the art for experimental implementations and remains competitive with more advanced algorithmic approaches. We also investigate recurrent networks in numerical simulations by explicitly activating the temporal dynamics, and predict a performance improvement over the feedforward configuration.

* 12 pages, 9 figures

Bayesian optimisation of large-scale photonic reservoir computers

Apr 06, 2020

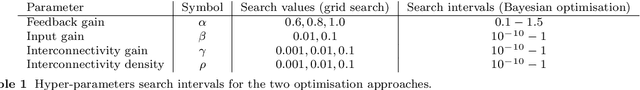

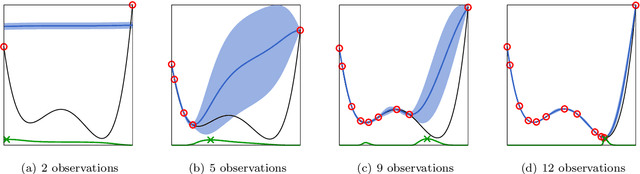

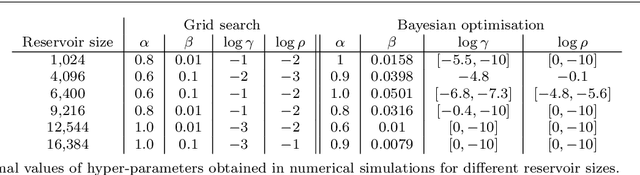

Abstract: Introduction. Reservoir computing is a growing paradigm for simplified training of recurrent neural networks, with a high potential for hardware implementations. Numerous experiments in optics and electronics yield performance comparable to state-of-the-art digital algorithms. Many of the most recent works in the field focus on large-scale photonic systems, with tens of thousands of physical nodes and arbitrary interconnections. While this trend significantly expands the potential applications of photonic reservoir computing, it also complicates the optimisation of the large number of hyper-parameters of the system. Methods. In this work, we propose the use of Bayesian optimisation for efficient exploration of the hyper-parameter space in a minimum number of iterations. Results. We test this approach on a previously reported large-scale experimental system, compare it to the commonly used grid search, and report notable improvements in performance and in the number of experimental iterations required to optimise the hyper-parameters. Conclusion. Bayesian optimisation thus has the potential to become the standard method for tuning the hyper-parameters in photonic reservoir computing.

Using a reservoir computer to learn chaotic attractors, with applications to chaos synchronisation and cryptography

Jun 27, 2018

Abstract: Using the machine learning approach known as reservoir computing, it is possible to train one dynamical system to emulate another. We show that such trained reservoir computers reproduce the properties of the attractor of the chaotic system sufficiently well to exhibit chaos synchronisation. That is, the trained reservoir computer, weakly driven by the chaotic system, will synchronise with the chaotic system. Conversely, the chaotic system, weakly driven by a trained reservoir computer, will synchronise with the reservoir computer. We illustrate this behaviour on the Mackey-Glass and Lorenz systems. We then show that trained reservoir computers can be used to crack chaos-based cryptography and illustrate this on a chaos cryptosystem based on the Mackey-Glass system. We conclude by discussing why reservoir computers are so good at emulating chaotic systems.

* 10 pages, 6 figures

Brain-inspired photonic signal processor for periodic pattern generation and chaotic system emulation

Feb 06, 2018

Abstract: Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. Its hardware implementations have received much attention because of their simplicity and remarkable performance on a series of benchmark tasks. In previous experiments the output was uncoupled from the system and in most cases simply computed offline on a post-processing computer. However, numerical investigations have shown that feeding the output back into the reservoir would open the possibility of long-horizon time series forecasting. Here we present a photonic reservoir computer with output feedback, and demonstrate its capacity to generate periodic time series and to emulate chaotic systems. We study in detail the effect of experimental noise on system performance. In the case of chaotic systems, this leads us to introduce several metrics, based on standard signal processing techniques, to evaluate the quality of the emulation. Our work significantly enlarges the range of tasks that can be solved by hardware reservoir computers, and therefore the range of applications they could potentially tackle. It also raises novel questions in nonlinear dynamics and chaos theory.

* 16 pages, 18 figures

Embodiment of Learning in Electro-Optical Signal Processors

Oct 27, 2016

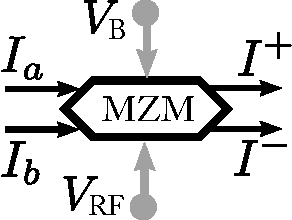

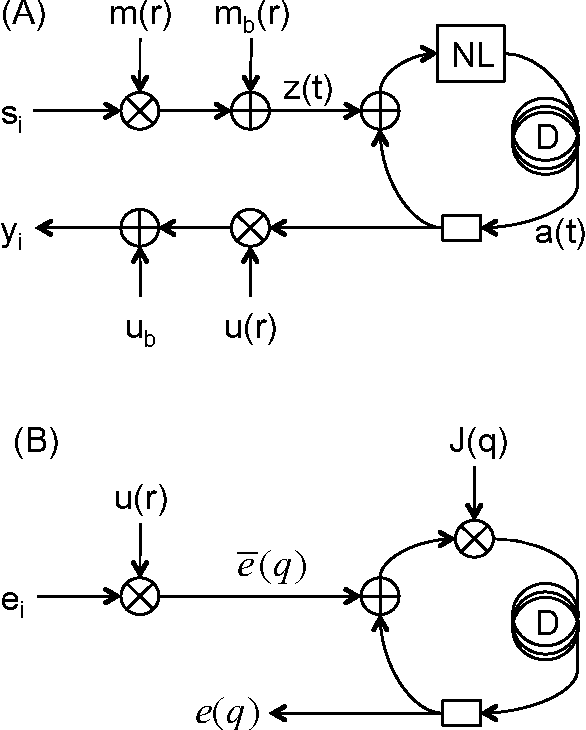

Abstract: Delay-coupled electro-optical systems have received much attention for their dynamical properties and their potential use in signal processing. In particular, it has recently been demonstrated, using the artificial intelligence algorithm known as reservoir computing, that photonic implementations of such systems solve complex tasks such as speech recognition. Here we show how the backpropagation algorithm can be physically implemented on the same electro-optical delay-coupled architecture used for computation with only minor changes to the original design. We find that, compared to when the backpropagation algorithm is not used, the error rate of the resulting computing device, evaluated on three benchmark tasks, decreases considerably. This demonstrates that electro-optical analog computers can embody a large part of their own training process, allowing them to be applied to new, more difficult tasks.

* Main text (5 pages, 2 figures) merged with the supplementary material (8 pages, 5 figures)

Online Training of an Opto-Electronic Reservoir Computer Applied to Real-Time Channel Equalisation

Oct 20, 2016

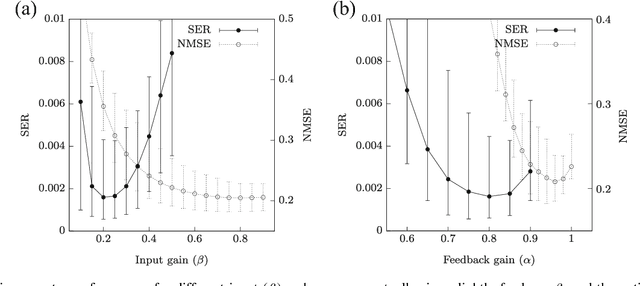

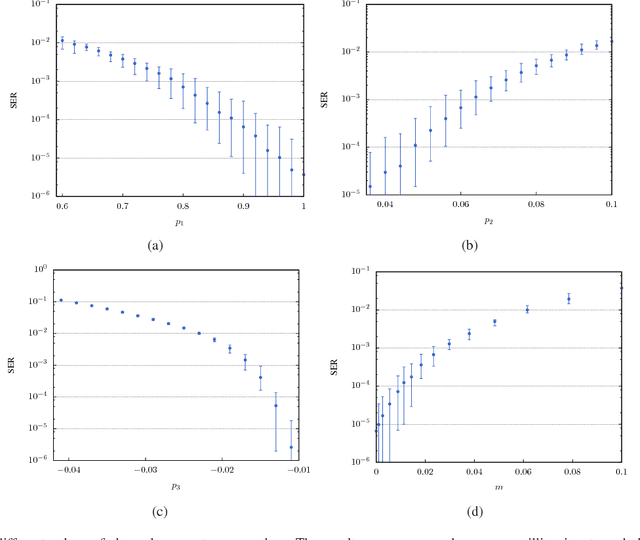

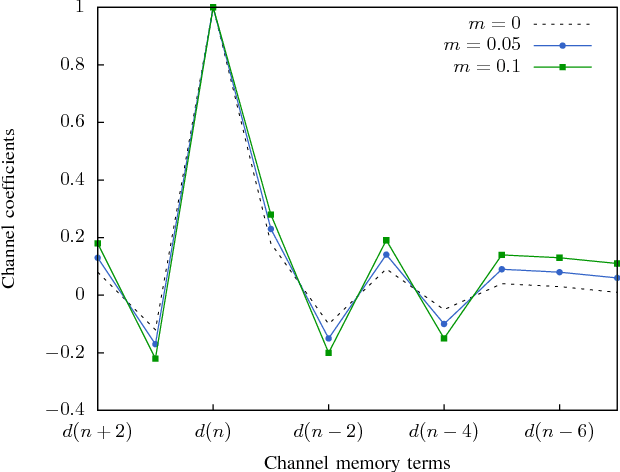

Abstract: Reservoir computing is a bio-inspired computing paradigm for processing time-dependent signals. The performance of its analogue implementations is comparable to other state-of-the-art algorithms for tasks such as speech recognition or chaotic time series prediction, but these implementations are often constrained by the offline training methods commonly employed. Here we investigated the online learning approach by training an opto-electronic reservoir computer using a simple gradient descent algorithm, programmed on an FPGA chip. Our system was applied to wireless communications, a quickly growing domain with an increasing demand for fast analogue devices to equalise nonlinearly distorted channels. We report error rates up to two orders of magnitude lower than previous implementations on this task. We show that our system is particularly well-suited for realistic channel equalisation by testing it on drifting and switching channels and obtaining good performance.

* 13 pages, 10 figures
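
The online training idea in the abstract above can be illustrated with a minimal software sketch. It is not the authors' experimental setup or their FPGA code. It assumes a small ring-shaped reservoir with a tanh nonlinearity, a toy linear channel with inter-symbol interference, and a normalised gradient descent (NLMS) update of the readout weights. The function name `run_online_training` and all parameters (`alpha`, `beta`, `mu`, the 0.4 interference coefficient) are hypothetical choices made for illustration.

```python
import math
import random


def run_online_training(n_nodes=20, n_steps=3000, alpha=0.8, beta=0.3,
                        mu=0.5, seed=0):
    """Train a linear readout online, one sample at a time (illustrative only)."""
    rng = random.Random(seed)
    # Fixed random input mask, one coefficient per reservoir node (assumption).
    mask = [rng.uniform(-1.0, 1.0) for _ in range(n_nodes)]
    x = [0.0] * n_nodes            # reservoir state
    w = [0.0] * (n_nodes + 1)      # readout weights, last entry is the bias
    prev_symbol = 0.0
    sq_errors = []

    for _ in range(n_steps):
        # Toy channel: the received value mixes the current and previous symbol.
        symbol = rng.choice([-1.0, 1.0])
        u = symbol + 0.4 * prev_symbol
        prev_symbol = symbol

        # Ring reservoir: node i is driven by node i-1 from the previous step
        # (x[-1] closes the ring) and by the masked input.
        x = [math.tanh(alpha * x[i - 1] + beta * mask[i] * u)
             for i in range(n_nodes)]

        # Linear readout and instantaneous error against the sent symbol.
        features = x + [1.0]
        y = sum(wi * fi for wi, fi in zip(w, features))
        err = symbol - y

        # Normalised gradient descent step on the squared error.
        norm = 1e-6 + sum(fi * fi for fi in features)
        w = [wi + mu * err * fi / norm for wi, fi in zip(w, features)]
        sq_errors.append(err * err)

    return w, sq_errors


if __name__ == "__main__":
    weights, errors = run_online_training()
    print("mean squared error, first 50 steps:", sum(errors[:50]) / 50)
    print("mean squared error, last 500 steps:", sum(errors[-500:]) / 500)
```

Because the weights are updated after every sample, no stored batch of reservoir states is needed, which is the property that makes this style of training attractive for real-time hardware and for channels that change over time.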
